Fast Batch Geocoding In Any Environment With ArcGIS Data Interoperability

If your system of record is one of the many storage options that are not supported geoprocessing workspaces in ArcGIS Pro, you might be tempted to adopt less-than-optimal workflows, like manually bouncing data through CSV or a file geodatabase, just to get the job done. This blog is about avoiding that: simply read your data from where it lives, geocode it, and write the output where you want it, all from the comfort of your Pro session or even as an Enterprise web tool.

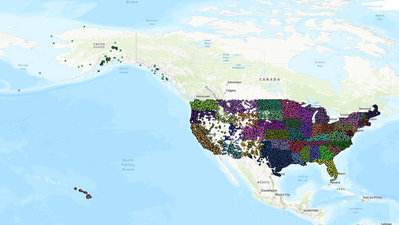

Here are 1 million addresses I geocoded from Snowflake and wrote back to Snowflake without the data touching the ground:

An aside - I got the Snowflake data into a memory feature class using this tool.

We're seeing people who need flexibility in one or both of the storage technologies involved: where their address data is managed and where the geocoded spatial data lives. Data has gravity and there is no need to fight that. Let's see how to achieve this.

Full disclosure: the blog download contains the fmw source for a Data Interoperability ETL tool built for ArcGIS Pro 2.8, which at the time of writing isn't released. I'm using that release because it has the FME 2021 engine, which supports parallelized HTTP requests, and that matters for performance. If you don't have Pro 2.8, ask our good friends at Safe Software for an evaluation of FME 2021 to surf the workspace.

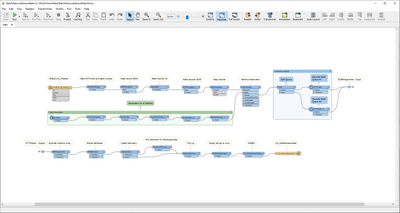

Here is how it looks when edited. I always like this bit: you can't see any code because there isn't any! We're 21% through the 21st century, who writes code any more just to get work done? 😉

Partly I'm talking about moving data around, but you already knew you could do that. It's the batch geocoding that is the value here, so let's dig into that.

When you're batch geocoding data that is in flight between systems of record, the Geocode Addresses geoprocessing tool isn't suitable because it requires table input and writes feature classes. Instead I'm using the geocodeAddresses REST endpoint. To make the endpoint available I published a StreetMap Premium locator to my portal. I could also have used ArcGIS Online's World Geocoding Service, or a service published from a locator I built myself; the API is identical. Which way you go will depend on a few decision points:

- StreetMap locators on-premise don't send your data out to the internet

- StreetMap locators can be scaled how you want on your own hardware

- StreetMap locators have a fixed cost

- Online requires no setup but has a variable cost based on throughput

- Online sends your address data out to the internet (albeit securely)

I want to make the point that you can scale your batch geocoding how you want, so I went with StreetMap.

Now, how to drive it. Under the covers the geocoding engine considers an address in its entirety, scores how well it matches all known addresses, and picks a winner (or fails to). You will notice you can supply address content in parts (base address, zone fields) or as one value, SingleLine. If you supply address parts, they are in fact concatenated in some well-known orders, based on address grammar for the area covered, before being submitted to the engine. Assuming you know the structure of your address data as well as Esri does, you may as well do this yourself and supply the whole address as SingleLine values, which is what I do in my sample. The only other data dependency is an integer key field in your data that you can name ObjectID; it comes out the other end as the ResultID field, which you can use to join back to your source data.
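The ETL tool builds the batch request without any code, but if you want to see the shape of what gets sent, here is a minimal Python sketch. It assumes the documented `addresses` layout of the geocodeAddresses endpoint (a `records` list whose `attributes` carry `OBJECTID` and `SingleLine`). The helper names, the comma-separated US-style part order, and the sample rows are my own assumptions for illustration, not something taken from the workspace.

```python
import json


def to_single_line(parts):
    """Join the non-empty address parts into one SingleLine string.

    Assumption: street, city, region, postcode order separated by commas
    suits your data; adjust for the address grammar of your area.
    """
    return ", ".join(p.strip() for p in parts if p and p.strip())


def build_addresses_payload(rows):
    """Build the JSON text for the `addresses` request parameter.

    rows: iterable of (integer key, list of address parts) tuples.
    """
    records = [
        {"attributes": {"OBJECTID": int(oid), "SingleLine": to_single_line(parts)}}
        for oid, parts in rows
    ]
    return json.dumps({"records": records})


# Hypothetical sample rows; the second has a missing part that is skipped.
rows = [
    (1, ["380 New York St", "Redlands", "CA", "92373"]),
    (2, ["1 World Way", None, "Los Angeles", "CA"]),
]
payload = build_addresses_payload(rows)
print(payload)
```

The integer key you send as `OBJECTID` is what you later look for as `ResultID` in the response.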

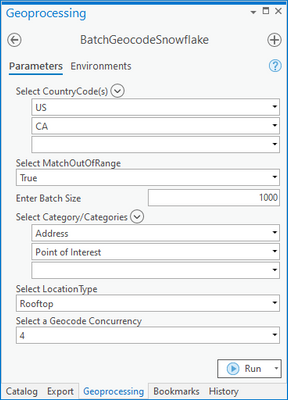

Let's go through the parameters of the ETL tool.

CountryCode is a hard filter for country. If your locator supports multiple countries, like StreetMap North America does, and your data is from known countries, customize and use this parameter. If you only have a single-country locator, don't supply a value.

MatchOutOfRange is a switch for finding addresses a small way beyond known house number ranges for street centerline matches.

The BatchSize should not be larger than the maximum allowed for your locator. You can see this number in the locator service properties. The blog download also has a handy Python script for reporting service properties; edit it for your URL.

Categories lets you filter the acceptable match types. For example, you could customize this parameter to support only certain types of Address matches.
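As a rough illustration of the batch size check (this is not the script from the blog download), the sketch below reads the limits from a geocode service description and splits rows into batches that respect them. It assumes the service JSON exposes `MaxBatchSize` and `SuggestedBatchSize` under `locatorProperties`, which is where I would expect to find them. The sample dictionary is made up; in practice you would fetch the description from your own service URL with `f=json`.

```python
def batch_limits(service_info):
    """Return (max, suggested) batch sizes from a service description dict."""
    props = service_info.get("locatorProperties", {})

    def as_int(key):
        value = props.get(key)
        return int(value) if value is not None else None

    return as_int("MaxBatchSize"), as_int("SuggestedBatchSize")


def safe_batch_size(requested, service_info):
    """Clamp the requested batch size to the service maximum, if one is known."""
    max_size, _ = batch_limits(service_info)
    return requested if max_size is None else min(requested, max_size)


def chunked(rows, size):
    """Yield consecutive slices of rows, each with at most `size` items."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


# Hypothetical service description; real values come from your locator.
sample_info = {"locatorProperties": {"MaxBatchSize": 1000, "SuggestedBatchSize": 150}}

size = safe_batch_size(2500, sample_info)
batches = list(chunked(list(range(2300)), size))
print(size, [len(b) for b in batches])
```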

LocationType lets you select rooftop or roadside coordinates for exact house point matches.
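For readers curious how these tool parameters could map onto a raw request, here is a hedged sketch. It assumes the tool parameters correspond to the geocodeAddresses REST parameters `sourceCountry`, `matchOutOfRange`, `category` and `locationType`. That mapping is my reading of the REST API, not something confirmed by the workspace, and the function name and sample values are hypothetical.

```python
def geocode_params(addresses_json, country_code=None, match_out_of_range=True,
                   categories=None, location_type=None):
    """Assemble form parameters for a geocodeAddresses request.

    Optional filters are left out entirely when not supplied, so a
    single-country locator receives no sourceCountry value.
    """
    params = {
        "f": "json",
        "addresses": addresses_json,
        "matchOutOfRange": "true" if match_out_of_range else "false",
    }
    if country_code:
        params["sourceCountry"] = country_code
    if categories:
        # Multiple categories are sent as one comma-separated string.
        params["category"] = ",".join(categories)
    if location_type:
        params["locationType"] = location_type  # "rooftop" or "street"
    return params


params = geocode_params(
    '{"records": []}',
    country_code="USA",
    match_out_of_range=False,
    categories=["Point Address", "Street Address"],
    location_type="rooftop",
)
print(params)
```

You would send this dictionary as form data in a POST to your locator's geocodeAddresses URL, adding a token if the service is secured.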

GeocodeConcurrency isn't a locator property, it's a property of the batch handling of the ETL tool. My sample uses 4, meaning that at any time the service is handling 4 batch requests. Make sure to configure as many geocode service instances as you need and make this property agree. In my case I don't have serious metal to run on, just a small virtual machine somewhere in the sky, but if you have a need for a 64-instance setup then go for it. At some point though you'll be limited by how fast you can read data, send it out and catch the results. My guess is few people will need more than 8 instances in a large geography.

If you're a Data Interoperability or FME user you'll know there is already a Geocoder transformer in the product, and it can use Enterprise or Online locators. However, it works on one feature at a time and I want multiple concurrent batch processing for performance. In my particular case I'm using Snowflake, where it's possible to configure an external function to geocode against an Esri geocode service, but this is also one feature at a time (stay tuned for news from Esri on this function in Snowflake).

That's pretty much it. Surf the ETL tool to see how I made the batch JSON and how to interpret the result JSON, then implement your own workflows. Enjoy!
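The ETL tool handles concurrency through the FME engine's parallelized HTTP requests. As a rough analogy only, and not how the tool is implemented, the same idea can be sketched in Python with a thread pool whose worker count plays the role of GeocodeConcurrency. The `send_batch` argument is a hypothetical stand-in for whatever function posts one batch to your service and returns its list of locations; here a fake version is used so the sketch runs without a server.

```python
from concurrent.futures import ThreadPoolExecutor


def geocode_concurrently(batches, send_batch, concurrency=4):
    """Send batches with at most `concurrency` requests in flight at once.

    Results are returned in the same order as the input batches.
    """
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        per_batch = pool.map(send_batch, batches)
        return [location for result in per_batch for location in result]


def fake_send_batch(batch):
    """Stand-in for a real HTTP call: echoes each key as a ResultID."""
    return [{"attributes": {"ResultID": oid}} for oid in batch]


batches = [[1, 2, 3], [4, 5, 6], [7, 8], [9]]
locations = geocode_concurrently(batches, fake_send_batch, concurrency=4)
print(len(locations))
```

Keeping the worker count equal to the number of geocode service instances means no request waits for a free instance.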
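If you would rather read the result JSON in code than in the workspace, here is a small sketch of the join back to source data. It assumes the response follows the documented geocodeAddresses shape: a `locations` list in which each item has `location`, `score` and `attributes`, with `ResultID` and `Status` among the attributes. The sample response and source rows are invented for illustration.

```python
def index_results(response):
    """Map ResultID to coordinates, score and match status."""
    indexed = {}
    for item in response.get("locations", []):
        attrs = item.get("attributes", {})
        point = item.get("location") or {}  # may be absent for unmatched rows
        indexed[attrs["ResultID"]] = {
            "x": point.get("x"),
            "y": point.get("y"),
            "score": item.get("score"),
            "status": attrs.get("Status"),
        }
    return indexed


# Hypothetical response: one matched address and one unmatched address.
sample_response = {
    "locations": [
        {"address": "380 New York St, Redlands, CA, 92373",
         "location": {"x": -117.195, "y": 34.057},
         "score": 100,
         "attributes": {"ResultID": 1, "Status": "M"}},
        {"address": "", "score": 0,
         "attributes": {"ResultID": 2, "Status": "U"}},
    ]
}

# Source rows keyed by the integer key that was sent as OBJECTID.
source = {1: "380 New York St, Redlands, CA, 92373", 2: "nowhere at all"}
results = index_results(sample_response)
joined = {oid: (text, results.get(oid)) for oid, text in source.items()}
print(joined[1][1]["status"], joined[2][1]["status"])
```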

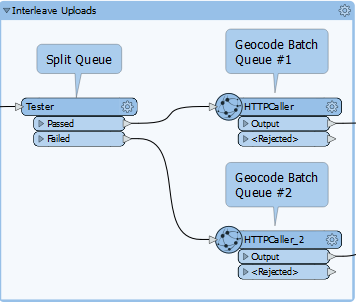

But wait, there's more! I edited the tool on 27th April 2021 to squeeze more performance (30%!) out of the already parallelized geocode step by managing the HTTP calls in two queues. While one set of 4 batches is processing, another set is uploading. This is the bookmark to look for.
