
Building a Data Driven Organization, Part #1: Hosted Feature Service Webhooks


ArcGIS Online hosted feature services are foundational. If changes in feature service data require a response, you don't have to step outside the ArcGIS system to automate it. This blog is about using feature service webhooks to trigger geoprocessing service jobs that operate on the change set to do anything you want.

It is conceptually simple. Here is the Model in the blog download that defines my geoprocessing service:

The geoprocessing service (also known as a 'web tool') receives the webhook JSON payload, extracts the changesUrl object, then gets and operates on the change data using a Spatial ETL tool built with ArcGIS Data Interoperability. You could use a Python script tool too, but then you'll have to write code, and Data Interoperability is no-code technology, so start from here if you want to get going quickly 😉.

I used an 'old friend' data source for my feature service: public transport vehicle positions in Auckland, New Zealand, updated at 30-second intervals:

The data is quite 'hot' - no shortage of trigger events! - which was handy for writing this blog. Your data might not be so busy.

Anyway, I initially defined a webhook on the feature service that sent the payload to webhook.site so I could see what a payload looks like. Here is one:

```json
[
  {
    "name": "WebhookSite",
    "layerId": 0,
    "orgId": "FQD0rKU8X5sAQfh8",
    "serviceName": "VehiclePosition",
    "lastUpdatedTime": 1625684942414,
    "changesUrl": "https%3a%2f%2fservices.arcgis.com%2fFQD0rKU8X5sAQfh8%2fArcGIS%2frest%2fservices%2fVehiclePosition%2fFeatureServer%2fextractChanges%3fserverGens%3d%5b2322232%2c2322500%5d%26async%3dtrue%26returnDeletes%3dfalse%26returnAttachments%3dfalse",
    "events": [
      "FeaturesCreated",
      "FeaturesUpdated"
    ]
  }
]
```

You'll see in my Model that I used a Calculate Value model tool to extract and decode the percent-encoded changesUrl object. In theory this could easily be done in the Spatial ETL tool, in which case I wouldn't need the Model at all and the Spatial ETL tool could be published standalone, but I ran into issues, so I fell back to a Python snippet (i.e. Calculate Value). I'm breaking my no-code story, aren't I? But it's the only code in this show, and you don't have to write it for yourselves now!
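For anyone curious what that decoding step involves, here is a minimal Python sketch. It is an illustration, not the Calculate Value expression in the blog download. It assumes the payload reaches the tool as a JSON string shaped like the WebhookSite example and uses only the first event in the array; `extract_changes_url` and `changes_query_params` are hypothetical helper names.

```python
import json
from urllib.parse import parse_qs, unquote, urlsplit


def extract_changes_url(payload_text):
    """Return the decoded changesUrl from a feature service webhook payload.

    Assumes the payload is a JSON array of event objects and uses the first one.
    """
    events = json.loads(payload_text)
    encoded = events[0]["changesUrl"]
    # The URL arrives percent-encoded (%3a, %2f, %3f, ...); unquote restores it.
    return unquote(encoded)


def changes_query_params(changes_url):
    """Split the decoded changesUrl query string into a {name: value} dict."""
    query = urlsplit(changes_url).query
    return {name: values[0] for name, values in parse_qs(query).items()}
```

With the example payload, the decoded URL ends in `extractChanges?serverGens=[2322232,2322500]&async=true&returnDeletes=false&returnAttachments=false`, and `changes_query_params` exposes `serverGens` and `async` if you want to log or check them.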

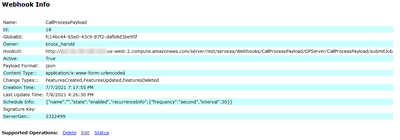

I'll get to the saga of payload processing, but first a word on the form of webhook you'll need for your feature service. Here is mine:

Firstly, notice the HookURL uses HTTP. ArcGIS Online feature services are hosted on arcgis.com, while my geoprocessing service is on amazonaws.com. I'm not an IT administrator and didn't know how to set up a certificate that would support HTTPS trust between the domains, so I enabled HTTP on my server. Payloads contain no credentials, and all downstream processing uses HTTPS to access the change data. If you have questions (or advice!), please comment on the blog.

Secondly, notice the Content Type is application/x-www-form-urlencoded. If you use application/json, your geoprocessing service will not receive the payload.
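A likely explanation, offered as my understanding rather than documented webhook behaviour: geoprocessing service endpoints read their inputs as name/value parameters from a query string or a form-encoded POST body, so a raw JSON body gives the web tool nothing to bind to. The sketch below shows what form encoding does to a payload. The field name `payload` and both helper functions are hypothetical, not the names the webhook or web tool actually use.

```python
import json
from urllib.parse import parse_qs, urlencode

# Hypothetical field name, for illustration only.
PAYLOAD_FIELD = "payload"


def to_form_body(payload, field=PAYLOAD_FIELD):
    """Encode a payload as one field of an application/x-www-form-urlencoded body."""
    return urlencode({field: json.dumps(payload)})


def from_form_body(body, field=PAYLOAD_FIELD):
    """Recover the JSON payload from a form-encoded request body."""
    values = parse_qs(body)
    return json.loads(values[field][0])
```

The JSON survives intact, just percent-encoded inside a named field, which is the shape a parameter-driven endpoint expects.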

Now for the processing. Here is the ProcessPayload tool:

By default, webhook change data is accessed securely and asynchronously. Requesting the changesUrl starts an extraction job and returns a statusUrl. The statusUrl is then used to request a resultsUrl, which (once it has a value) returns a response body containing the change data. This requires looping, which is done in the ArcGISOnlineWebhookDataGetter custom transformer (available on FME Hub). The transformer downloads as a linked transformer by default, so make sure you embed it into your workspace (a right-click option). I edited my copy to check for job completion at 10-second intervals and give up after 10 retries; my data usually arrived on the second retry, so that worked for me.
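For those taking the Python route instead, here is a minimal sketch of the same start-then-poll loop, defaulting to a 10-second interval and 10 checks. It assumes the changesUrl response carries a `statusUrl` key and that the status response reports a `status` and, once complete, a `resultUrl` (the assumed key name for what I call the resultsUrl); check those names against a real response. `fetch_json` is injected so the loop can be tested without a network, and a real fetcher would likely also need `f=json` and a token for secured services. This is not the logic inside ArcGISOnlineWebhookDataGetter.

```python
import json
import time
import urllib.request
from typing import Any, Callable, Dict

FetchJson = Callable[[str], Dict[str, Any]]


def http_fetch_json(url: str) -> Dict[str, Any]:
    """Plain GET returning parsed JSON (no token or f=json handling here)."""
    with urllib.request.urlopen(url) as response:
        return json.load(response)


def start_extraction(changes_url: str, fetch_json: FetchJson) -> str:
    """Request the (async) changesUrl and return the extraction job's statusUrl."""
    return fetch_json(changes_url)["statusUrl"]


def wait_for_result_url(
    status_url: str,
    fetch_json: FetchJson,
    interval_s: float = 10.0,
    max_checks: int = 10,
    result_key: str = "resultUrl",  # assumed key name, verify against your service
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll statusUrl until it reports a result URL, or give up."""
    for attempt in range(max_checks):
        if attempt:
            sleep(interval_s)  # wait between checks, not before the first one
        status = fetch_json(status_url)
        if str(status.get("status", "")).lower() == "failed":
            raise RuntimeError(f"extraction job failed: {status}")
        result_url = status.get(result_key)
        if result_url:
            return result_url
    raise TimeoutError(f"no {result_key} after {max_checks} checks")
```

Usage would be `wait_for_result_url(start_extraction(changes_url, http_fetch_json), http_fetch_json)`, followed by one more fetch of the returned URL to get the change data itself.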

The change data comes out of the ArcGISOnlineWebhookDataGetter as a big, ugly JSON object that is hard to unpack, so I avoid the whole issue by reading the changed features directly from the source feature service, using queries on the ObjectIDs I get from the change data response. Sneaky, lazy, but effective, and best of all you can recycle this approach for any feature service!
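A minimal Python sketch of that trick: collect the ObjectIDs of added and updated features from the change data, then build a standard feature layer query using its `objectIds` parameter. The response shape (`edits` containing per-layer `features` with `adds` and `updates`, each feature holding `attributes`) and the `OBJECTID` field name are assumptions to check against your own service, and both helper names are hypothetical.

```python
from urllib.parse import urlencode


def object_ids_from_changes(changes, layer_id=0, oid_field="OBJECTID"):
    """Collect ObjectIDs of added and updated features for one layer.

    Assumes a shape like
    {"edits": [{"id": layer_id, "features": {"adds": [...], "updates": [...]}}]}.
    """
    ids = {"adds": [], "updates": []}
    for layer in changes.get("edits", []):
        if layer.get("id") != layer_id:
            continue  # only the layer we care about
        features = layer.get("features", {})
        for kind in ids:
            for feature in features.get(kind, []):
                ids[kind].append(feature["attributes"][oid_field])
    return ids


def query_url(layer_url, object_ids):
    """Build a feature layer query URL that returns all fields for the given ObjectIDs."""
    params = {
        "objectIds": ",".join(str(i) for i in object_ids),
        "outFields": "*",
        "f": "json",
    }
    return f"{layer_url}/query?{urlencode(params)}"
```

Because only ObjectIDs are taken from the change response, the same two steps work for any layer; the full features come back from the ordinary query endpoint.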

Once the change data is read, you can perform whatever integration you want with it. In my case I just write OGC GeoPackages of the Adds and Updates features and email them to myself, so it's not a real integration, but you get the idea.

For the record, the 'rules' for publishing Spatial ETL tools as geoprocessing services are:

- The FME workspace must be entirely embedded.
- Any web connection or database connection credentials used by the tools must be exported to the arcgis service owner's account on each processing server. Get an XML export file by right-clicking the connection in the FME Options control; then, as the arcgis user on the server, start Workbench from fmeworkbench.exe (the AO11 version for portal machines) and import the XML file.
- Most importantly, before you start, make sure Data Interoperability is installed and licensed!

For my purposes I used a server that was not federated to a portal, but you can go either way. All this was created in Pro 2.8.1 and Enterprise 10.9.

The blog download has my web tool and Model. Let me know how you get on out there. Have fun!
