
Error Adding Field to HFS in HA Cluster (Portal)


I was able to add fields to my hosted feature service (HFS) in my dev and stg environments using this tutorial...

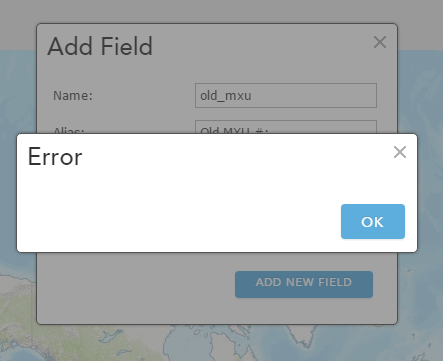

When I attempt to follow the same workflow in my clustered HA environments, I get this error...

It appears that adding a field in an HA environment behaves differently. I *have* had success before, so I am not sure why I am getting this unhelpful error.

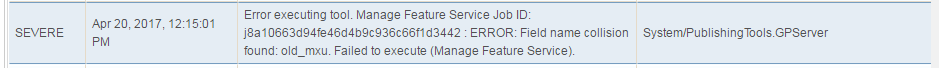

When I check the logs, I see this error...


I have restarted the PublishingTools GP service, the HFS, and the Data Store.

Is there another way to add fields to an HFS?


I'm surprised that this would be a Portal HA problem, since the failure is being returned from Server and the Publishing Tools GP service. Can you check the browser developer tools or Fiddler and see what the JSON response to the request is? I assume you don't already have a field called "old_mxu" in the hosted feature service?
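One way to act on that suggestion is to copy the JSON body of the failing request out of the dev tools or Fiddler and check it programmatically. The Python sketch below assumes the usual ArcGIS REST error envelope (a top-level `"error"` object with `code`, `message`, and `details`) and a layer definition (the layer's `?f=json` output) with a `fields` array. The helper names `find_rest_error` and `has_field` are hypothetical, and the case-insensitive name comparison is a conservative assumption rather than documented behavior.

```python
import json


def find_rest_error(response_text):
    """Return (code, message, details) if the body is an ArcGIS REST error, else None."""
    body = json.loads(response_text)
    # Error responses are assumed to wrap everything in a top-level "error" object.
    err = body.get("error") if isinstance(body, dict) else None
    if not err:
        return None
    return err.get("code"), err.get("message", ""), err.get("details", [])


def has_field(layer_json, field_name):
    """True if the layer definition's "fields" list already contains field_name."""
    wanted = field_name.lower()
    # Compare case-insensitively (assumption) so "OLD_MXU" also counts as a clash.
    return any(f.get("name", "").lower() == wanted
               for f in layer_json.get("fields", []))
```

For example, `has_field(layer_json, "old_mxu")` on the parsed layer definition answers the duplicate-field question directly, and `find_rest_error(...)` surfaces the `details` list, which is often more informative than the message shown in the UI.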


I was eventually able to add the fields I needed; however, now I am running into this issue...

I am having trouble adding a field to multiple hosted feature services in our HA environment using this method: http://support.esri.com/technical-article/000012167.

The method works in our non-HA environments but not in our HA environment.
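I can't confirm exactly what the linked article describes, but if the workflow goes through the ArcGIS REST API `addToDefinition` operation on the layer's admin endpoint, the request boils down to a form-encoded POST. This minimal sketch only builds that request, so the exact body can be compared between the HA and non-HA environments. The admin URL layout, the helper names `string_field` and `build_add_fields_request`, and the field properties (a nullable, editable string of length 256) are illustrative assumptions.

```python
import json
from urllib.parse import urlencode


def string_field(name, alias=None, length=256):
    """Hypothetical helper: a nullable, editable text field definition."""
    return {
        "name": name,
        "type": "esriFieldTypeString",
        "alias": alias or name,
        "nullable": True,
        "editable": True,
        "length": length,
    }


def build_add_fields_request(admin_layer_url, fields, token):
    """Return (url, form_body) for an addToDefinition POST.

    admin_layer_url is assumed to look like
    https://<server>/arcgis/rest/admin/services/Hosted/<service>/FeatureServer/<layerId>
    """
    url = admin_layer_url.rstrip("/") + "/addToDefinition"
    body = urlencode({
        # The new fields travel as a JSON string in the addToDefinition parameter.
        "addToDefinition": json.dumps({"fields": fields}),
        "f": "json",
        "token": token,
    })
    return url, body
```

Building the body separately from sending it makes it easy to diff what each environment receives; the POST itself can then be sent with any HTTP client.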

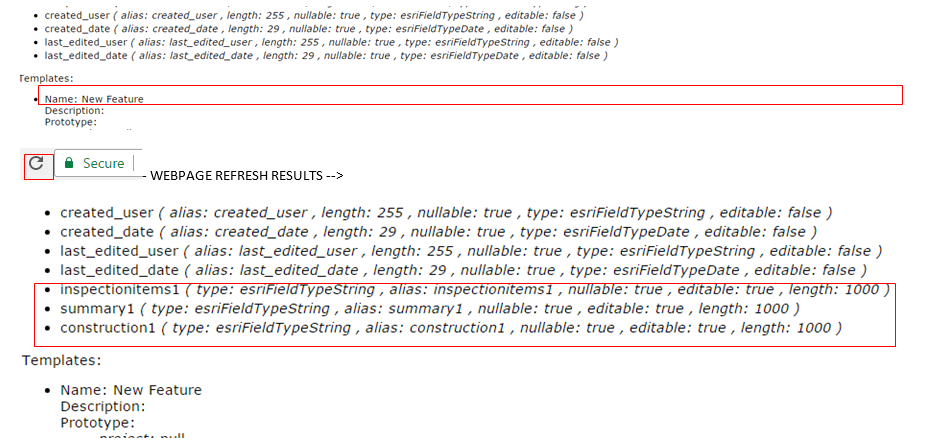

The HA server machines do not seem to sync properly: when I refresh the REST endpoint, the added fields toggle on and off.

Webpage refresh results:

Environment: ArcGIS Server 10.4.1 federated with Portal in an HA cluster.

Thanks.


So if you go to each machine's REST endpoint directly through port 6443 or 6080, one machine shows one thing and the other shows something different? If restarting the Server that isn't showing the right information fixes it, then it's likely a cache-related issue that will be fixed at 10.5.1.
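The per-machine check can be scripted. This sketch assumes each machine serves the layer at `/arcgis/rest/services/Hosted/<service>/FeatureServer/<layerId>` on port 6443 (HTTPS) or 6080 (HTTP) and that the service is reachable without a token; the `fetch` helper does not handle tokens or self-signed certificates, and all helper names are hypothetical. `compare_machines` reports, for each host, which fields it is missing relative to the union across all hosts, so an empty result means the machines agree.

```python
import json
from urllib.request import urlopen


def layer_url(host, service, layer_id=0, port=6443):
    """Per-machine layer URL that bypasses the web adaptor (assumed path layout)."""
    scheme = "https" if port == 6443 else "http"
    return (f"{scheme}://{host}:{port}/arcgis/rest/services/Hosted/"
            f"{service}/FeatureServer/{layer_id}?f=json")


def fetch(url, timeout=30):
    """GET the layer JSON; no token or self-signed certificate handling."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def compare_machines(definitions):
    """definitions maps host -> layer JSON.

    Returns host -> sorted list of field names that host is missing relative to
    the union across all hosts. An empty dict means every machine agrees.
    """
    names = {host: {f["name"] for f in d.get("fields", [])}
             for host, d in definitions.items()}
    union = set().union(*names.values())
    return {host: sorted(union - have)
            for host, have in names.items() if union - have}
```

For example, with two hypothetical hosts: `compare_machines({h: fetch(layer_url(h, "MyService")) for h in ["server1", "server2"]})`. A non-empty result that clears after restarting the lagging machine would be consistent with the cache explanation.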