
See individual commands from script in history


I am running a Python script as a tool in ArcGIS Pro.

The tool runs many gp tools (along with some other arcpy functions) and takes a while to finish.

To understand where the time is spent, it would be nice to see the individual gp tools in the geoprocessing history, each with the time it took to run. That way I wouldn't have to scatter timing messages all over my code.

I could not find a way to do it.

Is there a way, or should I post it on the Ideas site?

Thanks


See the GetMessages page in the ArcGIS Pro documentation:

```python
import arcpy

fc = arcpy.GetParameterAsText(0)
arcpy.GetCount_management(fc)

# Print all of the geoprocessing messages returned by the
# last tool (GetCount)
print(arcpy.GetMessages())

# In a script tool, add them to the tool's own messages instead
arcpy.AddMessage(arcpy.GetMessages())
```

Have a great day!

Johannes
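To relay the messages after every tool rather than only the last one, the same idea can be wrapped in a tiny helper that runs one tool and immediately forwards what it reported (including its elapsed time). This is a minimal sketch, not an arcpy API: `run_and_relay` is a hypothetical name, and it assumes you pass `arcpy.GetMessages` and `arcpy.AddMessage` in as the two callables, which also lets it run outside ArcGIS with stand-ins.

```python
from typing import Any, Callable


def run_and_relay(tool: Callable[..., Any], *args: Any,
                  get_messages: Callable[[], str],
                  add_message: Callable[[str], None] = print,
                  **kwargs: Any) -> Any:
    """Run one tool, then forward the messages it produced (hypothetical helper).

    Assumed usage in a script tool:
        run_and_relay(arcpy.analysis.Buffer, fc1, fcbuf1, 15,
                      get_messages=arcpy.GetMessages,
                      add_message=arcpy.AddMessage)
    """
    result = tool(*args, **kwargs)
    # arcpy.GetMessages() returns the messages of the most recently run tool,
    # so they must be read before the next tool starts.
    add_message(get_messages())
    return result
```

Passing the message functions in, rather than importing arcpy inside the helper, is just a design choice that keeps the sketch testable without ArcGIS.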


One of us doesn't understand the other...

I have a script (just an example):

```python
import arcpy

arcpy.analysis.Buffer(fc1, fcbuf1, 15)
arcpy.analysis.Buffer(fc2, fcbuf2, 22)
arcpy.analysis.Buffer(fc3, fcbuf3, 31)
```

It takes 10 minutes to run.

I would like to see three separate entries in the history, one for each buffer command, so I know how the 10 minutes are split between the different gp tools.


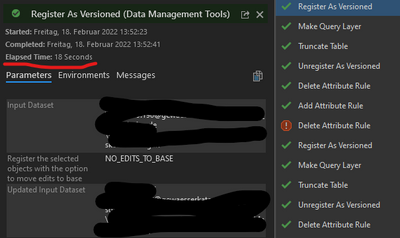

So you want to see the tools in the geoprocessing history? This is already the case (at least for me):

- go to the geoprocessing history

- Hovering over the completed tools should give you a window with the tool's parameters, environment, messages and elapsed time:

This works for both scripts and custom tools (python toolbox, haven't tested script tools).

My code snippet gets the log messages of the tool you ran last (which should include start time, end time, elapsed time, warnings, and errors) and prints them or adds them to your script tool's messages.

Have a great day!

Johannes


Hi,

If I understand correctly, you need code like this:

```python
import time
import arcpy

# time.gmtime turns the elapsed seconds into a struct_time so that
# %H:%M:%S can format it (correct for durations under 24 hours)
start_time = time.time()
arcpy.analysis.Buffer(fc1, fcbuf1, 15)
elapsed_time = time.time() - start_time
arcpy.AddMessage(time.strftime("First operation elapsed %H:%M:%S", time.gmtime(elapsed_time)))

start_time = time.time()
arcpy.analysis.Buffer(fc2, fcbuf2, 22)
elapsed_time = time.time() - start_time
arcpy.AddMessage(time.strftime("Second operation elapsed %H:%M:%S", time.gmtime(elapsed_time)))

start_time = time.time()
arcpy.analysis.Buffer(fc3, fcbuf3, 31)
elapsed_time = time.time() - start_time
arcpy.AddMessage(time.strftime("Last operation elapsed %H:%M:%S", time.gmtime(elapsed_time)))
```
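The repeated start/stop pattern can be folded into a context manager so each step needs only a `with` line. This is a minimal sketch using only the standard library: `timed` is a hypothetical name, `time.perf_counter` is swapped in for `time.time` because it is intended for measuring intervals, and `report` defaults to `print`, so inside a script tool you would pass `arcpy.AddMessage` instead.

```python
import time
from contextlib import contextmanager
from typing import Callable, Iterator


@contextmanager
def timed(label: str, report: Callable[[str], None] = print) -> Iterator[None]:
    """Report how long the enclosed block took, even if it raises."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        report(f"{label} elapsed {elapsed:.2f} s")


# Assumed usage in a script tool (fc1/fcbuf1 as in the buffer example):
# with timed("First buffer", arcpy.AddMessage):
#     arcpy.analysis.Buffer(fc1, fcbuf1, 15)
```

Because the report happens in `finally`, a failing tool still gets its time logged before the exception propagates.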


I don't think you can log individual geoprocessing steps to the geoprocessing history. The closest thing would be logging the execution times to the arcpy messages or a log file. You can get pretty creative with how you set this up to avoid repetitive code. For example, using a dictionary to select and execute each function inside a timer:

```python
import time as t
import datetime as dt
import arcpy

# Each lambda delays the tool call until the loop invokes it
bufferDict = {
    'buffer1': lambda: arcpy.analysis.Buffer(fc1, fcbuf1, 15),
    'buffer2': lambda: arcpy.analysis.Buffer(fc2, fcbuf2, 22),
    'buffer3': lambda: arcpy.analysis.Buffer(fc3, fcbuf3, 31),
}

total_time = t.time()

for name, run_tool in bufferDict.items():
    startTime = t.time()
    run_tool()
    arcpy.AddMessage(f'{name} completed in {dt.timedelta(seconds=t.time() - startTime)}')

arcpy.AddMessage(f'Total time taken: {dt.timedelta(seconds=t.time() - total_time)}')
```

edited to add total time taken
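Since a log file is mentioned as an alternative, a decorator-based variant can send each call's duration through Python's `logging` module, so the same timings can go to a file, the console, or `arcpy.AddMessage`. This is a sketch under assumptions: `log_time` and `buffer_step` are hypothetical names, and the log file name in the comment is only an example.

```python
import functools
import logging
import time
from typing import Any, Callable, TypeVar

F = TypeVar("F", bound=Callable[..., Any])

# Without configuration, INFO records are dropped; for a log file you might use:
# logging.basicConfig(filename="gp_timings.log", level=logging.INFO)
logger = logging.getLogger("gp_timings")


def log_time(report: Callable[[str], None] = logger.info) -> Callable[[F], F]:
    """Decorator factory: report the wall-clock time of every call."""
    def decorator(func: F) -> F:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                report(f"{func.__name__} took {time.perf_counter() - start:.2f} s")
        return wrapper  # type: ignore[return-value]
    return decorator


@log_time()  # or @log_time(arcpy.AddMessage) inside a script tool
def buffer_step(in_fc: str, out_fc: str, distance: float) -> None:
    """Hypothetical step; the real body would call e.g. arcpy.analysis.Buffer."""
    pass
```

Compared with the dictionary approach, the timing lives on the function definition, so the calling code stays unchanged.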


Don't you think it would be a helpful option?

In the Pro SDK there is GPExecuteToolFlags.AddToHistory, which adds each gp tool to the history.

This would save a lot of AddMessage calls in Python (especially when you have a lot of code that isn't just gp tools) and would let you see in the history the actual parameters each tool was run with.
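`GPExecuteToolFlags.AddToHistory` is a Pro SDK option, and this thread doesn't show an arcpy equivalent. A rough script-side approximation, assuming a summary in the tool messages is acceptable instead of History-pane entries, is to route each call through a small recorder that keeps the tool's name, the parameters it was actually called with, and its elapsed time. `CallHistory` and `ToolRecord` are hypothetical names, not part of arcpy.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class ToolRecord:
    name: str
    args: Tuple[Any, ...]
    kwargs: Dict[str, Any]
    seconds: float


@dataclass
class CallHistory:
    """Hypothetical script-side stand-in for a per-tool history."""
    records: List[ToolRecord] = field(default_factory=list)

    def run(self, tool: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        try:
            return tool(*args, **kwargs)
        finally:
            # Recorded even if the tool raises, so failed steps show up too
            name = getattr(tool, "__name__", repr(tool))
            self.records.append(
                ToolRecord(name, args, kwargs, time.perf_counter() - start))

    def report(self, add_message: Callable[[str], None] = print) -> None:
        for rec in self.records:
            add_message(f"{rec.name}{rec.args} {rec.kwargs}: {rec.seconds:.2f} s")


# Assumed usage in a script tool:
# history = CallHistory()
# history.run(arcpy.analysis.Buffer, fc1, fcbuf1, 15)
# history.report(arcpy.AddMessage)
```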


I think getting that granular would add too much clutter to the history. As @JohannesLindner mentioned, you can already hover over the task (or right-click it and view the details) to access the messages generated within that task, so the information isn't far away.

Pro SDK and arcpy serve two different purposes, and I think adding two lines of code wherever you want to capture time in a script is a lot more maintainable than extending Pro through the SDK to display the parameters of individual tools run within a script. The beauty of the SDK is customization, and maybe you can create an extension that displays what you're after, but wouldn't you be adding a lot more code elsewhere just to avoid a few redundant lines in a script?

You can always ask for it on the Ideas board, but I think the answer will be to use AddMessage().