- Home

- :

- All Communities

- :

- Developers

- :

- Python

- :

- Python Questions

- :

- reclassify using Numpy, out of memory

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

EDIT: although I marked Dan's answer re: making the array an integer array, which was the last piece I needed, I created a summary at the end https://community.esri.com/message/610325#comment-610325 to put all the pieces that worked together. I suggest starting there if running into this problem.

Bottom line: trying to get reclass elevation raster (in m), that include “water” and NoData, and Denali foothills, to 3-4 class (based on feet---keeping the converted layer, not in memory), then convert to polygons.

For the study are I used for development (and one other), the raster Reclassify and arcpy.RasterToPolygon_conversion this worked relatively fast. (max few minutes)

I’m now running it on an area about 2x larger (~25m cells, 6657 col 13035 row), with a wider range of input values. When using my script/tool or testing in the python window, I run out of memory after about 4 hours.

So I’m diving into Numpy and https://community.esri.com/people/Dan_Patterson ‘s blog https://community.esri.com/blogs/dan_patterson/2016/03/12/reclassify-raster-data-simply

After a clean reboot, I’m trying my test script in the python window, but I’m still getting an out of error message. I’m not sure if I have something in error in my script, or it is just too large for the 32bit-python to handle. So, I’m open to suggestions…..maybe processing half at a time?? I have tried but a full raster with no nodata, and one that has nodata outside the side area boundary.

Test script I am using (parts borrowed from Dan's demo scripts)

import time

import os

import arcpy

from arcpy import env

from arcpy.sa import *

import numpy as np

from textwrap import dedent

arcpy.CheckOutExtension("spatial")

def arr_reclass(a, bins=[], new_bins=[], mask=False, mask_val=None):

"""a - integer or floating point array to be reclassed using

bins - sequential list/array of the lower limits of each class

include one value higher to cover the upper range.

mask - whether the raster contains nodata values or values to

be masked with mask_val

array dimensions will be squeezed

"""

a_rc = np.zeros_like(a)

if (len(bins) < 2): # or (len(new_bins <2)):

print("Bins = {} new = {} won't work".format(bins,new_bins))

return a

if len(new_bins) < 2:

new_bins = np.arange(1,len(bins)+2)

new_classes = zip(bins[:-1],bins[1:],new_bins)

for rc in new_classes:

q1 = (a >= rc[0])

q2 = (a < rc[1])

z = np.where(q1 & q2, rc[2],0)

a_rc = a_rc + z

return a_rc

theWorkspace = r'C:\___bear2016\_Skwentna2017\Skwentna_prep.gdb'

arcpy.env.workspace = theWorkspace

arcpy.env.overwriteOutput = True

wsPath, wsGDB = os.path.split(theWorkspace)

print("Project gdb (vector, i.e. for points, lines, polygons): {0}".format(theWorkspace))

inStudy = r'C:\___bear2016\_Skwentna2017\Skwentna_prep.gdb\Study16b'

stdyPath, stdyName = os.path.split(inStudy)

print("Study boundary: {0}".format(stdyName))

spatialref = arcpy.Describe(inStudy).spatialReference

rasterIn = r'C:\___bear2016\_Skwentna2017\Skwentna_raster.gdb\elevExtent'

#rasterIn = r'C:\___bear2016\_Skwentna2017\Skwentna_raster.gdb\elevClip'

inRas = arcpy.Raster(rasterIn)

lowerLeft = arcpy.Point(inRas.extent.XMin, inRas.extent.YMin)

cellSize = inRas.meanCellWidth

rasterGDB, rasterFile = os.path.split(rasterIn)

elevUnits = "meters"

elevCutoffFt = "6000"

flatElevBreak = "2000"

elevBreaks = flatElevBreak.split("; ")

elevBreaks.sort(key=float)

flatElevBreak = ";".join(elevBreaks)

flatElevBreak

'2000'

hydroBuff = r'C:\___bear2016\_Skwentna2017\Skwentna_prep.gdb\hydroBuff250'

finalTransZones = arcpy.os.path.join(theWorkspace, "TransAreas")

outRiparian = arcpy.os.path.join(theWorkspace, "TransRiparian")

pElevClass = arcpy.os.path.join(theWorkspace, "elevReclassPoly")

outDEMtmp = arcpy.os.path.join(theWorkspace, "elevReclass")

if elevUnits == "meters": # 3.280839895013123

toElevFeet = 3.28084

else: # note, IF had to go from feet to meters, factor would be 0.3048

toElevFeet = 1

elevFeet = (inRas * toElevFeet)

saveElevFeet = arcpy.os.path.join(rasterGDB, "elevFt")

elevFeet.save(saveElevFeet)

elevMin = elevFeet.minimum - 1

elevMax = elevFeet.maximum + 1

noDataReclass = 99999

elevSplit = flatElevBreak.split(";")

lstCnt = 1

#reclassArg = []

bins = []

new_bins = []

for reclassValue in elevSplit:

if lstCnt == 1:

#reclassArg.append([int(elevMin), int(reclassValue), int(reclassValue)])

bins.append(int(elevMin))

bins.append(int(-1))

bins.append(int(reclassValue))

new_bins.append(int(-1))

new_bins.append(int(reclassValue))

else:

#reclassArg.append([int(prevValue), int(reclassValue), int(reclassValue)])

bins.append(int(reclassValue))

new_bins.append(int(0))

new_bins.append(int(reclassValue))

lstCnt += 1

prevValue = reclassValue

#reclassArg.append([int(prevValue), int(elevCutoffFt), int(elevCutoffFt)])

#reclassArg.append([int(elevCutoffFt), int(elevMax), 9])

bins.append(int(elevCutoffFt))

bins.append(int(elevMax))

new_bins.append(int(elevCutoffFt))

new_bins.append(9)

new_classes = zip(bins[:-1],bins[1:],new_bins)

print(" ...Old Ranges:\n {0}".format(bins))

print(" ...New values:\n {0}".format(new_bins))

print(" ...New classes:\n {0}".format(new_classes))

arr = arcpy.RasterToNumPyArray(inRas, nodata_to_value=9999999)

mask = False

mask_val = None

print(arr)

arr_rc = arr_reclass(arr, bins=bins, new_bins=new_bins)The error I am getting is on the last line, however, looking at the resources, there is plenty of RAM available, so I'm assuming it is just the Arcmap/Python limit.

Output from script (in ArcMap python window)

Project gdb (vector, i.e. for points, lines, polygons): C:\___bear2016\_Skwentna2017\Skwentna_prep.gdb

Study boundary: Study16b

...Old Ranges:

[-107504, -1, 2000, 6000, 20036]

...New values:

[-1, 2000, 6000, 9]

...New classes:

[(-107504, -1, -1), (-1, 2000, 2000), (2000, 6000, 6000), (6000, 20036, 9)]

[[ 205.16717529 205.25009155 205.29598999 ..., 1037.9387207

1037.86242676 1037.83959961]

[ 205.22860718 205.3127594 205.37818909 ..., 1037.93701172

1037.84533691 1037.76965332]

[ 205.29447937 205.38237 205.46986389 ..., 1037.91918945

1037.75964355 1037.76477051]

...,

[ 1481.97961426 1492.3326416 1504.91308594 ..., 510.62741089

504.97958374 501.19992065]

[ 1469.56079102 1486.19445801 1505.37487793 ..., 506.6071167

501.30090332 497.77194214]

[ 1453.34509277 1471.94152832 1493.07250977 ..., 501.66564941

497.09884644 494.20864868]]

Runtime error

Traceback (most recent call last):

File "<string>", line 115, in <module>

File "<string>", line 30, in arr_reclass

MemoryError

>>>

If I need to split it due to size, some guidance for that would be good. The one nice thing about this numpy process, I get the error immediately instead of 4-hours in....

Thanks for any help!

ps - Dan....is this question seen by more than the 13 members/followers? Nice "place" but want to make sure others have a chance to find/reply

tagging a few more areas Python python snippets Imagery and Remote Sensing

Solved! Go to Solution.

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

The data type of the array must be integer, I think you still have floating point values, so here is a sample, converting one of my random integer .npy files into an integer, an image, then a polygon

>>> az

array([[ 0., 0., 6., ..., 0., 0., 4.],

[ 0., 0., 0., ..., 0., 2., 0.],

[ 0., 4., 0., ..., 10., 0., 0.],

...,

[ 2., 0., 0., ..., 0., 0., 2.],

[ 0., 0., 0., ..., 0., 8., 0.],

[ 10., 4., 0., ..., 0., 0., 6.]])

>>>

>>> eee_zee = az.astype(int)

>>> eee_zee

array([[ 0, 0, 6, ..., 0, 0, 4],

[ 0, 0, 0, ..., 0, 2, 0],

[ 0, 4, 0, ..., 10, 0, 0],

...,

[ 2, 0, 0, ..., 0, 0, 2],

[ 0, 0, 0, ..., 0, 8, 0],

[10, 4, 0, ..., 0, 0, 6]])

>>> out = arcpy.NumPyArrayToRaster(eee_zee)

>>> out.save("f:/temp/ez.tif")

>>>

>>> p = arcpy.RasterToPolygon_conversion("f:/temp/ez.tif","f:/temp/ez_.shp","NO_SIMPLIFY")

>>>Now, you have to be aware that it will make many unique areas IF you have many areas. That could be limitation

I just used the no_simplify and sent it out to a shapefile which loaded into arcmap

The keep line is eee_zee = az.astype(int) ... that sent the floating point array to an integer view. Then I saved it to a raster (tif) then to a shapefile

Next step... I want to see an image of what you are working with

PS in Global Reach, it has had 2163 view but only 12 people...

so these people...

- have no life other than to view this thread multiple times,

- there a whole load of unsigned in people reading this thread or

- there is something wrong with global reach views

Timothy Hales can you provide some insight

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

BTW - I ran the script above on the other study area, and it ran without any issue, i.e. no out of memory error, and I was able to use

test20a = arcpy.NumPyArrayToRaster(arr_rc)

to get it back into a raster, so that makes me believe that is it just an issue with the size of the raster (Col x row), so again, maybe there is an easy way for me to process half at a time, then merge them back....without having overlap/gap issues? That is the approach I will take next if I don't get any better suggestions/options. thanks again for looking.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

quick questions

- python version (please say 3.4)

- numpy version (please say 1.9.x)

comment

- how many rasters are you saving (count them) before you actually get to the array reclass???!!!

- too many... they are still in memory and references to them don't get deleted until AFTER the array is reclassed

solution

- prepare your array for reclassification or whatever, then do

- np.save('c:/test/your_data.npy', your_array) # saves in binary format

- in_array = np.load('c:/test/your_data.npy')

Homework

>>> np.save('/tmp/123', np.array([[1, 2, 3], [4, 5, 6]]))

>>> a = np.load('/tmp/123.npy')

>>> a

array([[1, 2, 3],

[4, 5, 6]])

Now if memory really sucks, you can read in bits

Mem-map the stored array, and then access the second row

directly from disk:

>>> X = np.load('/tmp/123.npy', mmap_mode='r')

>>> X[1, :]

memmap([4, 5, 6])

I will be up most of the night writing, so get back to me.

And if we can't solve this, you may have to MOVE it to python, since it won't be 'seen' unless they have access to the N.R

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

quick questions

- python version (please say 3.4) Default ArcGIS 10.3.1, so assuming 2.7

- numpy version (please say 1.9.x) same, so ??

comment

- how many rasters are you saving (count them) before you actually get to the array reclass???!!! only dealing with one raster/array...size specified in OP

- too many... they are still in memory and references to them don't get deleted until AFTER the array is reclassed Just one reclass, and it crashes...rebooted to clear memory,

solution

- prepare your array for reclassification or whatever, then do

- np.save('c:/test/your_data.npy', your_array) # saves in binary format

- in_array = np.load('c:/test/your_data.npy')

Homework

>>> np.save('/tmp/123', np.array([[1, 2, 3], [4, 5, 6]]))

>>> a = np.load('/tmp/123.npy')

>>> a

array([[1, 2, 3],

[4, 5, 6]])

Now if memory really sucks, you can read in bits

Mem-map the stored array, and then access the second row

directly from disk:

>>> X = np.load('/tmp/123.npy', mmap_mode='r')

>>> X[1, :]

memmap([4, 5, 6])

I'll give that a try. Thanks for responding Dan.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

See my comments about saving and loading the array from disk. Arcpy is notorious for stealing and not giving back. Once you have your raster converted to an array, you don't need arcmap any more

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

I did the "homework" so see how it should work, but when doing it with the real data, still getting memory issues

..... arr = arcpy.RasterToNumPyArray(inRas, nodata_to_value=9999999) np.save(r"C:\___bear2016\_Skwentna2017\outras.npy", arr) #save in binary format in_array = np.load(r"C:\___bear2016\_Skwentna2017\outras.npy") mask = False mask_val = None print(arr) #arr_rc = arr_reclass(arr, bins=bins, new_bins=new_bins) arr_rc = arr_reclass(in_array, bins=bins, new_bins=new_bins)

So, I think my best bet work be to find a "clean" and automated (since this will be done in different study areas) to split, reclass, con, and then convert to poly. I'll go ahead and move this to the python space to get more input. (I like the design of you numpy space...very organized!)

I'm not sure how much more I'll be able to work on this until Monday, but will try if I find anything that looks promising.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

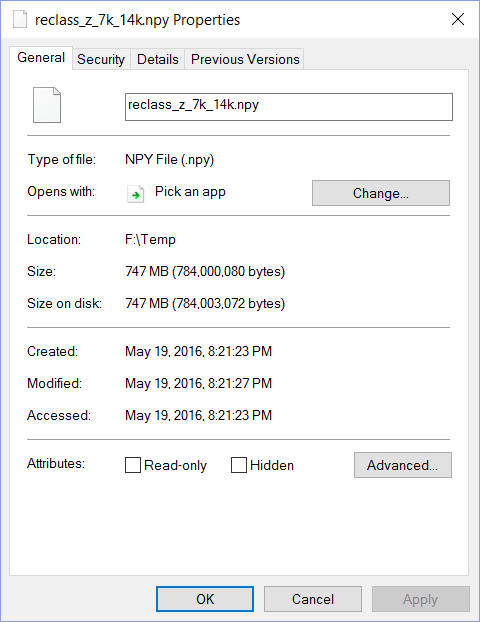

It works... here is the script and how I created a floating point array in the range 0-100.0 and reclassed to 8 bins of 1 to 8.

# coding: utf-8 """ Script: reclass_array_demo.py Author: Dan.Patterson@carleton.ca Modified: 2016-05-19 Purpose: For Rebecca... array has no, nodata to simplify References: RasterToNumPyArray and NumPyArrayToRaster can be used to and from raster formats of different types. """ import numpy as np from textwrap import dedent def arr_reclass(a, bins=[], new_bins=[], mask=False, mask_val=None): """a - integer or floating point array to be reclassed using bins - sequential list/array of the lower limits of each class include one value higher to cover the upper range. mask - whether the raster contains nodata values or values to be masked with mask_val array dimensions will be squeezed """ a_rc = np.zeros_like(a) if (len(bins) < 2): # or (len(new_bins <2)): print("Bins = {} new = {} won't work".format(bins,new_bins)) return a if len(new_bins) < 2: new_bins = np.arange(1,len(bins)+2) new_classes = zip(bins[:-1],bins[1:],new_bins) for rc in new_classes: q1 = (a >= rc[0]) q2 = (a < rc[1]) z = np.where(q1 & q2, rc[2],0) a_rc = a_rc + z return a_rc if __name__=="__main__": """For Rebecca Uncomment the #z and #np.save lines to create the array, then you can load it blah blah""" r = 7000 c = 14000 #z = np.abs(100 * np.random.random_sample((r,c)) -100) # make an array with rand 0-100 #np.save("f:/temp/z_7k_14k.npy",z) z = np.load("f:/temp/z_7k_14k.npy") bins = [0,5,10,15,20,25,30,60,100] new_bins = [1, 2, 3, 4, 5, 6, 7, 8] mask = False mask_val = None a_rc = arr_reclass(z, bins=bins, new_bins=new_bins) np.save("f:/temp/reclass_z_7k_14k.npy",a_rc)

So it works, but it too about 2 seconds to complete, so I may have to see if I can vectorize it some more to keep within in my one coffee sip requirement

And here are the results which are as expected for the classes given and the sampling framework

>>> a_rc

array([[ 7., 6., 8., ..., 8., 7., 7.],

[ 7., 7., 7., ..., 8., 7., 8.],

[ 7., 7., 8., ..., 8., 7., 7.],

...,

[ 8., 8., 7., ..., 8., 4., 6.],

[ 8., 7., 7., ..., 7., 7., 8.],

[ 2., 1., 8., ..., 8., 3., 8.]])

>>> a_rc.shape

(7000, 14000)

>>> np.histogram(a_rc,bins=[1, 2, 3, 4, 5, 6, 7, 8])

(array([ 4903001, 4898616, 4900343, 4899584, 4898414, 4901199, 68598843]), array([1, 2, 3, 4, 5, 6, 7, 8]))

>>>

array size on disk

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Thanks for that Dan, but even creating your random array, saving etc (and ~765 MB...2x the size of mine), I'm getting a memory error, although different than the "out_of_memory" I was getting before

Runtime error

Traceback (most recent call last):

File "<string>", line 51, in <module>

File "<string>", line 33, in arr_reclass

MemoryError

My desktop is older, but semi-beefy...better than what most get. I may try to run your script on my home machine to see if it works.

I still think my best bet will be to split the array "in half" or thirds, process each separately, then merge them back together. Just thinking out loud, but my guess that this would be a simple thing to do, once it is in an array. Query the number of items, based on the size, decide how many parts, write each part to a file, load one at a time (overwriting the memory space) to do the reclass, write it back to disk, process the next. Once done, stitch them back together and get it back into a raster for processing. what do you think??

Also, is there aeasy what to flush the memory if using this in a tool? This will be part of my Toolbox (and maybe addin), run by various users and areas, so making it work...even if in chunks..is important. I've tried using the function clearINMEM() by James Crandall, but my guess this isn't actually using the "IN_MEMORY" space ??

(time for me to head home...)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Rebecca, the RasterToNumpyArray function supports extracting smaller chunks from the raster using the {lower_left_corner}, {ncols}, {nrows} parameters:

RasterToNumPyArray (in_raster, {lower_left_corner}, {ncols}, {nrows}, {nodata_to_value})

So you could loop through and reclass a smaller array each time.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Luke that is true, but you can keep arcpy out of it using mem-map which is essentially the same.

Mem-map supports 2 GB of memory, so I am not sure if it because I am using python 3.4.x and numpy 1.9.x for these tests (just using the ArcGIS Pro 1.2 install versions... nothing fancy this time)

I might be worth a while, since you have the array saved to disk, you can tile it, horizontally just to make stacking easier when you are done. I will post an example later

From the help topic in np.load.....

Mem-map the stored array, and then access the second row

directly from disk:

>>> X = np.load('/tmp/123.npy', mmap_mode='r')

>>> X[1, :]

memmap([4, 5, 6])