Python script to copy feature classes from feature datasets

Hi All,

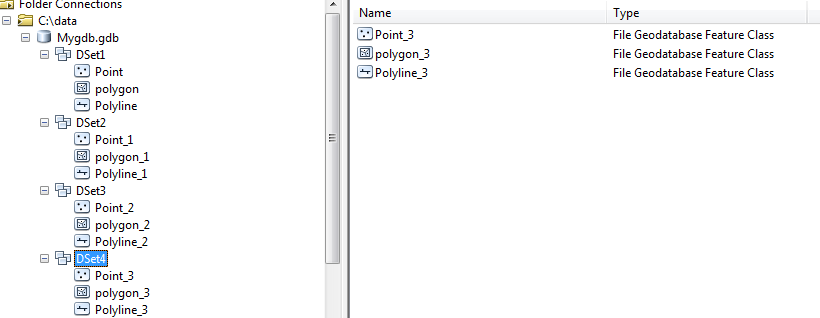

I have a geodatabase that contains about 100 feature datasets, and each feature dataset contains 2 to 4 feature classes (see the example of my geodatabase and its contents above).

I want to copy a polyline feature class (its name in every feature dataset starts with "polyline") from every feature dataset into a new geodatabase as a standalone feature class. I want each new feature class to be named "Polyline_" + the name of its feature dataset. For example, if the original feature dataset and feature class are "Dset4" and "Polyline10_3" respectively, the new feature class will be named "Polyline_Dset4". I also want the new feature classes to have fewer fields than the originals. Can anyone help me write a Python script that does this? Thanks in advance.

Accepted Solutions

You will want to use arcpy.da.Walk and list the datasets with a "Feature" dataset type filter, then get a list of polylines using ListFeatureClasses with a "Polyline" filter. Then, for each polyline, run Copy Features to copy it into your new geodatabase. The os module comes in handy for building the paths.

Something like this would work, but you can adapt it to what you need:

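    import arcpy, os
    from arcpy import env

    env.workspace = "Your geodatabase path"
    outputGDB = "The new geodatabase path"

    for gdb, datasets, features in arcpy.da.Walk(env.workspace):
        # At the geodatabase root, `datasets` holds the feature dataset names
        for dataset in datasets:
            # Polyline feature classes whose names start with "Polyline"
            for feature in arcpy.ListFeatureClasses("Polyline*", "POLYLINE", dataset):
                # Use the full input path; name the output "Polyline_" + dataset
                # (assumes one matching polyline per feature dataset)
                arcpy.CopyFeatures_management(
                    os.path.join(gdb, dataset, feature),
                    os.path.join(outputGDB, "Polyline_" + dataset))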
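The question also asked for the copies to carry fewer fields than the originals, which the snippet above does not handle. A minimal sketch, assuming you are happy to copy first and then delete unwanted fields from the copy: `fields_to_drop` and `trim_fields` are hypothetical helper names, and the list of fields to keep is yours to supply. Fields that arcpy reports as required (such as ObjectID and Shape) are never dropped.

```python
def fields_to_drop(fields, keep):
    """Return names of non-required fields that are not listed in `keep`.

    `fields` is an iterable of (name, required) pairs. Names are compared
    case-insensitively.
    """
    keep_lower = {k.lower() for k in keep}
    return [name for name, required in fields
            if not required and name.lower() not in keep_lower]


def trim_fields(fc, keep):
    """Delete every optional field of `fc` that is not listed in `keep`."""
    import arcpy  # imported here so fields_to_drop can be used without ArcGIS
    fields = [(f.name, f.required) for f in arcpy.ListFields(fc)]
    drop = fields_to_drop(fields, keep)
    if drop:
        # Modifies the table in place, so only run this on the new copies
        arcpy.management.DeleteField(fc, drop)
```

For example, call `trim_fields(out_fc, ["NAME", "LENGTH_KM"])` right after each Copy Features call (those field names are placeholders). If you would rather not copy fields only to delete them, Feature Class To Feature Class with a field mapping is another route.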

Thanks Luke,

This is perfect.

The ArcPy Data Access Walk function can filter by data type and geometry type on its own, so ListFeatureClasses isn't needed:

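    import arcpy, os

    gdb_in = "Your input geodatabase path"
    gdb_out = "Your output geodatabase path"

    # Only polyline feature classes are returned
    walk = arcpy.da.Walk(gdb_in, datatype="FeatureClass", type="Polyline")

    for root, dirs, files in walk:
        # Skip standalone feature classes at the geodatabase root;
        # only process those inside feature datasets
        if root != gdb_in:
            for f in files:
                arcpy.CopyFeatures_management(
                    os.path.join(root, f),
                    os.path.join(gdb_out, "Polyline_" + os.path.basename(root)))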

The above assumes there is only one polyline feature class in each feature dataset. If a dataset holds more than one, the feature class names could also be handled in the for loop so the outputs don't collide.
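One way to handle that case, sketched under assumptions: `output_name` and `copy_polylines` are hypothetical names, and the source feature class name is appended only when a feature dataset contains more than one polyline. The root comparison uses `os.path.normpath` so that a trailing slash on the input path doesn't defeat the root check.

```python
import os


def output_name(dataset, fc_name, count):
    """Build the output name, adding the source name only when needed."""
    if count == 1:
        return "Polyline_" + dataset
    return "Polyline_{}_{}".format(dataset, fc_name)


def copy_polylines(gdb_in, gdb_out):
    import arcpy  # imported here so output_name works without ArcGIS
    walk = arcpy.da.Walk(gdb_in, datatype="FeatureClass", type="Polyline")
    for root, dirs, files in walk:
        if os.path.normpath(root) == os.path.normpath(gdb_in):
            continue  # skip standalone feature classes at the gdb root
        dataset = os.path.basename(root)
        for f in files:
            out_fc = os.path.join(gdb_out, output_name(dataset, f, len(files)))
            arcpy.CopyFeatures_management(os.path.join(root, f), out_fc)
```

With one polyline in "Dset4" the output is still "Polyline_Dset4"; with two, each copy gets its own suffix, for example "Polyline_Dset4_Polyline10_3".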

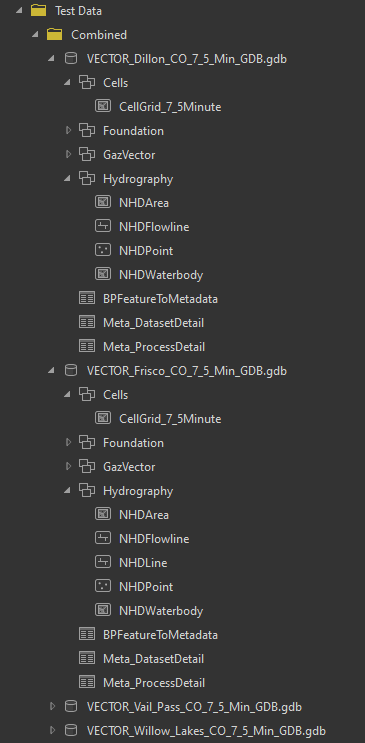

I am interested in this because I have a similar but slightly different problem. I have multiple geodatabases in one folder that I would like to 'walk' through. The feature datasets and feature classes in each geodatabase have the same names as those in the other geodatabases; I expanded a few feature datasets in the screenshot to show this.

How would I go about 'walking' through a workspace that contains multiple geodatabases, copying the feature classes in each and appending the respective geodatabase name to each copied feature class?
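A possible starting point, sketched under assumptions: the file geodatabases sit directly inside one folder as `*.gdb` directories, everything is copied into a single output geodatabase (`gdb_out`), and `find_gdbs`, `suffixed_name` and `copy_all` are hypothetical helper names. Listing the `.gdb` folders explicitly keeps track of which geodatabase each feature class came from, and `arcpy.ValidateTableName` cleans up names when a geodatabase name contains characters not allowed in feature class names.

```python
import os


def find_gdbs(folder):
    """Return sorted paths of *.gdb directories directly inside `folder`."""
    return sorted(
        os.path.join(folder, name)
        for name in os.listdir(folder)
        if name.lower().endswith(".gdb")
        and os.path.isdir(os.path.join(folder, name))
    )


def suffixed_name(fc_name, gdb_path):
    """Append the geodatabase name (without .gdb) to a feature class name."""
    gdb_name = os.path.splitext(os.path.basename(os.path.normpath(gdb_path)))[0]
    return "{}_{}".format(fc_name, gdb_name)


def copy_all(folder, gdb_out):
    """Copy every feature class from each geodatabase in `folder` to gdb_out."""
    import arcpy  # imported lazily so the helpers above run without ArcGIS
    for gdb in find_gdbs(folder):
        if os.path.normpath(gdb) == os.path.normpath(gdb_out):
            continue  # don't read from the output geodatabase itself
        for root, dirs, files in arcpy.da.Walk(gdb, datatype="FeatureClass"):
            for f in files:
                # e.g. "Roads" from "Site1.gdb" becomes "Roads_Site1"
                name = arcpy.ValidateTableName(suffixed_name(f, gdb), gdb_out)
                arcpy.CopyFeatures_management(
                    os.path.join(root, f), os.path.join(gdb_out, name))
```

Because the feature datasets share names across geodatabases, this writes everything as standalone feature classes in `gdb_out`; recreating the feature datasets there would need extra steps.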