Could this be better (data transfer Access -> SDE)?

Of all the programming languages I know and use, Python is at the bottom, so I want to post this script to see whether what I have is as efficient as it can be. The backstory: our organization has a web map showing stream gage information. The gage information comes into and is stored in an Access database. The third-party stream gage software runs an annual maintenance on the Access database, so we cannot link to the table directly because that would conflict with the maintenance.

My solution was to develop a simple script that connects to the Access database, selects all records in a given query, and transfers them to a table in SDE which, in turn, is consumed by the map's services. The script runs as a Windows scheduled task every five minutes and first tests whether it's "safe" to connect to the Access database. If it is, the data is read and passed on to the table. On average, the Access database holds roughly 2,200-2,400 records. Initially, the script took about 40 seconds to run, but lately it has taken longer (hence this post).
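Before changing anything, it can help to measure which stage (clearing the SDE table, reading from Access, or inserting rows) accounts for the extra time. The following is a minimal sketch of a timing helper; the names `timed` and `timings` are illustrative and not part of the script, and the `time.sleep` call merely stands in for a real step.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, results):
    """Store the wall-clock seconds spent inside the with-block under `label`."""
    start = time.time()
    try:
        yield
    finally:
        # Recorded even if the wrapped step raises, so failures are timed too.
        results[label] = time.time() - start


timings = {}

# Placeholder step; in the real script this would wrap, for example,
# the DeleteRows call or the cursor loop.
with timed("placeholder step", timings):
    time.sleep(0.01)

for label, seconds in timings.items():
    print("%s took %.3f s" % (label, seconds))
```

Wrapping each stage in its own `with timed(...)` block and writing `timings` to the log would show whether the slowdown comes from the delete or from the row-by-row insert.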

So, here is the script. I've left all the comments in it, so hopefully there's no confusion about what's happening. Is there a better way to approach and accomplish this task? Thanks!

Steve

Script:

import sys
import os
import linecache
import logging
import arcpy
from datetime import datetime
from arcpy import env

file01 = r"\\pmc-floodwatch\DIADvisorDatabases\DvLive.mok" #This file must exist
file02 = r"\\pmc-floodwatch\DIADvisorDatabases\DvLive.mno" #This file must NOT exist
expression = '1=1' #SQL shorthand which selects all records
theTable = "tblGageData"

#Establish the error log file
logger = logging.getLogger('errorLog')
hdlr = logging.FileHandler(r'\\snoco\gis\pw\tes\spwscc\python\errorLog.log')
logger.addHandler(hdlr)

# The tables within DIADvisor must not be accessed during its daily database maintenance.
# OneRain recommends checking for the existence and non-existence of two specific files.
# If both conditions are true, it is safe to proceed with connecting to the data within
# the dvLive Access database
if os.path.exists(file01) and not os.path.exists(file02):
    print "Processing start time: " + str(datetime.now())
    env.workspace = r"C:\gishome\tasks\flood_warning_system\_SPW_GDBMGR@GIS_PW_SWM.sde"
    try:
        # Set some local variables
        tempTableView = "gageTableView"

        # Execute MakeTableView
        arcpy.MakeTableView_management(theTable, tempTableView)

        # Execute SelectLayerByAttribute to select all records
        arcpy.SelectLayerByAttribute_management(tempTableView, "NEW_SELECTION", expression)

        # Execute GetCount and if some records have been selected, then execute
        # DeleteRows to delete the selected records.
        if int(arcpy.GetCount_management(tempTableView).getOutput(0)) > 0:
            arcpy.DeleteRows_management(tempTableView)

        # Now connect to the DIADvisor access database and import the most recent data
        # This requires the OLE DB connection previously established using ArcCatalog
        counter = 0
        accessRows = arcpy.SearchCursor(r"C:\gishome\tasks\flood_warning_system\jetConnectForDvLive.odc\last3days")
        curSde = arcpy.InsertCursor(r"C:\gishome\tasks\flood_warning_system\_SPW_GDBMGR@GIS_PW_SWM.sde\tblGageData")

        # Loop through the results returned via the OLE DB connection
        for cRow in accessRows:
            curSensorId = cRow.sensor_id
            curEpoch = cRow.epoch
            curData = cRow.data
            curDataValue2 = cRow.dataValue2
            counter += 1

            #Insert a new row into the SDE table with the current DIADvisor record's information
            row = curSde.newRow()
            row.SENSOR_ID = curSensorId
            row.EPOCH = curEpoch
            row.DATA = curData
            row.dataValue2 = curDataValue2
            curSde.insertRow(row)

        # We're done so perform some variable cleanup
        del row
        del accessRows
        del curSde
        del cRow
        print "Number of record(s) in the DIADvisor database: " + str(counter)
        print "Processing end time: " + str(datetime.now())
    except Exception as e:
        # If an error occurred, print line number and error message
        exc_type, exc_obj, exc_tb = sys.exc_info()
        fname = os.path.split(exc_tb.tb_frame.f_code.co_filename)[1]
        theMessage = "\n" + 80*"#" + "\n" + 80*"#" + "\n"
        theMessage = theMessage + "DATE/TIME: " + str(datetime.now()) + ":" + "\n"
        theMessage = theMessage + "EXCEPTION: " + str(e) + "\n" + "\n"
        theMessage = theMessage + "CALLBACK TRACE: " + "\n"
        theMessage = theMessage + 20*" " + "File: " + str(exc_tb.tb_frame.f_code.co_filename) + "\n"
        theMessage = theMessage + 20*" " + "Line " + str(exc_tb.tb_lineno) + ": " + str(linecache.getline(exc_tb.tb_frame.f_code.co_filename, exc_tb.tb_lineno))
        theMessage = theMessage + 20*" " + "Exception Type: " + str(exc_type)
        print theMessage
        logger.error(theMessage)
else:
    sys.exit()
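One possible speed-up, offered as a sketch under stated assumptions rather than a tested drop-in replacement: the `arcpy.da` cursors (ArcGIS 10.1 and later) are generally considerably faster than the classic `arcpy.SearchCursor` and `arcpy.InsertCursor`, and `arcpy.TruncateTable_management` empties a table without the view/select/count/delete sequence. Truncate Table only works on non-versioned data and must be run by the data owner, so this assumes both hold for `tblGageData`. The names `copy_rows`, `refresh_table`, `SOURCE_FIELDS`, and `TARGET_FIELDS` are illustrative; the field names are taken from the script above.

```python
# Field names as they appear in the script; order must match between the two lists.
SOURCE_FIELDS = ["sensor_id", "epoch", "data", "dataValue2"]
TARGET_FIELDS = ["SENSOR_ID", "EPOCH", "DATA", "dataValue2"]


def copy_rows(source_rows, insert_row):
    """Pass every row from source_rows to insert_row and return the row count."""
    count = 0
    for row in source_rows:
        insert_row(row)
        count += 1
    return count


def refresh_table(source, target):
    """Empty `target`, then reload it from `source` using arcpy.da cursors.

    Assumes the target is not versioned and the connection is made as the
    data owner, which Truncate Table requires.
    """
    import arcpy  # imported here so copy_rows can be exercised without ArcGIS

    arcpy.TruncateTable_management(target)
    # The with-statements release the cursors, replacing the manual del calls.
    with arcpy.da.SearchCursor(source, SOURCE_FIELDS) as src:
        with arcpy.da.InsertCursor(target, TARGET_FIELDS) as dst:
            return copy_rows(src, dst.insertRow)
```

Calling `refresh_table` with the `last3days` query path and the `tblGageData` path from the script would replace everything between the `try:` and the final `print` statements. Whether the OLE DB connection behaves the same way under `arcpy.da.SearchCursor` is something to verify in your own environment.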

It would depend on who the owner is. If you look in ArcCatalog, what is the fully qualified name of the feature class/table?

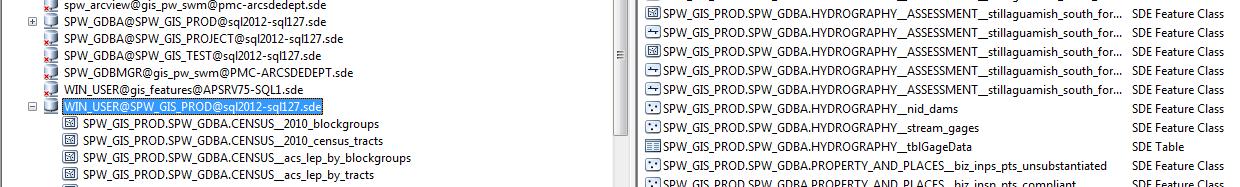

...and here's where things probably get messy. We have two connection possibilities, depending on whether the user is just viewing data or is an "editor" of data in the SDE. Here's the screenshot of the connection as an editor:

The other connection (view only) would appear as WIN_USER@SPW_GIS_PROD@sql2012-sql127.sde:

In this case, you would have to make a connection as SPW_GDBA.

Thanks, guys. This is exactly the kind of feedback I was hoping for.

Christian Wells, yes: the SDE table is wiped clean before the records are transferred. The table is not registered as versioned, so that won't be a problem.

Why not just set up a scheduled import package on the RDBMS to update the table? It seems the best way to manage tabular data is at the database, rather than through some external app that needs to be executed and maintained.

Honestly, I don't know. This just seemed like a reasonable approach to me at the time.

I'm not completely sure what you're suggesting. Are you talking about simply importing the table into SDE every time the task scheduler runs? I kind of thought about that, but since the table is also being consumed in a map service, I wondered if deleting it would cause issues (albeit it would only be deleted for a fraction of time).

Well... this sure sounds like a database management task. Handling it at the database seems far more reasonable, scalable, and maintainable than messy scripting that will be subject to failure over time as system-level changes occur.

I'd get with the DBA and work it out.
