convert shapefile in nested folders to mdb

I have a folder that has like 700 subfolders. Each one has at least one shapefile in it. I would like to take all 700+ and put them in a geodatabase and then start going through them. How do I do this? Python or ModelBuilder is OK.

1.) Walk through your nested folders: either os.walk() or arcpy.da.Walk() would work

2.) Import them, one by one, into your mdb (many tools are possible; perhaps Copy Features).

Yeah, but how do you put that together?

Walk through this folder, and when you get a .shp, move it to the mdb?

The examples in the help show the basic pattern: use walk() to populate a list of files, then for each file (possibly one meeting a criterion, like being a shapefile) do something. Your something is to copy it into an mdb.
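The walk-and-filter half of that pattern can be sketched with the standard library alone. This is a minimal sketch, not a tested solution: `find_shapefiles` is a hypothetical helper name, and `workspacepath` is a placeholder for the top-level folder.

```python
import os


def find_shapefiles(root):
    """Return the full path of every .shp file under root, including subfolders."""
    matches = []
    for dirpath, dirnames, filenames in os.walk(root):
        for filename in filenames:
            # Case-insensitive check so "ROADS.SHP" is picked up as well.
            if filename.lower().endswith(".shp"):
                matches.append(os.path.join(dirpath, filename))
    return sorted(matches)


if __name__ == "__main__":
    # "workspacepath" is a placeholder for the folder holding the subfolders.
    for shp in find_shapefiles(r"workspacepath"):
        print(shp)  # swap the print for the copy step, e.g. Copy Features
```

Printing the paths first is a cheap way to confirm the walk finds what you expect before anything is copied into the mdb.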

Have you tried anything yet?

Can't find any pre-made solution?

How familiar are you with ModelBuilder or Python? Do you have a sample you are having problems with?

What do you intend to do with them when you get them there? Just look at them? Why not just use ArcCatalog to preview them if that is all you need?

I reworked a bit of an old script I had, but this should be close to what you need (Untested).

The original post this is based on is here if you need more reference: https://community.esri.com/thread/107816

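```python
import os

import arcpy

workspace = r"workspacepath"      # top-level folder containing the subfolders
out_mdb = r"filepathtomdbhere"    # existing geodatabase (.mdb) to copy into

# Walk every subfolder, listing only feature classes (shapefiles included).
for dirpath, dirnames, filenames in arcpy.da.Walk(workspace, datatype="FeatureClass"):
    for filename in filenames:
        # splitext drops only the ".shp" extension; str.strip(".shp") would also
        # remove any leading or trailing ".", "s", "h" or "p" from the name itself.
        out_name = os.path.splitext(filename)[0]
        arcpy.CopyFeatures_management(os.path.join(dirpath, filename),
                                      os.path.join(out_mdb, out_name))
```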
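One thing to watch with 700+ subfolders: if two of them hold a shapefile with the same name, both copies would target the same output name in the mdb. Whether that happens in this data is an assumption on my part. A rough way to handle it is to track the names already used and add a numeric suffix; `unique_output_name` below is a hypothetical helper, not part of arcpy.

```python
import os


def unique_output_name(shp_path, used_names):
    """Return an output name for shp_path that is not already in used_names."""
    base = os.path.splitext(os.path.basename(shp_path))[0]
    candidate = base
    suffix = 1
    # Names are compared case-insensitively; this assumes the geodatabase
    # treats "Roads" and "roads" as the same feature class name.
    while candidate.lower() in used_names:
        candidate = "{}_{}".format(base, suffix)
        suffix += 1
    used_names.add(candidate.lower())
    return candidate
```

In the loop, you would create one `used_names = set()` before walking and pass each shapefile path through this helper to get the name used in the output path.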

Hi lela,

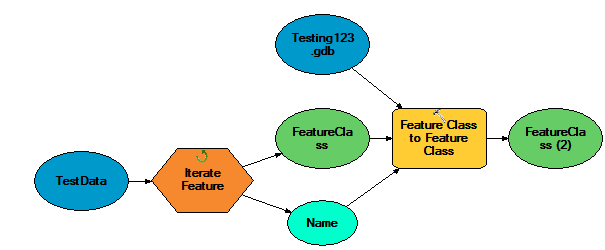

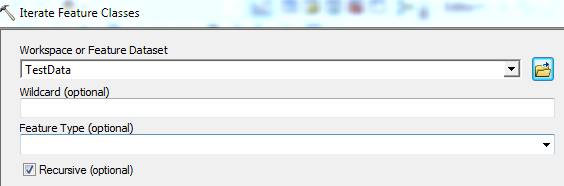

You could also use the feature class iterator (Iterate Feature Classes) and Feature Class to Feature Class (a Conversion tool) in ModelBuilder.

Remember to check the Recursive (optional) box so the iterator goes into the subfolders.

Think Location

So is the test data the folder with the subfolders in it? And then it pulls the feature class name and converts it to the gdb?

YES
