- Home

- :

- All Communities

- :

- Products

- :

- Data Management

- :

- Geodatabase Questions

- :

- RAM for SQL Server hosting Enterprise Geodatabases

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

RAM for SQL Server hosting Enterprise Geodatabases

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi,

We are currently having memory pressure issues with the SQL server box that hosts our enterprise geodatabases.

The specs for the SQL Server Box are as follows:

Intel Xeon CPU E5-2660 v3 @ 2.60 GHz 2.59GHz (2 processors)

Installed memory (RAM): 16 GB

Running SQL 2014 SP1 and Windows Server 2012 R2

We have about 50 spatial databases with a total size of 200GB

We are getting the following errors in ArcMap:

Error exporting data.

Table schema changes after cursor declared [4200:[Microsoft][SQL Server Native Client 11][SQL Server]

Could not complete cursor operation because the schema changed after the cursor was declared.]

AppDomain unload. You may have encountered behavior described in Esri Technical Article 43036 and Microsoft Defect 3374271 [42000:[Microsoft][SQL Server Native Client 11.0][SQL Server]The app domain with specified version id (5870) was unloaded due to memory pressure and could not be found.]

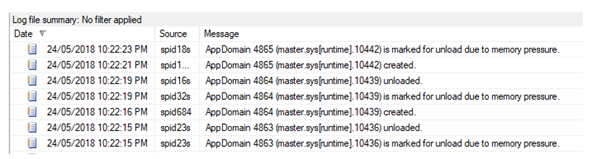

Below is a extract from the SQL Logs:

From a quick google search it appears that we may need an increase in RAM ?

Can anyone let me know the amount of RAM that they are have installed on their SQL boxes. I'm trying to justify

a RAM upgrade to at least 32gb ?

If anyone else has encountered this issue and has another fix other than increasing the RAM please let me know.

Thanks,

Clinton

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

What is the current level of RAM/CPU usage when these errors occur?

Are there any other programs running on this machine that SQL Server is on?

Is this a VM?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi George,

We have FME desktop running on the same box. FME is used to do table joins on a nightly basis.

Yes it the server box is a VM.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

I do not think that FME on the same machine would cause the issue unless it was doing a resource intensive job at the time.

I have seen in the past where having the SQL machine on a VM causes issues, mainly due to over allocated host machines. When the SQL machine really needs resources they may not be available on the host and that can cause issues.

I think increasing the available memory up and then tuning of the SQL database server should help.

Update: Here are some links to UC presentations on SQL Server that may be of use, start with the 2015 presentation

UC 2015: Troubleshooting SQL Server Enterprise Geodatabase performance Issues - http://proceedings.esri.com/library/userconf/proc15/tech-workshops/tw_988-194.pdf ans zip file: http://proceedings.esri.com/library/userconf/proc15/tech-workshops/tw_988-193.zip

UC 2017:

Troubleshooting SQL Server Enterprise Geodatabase Performance Issues: http://proceedings.esri.com/library/userconf/proc17/tech-workshops/tw_460-208.pdf

Administering Your Microsoft SQL Server Geodatabase: http://proceedings.esri.com/library/userconf/proc17/tech-workshops/tw_210-184.pdf

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

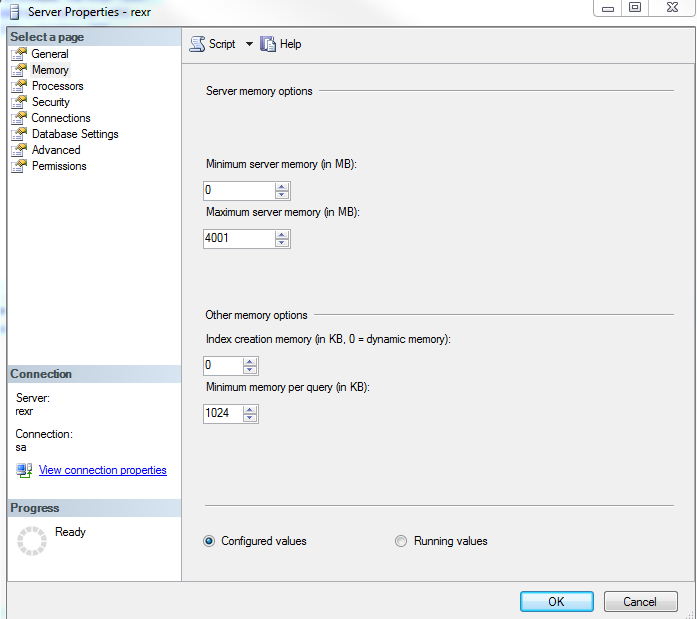

Additionally, has the maximum server memory setting been adjusted to the instance properties on the server? By default, SQL will grow and use as much as it is allocated. That's a good amount of data, and depending on the volume of requests this server is receiving and serving- I could see how 16GB would possibly become limiting. In the screenshot example below, SQL has been capped at 4GB.

If you suspect that there may be specific databases that are taking up too much resources / memory or buffer pool consumption, you can identify the most intensive databases on the instance by running the following query:

The first step would be to run the following query against the SQL Instance to see a list of databases, and the respective buffer pool (% of total) contribution:

DECLARE @total_buffer INT;

SELECT @total_buffer = cntr_value

FROM sys.dm_os_performance_counters

WHERE RTRIM([object_name]) LIKE '%Buffer Manager'

AND counter_name = 'Database Pages';

;WITH src AS

(

SELECT

database_id, db_buffer_pages = COUNT_BIG(*)

FROM sys.dm_os_buffer_descriptors

--WHERE database_id BETWEEN 5 AND 32766

GROUP BY database_id

)

SELECT

[db_name] = CASE [database_id] WHEN 32767

THEN 'Resource DB'

ELSE DB_NAME([database_id]) END,

db_buffer_pages,

db_buffer_MB = db_buffer_pages / 128,

db_buffer_percent = CONVERT(DECIMAL(6,3),

db_buffer_pages * 100.0 / @total_buffer)

FROM src

ORDER BY db_buffer_MB DESC;

Once a particular database has been identified, the following SQL Query can be ran on that database to return a list of all indexes within the database, and their respective size / % contribution to the buffer pool (just update the <databasename> value from the high contributing database(s) from the first query above:

USE <databasename>;

;WITH src AS

(

SELECT

[Object] = o.name,

[Type] = o.type_desc,

[Index] = COALESCE(i.name, ''),

[Index_Type] = i.type_desc,

p.[object_id],

p.index_id,

au.allocation_unit_id

FROM

sys.partitions AS p

INNER JOIN

sys.allocation_units AS au

ON p.hobt_id = au.container_id

INNER JOIN

sys.objects AS o

ON p.[object_id] = o.[object_id]

INNER JOIN

sys.indexes AS i

ON o.[object_id] = i.[object_id]

AND p.index_id = i.index_id

WHERE

au.[type] IN (1,2,3)

AND o.is_ms_shipped = 0

)

SELECT

src.[Object],

src.[Type],

src.[Index],

src.Index_Type,

buffer_pages = COUNT_BIG(b.page_id),

buffer_mb = COUNT_BIG(b.page_id) / 128

FROM

src

INNER JOIN

sys.dm_os_buffer_descriptors AS b

ON src.allocation_unit_id = b.allocation_unit_id

WHERE

b.database_id = DB_ID()

GROUP BY

src.[Object],

src.[Type],

src.[Index],

src.Index_Type

ORDER BY

buffer_pages DESC;

I hope this helps in determining the cause of the memory issues and confirms if you either need additional resources or if current data or settings in the instance can be adjusted to suit needs.

-Rex

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

From the Rex instance slide make sure to subtract at least 4 GB RAM from the SQL server instances so the operating system always has something to work with.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi Rex,

Thanks for the reply.

The Server Memory Settings have been adjusted to the following:

Total Server Memory = 16GB

Memory Reservered for SQL = 10GB

Memory Reservered for OS = 6GB

Our infrastructure team have reluctantly agreed to add another 8GB of RAM to the box. However they aren't convinced that the memory pressure issues are related to the amount of RAM but may actually be bad SQL queries?

Once this additional RAM has been installed we plan to allocate 16GB for SQL Server and 8GB for the Operating System (Assuming that this is recommended)

We ran the SQL queries you provided and identified the following databases.

db_name | db_buffer_pages | db_buffer_MB | db_buffer_percent |

Topography | 321068 | 2508 | 57.508 |

GIS | 53519 | 418 | 9.586 |

tempdb | 46754 | 365 | 8.374 |

Cadastral | 37069 | 289 | 6.64 |

WebEditor | 19120 | 149 | 3.425 |

Object | Type | Index | Index_Type | buffer_pages | buffer_mb |

CONTOURSURBAN2016OPENDATA | USER_TABLE | R28_pk | CLUSTERED | 54364 | 424 |

CONTOURURBAN2016 | USER_TABLE | R27_pk | CLUSTERED | 48414 | 378 |

TOPOCOMBINED | USER_TABLE | R25_pk | CLUSTERED | 9831 | 76 |

CONTOURURBAN2013 | USER_TABLE | R23_pk | CLUSTERED | 8093 | 63 |

CONTOURURBAN2010 | USER_TABLE | R22_pk | CLUSTERED | 4961 | 38 |

These contour datasets are very detailed and have high vertex counts.

Note: As mentioned to George above we also have FME Desktop (and an ArcGIS Desktop installation that we dont use) on the same VM Box.

Thanks,

Clinton

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Rex,

I have a similar situation to what George described with his SQL database. I ran the queries that you provided above and determined the database that is using the most memory and the feature classes of that database that are using the most memory, but once I gather this information how can I determine if I need more RAM on the server?

I can restart the Server to reduce SQLs RAM usage, but it becomes pegged to 10GB within 2-3 days.

Any assistance would be greatly appreciated.

Server Specs

Windows Server 2012 R2

SQL 2014 SP1

16 GB of RAM

10 GB of RAM allocted to SQL

)_ - Micr.png)

)_ - Micro.png)

Thanks,

Richard

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

I would expect the RAM to get pegged at 10GB if that is the limit set for SQL Server. Given that the FC using the most memory looks related to 1 foot contours, that are probably very detailed (i.e. lots of vertexes), that does not surprise me. I would say that you may need more memory on the SQL Server machine based on the DB's listed above. If the data is complex and being accessed frequently, then more memory may be needed.

Here are some MS Docs to review, that may help: https://docs.microsoft.com/en-us/sql/database-engine/configure-windows/server-memory-server-configur...

the other option is to have IT increase the memory to 32GB and increase the SQL allocation to 24GB and monitor performance more than memory usage.

Is there any performance issue being experienced by the end users?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Yes. Users are sometimes having issues with editing. Edit cursor will not snap or snaps very slowly, redraws and labelling can sometimes be painfully slow. The 'Prod' SQL Instance (which I am referencing here) has a set max of 10GB. Another 'Dev' Instance on the same server has a max of 2GB and that leaves the OS with 4 GB.

I will ask our Server Admin to add an additional 16GB of RAM to up the machine to 32GB of RAM. I will then set the 'Prod' instance to max at 20-24 GB and see how that works.

Thanks for your help.

Richard