- Home

- :

- All Communities

- :

- Products

- :

- Data Management

- :

- Geodatabase Questions

- :

- Migrate on-prem PostgreSQL to Azure db for Postgre...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Migrate on-prem PostgreSQL to Azure db for PostgreSQL (DBaaS)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Existing on-prem server:

- Ubuntu Server 18.04 LTS

- PostgreSQL 11.12

- Contains ~90 SDE Geodatabases; total size ~500GB

- ST_GEOMETRY is used

All ArcGIS Enterprise components are getting moved to Azure VMs, workstations will be Azure Virtual Desktops and we want to deploy Azure database for PostgreSQL (database-as-a-service).

Since ST_GEOMETRY cannot be enabled on databases in the cloud can anyone provide instructions for converting the existing ST_GEOMETRY spatial types to POSTGIS?

thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

You need to reload the data.

You need to create new PostgreSQL Enterprise Geodatabases on premises then change the default geometry type in the sde.dbtune ##defaults from st_geometry to postgis and reload the data using ArcCatalog or ArcGIS Pro.

Note, if you have traditional versioning or branch versioning on the old st_geometry geodatabases then you need to post/reconcile all child versions with the sde.default version, then delete the versions and execute a geodatabase sde compress (if traditional versioning), then perform the PostgreSQL maintenance to gather new statistics and then proceed to reload the data.

----------------------------------------

sde dbtune configuration keywords

Export Geodatabase Configuration Keyword

modify the dbtune export file and save

from:

##DEFAULTS

GEOMETRY_STORAGE "ST_GEOMETRY"

to:

##DEFAULTS

GEOMETRY_STORAGE "PG_GEOMETRY"

Import Geodatabase Configuration Keyword

----------------------------------------

Create New Geodatabase PostgreSQL

11. Use the Spatial Type drop-down list to choose the spatial type the geodatabase will use: either ST_Geometry or PostGIS.

----------------------------------------

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

to move the PostgreSQL Enterprise Geodatabase with pg_dump & pg_restore.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Here's what I tried:

1) Created a checkpoint of VM (obivously)

2) Installed PostGIS 3.0.1 on the PostgreSQL 11.12 instance (that's currently configured for ST_Geometry storage)

3) Used Create Enterprise Geodatabase tool to create "demogdb", specifying spatial type as POSTGIS

4) Created appropriate users in demogdb

5) Created appropriate feature datasets in demogdb

6) Feature class to feature class tool in Pro to move data from ST_Geometry gdb to new demogdb.

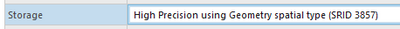

7) demogdb feature classes show:

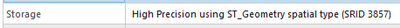

ST_geometry (original) feature classes show this:

The above workflow seemed to work fine. What's the purpose of exporting/importing the configuration keywords?

I did export both the orginal and demogdb configuration keyworks and they each correctly show "ST_GEOMETRY" and "PG_GEOMETRY", respectively.

thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Question: What's the purpose of exporting/importing the configuration keywords?

Answer: to make sure ##DEFAULTS GEOMETRY_STORAGE is set to "PG_GEOMETRY"

You can export the dbtune or

query the sde.sde_dbtune table directly "select * from sde.sde_dbtune order by 1,2;"

you only need to import the dbtune if you make a change to the dbtune file that you exported.

Example:

from:

##DEFAULTS

GEOMETRY_STORAGE "ST_GEOMETRY"

to:

##DEFAULTS

GEOMETRY_STORAGE "PG_GEOMETRY"

I hope this clarifies your question.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

When you state that you have "~90 SDE Geodatabases", does that mean you have ~90 PostgreSQL databases? That would be quite a few.

While the migration procedure is basic enough, migrating a half-terabyte to the cloud is not a trivial undertaking. Have you benchmarked performance, and/or plan to move the compute resources (Enterprise components) to the same cloud?

Keep in mind that database latency is increased when using on-premise applications with remote/cloud databases -- I had a PG RDS migration delayed by more than a year until network infrastructure could support adequate bandwidth to the RDS mission, after initial benchmarking showed a 90-minute publishing export process took 4-6 days from the RDS host.

You might want to look at migrating the SDE.ST_GEOMETRY columns to PostGIS Geometry in the on-premise instance, so that the migration will be a simple pg_dump/pg_restore. But note that pg_dump corrupts PG 11 enterprise geodatabase datasets, because of the deprecation of WITH OIDS=TRUE (used in iN tables). From that regard, you might be better off standing up PG12 or PG13 in the target instance (after making sure your other Esri tools are compatible with 12/13)

To summarize:

- 90 databases/500Gb is a huge migration

- Beware of increased latency in an off-premise database (unless you also move the data operation servers into the same/nearby servers)

- PG 11 is now the eldest supported database. PG 11-PG 11 migration via database tools is risky, and in situ enterprise geodatabase upgrade from PG 11 to PG 12/13 is not supported. Consider migrating to PostGIS geometry before migration, then going directly to the most recent release your Esri stack can support using database tools.

Good luck.

- V

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Thanks everyone, this should be enough to get me started with the test environment!

Yes, we have ~90 PostgreSQL databases, but I would guess only 1/2 of them will make the migration. A lot of db cleanup needs to happen for projects (db's) that are no longer active.

Sounds like the best path is to convert on-prem SDE.ST_GEOMETRY columns to PostGIS Geometry, then pg_dump. Transfer that pg_dump (.post file) to Azure Storage Account, then use pg_restore to the Azure instance.

Our IT consultant is following this doc: Deploy Esri ArcGIS Pro in Azure Virtual Desktop, except Azure db for PG, not SQL.

Our entire base Enterprise deployment is being moved to Azure VMs that are in the same region (Azure GOV) as the Azure db for PG instance. Our analysts' workstations are also being moved to Azure Virtual Desktops, and our file share (Pro projects, FGDBs, ect.) is being moved to Azure NetApp files. So, everything should be co-located in the same Azure region for minimal latency. I didn't even want to attempt on-prem workstations accessing cloud databases.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Can someone elaborate on the process of updating the SDE connections after migration?

Our old on-premise DNS name will be different in Azure and there are issues with setting up a split-horizon DNS policy that resolves Azure requests to the new hosted postgres instance.

After migration, do we just update the datastore connections, or does it require re-publishing each service?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

@danbecker - if any of the geodatabase connection file properties e.g. database server name, port number, user name, password, changes then the ArcGIS Server Services will stop working and you will need to create new geodatabase connection files, resource the map documents and then republish the services.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

After migration, (ArcGIS Enterprise site & SDE gdbs from on-prem to Azure db for Postgres), what if we had the oldhostname.ourdomain.com FQDN resolve to the IP of the Azure db for Postgres, where the dbo and password are identical.

Would this split-horizon DNS setup require re-publishing every service?