- Home

- :

- All Communities

- :

- Products

- :

- Data Management

- :

- Data Management Questions

- :

- Re: Append (Data Management GP tool) - Why the ext...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Append (Data Management GP tool) - Why the extreme speed difference between SQL Server and Local File geodatabase

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Community!

I need help understanding how the Append tool (data management) works, and why the performance is vastly different if I use a target feature class on SQL server versus a target feature class in a local file geodatabase.

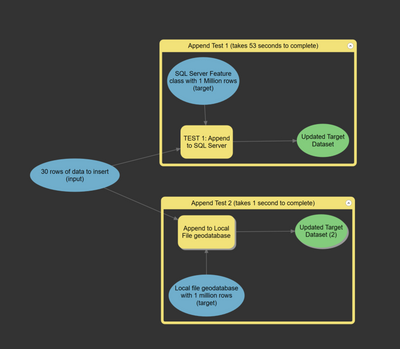

I have a geoprocessing task that I developed in Model Builder. As the last step of my task, I append a small number of features (less than 30 usually) into a feature class containing a very large number of rows (1 million or so), but it is taking a very long time. I simply need to save the rows and exit the task. In other words, I don't need to access the updated feature class after the append is complete.

To troubleshoot, I created a test geoprocessing tool that does two tests:

Test 1 - Append 30 rows into a SQL Server feature class containing 1 million rows. This takes 53 seconds to complete the task, however I noticed that if I select from the table, I can see the rows are immediately inserted into the database. If the data has been inserted, what is the append operation doing the rest of the time?

Test 2 - Append 30 rows into a File Geodatabase feature class containing 1 million rows. This only takes 1 second to complete.

What am I doing wrong? Are there things that I can check on SQL Server to troubleshoot the issue?

Thank you!

David

A screenshot of my test model is shown below.

Solved! Go to Solution.

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks to George and his SDE intercept tip, SQL Profiler, and the help of a couple of my colleagues, we finally found the problem!

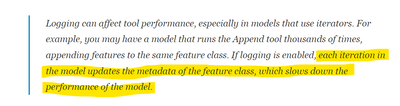

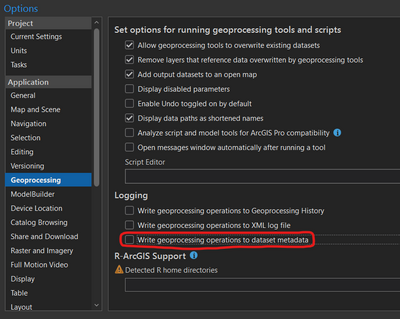

I realize now that I should have mentioned that we run our tool very frequently. And I also wasn't aware of this hidden danger:

Inside of the GDB_Items table managed by SDE, there is a Documentation column containing an XML document that contains the history of geoprocessing operations performed on the feature class in question. Every time our tool ran and appended rows, it added a block of logging XML to that feature class's history. The XML document for that feature class had grown to over 45MB, making further logging updates painfully slow!

Here's an article that talks more about this: Geoprocessing history logging in ArcGIS: performance review – Alex Tereshenkov (wordpress.com)

So the solution in our case is to turn this logging history off for our geoprocessing task, to ensure that we aren't writing geoprocessing operations to the dataset metadata.

arcpy.SetLogHistory(false)

correction... I think this is the function we need to call: arcpy.SetLogMetadata(False).

or in the ArcPro settings:

Perhaps ArcPro / ModelBuilder could be enhanced in some way to make this behavior more obvious? I didn't see anything about this potential problem when looking through the Environment Variables, on the [Append] Tool settings, or anywhere in the Publish tool settings when I publish my geoprocessing task. Also, it seems like it would be more performant for the metadata / history be stored differently, perhaps in a linked table rather than an XML document, in order to make these logging history updates faster. Just my two cents.

Thank you again for your assistance!

-David

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The problem is that to connect to SQL Server there are many "handshaking" operations that are not necessary for a local FGDB connection. The operation to insert 30 rows is quite simple, but to carry it out, many messages are exchanged between the client and the server that slow down the process.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@ClaudioRuschel - read my white paper.

commnity.esri.com - How Load Large Featureclass SQL Server Geodatabase

| Cloud & Database Administrator | OCP - Oracle Certified Professional |

I work with Enterprise Geodatabases since 1997.

“ I do not fear computers. I fear the lack of them." Isaac Isimov

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You can run a SQL trace in SQL Server and look at all the operations being completed during the append process. This will tell you where the time is spent. You can also do an SDEINTERCEPT (https://support.esri.com/en/technical-article/000014807) on ArcGIS Pro at the same time which can be used to compare where the time is spent: client (ArcGIS Pro) or SQL Server.

I wonder if some of the time is updating indexes, which is not an ArcGIS "task".

If you run the GP tool (not in model builder) does it have different run times?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi George,

Thanks for the tips on the SdeIntercept. I was struggling to get SQL Profiler to return something useful.

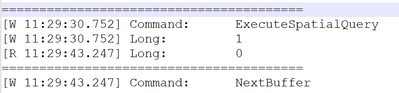

I ran my test again with sde intercept enabled and looked through the logs. I see the process doing the TableInsert and FlushBufferedWrites within the first few seconds to insert the rows. But after that step, I see a lot of other entries in the log, including several ExecuteSpatialQuery calls like this:

Each of these takes on average 10 seconds. I'm not really sure why it is executing these spatial queries, because they aren't part of my model. Can you help me figure this out?

p.s. I do not see any log entries related to dropping or creating the spatial indexes (e.g. TableCreateSpatialIndexWithInfo / TableDropSpatialIndex) because I set the MaintainSpatialIndex environment variable in the Environment properties before running my test.

Thank you

David

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You would need to compare to the SQL trace to the SDE intercept to see what SQL Server is doing at the same time. It could be on the SQL side and the ArcGIS client is "waiting" for something back.

It would be best to work with technical support on this issue. They can help you dive deeper into the issue.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks George, I will reach out to support. I appreciate the info you provided.

-David

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks to George and his SDE intercept tip, SQL Profiler, and the help of a couple of my colleagues, we finally found the problem!

I realize now that I should have mentioned that we run our tool very frequently. And I also wasn't aware of this hidden danger:

Inside of the GDB_Items table managed by SDE, there is a Documentation column containing an XML document that contains the history of geoprocessing operations performed on the feature class in question. Every time our tool ran and appended rows, it added a block of logging XML to that feature class's history. The XML document for that feature class had grown to over 45MB, making further logging updates painfully slow!

Here's an article that talks more about this: Geoprocessing history logging in ArcGIS: performance review – Alex Tereshenkov (wordpress.com)

So the solution in our case is to turn this logging history off for our geoprocessing task, to ensure that we aren't writing geoprocessing operations to the dataset metadata.

arcpy.SetLogHistory(false)

correction... I think this is the function we need to call: arcpy.SetLogMetadata(False).

or in the ArcPro settings:

Perhaps ArcPro / ModelBuilder could be enhanced in some way to make this behavior more obvious? I didn't see anything about this potential problem when looking through the Environment Variables, on the [Append] Tool settings, or anywhere in the Publish tool settings when I publish my geoprocessing task. Also, it seems like it would be more performant for the metadata / history be stored differently, perhaps in a linked table rather than an XML document, in order to make these logging history updates faster. Just my two cents.

Thank you again for your assistance!

-David

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That is a great find, I know about the issue but did not think about it in this case.

That should help speed up the process.