
Attribute Rule Authoring and Configuration Tips and Best Practices


Attribute rules are scripts written in Arcade that intercept edits made by applications and either make further changes or reject the edits. Attribute rules are very useful for enhancing user workflows; however, when authored incorrectly, they can slow down editing.

This blog contains a list of best practices and tips for authoring and configuring performant Arcade scripts and attribute rules. You can also watch the Attribute Rules - A Deep Dive DevSummit presentation, where all of these are covered in detail.

Table of Contents

1) Understand when queries are executed in Arcade

2) Avoid Count when not needed

3) Watch out for Client Side Attribute Rules

4) Skip Evaluation if Possible

5) Combine Multiple Attribute Rules

6) Only Select the Fields You Need

7) Don't pull the geometry when not required

8) Consider Publishing the classes referenced by Attribute Rules

9) Watch out when Referencing Classes in Feature datasets

10) Push filters down to the database

11) Push Aggregate functions to the database

12) Consider Increasing Service MaxCacheAge

13) Use Templates when writing filters

14) Understand Attribute Rules Arcade validation

15) Use Triggering Fields

16) Assign Subtypes when Possible

17) Make sure classes are referenced with literal strings

18) Debug Attribute Rules

19) Identify Slow Attribute Rules with uncli

20) Attribute Rules Log extractor

Let us go through each topic in detail.

1) Understand when queries are executed in Arcade

Arcade uses lazy loading when querying classes. This means that when you use featureset functions like `FeatureSetByName`, `FeatureSetByRelationShipName` or `FeatureSetByRelationShipClass`, nothing is actually sent to the database. This allows users to chain a featureset through multiple filters and intersects to narrow it down further before anything is queried.

The query is only sent when you:

- Use an aggregate function (Count, Sum, etc.)

- Use First

- Iterate through the featureset

Here are some examples:

//nothing gets executed here

var fsSubstation = FeatureSetByName($datastore, "Substation", ["name"], true)

var substationType = 'Transmission'

//nothing gets executed here

var fsTransmission = Filter(fsSubstation, 'type = @substationType')

//a query is sent, chaining the filter type = 'Transmission' to the substation table

var f = First (fsTransmission)

//nothing gets executed here

var fsBuilding = FeatureSetByName($datastore, "Building", ["name"], false)

//a count query is sent to the database to count all buildings

//very expensive if the building table is large

if (Count(fsBuilding) > 0) {

//doesn't send any query

var fsRes = Intersects(fsBuilding, Buffer(Geometry($feature), 100, 'feet'))

//sends a spatial query to return the buildings intersecting

//the $feature geometry buffered by 100 feet

//only the name field is returned (no building geometry)

//returning only the first result

var firstBuild = First(fsRes)

if (firstBuild == null)return;

return firstBuild.name;

}

//nothing gets executed here

var fsBuilding = FeatureSetByName($datastore, "Building")

//execute a query to pull the entire Building table

//all fields and the geometry of the rows are returned

for (var b in fsBuilding)

{

//iterate through each building in the client side

}

2) Avoid Count when not needed

Many customers use Count to check whether a featureset is empty before doing any work. As explained in the previous topic, Count actually sends a query to the database to count the featureset results, which might be expensive and adds unnecessary load to the database. To check whether the featureset is empty, you can simply call First (or iterate through the result) directly and check for null. Keep in mind that you need proper filters in place before you do so.

Here is an example of a script that checks the count before doing any work:

var fsBuilding = FeatureSetByName($datastore, "Building", ["name"], false)

//a count query is sent to the database to count all buildings

//very expensive if building table is large..

if (Count(fsBuilding) > 0) {

var fsRes = Intersects(fsBuilding, Buffer(Geometry($feature), 100, 'feet'))

//sends a spatial query to count the buildings intersecting

//the $feature geometry buffered by 100 feet

//only the name field is used (no building geometry)

//returning the count

if (Count(fsRes) > 0) {

//sends another spatial query to return the buildings intersecting

//the $feature geometry buffered by 100 feet

//only the name field is returned (no building geometry)

//returning the first feature

var firstBuild = First(fsRes)

if (firstBuild == null)return;

return firstBuild.name;

}

}

The above script sends 3 queries to the database, so if we execute it on 10k features, we end up with 30k queries. Neither Count is necessary, and both can be removed. The following script executes only one query:

var fsBuilding = FeatureSetByName($datastore, "Building", ["name"], false)

var fsRes = Intersects(fsBuilding, Buffer(Geometry($feature), 100, 'feet'))

//sends a spatial query to return the buildings intersecting

//the $feature geometry buffered by 100 feet

//only the name field is returned (no building geometry)

//returning the first feature

var firstBuild = First(fsRes)

if (firstBuild == null)return;

return firstBuild.name;
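To make the query counting in these two topics concrete, here is a toy Python model. It is not Arcade's engine. A hypothetical `ToyFeatureSet` has a `filter` method that only records a predicate, which makes it lazy like Filter and Intersects. Its `count`, `first` and iteration methods each log one round trip to the "database". The two functions mirror the count-guarded script and the First-only rewrite, and run against an in-memory list of made-up rows.

```python
class ToyFeatureSet:
    """Toy stand-in for an Arcade featureset: filters are lazy, reads are logged."""

    def __init__(self, rows, predicates=(), log=None):
        self.rows = rows                      # in-memory stand-in for a table
        self.predicates = tuple(predicates)   # pending filters, not executed yet
        self.log = [] if log is None else log # shared record of "queries" sent

    def filter(self, predicate):
        # Lazy, like Filter/Intersects: returns a new featureset, sends nothing
        return ToyFeatureSet(self.rows, self.predicates + (predicate,), self.log)

    def _query(self, kind):
        # Each call represents one round trip to the database
        self.log.append(kind)
        return [r for r in self.rows if all(p(r) for p in self.predicates)]

    def count(self):
        return len(self._query("COUNT"))

    def first(self):
        result = self._query("FIRST")
        return result[0] if result else None

    def __iter__(self):
        return iter(self._query("ITERATE"))


def count_guarded(buildings, is_near):
    """Mirrors the first script: Count, Count again, then First (3 queries)."""
    if buildings.count() > 0:
        nearby = buildings.filter(is_near)
        if nearby.count() > 0:
            first = nearby.first()
            return None if first is None else first["name"]
    return None


def first_only(buildings, is_near):
    """Mirrors the rewritten script: a single First (1 query)."""
    first = buildings.filter(is_near).first()
    return None if first is None else first["name"]
```

With 10k edited features, the count-guarded pattern logs 30k round trips and the First-only pattern logs 10k, which matches the arithmetic in the text.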

3) Watch out for Client Side Attribute Rules

Attribute rules have a property that allows the rule to be executed by applications that support attribute rules before the backend executes it again. When the Exclude from Application Evaluation property is unchecked (the default), clients can execute the rule locally before sending the final payload to the backend, which executes it again. I have written extensively on this property here if you want to learn more; in this section I want to focus on the cost of this property when a poorly authored attribute rule runs on the client.

Take this example, which pulls all buildings and iterates through them to find the residential ones:

var fsBuilding = FeatureSetByName($datastore, "Building")

//execute a query to pull the entire Building table

//all fields and the geometry of the rows are returned

//find b.type == 'Residential'

var globalids =[]

//iterate through each building in the client side

for (var b in fsBuilding)

{

if (b.type == 'Residential')

Push(globalids, b.globalId)

}

return globalids

Not only is this script poorly authored, but if the rule is allowed to execute on the client (say, against a feature service), then, depending on the size of the building layer, this script may result in hundreds of thousands of queries against the feature service. That is because of the feature service maximum record count (default 2000*): to fetch the entire building layer, the client has to page through it, executing query after query until the entire table is retrieved. This puts enormous load on the database and significantly slows down execution. Once the entire set is retrieved from the feature service, Arcade iterates through the result. After this client-side execution completes, the edit is sent to the backend and the same script is executed again.

* Note that MaxRecordCount is different in recent versions of Pro.

Even if you add a filter, iterating over a potentially large result set is not recommended, especially for rules allowed to execute on the client side. Not to mention that, after the client finishes, the backend runs the same script all over again.

Read more about Exclude from Application Evaluation here.
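Here is a rough back-of-the-envelope sketch of the paging cost. It assumes the client must read every row of the layer in pages of at most MaxRecordCount rows (2,000 here, the default mentioned above). It also assumes nothing is cached between evaluations. The function names are illustrative and are not part of any ArcGIS API.

```python
def pages_needed(total_rows: int, max_record_count: int = 2000) -> int:
    """Paged queries needed to read every row; an empty layer still costs one query."""
    # Integer ceiling division avoids floating point rounding on large tables
    return max(1, -(-total_rows // max_record_count))


def client_queries(total_rows: int, edits: int, max_record_count: int = 2000) -> int:
    """Queries sent by clients if every edit re-reads the whole layer (no caching assumed)."""
    return pages_needed(total_rows, max_record_count) * edits
```

For example, a 1,000,000-row building layer needs 500 paged queries per client evaluation. Just 200 edits therefore add up to 100,000 queries, before the backend runs the rule again.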

4) Skip Evaluation if Possible

Every line of Arcade that is executed has a cost, so if we can terminate the script early, we can potentially avoid unnecessary execution and queries to the database. This is especially true if your rule edits other features: if you can avoid editing another feature when you don't have to, you save a chain of rules and queries that may be executed as a result of that edit. Use `return;` for an early exit.

This example uses a transformer bank and its transformers: as we add or update a transformer's kva, we want to update the totalkva field on the bank.

//This rule is set on the Device transformer

//It updates the container transformer assembly with the total kva of all content transformers

//if kva has not been updated and the association status didn't change, exit

//we want to execute the script if child becomes content even if the kva didn't change

if ($originalfeature.kva == $feature.kva && $originalFeature.associationStatus == $feature.associationStatus) return; //early exit

//find the parent feature.

var containerRows = FeatureSetByAssociation($feature, "container")

var transformerGlobalId = $feature.globalId;

var transformerBankAssociationRow = first(containerRows)

if (transformerBankAssociationRow == null) return; //we didn't find any parent, exit

var transformerBankGlobalId = transformerBankAssociationRow.globalId;

//query the parent assembly

var transformerBanks = FeatureSetByName($datastore, "ElectricDistributionAssembly", ["totalkva", "globalId"], false)

var transformerBank = First(Filter(transformerBanks, "globalid = @transformerBankGlobalId"));

//now find all the children to do the sum of true kva

var contentRows = FeatureSetByAssociation(transformerBank, "content")

var globalIds = [];

var i = 0;

for (var v in contentRows) {

globalIds[i++] = v.globalId

}

var deviceClass = FeatureSetByName($datastore, "ElectricDistributionDevice", ["kva"], false)

var devicesRows = Filter(deviceClass, "globalid <> @transformerGlobalId")

devicesRows = Filter(devicesRows, "globalid in @globalIds")

//send a service side sum (instead of looping)

var totalKVA = sum(devicesRows, "kva")

totalKVA += $feature.kva;

//don't update the bank if the kva is the same

//early exit

if (totalKVA == transformerBank.totalkva) return;

//else send the update.

return {

"result": $feature.kva,

"edit": [

{

"className": "ElectricDistributionAssembly",

"updates": [

{

"globalID": transformerBankGlobalId,

"attributes": {"totalkva": totalKVA }

}

]

}

]

}

Notice that we used multiple early exits to avoid unnecessary evaluation and even potential errors. The first early exit is when neither the kva value nor the association status changed. The second is when the feature doesn't have a parent. The third, and most important, is when the computed total kva equals the parent bank's totalkva, in which case we don't even send the edit to the parent.

5) Combine Multiple Attribute Rules

The more attribute rules a class has, the more overhead is incurred: in execution, in Arcade engine script parsing, and in data definition storage, serialization and deserialization. It is recommended to combine attribute rules into fewer rules when possible. Here are a few examples of when you can do this.

Multiple rules on the same class updating multiple fields: this pole class has 3 attribute rules, all with similar logic. Each one queries the compatible unit table based on the compatible unit value specified on the pole, and then retrieves the asset type, height or pole class respectively.

//This rule queries the CU table and retrieves the asset type

if ($originalfeature.compatibleunitcode != $feature.compatibleunitcode) {

var vFeature_cucode = $feature.compatibleunitcode

var polecuvalues = FeatureSetByName($datastore, "PoleCUValues", ["assettypevalue"])

var cuvalue = First(Filter(polecuvalues, "cucode = @vFeature_cucode"))

if (cuvalue == null) return

return cuvalue.assettypevalue

}

return;

//This rule queries the CU table and retrieves the height

if ($originalfeature.compatibleunitcode != $feature.compatibleunitcode) {

var vFeature_cucode = $feature.compatibleunitcode

var polecuvalues = FeatureSetByName($datastore, "PoleCUValues", ["height"])

var cuvalue = First(Filter(polecuvalues, "cucode = @vFeature_cucode"))

if (cuvalue == null) return

return cuvalue.height

}

return;

//This rule queries the CU table and retrieves the pole class

if ($originalfeature.compatibleunitcode != $feature.compatibleunitcode) {

var vFeature_cucode = $feature.compatibleunitcode

var polecuvalues = FeatureSetByName($datastore, "PoleCUValues", ["poleclass"])

var cuvalue = First(Filter(polecuvalues, "cucode = @vFeature_cucode"))

if (cuvalue == null) return

return cuvalue.poleclass

}

return;

Notice that the rules are almost identical but are assigned to different fields, so we end up executing the same query 3 times per edit. The three rules can be joined into one rule by leaving the Field property blank (to indicate multiple fields) and using a special dictionary return to update all 3 fields at once. This executes the query only once and saves on storage and Arcade parsing.

//This rule queries the CU table and retrieves the pole class, assettype and height

if ($originalfeature.compatibleunitcode != $feature.compatibleunitcode) {

var vFeature_cucode = $feature.compatibleunitcode

var polecuvalues = FeatureSetByName($datastore, "PoleCUValues", ["poleclass", "height", "assettypevalue"])

var cuvalue = First(Filter(polecuvalues, "cucode = @vFeature_cucode"))

if (cuvalue == null) return

//update 3 fields at once in the current feature

return {

"result": {"attributes": {

"poleclass": cuvalue.poleclass,

"height": cuvalue.height,

"assettype": cuvalue.assettypevalue

}}

}

}

return;

Another example is when you have different logic for different events (one for insert, one for update and one for delete) and, as a result, 3 different rules. These can be combined into one rule assigned to all relevant events, because `$editContext.editType` tells you whether the edit is an insert, update or delete.

if ($editContext.editType == "INSERT"){

//execute insert specific logic

}

if ($editContext.editType == "UPDATE"){

//execute update specific logic

}

if ($editContext.editType == "DELETE"){

//execute delete specific logic

}

6) Only select the fields you need

When using FeatureSetByName, specify the fields you want returned; otherwise, the query requests all fields by default. This results in large overhead when the table has many fields.

//all fields are requested once this featureset is queried

var fsBuilding = FeatureSetByName($datastore, "Building")

//specify what fields to pull

var fsBuilding = FeatureSetByName($datastore, "Building", ["buildingtype", "objectid"])

7) Don't pull the geometry when it's not required

The geometry field can potentially be large, so when it is not required it is better not to ask for it. The returnGeometry parameter defaults to true, so set it to false when the geometry is not needed. Remember that this parameter only controls whether the final result set includes the geometry, so you can still run spatial queries using Intersects without returning the geometry.

var fsBuilding = FeatureSetByName($datastore, "Building", ["name"], false)

//the intersects is pushed to the database

var fsRes = Intersects(fsBuilding, Buffer(Geometry($feature), 100, 'feet'))

//sends a spatial query to return the buildings intersecting

//the $feature geometry buffered by 100 feet

//only the name field is returned (no building geometry)

//returning the first feature

var firstBuild = First(fsRes)

if (firstBuild == null)return;

return firstBuild.name;

8) Consider publishing classes referenced by Attribute Rules

When an attribute rule on class A references another class B using FeatureSetByName both classes need to be opened by the geodatabase. Editing class A will execute the rule which will open class B in order to query it.

This becomes interesting in a services environment. If you publish only class A (assuming the rule is excluded from application evaluation), then when the service starts, only class A is opened and cached for the DEFAULT workspace. However, when we start editing class A, the rule executes and asks to open class B, which the service is not aware of. Class B therefore has to be opened during the edit, which incurs a small cost.

Once the edit is completed, class B is no longer referenced by anything, so it is closed and its memory is released, while class A remains open because it is used by the SOC process for that feature service.

As the volume of edits increases, the constant opening and closing of class B generates a lot of overhead and causes performance issues.

Publishing class B and class A together improves caching, performance and editing throughput.

The exception, where this cost must be accepted, is when the referenced classes cannot be published to the service for security reasons.

9) Watch out when referencing classes in feature datasets

Let us build on the example from the previous point. Assume class A lives at the root of the geodatabase (not in a feature dataset), but class B is part of a feature dataset that has 100 other classes. By design, when a class that is part of a feature dataset is opened, all classes within that feature dataset are opened as well. This means that when we edit class A and the rule triggers a read on class B, class B is opened and, as a result, all classes within its feature dataset are opened with it, incurring a large cost.

This goes back to data modeling: when possible, move class B to the root or to a feature dataset with fewer classes. Alternatively, publish the feature dataset and all its classes so they are opened and cached once. Another solution is to create a view that reads from class B and to reference the view directly from the rule on class A.

10) Push filters down to the database

The database is very powerful, and when possible we want to push as many filters and expressions as we can down to it so the client does as little work as possible. This also allows the database query planner to use the proper indexes to filter the final result set.

Here is an example of an unoptimized attribute rule that sums the cost of all residential buildings:

var fsBuilding = FeatureSetByName($datastore, "Building", ['type', 'cost'], true)

//execute a query to pull all buildings (type, cost and geometry)

//filter

//find b.type == 'Residential'

//iterate through each building in the client side

var sumCost = 0.00

for (var b in fsBuilding)

{

if (b.type == 'Residential')

sumCost+= b.cost;

}

return sumCost

This script executes an unbounded query that pulls all buildings to the client. It then filters each feature by type on the client side, which is very expensive, and finally sums the cost.

This can be rewritten to push the residential filter to the database, reducing the number of returned features (we can still make it better; I'll discuss that in the next topic):

var fsBuilding = FeatureSetByName($datastore, "Building", ['type', 'cost'], true)

var type = 'Residential'

var fsResidential = Filter( fsBuilding, 'type = @type')

//execute a query but only pull the residential buildings

//iterate through each building in the client side

var sumCost = 0.00

for (var b in fsResidential )

sumCost+= b.cost;

return sumCost
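The same idea can be demonstrated outside Arcade with Python's built-in `sqlite3` module. The `building` table and its rows are made up for illustration. The point is how many rows cross from the database to the client when the filter runs in a client-side loop versus in the WHERE clause, where the index on `type` can be used.

```python
import sqlite3


def make_buildings_db():
    """Hypothetical Building table with an index on type, held in memory."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE building (type TEXT, cost REAL)")
    con.execute("CREATE INDEX idx_building_type ON building (type)")
    con.executemany(
        "INSERT INTO building (type, cost) VALUES (?, ?)",
        [("Residential", 100.0), ("Commercial", 250.0),
         ("Residential", 50.0), ("Industrial", 400.0)],
    )
    return con


def sum_filtering_on_client(con):
    """Unbounded query: every row crosses to the client, which filters in a loop."""
    rows = con.execute("SELECT type, cost FROM building").fetchall()
    total = 0.0
    for row_type, cost in rows:
        if row_type == "Residential":
            total += cost
    return total, len(rows)  # (sum, rows transferred)


def sum_filtering_in_database(con, building_type="Residential"):
    """Filter pushed down: only matching rows are returned to the client."""
    rows = con.execute(
        "SELECT cost FROM building WHERE type = ?", (building_type,)
    ).fetchall()
    return sum(cost for (cost,) in rows), len(rows)  # (sum, rows transferred)
```

Both functions compute the same total, but the second transfers only the matching rows.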

11) Push Aggregate functions to the database

In the previous topic, we pushed the filter to the database, resulting in fewer rows coming back to the client; however, we are still potentially working with a large number of rows. In this particular case, because we are doing a sum, we can use the aggregate functions of Arcade to push the entire sum down to the database.

var fsBuilding = FeatureSetByName($datastore, "Building", ['type', 'cost'], true)

var type = 'Residential'

var fsResidential = Filter( fsBuilding, 'type = @type')

//only the residential buildings are included

//push the sum of the cost field to the database

var sumCost = Sum(fsResidential, 'cost')

return sumCost
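This continues the `sqlite3` illustration with a hypothetical `building` table. When the aggregate is pushed down, the database returns a single row holding the total instead of every matching row. `COALESCE` is used so that an empty match returns 0 instead of SQL NULL.

```python
import sqlite3


def make_buildings_db():
    """Hypothetical Building table held in memory."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE building (type TEXT, cost REAL)")
    con.executemany(
        "INSERT INTO building (type, cost) VALUES (?, ?)",
        [("Residential", 100.0), ("Commercial", 250.0),
         ("Residential", 50.0), ("Industrial", 400.0)],
    )
    return con


def sum_in_database(con, building_type="Residential"):
    """Aggregate pushed down: the database returns one row holding the total."""
    row = con.execute(
        "SELECT COALESCE(SUM(cost), 0) FROM building WHERE type = ?",
        (building_type,),
    ).fetchone()
    return row[0]
```

Only one value crosses to the client no matter how many buildings match. As the next paragraph notes, the database still has to scan and add up those rows.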

While this is the best outcome, it doesn't mean the query is cheap; aggregate functions have a cost too. Executing a sum on every edit can still be expensive, so avoid it unless it is absolutely necessary. Refer to the previous topics on skipping execution when it is not required.

12) Consider Increasing Service MaxCacheAge

The map service creates a per-SOC cache of the data sources opened for each edited version. For example, if an edit is received on version A, the workspace is opened and cached for version A on the SOC that services the edit. The next edit on version A will use this cache if it happens to hit the same SOC.

The MaxCacheAge property controls how long the data source cache remains on the SOC before it is discarded. Increasing that value (default 5 minutes) raises the chances of cache hits and improves overall performance, but at the cost of higher memory usage for ArcSOC.

You can add maxCacheAge using the admin API, directly under the properties of the MapServer for your service. The property is not present by default.

When debug logging is enabled, you will see a broadcast entry showing how many classes and tables were opened. It tells us how long the broadcast took and how many tables, classes and relationships were touched. For example, if a feature dataset has 100 classes and we publish one of those classes as a service, all 100 will be opened when you edit that class.

BeginBroadcastStopEditing;Broadcasting Stop editing accross: 1 Feature Datasets, 24 Tables, 17 Relationships
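If you collect many of these entries, a small parser makes them easier to compare. This Python sketch assumes the message text looks exactly like the sample above, including the log's own spelling "accross". The pattern also accepts "across" and singular nouns in case the wording differs in your version, which is an assumption.

```python
import re

# Matches the broadcast message shown above; "acc?ross" accepts "accross" or "across"
BROADCAST_RE = re.compile(
    r"Broadcasting Stop editing acc?ross: (\d+) Feature Datasets?, "
    r"(\d+) Tables?, (\d+) Relationships?"
)


def parse_broadcast(message):
    """Return the opened object counts from a broadcast message, or None."""
    match = BROADCAST_RE.search(message)
    if match is None:
        return None
    datasets, tables, relationships = (int(g) for g in match.groups())
    return {
        "feature_datasets": datasets,
        "tables": tables,
        "relationships": relationships,
    }
```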

13) Use Templates when writing filters

One of the common things we see in attribute rules is hand-rolled where clause filters, especially when looking up a set of values. Building filters this way is error-prone and can cause failures or wrong filters. Here is an example:

var fsTable = FeatureSetByName($datastore, "table")

var objectIds = [1,2,3,4,5]

var whereClause = "objectId in ("

//each value is wrapped in quotes by hand, even though objectId is an integer field

for (var oid in objectIds){

whereClause += "'" + objectIds[oid] + "',"

}

whereClause = Left(whereClause, Count(whereClause) - 1)

whereClause += ")"

var fsFilter = Filter(fsTable, whereClause);

///rest of code

We can use variables in where clause filters, and Arcade will parse each variable and use the correct SQL syntax, whether it is a date, string or integer. To do this, prefix the variable name with the @ symbol in the where clause. Here is the same example rewritten, much more simply:

var fsTable = FeatureSetByName($datastore, "table")

var objectIds = [1,2,3,4,5]

var whereClause = "objectId in @objectIds";

var fsFilter = Filter(fsTable, whereClause);

That is translated to the following SQL:

OBJECTID IN (1,2,3,4,5)

If the field is a string or a date, Arcade adds single quotes as necessary and formats dates based on the target DBMS.

Note that @ doesn't support expressions, only plain variables. For example, you can't write "objectId in @collection.list"; assign the value to a variable first and reference that variable instead.
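To see why letting the engine format values is safer than concatenating strings, here is a Python analog using `sqlite3` parameter binding. Binding plays a role similar to Arcade's @variable substitution, although the two mechanisms are not identical. The `owner` table and its names are hypothetical. The hand-rolled version produces broken SQL as soon as a value contains a single quote, while the bound version handles it.

```python
import sqlite3


def hand_rolled_where(values):
    """Mimics the string-building loop: wraps every value in quotes by hand."""
    return "name IN (" + ",".join("'" + str(v) + "'" for v in values) + ")"


def bound_query(con, values):
    """Lets the driver format each value, similar in spirit to Arcade's @variable."""
    placeholders = ",".join("?" for _ in values)
    sql = f"SELECT name FROM owner WHERE name IN ({placeholders}) ORDER BY name"
    return [row[0] for row in con.execute(sql, list(values))]
```

For `["O'Brien"]`, `hand_rolled_where` yields `name IN ('O'Brien')`, which has an unbalanced quote, and executing it raises `sqlite3.OperationalError`. `bound_query` returns the matching row unchanged.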

14) Understand Attribute Rules validation

The Attribute Rule expression builder allows users to author Arcade expressions and validate them against the attribute rules profile. However, attribute rules work with Arcade globals that are tied to the editing context. For example, when you edit a feature, the $feature global is populated with the current state of the row being edited. Similarly, $originalFeature holds the state of the row before the edit, which applies to update events.

These globals are not available when the expression is validated, so the builder fetches the first row in the table and uses it as $feature. That $feature is then used to validate the script.

This results in interesting scenarios where the rule passes validation but fails at runtime. That is why Arcade script authors should always write guard logic that protects against all cases. Let us take an example:

//This script validates fine in the expression builder

//but fails when a new feature is created

var label = $feature.labeltext;

//split the string based on a space

var lblArray = Split(label, " ")

//take the second token

var secondPart = lblArray [1]

return secondPart;

Arcade is a programming language, and scripts need to be written to handle errors. In this particular case, the first feature in the table, which the expression builder uses for verification, happened to have a space in $feature.labeltext, so the script passed validation. However, new features created later may have a label without a space, in which case Split returns 1 element instead of 2. This is why the rule sometimes works and sometimes fails with an index out of bounds error.

Here is the updated rule, which exits early if the label is empty or has no space:

//This version guards against labels without a space

//instead of failing with an index out of bounds error

var label = $feature.labeltext;

//if the label is empty or has no space, exit

if (IsEmpty(label) || Find(" ", label) < 0) return;

//split the string based on a space

var lblArray = Split(label, " ")

//take the second token

var secondPart = lblArray [1]

return secondPart;
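The failure mode can be reproduced with a toy Python model of the expression builder. The model assumes, as described above, that validation evaluates the script against the first row only. `validate_like_builder` and `first_failure` are hypothetical helpers, and the label values are made up.

```python
def unguarded(label):
    """Same logic as the first script: take the second space-separated token."""
    return label.split(" ")[1]


def guarded(label):
    """Same logic as the fixed script: exit (return None) when there is no space."""
    if not label or " " not in label:
        return None
    return label.split(" ")[1]


def validate_like_builder(expr, rows):
    """Toy stand-in for the expression builder: evaluates the first row only."""
    expr(rows[0])
    return True


def first_failure(expr, rows):
    """Return the index of the first row the expression crashes on, or None."""
    for i, row in enumerate(rows):
        try:
            expr(row)
        except IndexError:
            return i
    return None
```

With labels `["Alpha Tx-303", "Beta"]`, the unguarded version "validates" on the first row but crashes on the second. The guarded version returns `None` for the second row instead.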

15) Use Triggering Fields

Immediate calculation attribute rules assigned to an update event are executed regardless of which field is being updated. While users can add a condition in Arcade to exit the rule early if the field they care about hasn't changed, this still incurs the cost of Arcade engine parsing and execution.

The triggering fields list is a new property on the attribute rule that specifies which fields should trigger the rule, avoiding the overhead of rule execution when it is not required. Here is an example of the performance benefit when updating 20,000 features with and without triggering fields.

You can read more about the performance benefits of using triggering fields here.
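The following conceptual Python sketch shows what triggering fields buy you: the decision to run a rule is made before any Arcade is parsed. It makes two simplifying assumptions that are not documented behavior. First, a field counts as "updated" when its value differs between the original and updated row. Second, an empty list means the rule runs on every update, as described above for rules without triggering fields.

```python
def changed_fields(original: dict, updated: dict) -> set:
    """Fields whose value differs between the original and updated row (assumption)."""
    return {name for name, value in updated.items() if original.get(name) != value}


def should_evaluate(original: dict, updated: dict, triggering_fields: list) -> bool:
    """Decide, before any Arcade is parsed, whether the rule needs to run."""
    if not triggering_fields:
        return True  # no triggering fields: the rule runs on every update
    return bool(changed_fields(original, updated) & set(triggering_fields))
```

For a rule triggered by `kva`, renaming a transformer skips the rule entirely, while changing its kva runs it.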

16) Assign Subtypes when possible

Assigning a subtype to an attribute rule is useful to prevent unnecessary rule execution. Prior to 3.5 you could only assign one subtype to a rule; starting with 3.5 you can assign multiple subtypes. The rule then executes only when a feature whose subtype is in the rule's subtype list is edited. Read more about the Multi-Subtypes feature here.

17) Make sure classes are referenced with literal strings

When writing attribute rules, we often use the FeatureSetByName function to reference other tables or classes. However, you might have encountered one of the following errors when using Arcade scripts in attribute rules that use this function.

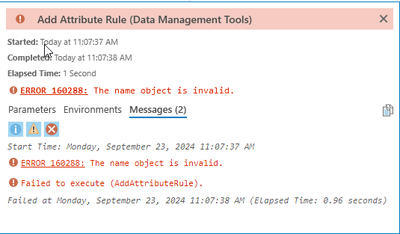

ERROR 160288: The name object is invalid. (3.3.3, 3.2.4, 3.17, 2.9.13)

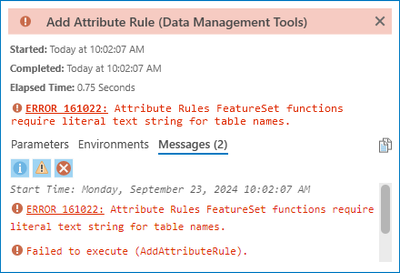

ERROR 161022: Attribute Rules FeatureSet functions require a literal text string for table names. (3.4+)

This means you wrote an Arcade expression using FeatureSetByName and passed a variable to the class name parameter. Here is an example:

var class_name = "test";

var feature_set = FeatureSetByName($datastore, class_name);

This pattern is not supported, and the error message is intentional to protect against downstream errors, such as issues with publishing, copy and paste, exporting XML workspaces, and various other data transfer operations.

To fix this, find all instances of FeatureSetByName and ensure you are passing a literal string, as shown below:

var feature_set = FeatureSetByName($datastore, "test");

Why does FeatureSetByName require a literal string?

In ArcGIS Pro 2.3, we added the ability in attribute rules to reference other tables and classes. This created a dependency tree: if class A has an attribute rule that references class B, copying class A should also bring class B with it; otherwise, the editing behavior will be broken in the new workspace.

To support this, in Arcade, we statically analyze the script to determine this dependency and rely on literal strings to find the referenced classes. When using a variable in FeatureSetByName instead of a literal string, we would have to execute all Arcade expressions to find the value of the table, which would slow down the process of saving the rule. Moreover, execution alone is not enough to discover the table name — for example, having FeatureSetByName nested within a condition that might not be reachable during execution would still pose a problem.

Therefore, always use a literal string when working with FeatureSetByName. Note that this also applies to FeatureSetByRelationShipClass.
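Here is a toy version of that static check, written as a hypothetical Python linter. A regular expression finds FeatureSetByName and FeatureSetByRelationshipClass calls and flags any call whose class-name argument is not a quoted literal. The sketch assumes that argument is the second one for both functions. A real implementation would use a proper Arcade parser, because regex matching is only an approximation; for example, it does not skip commented-out code.

```python
import re

# Captures the second argument (the class name) of the two featureset functions
CALL_RE = re.compile(
    r"FeatureSetBy(Name|RelationshipClass)\s*\(\s*[^,]+,\s*([^,)]+)",
    re.IGNORECASE,  # Arcade function names are not case sensitive
)


def is_string_literal(arg: str) -> bool:
    """True when the argument is wrapped in matching single or double quotes."""
    return len(arg) >= 2 and arg[0] == arg[-1] and arg[0] in "\"'"


def non_literal_references(script: str) -> list:
    """Return the class-name arguments that are not literal strings."""
    problems = []
    for match in CALL_RE.finditer(script):
        arg = match.group(2).strip()
        if not is_string_literal(arg):
            problems.append(arg)
    return problems
```

Run on the `class_name` example above, the linter flags `class_name` and accepts `"test"`.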

18) Debug Attribute Rules

Attribute rule executions are logged in detail in both the server log and ArcGIS Monitor when debug mode is enabled. This allows users to capture their attribute rule executions and investigate how long each one takes.

Examples of different rule types:

Below are examples of the logged output for the different rule types to help you search the logs.

- Immediate calculation rule:

Attribute rule executed: {"Class name":"ElectricDistributionDevice","GlobalID":"{8B2F2A70-A94A-4028-8787-CEEA4E853BE0}","Rule name":"Assign Transformer FacilityID","Rule type":"Calculation","Expression Result":"Alpha - Tx-303","Elapsed Time":0.0063134000000000003}

- Constraint rule:

Attribute rule executed: {"Class name":"StructureBoundary","GlobalID":"{7FB51958-A7C6-4F9A-BAC6-629CB21DA123}","Rule name":"Substation name cannot be null or empty","Rule type":"Constraint","Expression Result":"0","Elapsed Time":5.3499999999999999e-05}

- Batch calculation rule:

Attribute rule executed: {"Class name":"StructureBoundary","GlobalID":"{E1D79791-CCDE-40CF-B8EA-DA085EFDC3AC}","Rule name":"Calculate TransformerCount on substation","Rule type":"Calculation","Expression Result":"0","Elapsed Time":0.0056515999999999997}

- Validation rule:

Attribute rule executed: {"Class name":"Inspections","GlobalID":"{BBCC635A-3F6A-4D8D-BFF8-0B6427848856}","Rule name":"Inspection records must have comments","Rule type":"Validation","Expression Result":"0","Elapsed Time":0.017661199999999998}

Refer to this knowledge base article to learn how to set up debug mode and capture the attribute rules log. In the next topics, we present some tools that extract these logs and present them in a friendlier way.

Note that you can also use the Console function in your scripts; console messages are also printed in the server and Pro debug logs.
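If you export these entries as plain text, a few lines of Python are enough to rank rules by elapsed time. This sketch assumes each message contains the "Attribute rule executed: " prefix followed by a single JSON object, as in the samples above. It does not cover how you pull the messages out of the server logs (through the admin API or the log files).

```python
import json

PREFIX = "Attribute rule executed: "


def parse_rule_log(lines):
    """Extract the JSON payload from each 'Attribute rule executed' message."""
    entries = []
    for line in lines:
        start = line.find(PREFIX)
        if start < 0:
            continue  # not an attribute rule entry
        entries.append(json.loads(line[start + len(PREFIX):]))
    return entries


def slowest_rules(entries, n=3):
    """Return the n entries with the largest 'Elapsed Time', slowest first."""
    return sorted(entries, key=lambda e: e["Elapsed Time"], reverse=True)[:n]


def total_time_by_rule(entries):
    """Sum 'Elapsed Time' per rule name, useful when one rule fires many times."""
    totals = {}
    for e in entries:
        totals[e["Rule name"]] = totals.get(e["Rule name"], 0.0) + e["Elapsed Time"]
    return totals
```

Applied to the four sample entries above, the validation rule "Inspection records must have comments" comes out as the slowest.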

19) Identify Slow Attribute Rules with uncli

This server log parser is a web tool that uses the admin API to query and parse recent logs. It can show Utility Network trace, validate and update subnetworks operations. It also shows a summary of attribute rules, how long each took, and the SQL queries executed as a result.

You can install the uncli server log web parser here. Make sure to install it on the same machine as your Web Adaptor. Once you install and configure it, it will look something like this.

Let us say we have a slow edit that we want to debug. First we enable server log debug mode in order to capture attribute rules.

Then we make the edit in Pro or the Web Editor.

Next, we go to the web parser, log in using the admin credentials, and select the service we want to extract the log from and how far back we want to query (10 minutes is the default). Then we click Attribute Rules log. This queries the admin API for all attribute rules that have been executed in the past 10 minutes and lists them in a table.

We see that the slowest attribute rule is CreateEO (edge object) and that took almost 3 seconds. So we go and investigate why the rule is slow based on the topics we learned in this article.

We can use the applyEdits log to see the raw edit and how long it took.

The request ID is the unique identifier for the request, so we can use it to filter all queries executed by that request. Fill in the request ID from the applyEdits entry in the requestId field and click SQL logs.

In this particular case, there is no one particular query but a lot of them that add up.

20) Attribute Rules Log extractor

The uncli log extractor is great for edits that happened recently, but it requires configuration and admin API access, and it is not designed to work with large amounts of logs (i.e., days or weeks). For that, the solutions team has a dedicated log extractor tool that takes the raw XML log files and turns them into an indexable mobile geodatabase.

Download the Utility Data Management Support toolbox from here.

Add the toolbox to Pro and run the Extract Logs from Files tool.

Drag the ArcGIS Server logs and ArcGIS Services logs to the diagnostic files. The logs can be found under the arcgis logs directory.

The output is a mobile geodatabase; let us add it to Pro. The main.logs table has all the events, and the rest are views that summarize the log.

Let us open the attribute rules view and sort by elapsedTime to find the slowest rule.

The geodatabase queries view shows all the SQL queries executed. Just like we did in the web parser, we can use the requestid to filter the SQL for a specific request.
