Lessons Learned — Attribute Rules


My organization plans to switch from ArcMap to Pro later this year. As you can imagine, we've got a long list of functionality we want to implement with attribute rules once we move to Pro.

Other than doing the usual "best practice" stuff like...

- Documentation

- Clean code

- Clean names and descriptions

- Make sure the requirements are actually needed & well understood

Do you have any techniques you can share for keeping your attribute rules manageable (i.e., for avoiding an unmaintainable monster)?

Things like:

- Combine like-rules into larger rules to reduce the number of rules. Or conversely, separate them into more manageable smaller parts. Which is a better approach?

- Use contingent values as an alternative to attribute rules? Or is it better to use attribute rules for everything, so that all logic is stored in the same place (to avoid confusion and conflicts)?

- Are alternative mechanisms like database triggers or scheduled jobs better in some cases?

What lessons have you learned?

---

Accepted Solution

Since I switched to Pro 3 years or so ago, I have used Attribute Rules heavily in my database.

Things I do include:

- Automatic primary key
- Maintain topological relationships
  - Stream segments and their junctions
    - Create a junction: snap to the closest segment, split that segment at the junction
    - Create a segment: look for junctions close to the end points (snap to the junctions or create new ones)
    - Move a junction: move the segment vertices, and vice versa
  - Streams and buildings (bridges, culverts, weirs, etc.)
    - Create/edit a building: snap to the nearest segment
    - Move a segment: re-snap the buildings
- Get attributes from related tables (relationships via location and/or attributes)
- Edit other tables
- Constraint rules
- Validation rules
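The junction behavior in the list above ("snap to the closest segment, split that segment at the junction") comes down to projecting a point onto each segment of a line. Johannes's rules do this in Arcade; the following is only a minimal Python sketch of the underlying computation, assuming plain (x, y) tuples in a projected, planar coordinate system. The function names are hypothetical and no ArcGIS geometry API is used.

```python
import math


def nearest_point_on_segment(p, a, b):
    """Project point p onto segment a-b, clamped to the segment's end points."""
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        return (ax, ay)  # degenerate segment: both end points coincide
    # t is the relative position (0 = a, 1 = b) of the perpendicular foot
    t = ((p[0] - ax) * dx + (p[1] - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return (ax + t * dx, ay + t * dy)


def snap_to_polyline(p, vertices):
    """Return (closest point on the polyline, index of the segment it lies on).

    Segment i runs from vertices[i] to vertices[i + 1].
    """
    best, best_index, best_dist = None, -1, math.inf
    for i in range(len(vertices) - 1):
        candidate = nearest_point_on_segment(p, vertices[i], vertices[i + 1])
        dist = math.dist(p, candidate)
        if dist < best_dist:
            best, best_index, best_dist = candidate, i, dist
    return best, best_index


def split_polyline(vertices, snapped, index):
    """Split the polyline at `snapped`, which lies on segment `index`."""
    first = list(vertices[: index + 1]) + [snapped]
    second = [snapped] + list(vertices[index + 1 :])
    return first, second
```

If the snapped point coincides with an existing vertex, `split_polyline` repeats that vertex; a real rule would probably want to handle that case separately.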

Some answers to your questions (of course, this is only from my experience; your mileage may vary):

- Many small rules vs. a few huge rules: I tend toward huge rules.
  - Cons: they are unwieldy to work with, especially in the default Arcade editor.
  - Pros:
    - You don't have to repeat the same steps (e.g., loading the same FeatureSet) in multiple rules, which helps when writing the rules, when changing the rules or the database schema, and with execution time.
    - If multiple rules trigger on the same table, you have no control over their execution order. That can give unwanted results if one rule depends on a field that is calculated by another rule. If you calculate both fields in the same rule, you have full control.
    - If you have to debug, you only have one rule to check instead of two, three, or more. Of course, working through one huge rule can also be more cumbersome than working through several small ones...
- Other geodatabase features: I haven't worked with contingent values. For default values, I found it just as easy to check a field for null and return the default value along with the other calculated values.
- Alternative mechanisms:
  - Bulk inserts/edits with attribute rules that load other tables are really slow, because the other tables get loaded again for each feature. If you have to do that often, a Python script is better: read the other table once, store it in a list/dict, and work with that.
  - The same goes for field calculations with related tables.
  - The same goes for validation rules. I wanted to validate my topological relationships (I know there's the topology dataset, but I also have attributes based on topology). That took hours for my relatively small database, while a simple Python tool does the same in under 10 minutes.
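The "read the other table once, store it in a dict" approach can look like the following minimal sketch. It assumes rows are plain Python dicts and reuses the AssetID/Value1 names from the examples in this thread as hypothetical field names; in an ArcGIS Pro script you would typically fill the lookup with `arcpy.da.SearchCursor` and write the results with `arcpy.da.UpdateCursor`.

```python
def build_lookup(rows, key_field):
    """Index a related table by its key field in a single pass."""
    return {row[key_field]: row for row in rows}


def pull_related_values(features, lookup, key_field, fields):
    """Copy `fields` from each feature's related row, using the cached lookup.

    Features without a related row are left unchanged.
    Returns the number of features that were updated.
    """
    updated = 0
    for feature in features:
        related = lookup.get(feature[key_field])  # one dict lookup, no query
        if related is None:
            continue
        for field in fields:
            feature[field] = related[field]
        updated += 1
    return updated
```

The related table is scanned once, and each feature then costs a dictionary lookup instead of a separate query, which is the difference described above for bulk edits.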

Other things I did to make my rules manageable:

- You may want to use an external editor (I wanted to, but couldn't because of IT restrictions).
- Have your rules saved somewhere (this may already be solved if you use an external editor). That is good for version control and for quickly looking at a rule without opening Pro, navigating to the table, opening the Attribute Rules view, and opening and resizing the editor. Personally, I developed each rule in the default editor until it worked, then ran a tool that exported all attribute rules to text files.
- Personally, I find the separation into Calculation/Constraint/Validation rules somewhat cumbersome, because I kept forgetting that some tables had constraint rules. So I converted my constraint rules to calculation rules (or incorporated them into existing ones). To reject an edit, you can return a dict with the key errorMessage (see here). Mostly, though, I just used a default value when an error occurred, to let the edit through.
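For keeping rules in version control, one option (not necessarily the tool Johannes used) is the Export Attribute Rules geoprocessing tool (`arcpy.management.ExportAttributeRules`), which writes a table's rules to a CSV file. The sketch below splits such a CSV into one text file per rule. The column names `NAME` and `SCRIPTEXPRESSION` are assumptions; check the header row of your own export and adjust the parameters.

```python
import csv
import re
from pathlib import Path


def split_rules_to_files(csv_path, out_dir, name_col="NAME", expr_col="SCRIPTEXPRESSION"):
    """Write each rule's Arcade expression to its own .arcade text file.

    name_col / expr_col are assumed column names (adjust to your export).
    If two rules end up with the same cleaned name, the later one
    overwrites the earlier file. Returns the list of paths written.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    with open(csv_path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            # Replace characters that are awkward in file names with "_".
            safe_name = re.sub(r"[^\w.-]+", "_", row[name_col]).strip("_") or "unnamed_rule"
            path = out / f"{safe_name}.arcade"
            path.write_text(row[expr_col], encoding="utf-8")
            written.append(path)
    return written
```

Committing the resulting folder after every change gives a diffable history of each rule.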

Have a great day!

Johannes

---

Here are the kinds of attribute rules we have in mind:

- Things we wish were built into ArcGIS, but aren't; we will implement them using attribute rules instead:
  - Hard-enforce subtypes and domains (multiple domains per table)
  - Prevent true curves
  - Prevent multi-part features
  - Populate M-values as the cumulative length of the line
- Other customizations:
  - Auto-generate IDs
  - Get attributes from related records. The relationship can be tabular or spatial.
  - Create, update, or delete records in related tables.
- Tabular constraints:
  - If field A = x, then field B should be y.
  - Check if there's a record in a related table.
  - The number in field A should be >= the number in field B.
- Topological constraints:
  - If field A = x, then the line's midpoint should be within a polygon in a related FC. All other features should fall outside the polygons in the related FC.
- Maintain additional geometry columns in separate tables.
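For the M-value item, the calculation itself is a running sum of segment lengths. A minimal Python sketch, assuming a single-part line without true curves (matching the other two requirements in the list) given as planar (x, y) tuples; in an attribute rule, the same loop would run over the line's vertices in Arcade.

```python
import math


def cumulative_m(vertices):
    """Return (x, y, m) tuples where m is the distance along the line from its start."""
    result = []
    m = 0.0
    previous = None
    for x, y in vertices:
        if previous is not None:
            m += math.dist(previous, (x, y))  # length of the segment just walked
        result.append((x, y, m))
        previous = (x, y)
    return result
```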

---

Thanks Johannes! Very helpful.

---

@JohannesLindner, would you be willing to share an example of getting data from related tables? If I have a feature class with a related inspection table that users fill out multiple times for one parent feature, is it possible to write a value from one of the attributes in the related table into a column of the original feature when new inspections are completed?

- Zach

---

That is absolutely possible.

There are two ways to exchange values between (spatially) related tables:

- Pull: When you add or edit a feature, the rule pulls values from features in related tables and stores them in this feature

- Push: When you add or edit a feature, the rule pushes values from this feature to features in related tables

Which method you implement depends on your use case. Some examples (untested; there might be some missing parentheses and such):

Examples for Pulling:

Feature class "Assets" and related table "Inspections". A new inspection should take the asset's "Value1" attribute.

// Calculation Attribute Rule on Inspections
// Field: Value1
// Triggers: Insert

// get the related asset
var asset_id = $feature.AssetID
var assets = FeatureSetByName($datastore, "Assets")
var asset = First(Filter(assets, "AssetID = @asset_id"))

// if there is no related asset, abort
if(asset == null) { return }

// else return the asset's Value1 attribute
return asset.Value1

Same as above, but the new Inspection should use the Asset's "Value1" and "Value2" attributes.

// Calculation Attribute Rule on Inspections
// Field: leave empty
// Triggers: Insert

// get the related asset
var asset_id = $feature.AssetID
var assets = FeatureSetByName($datastore, "Assets")
var asset = First(Filter(assets, "AssetID = @asset_id"))

// if there is no related asset, abort
if(asset == null) { return }

// else return the asset's Value1 and Value2 attributes
return {
    result: {
        attributes: {
            Value1: asset.Value1,
            Value2: asset.Value2
        }
    }
}

Polygon featureclass "Parks" and point featureclass "Benches". They are related spatially, but Benches should also store the Park's name.

// Calculation Attribute Rule on Benches
// Field: ParkName
// Triggers: Insert

// get the park
var parks = FeatureSetByName($datastore, "Parks")
var park = First(Intersects($feature, parks))

// if there is no intersected park, abort
if(park == null) { return }

// else return the park's name
return park.Name
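Conceptually, `First(Intersects($feature, parks))` returns one park that contains the bench. The sketch below imitates that for point features with a ray-casting point-in-polygon test in Python. The park dicts and their `ring` key are hypothetical stand-ins for features, and holes and multipart polygons are ignored. If parks overlap, more than one park can match and only the first one returned is used, so don't rely on which park that is unless you sort the FeatureSet (for example with Arcade's `OrderBy`).

```python
def point_in_polygon(x, y, ring):
    """Ray-casting test: True if (x, y) lies inside the closed ring.

    Points exactly on the boundary may go either way.
    """
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def park_name_for(bench, parks):
    """Mimic First(Intersects(...)): name of the first park containing the bench, else None."""
    for park in parks:
        if point_in_polygon(bench[0], bench[1], park["ring"]):
            return park["Name"]
    return None
```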

Examples for Pushing:

Probably the most common one: feature class "Assets" and related table "Inspections". When a new inspection is added, its date should be stored in the asset as the latest inspection date.

// Calculation Attribute Rule on Inspections
// Field: leave empty
// Triggers: Insert
// Exclude from application evaluation!

// get the related asset
var asset_id = $feature.AssetID
var assets = FeatureSetByName($datastore, "Assets")
var asset = First(Filter(assets, "AssetID = @asset_id"))

// if there is no related asset, abort
if(asset == null) { return }

// else push an edit to that asset
return {
    edit: [{
        className: "Assets",
        updates: [{
            objectID: asset.OBJECTID,
            attributes: {
                LastInspectionDate: $feature.InspectionDate
            }
        }]
    }]
}

Polygon featureclass "Parks" and point featureclass "Benches". They are related spatially, but Benches also store the Park's name (see examples for pulling). Point featureclass "Trees" also stores the Park's name. If the park is renamed, the new name should be pushed to Benches and Trees.

// Calculation Attribute Rule on Parks
// Field: leave empty
// Triggers: Update
// Exclude from application evaluation!

// get the benches
var benches = FeatureSetByName($datastore, "Benches")
var benches_in_park = Intersects($feature, benches)

// create an empty array that will store the update info
var bench_updates = []

// loop over the intersected benches and fill the update array
for(var bench in benches_in_park) {
    var update = {
        objectID: bench.OBJECTID,
        attributes: {ParkName: $feature.Name}
    }
    Push(bench_updates, update)
}

// do the same for trees
var trees = FeatureSetByName($datastore, "Trees")
var trees_in_park = Intersects($feature, trees)
var tree_updates = []
for(var tree in trees_in_park) {
    var update = {
        objectID: tree.OBJECTID,
        attributes: {ParkName: $feature.Name}
    }
    Push(tree_updates, update)
}

// push the edits to the other feature classes
return {
    edit: [
        {
            className: "Benches",
            updates: bench_updates
        },
        {
            className: "Trees",
            updates: tree_updates
        }
    ]
}

Point feature class and polygon feature class. The polygon feature class is just a buffer around each point; they are related by PointID. If you add, move, or delete a point, the buffer should automatically be created, moved, or deleted.

// Calculation Attribute Rule on Points
// Field: leave empty
// Triggers: Insert, Update, Delete
// Exclude from application evaluation!

// abort if PointID is null
if($feature.PointID == null) { return }

// create empty arrays to store the edits to the polygon fc
var adds = []
var updates = []
var deletes = []

// get the edit type ("INSERT", "UPDATE" or "DELETE")
var mode = $editcontext.editType

// create the new geometry for the polygon
var default_distance = 10
var buffer_distance = DefaultValue($feature.BufferDistance, default_distance)
var new_geometry = Buffer($feature, buffer_distance)

// get the related polygon
var polygons = FeatureSetByName($datastore, "Polygons")
var point_id = $feature.PointID
var poly = First(Filter(polygons, "PointID = @point_id"))

if(poly == null) {
    // no polygon found (we're inserting a new point or updating a point with a missing buffer):
    // create a new polygon, but not if the point itself is being deleted
    if(mode != "DELETE") {
        var new_poly = {
            geometry: new_geometry,
            attributes: {PointID: $feature.PointID}
        }
        Push(adds, new_poly)
    }
}
else {
    // a polygon exists
    // update? -> set the new geometry
    if(mode == "UPDATE") {
        var updated_poly = {
            objectID: poly.OBJECTID,
            geometry: new_geometry
        }
        Push(updates, updated_poly)
    }
    // delete? -> delete the buffer polygon
    if(mode == "DELETE") {
        var deleted_poly = {objectID: poly.OBJECTID}
        Push(deletes, deleted_poly)
    }
}

// push the edits to the polygon fc
return {
    edit: [{
        className: "Polygons",
        adds: adds,
        updates: updates,
        deletes: deletes
    }]
}
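Because this rule reacts to three triggers and two states of the related table, it can help to model the branching on its own. The following Python sketch mirrors the decision logic of the buffer rule and returns a dict shaped like one entry of its `edit` array. Geometries are placeholders and the function name is hypothetical. The model treats deleting a point that has no buffer as producing no edits at all.

```python
def plan_buffer_edits(mode, point_id, existing_poly_oid, new_geometry):
    """Decide which edits to push to the buffer polygon class.

    mode: "INSERT", "UPDATE" or "DELETE" (values of $editcontext.editType).
    existing_poly_oid: OBJECTID of the related polygon, or None if there is none.
    """
    adds, updates, deletes = [], [], []
    if existing_poly_oid is None:
        # No buffer yet: create one, unless the point itself is being deleted.
        if mode != "DELETE":
            adds.append({"geometry": new_geometry, "attributes": {"PointID": point_id}})
    elif mode == "UPDATE":
        updates.append({"objectID": existing_poly_oid, "geometry": new_geometry})
    elif mode == "DELETE":
        deletes.append({"objectID": existing_poly_oid})
    return {"className": "Polygons", "adds": adds, "updates": updates, "deletes": deletes}
```

Walking every (mode, has-polygon) pair through this function is a quick way to check the rule's behavior before testing it in Pro.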

Push and Pull combined

"Assets" and "Inspections"

// Calculation Attribute Rule on Inspections
// Field: leave empty
// Triggers: Insert
// Exclude from application evaluation!

// get the related asset
var asset_id = $feature.AssetID
var assets = FeatureSetByName($datastore, "Assets")
var asset = First(Filter(assets, "AssetID = @asset_id"))

// if there is no related asset, abort
if(asset == null) { return }

// else pull the asset's values into this inspection (result)
// and push the inspection date to the asset (edit)
return {
    result: {
        attributes: {
            Value1: asset.Value1,
            Value2: asset.Value2
        }
    },
    edit: [{
        className: "Assets",
        updates: [{
            objectID: asset.OBJECTID,
            attributes: {
                LastInspectionDate: $feature.InspectionDate
            }
        }]
    }]
}

For more reading:

Have a great day!

Johannes

---

That is an amazingly thorough answer; thank you so much for the time you spent on it! I copied, pasted, and adjusted it to fit my use case. The expression verifies, the console message shows that the filter is working, and I published the service to give it a test.

// Calculation Attribute Rule on Inspections
// field: leave empty
// Triggers: Insert
// Exclude from application evaluation!

// get the related asset
var asset_id = $feature.GUID
var assets = FeatureSetByName($datastore, "epgdb.PARKS.ParkBuildings")
var asset = First(Filter(assets, "GlobalID = @asset_id"))
console (asset)

// if there is no related asset, abort
if(asset == null) { return }

// else push an edit to that asset
return {
    edit: [{
        className: "Park Buildings",
        updates: [{
            objectID: asset.OBJECTID,
            attributes: {
                Status: $feature.Struct
            }
        }]
    }]
}

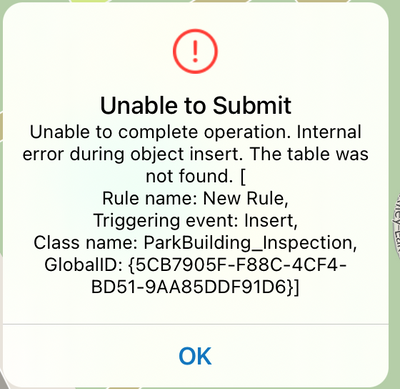

When I attempt to add a record in the web map (via Field Maps), I get the error:

So it looks like it might be a problem with the class name? The rule verified no matter what text I entered there, so I figured it wasn't important, but I must be wrong. I guessed the best thing would be to use the parent feature class as the className. Are there any specifics that I missed?

- Zach

---

Another update; I monkeyed with it a little:

// Calculation Attribute Rule on Inspections table
// field: leave empty
// Triggers: Insert
// Exclude from application evaluation

// get the related asset
var asset_id = $feature.GUID
var assets = FeatureSetByName($datastore, "epgdb.PARKS.ParkBuildings",['GlobalID','Status'])
var asset = First(Filter(assets, "GlobalID = @asset_id"))
console (asset)

// if there is no related asset, end expression
if(asset == null) { return }

// else push an edit to that asset
return {
    edit: [{
        className: assets,
        updates: [{
            attributes: {
                Status: $feature.Struct
            }
        }]
    }]
}

And now the error reads "Invalid Function arguments". I don't know if that means I'm closer to or farther from a correctly written expression, but I think I at least got the class name correct?

- Zach
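The two problems in this version can be caught mechanically: `className` is given a FeatureSet instead of a table name string, and the update does not say which row to change. The following hypothetical Python helper checks a return dictionary (written as a Python dict) for exactly those two things. It only looks for `objectID`, the key used in the working examples in this thread; it is a debugging aid, not a full validator of Esri's dictionary keywords.

```python
def check_edit_payload(payload):
    """List the problems found in the "edit" part of a rule's return dictionary.

    Checks only two things: className must be a non-empty table name string,
    and every entry in "updates" must carry an objectID.
    """
    problems = []
    for i, entry in enumerate(payload.get("edit", [])):
        class_name = entry.get("className")
        if not isinstance(class_name, str) or not class_name.strip():
            problems.append(f"edit[{i}]: className must be the table name as a string")
        for j, update in enumerate(entry.get("updates", [])):
            if "objectID" not in update:
                problems.append(f"edit[{i}].updates[{j}]: no objectID to identify the row")
    return problems
```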

---

I wanted to share one more update in case anyone else is following this thread: I got my code to work! After reviewing it, I saw that I had misplaced some of the code elements, which probably caused some of the problems. I replaced the GlobalID parameter in the updates, but still had some problems. I also tried putting quotes around all the keywords and parameters after the last return, as in Esri's documentation, but that didn't make a difference either. From what I can tell, it clicked once I filled in the missing piece with the ObjectID (not the GlobalID). The last odd thing is that I actually need to check both Insert and Update as triggers to get this to run. With only Insert checked, the rule verifies but doesn't run when a new record is created, which is kind of strange, but ultimately not too bad.

// get the related asset
var asset_id = $feature.GUID
var assets = FeatureSetByName($datastore, "epgdb.PARKS.ParkBuildings")
var asset = First(Filter(assets, "GlobalID = @asset_id"))

// if there is no related asset, end expression
if(asset == null) { return }

// else populate attribute with the edit
return {
    edit: [{
        className: "epgdb.PARKS.ParkBuildings",
        updates: [{
            objectID: asset.OBJECTID,
            attributes: {
                Status: $feature.Struct,
                LastInspDate: $feature.InspDate
            }
        }]
    }]
}

- Zach

---

@JohannesLindner I ran across this very detailed explanation of updating data from another table, and I can see where it will help with some other items I am working on.

However, I'm trying to update parcel details in another table. That worked fine when placing a point on a single parcel, but I have a few areas with overlapping parcels. I'm thinking that using Filter would likely work (with a dictionary for multiple fields from the parcel table). In the SQL database, the whole thing would be done with a query like 'select field1, field2, field3, etc. from parcel where pid = @pid' (or whatever the syntax is for keyboard entry); is there a way to do this with attribute rules (AR)? If so, I haven't found the right tool for getting the PID into the where clause. We are trying to avoid stored procedures in the database in favor of attribute rules, so the logic stays readily available for editing in the future.

Thanks,

Lorinda
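For comparison with the SQL query described above, here is the same lookup in Python with `sqlite3`, where the PID is passed as a bound parameter (`?`) rather than pasted into the query text. The table and field names (`parcel`, `OWNER`, `ACRES`, `ZONING`) are hypothetical. In an attribute rule, the Arcade counterpart is the `Filter(featureset, "PID = @pid")` pattern used throughout this thread, where `@pid` refers to an Arcade variable named `pid`; several fields can then be returned together in the `result: {attributes: {...}}` dictionary.

```python
import sqlite3


def parcel_details(conn, pid, fields=("OWNER", "ACRES", "ZONING")):
    """Return the selected parcel fields for one PID as a dict, or None if not found.

    Only the PID value is bound as a parameter; column names cannot be bound,
    so they must come from a fixed, trusted tuple like the default above.
    """
    columns = ", ".join(fields)
    row = conn.execute(
        f"SELECT {columns} FROM parcel WHERE pid = ?", (pid,)
    ).fetchone()
    return dict(zip(fields, row)) if row is not None else None
```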