How to get attachments size with Python script

Hello, we work in a big organization where we have many users and projects in ArcGIS Online. For us, it is very important to keep track of credit usage so that we can assign its cost to the appropriate project. We also need to recommend good practices to reduce and optimize credit usage. We have noticed that the main credit cost comes from storage.

Even though ArcGIS Online provides specific reports that offer information about the size of different items, detailing the size of feature storage and file storage, we need to exploit this information in an external database to obtain dashboards personalized for our purposes.

We have developed a Python script that gets all the information we need from our AGOL organization and downloads it into our database weekly. But we cannot get some data, such as total attachment size, unless the script goes through each feature in every layer, which can take hours or days.

This information is clearly stored somewhere in ArcGIS Online, linked to each item. It is provided in the reports and in the details shown on the item page, so it seems it should be easy to get. But we have not found a property that lets our script query it easily (something like featureLayer.properties.AttachmentsSize).

Has anybody found a way to query the attachment size with a single command instead of querying each individual feature of a layer?

Thank you.

Accepted Solutions

Take a look at arcgis.features.managers.AttachmentManager.

There is a method search, which, according to the docs:

allows querying the layer for its attachments and returns the results as a Pandas DataFrame or dict

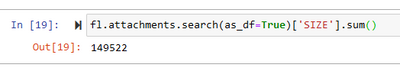

It is possible to use this on a per-layer basis to return the details of all attachments. One of the fields included in the output DataFrame (assuming you pass the parameter as_df=True) is SIZE, which you can use to calculate the total size of all attachments on the layer using DataFrame['SIZE'].sum().

So you'll still need to iterate over every layer in your org, but you could easily get an org-wide overview of how much storage each layer's attachments are taking up.
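
As a rough illustration of the approach described above, here is a minimal sketch. It assumes each `layer` is an arcgis.features.FeatureLayer with attachments enabled, so that `layer.attachments` is an AttachmentManager; the helper names `total_attachment_bytes` and `attachment_bytes_by_layer` are made up for this example and are not part of the ArcGIS API for Python.

```python
def total_attachment_bytes(layer):
    """Return the summed SIZE (bytes) of all attachments reported for one layer.

    Assumption: `layer` is an arcgis.features.FeatureLayer with attachments
    enabled, so `layer.attachments` is an AttachmentManager.
    """
    # as_df=True asks search() for a pandas DataFrame; SIZE holds bytes per attachment.
    df = layer.attachments.search(as_df=True)
    if len(df) == 0 or "SIZE" not in df.columns:
        return 0  # nothing reported for this layer
    return int(df["SIZE"].sum())


def attachment_bytes_by_layer(layers):
    """Map a label for each layer to its attachment total in bytes.

    `layers` is any iterable of (label, layer) pairs. How the pairs are
    collected (for example by walking the org's feature layer items) is
    left to the caller.
    """
    return {label: total_attachment_bytes(layer) for label, layer in layers}
```

With a real connection, the (label, layer) pairs could probably be built from the `layers` list of each hosted feature layer item in the org. Note that a single search call may not return every attachment if the layer has more attachments than the service's max record count.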

Kendall County GIS

Great, it worked! Thank you very much.

I found this post and am wondering whether anyone has ideas about the disparity I see between the attachment size I get through the Python API and the size shown on the item page.

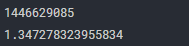

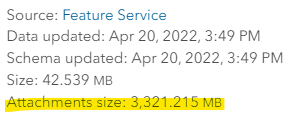

If I query the attachments through the Python API for a feature layer of interest, I get 1.35GB. However, if I look at the item page, it shows 3.3GB.

Python API query --- bytes converted to GB

Item page
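
For anyone comparing the two numbers, the bytes-to-GB step can be sketched as below. The helper name `bytes_to_gb` is hypothetical, and the thread does not say whether the item page reports binary (1024-based) or decimal (1000-based) gigabytes, so both are offered as an assumption. Either way, the choice of unit changes the result by only about 7%, which is too small to explain a gap like 1.35 GB versus 3.3 GB.

```python
def bytes_to_gb(size_bytes, binary=True):
    """Convert a byte count to gigabytes.

    binary=True divides by 1024**3 (GiB); binary=False divides by 1000**3 (GB).
    Which convention the item page uses is an assumption, not confirmed here.
    """
    divisor = 1024 ** 3 if binary else 1000 ** 3
    return size_bytes / divisor
```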

I believe I have resolved my issue: the number of attachments is greater than the max record count of the service. In order to retrieve all attachments, I needed to use the "max_records" and "offset" parameters.

Sample code can be found here if anyone needs it for future reference.
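
In case the linked sample is unavailable, here is a minimal sketch of the paging idea, not the linked code itself. It assumes `layer.attachments.search` accepts `max_records` and `offset` as described above, that `offset` counts attachment records to skip, and that a page past the end comes back empty. The function name `total_attachment_bytes_paged` and the `page_size` default are made up for illustration.

```python
def total_attachment_bytes_paged(layer, page_size=1000):
    """Sum attachment SIZE (bytes) for one layer, fetching page by page.

    Assumptions: `layer` is an arcgis.features.FeatureLayer with attachments
    enabled, and `offset` is the number of attachment records to skip.
    """
    total = 0
    offset = 0
    while True:
        page = layer.attachments.search(
            as_df=True, max_records=page_size, offset=offset
        )
        rows = len(page)
        if rows == 0:
            break  # an empty page means everything has been read
        total += int(page["SIZE"].sum())
        # Advance by what was actually returned, in case the service caps
        # a page below the requested page_size.
        offset += rows
    return total
```

Advancing by the number of rows actually returned costs one extra request at the end, but it avoids skipping records when the service's max record count is lower than `page_size`. If a service were to ignore `offset`, this loop would not terminate, so a page limit may be worth adding in production use.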

Thank you!