
Overwrite ArcGIS Online Feature Service using Truncate and Append


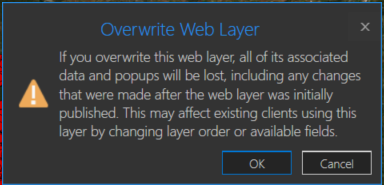

You may need to overwrite an ArcGIS Online hosted feature service because of feature and/or attribute updates. However, overwriting can cause the hosted feature service to lose settings such as pop-ups and symbology changes. For example, ArcGIS Pro displays a warning about this when you try to overwrite a feature service:

One way around this is to use the ArcGIS API for Python. If you are the data owner, or Administrator, you can truncate the feature service, and then append data. This is essentially an overwrite of the feature service. The below script will do this by specifying a local feature class and the item id of the feature service you wish to update. The script will then execute the following steps:

- export the feature class to a temporary File Geodatabase

- zip the File Geodatabase

- upload the zipped File Geodatabase to AGOL

- truncate the feature service

- append the zipped File Geodatabase to the feature service

- delete the uploaded zipped File Geodatabase in AGOL

- delete the local zipped File Geodatabase

- delete the temporary File Geodatabase

Here is an explanation of the script variables:

- username = ArcGIS Online username

- password = ArcGIS Online password

- fc = path to feature class used to update feature service

- fsItemId = the item id of the ArcGIS Online feature service

- featureService = True if updating a Feature Service, False if updating a Hosted Table

- hostedTable = True if updating a Hosted Table, False if updating a Feature Service

- layerIndex = feature service layer index

- disableSync = True to disable sync, and then re-enable sync after append, False to not disable sync. Set to True if sync is not enabled

- updateSchema = True will remove/add fields from feature service keeping schema in-sync, False will not remove/add fields

- upsert = True will not truncate the feature service, requires a field with unique values

- uniqueField = Field that contains unique values
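Because upsert matches rows on uniqueField, duplicate values in that field are worth catching before the script runs. The following is a minimal sketch of such a check, not part of the original script: `find_duplicate_values` is a hypothetical helper, and the `pins` list stands in for the values an `arcpy.da.SearchCursor` over uniqueField would return.

```python
from collections import Counter


def find_duplicate_values(values):
    """Return the values that occur more than once, sorted by their string form."""
    counts = Counter(values)
    return sorted((value for value, n in counts.items() if n > 1), key=str)


# Hypothetical sample values standing in for the contents of uniqueField
pins = ["100-01", "100-02", "100-02", "100-03"]
duplicates = find_duplicate_values(pins)
if duplicates:
    print(f"uniqueField contains duplicate values: {duplicates}")
```

An empty result means every value is distinct, which is what the upsert option expects.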

Note: For this script to work, the field names in the feature class must match the field names in the hosted feature service. The hosted feature service can have additional fields, though.
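The field-name requirement in the note can be checked up front with two plain lists of names. This is a sketch under stated assumptions: `missing_service_fields` is a hypothetical helper, the comparison is an exact, case-sensitive match, and the sample names are made up. In practice the lists would come from `arcpy.ListFields` and from the layer's field properties.

```python
def missing_service_fields(feature_class_fields, service_fields):
    """Return feature class field names that are absent from the hosted service."""
    service_lookup = set(service_fields)
    return sorted(name for name in feature_class_fields if name not in service_lookup)


# Hypothetical field names for illustration only
fc_fields = ["PIN", "OWNER", "ACRES"]
fs_fields = ["PIN", "OWNER", "ACRES", "NOTES"]  # extra service fields are allowed

print(missing_service_fields(fc_fields, fs_fields))
```

An empty list means every feature class field has a counterpart in the service. Extra fields on the service side do not appear in the result.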

Video

Script

import arcpy, os, time, uuid, arcgis

from zipfile import ZipFile

from arcgis.gis import GIS

import arcgis.features

# Variables

username = "jskinner_rats" # AGOL Username

password = "********" # AGOL Password

fc = r"c:\DB Connections\GIS@PLANNING.sde\GIS.Parcels" # Path to Feature Class

fsItemId = "a0ad52a76ded483b82c3943321f76f5a" # Feature Service Item ID to update

featureService = True # True if updating a Feature Service, False if updating a Hosted Table

hostedTable = False # True if updating a Hosted Table, False if updating a Feature Service

layerIndex = 0 # Layer Index

disableSync = True # True to disable sync, and then re-enable sync after append, False to not disable sync. Set to True if sync is not enabled

updateSchema = True # True will remove/add fields from feature service keeping schema in-sync, False will not remove/add fields

upsert = True # True will not truncate the feature service, requires a field with unique values

uniqueField = 'PIN' # Field that contains unique values

# Environment Variables

arcpy.env.overwriteOutput = True

arcpy.env.preserveGlobalIds = True

def zipDir(dirPath, zipPath):
    '''
    Zips File Geodatabase

    Args:
        dirPath: (string) path to File Geodatabase
        zipPath: (string) path to where File Geodatabase zip file will be created

    Returns:
        None
    '''
    gdb = os.path.basename(dirPath)
    # The with block closes the zip file even if a write fails
    with ZipFile(zipPath, mode='w') as zipf:
        for root, _, files in os.walk(dirPath):
            for file in files:
                # Skip geodatabase lock files
                if 'lock' not in file:
                    filePath = os.path.join(root, file)
                    zipf.write(filePath, os.path.join(gdb, file))

def updateFeatureServiceSchema():
    '''
    Updates the hosted feature service schema.
    Relies on the module-level variables fc, gdb and fLyr set in the main block.

    Returns:
        None
    '''
    # Get required fields to skip
    requiredFields = [field.name for field in arcpy.ListFields(fc) if field.required]

    # Get feature service fields
    print("Get feature service fields")
    featureServiceFields = {}
    for field in fLyr.manager.properties.fields:
        if field.type != 'esriFieldTypeOID' and 'Shape_' not in field.name:
            featureServiceFields[field.name] = field.type

    # Get feature class/table fields
    # (the same field filter applies to feature classes and hosted tables)
    print("Get feature class/table fields")
    featureClassFields = {}
    arcpy.env.workspace = gdb
    for field in arcpy.ListFields(fc):
        if field.name not in requiredFields:
            featureClassFields[field.name] = field.type

    minusSchemaDiff = set(featureServiceFields) - set(featureClassFields)
    addSchemaDiff = set(featureClassFields) - set(featureServiceFields)

    # Delete removed fields
    if len(minusSchemaDiff) > 0:
        print("Deleting removed fields")
        for key in minusSchemaDiff:
            print(f"\tDeleting field {key}")
            remove_field = {
                "name": key,
                "type": featureServiceFields[key]
            }
            update_dict = {"fields": [remove_field]}
            fLyr.manager.delete_from_definition(update_dict)

    # Create additional fields
    fieldTypeDict = {}
    fieldTypeDict['Date'] = 'esriFieldTypeDate'
    fieldTypeDict['Double'] = 'esriFieldTypeDouble'
    fieldTypeDict['Integer'] = 'esriFieldTypeInteger'
    fieldTypeDict['String'] = 'esriFieldTypeString'

    if len(addSchemaDiff) > 0:
        print("Adding additional fields")
        for key in addSchemaDiff:
            print(f"\tAdding field {key}")
            if fieldTypeDict[featureClassFields[key]] == 'esriFieldTypeString':
                # String fields also need their length
                new_field = {
                    "name": key,
                    "type": fieldTypeDict[featureClassFields[key]],
                    "length": [field.length for field in arcpy.ListFields(fc, key)][0]
                }
            else:
                new_field = {
                    "name": key,
                    "type": fieldTypeDict[featureClassFields[key]]
                }
            update_dict = {"fields": [new_field]}
            fLyr.manager.add_to_definition(update_dict)

def divide_chunks(l, n):
    '''
    Args:
        l: (list) list of unique IDs for features that have been deleted
        n: (integer) number to iterate by

    Returns:
        generator yielding successive slices of l, each with at most n items
    '''
    # looping till length l
    for i in range(0, len(l), n):
        yield l[i:i + n]

if __name__ == "__main__":
    # Start Timer
    startTime = time.time()

    # Create GIS object
    print("Connecting to AGOL")
    gis = GIS("https://www.arcgis.com", username, password)

    # Create UUID variable for GDB
    gdbId = str(uuid.uuid1())

    print("Creating temporary File Geodatabase")
    gdb = arcpy.CreateFileGDB_management(arcpy.env.scratchFolder, gdbId)[0]

    # Export feature class/table to temporary File Geodatabase
    fcName = os.path.basename(fc)
    fcName = fcName.split('.')[-1]
    print(f"Exporting {fcName} to temp FGD")
    if featureService == True:
        arcpy.conversion.FeatureClassToFeatureClass(fc, gdb, fcName)
    elif hostedTable == True:
        arcpy.conversion.TableToTable(fc, gdb, fcName)

    # Zip temp FGD
    print("Zipping temp FGD")
    zipDir(gdb, gdb + ".zip")

    # Upload zipped File Geodatabase
    print("Uploading File Geodatabase")
    fgd_properties = {'title': gdbId, 'tags': 'temp file geodatabase', 'type': 'File Geodatabase'}
    # Compare version numbers numerically; as strings, '2.10.0' would sort before '2.4.0'
    arcgisVersion = tuple(int(part) for part in arcgis.__version__.split('.')[:2])
    if arcgisVersion < (2, 4):
        fgd_item = gis.content.add(item_properties=fgd_properties, data=gdb + ".zip")
    else:
        root_folder = gis.content.folders.get()
        fgd_item = root_folder.add(item_properties=fgd_properties, file=gdb + ".zip").result()

    # Get featureService/hostedTable layer
    serviceLayer = gis.content.get(fsItemId)
    if featureService == True:
        fLyr = serviceLayer.layers[layerIndex]
    elif hostedTable == True:
        fLyr = serviceLayer.tables[layerIndex]

    # Append features from feature class/table
    if upsert == True:
        # Check if unique field has index
        indexedFields = []
        for index in fLyr.manager.properties['indexes']:
            indexedFields.append(index['fields'])
        if uniqueField not in indexedFields:
            print(f"{uniqueField} does not have unique index; creating")
            fLyr.manager.add_to_definition({
                "indexes": [
                    {
                        "fields": f"{uniqueField}",
                        "isUnique": True,
                        "description": "Unique field for upsert"
                    }
                ]
            })

        # Schema Sync
        if updateSchema == True:
            updateFeatureServiceSchema()

        # Append features
        print("Appending features")
        fLyr.append(item_id=fgd_item.id, upload_format="filegdb", upsert=True, upsert_matching_field=uniqueField, field_mappings=[])

        # Delete features that have been removed from source
        # Get list of unique field for feature class and feature service
        entGDBList = [row[0] for row in arcpy.da.SearchCursor(fc, [uniqueField])]
        fsList = [row[0] for row in arcpy.da.SearchCursor(fLyr.url, [uniqueField])]
        s = set(entGDBList)
        differences = [x for x in fsList if x not in s]

        # Delete features in AGOL service that no longer exist
        if len(differences) > 0:
            print('Deleting differences')
            if len(differences) == 1:
                if isinstance(differences[0], str):
                    features = fLyr.query(where=f"{uniqueField} = '{differences[0]}'")
                else:
                    features = fLyr.query(where=f"{uniqueField} = {differences[0]}")
                fLyr.edit_features(deletes=features)
            else:
                chunkList = list(divide_chunks(differences, 1000))
                # Loop variable renamed from 'list' so the built-in is not shadowed
                for chunk in chunkList:
                    # Build the IN list explicitly; a one-item tuple would print as ('A',), which is not valid SQL
                    inList = ', '.join(repr(value) for value in chunk)
                    features = fLyr.query(where=f'{uniqueField} IN ({inList})')
                    fLyr.edit_features(deletes=features)

    else:
        # Schema Sync
        if updateSchema == True:
            updateFeatureServiceSchema()

        # Truncate Feature Service
        # If views exist, or disableSync = False use delete_features. OBJECTIDs will not reset
        flc = arcgis.features.FeatureLayerCollection(serviceLayer.url, gis)
        hasViews = False
        try:
            if flc.properties.hasViews == True:
                print("Feature Service has view(s)")
                hasViews = True
        except Exception:
            hasViews = False

        if hasViews == True or disableSync == False:
            # Get Min OBJECTID
            minOID = fLyr.query(out_statistics=[
                {"statisticType": "MIN", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MINOID"}])
            minOBJECTID = minOID.features[0].attributes['MINOID']

            # Get Max OBJECTID
            maxOID = fLyr.query(out_statistics=[
                {"statisticType": "MAX", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MAXOID"}])
            maxOBJECTID = maxOID.features[0].attributes['MAXOID']

            # If the OBJECTID range spans more than 2,000, delete in 2000 increments
            print("Deleting features")
            if maxOBJECTID is not None and minOBJECTID is not None:
                if (maxOBJECTID - minOBJECTID) > 2000:
                    x = minOBJECTID
                    y = x + 1999
                    # <= so the window that contains maxOBJECTID is also deleted
                    while x <= maxOBJECTID:
                        query = f"OBJECTID >= {x} AND OBJECTID <= {y}"
                        fLyr.delete_features(where=query)
                        x += 2000
                        y += 2000
                # Else if the range spans 2,000 or fewer, delete all
                else:
                    print("Deleting features")
                    fLyr.delete_features(where="1=1")

        # If no views and disableSync is True: disable Sync, truncate, and then re-enable Sync. OBJECTIDs will reset
        elif hasViews == False and disableSync == True:
            if flc.properties.syncEnabled == True:
                print("Disabling Sync")
                properties = flc.properties.capabilities
                updateDict = {"capabilities": "Query", "syncEnabled": False}
                flc.manager.update_definition(updateDict)
                print("Truncating Feature Service")
                fLyr.manager.truncate()
                print("Enabling Sync")
                updateDict = {"capabilities": properties, "syncEnabled": True}
                flc.manager.update_definition(updateDict)
            else:
                print("Truncating Feature Service")
                fLyr.manager.truncate()

        print("Appending features")
        fLyr.append(item_id=fgd_item.id, upload_format="filegdb", upsert=False, field_mappings=[])

    # Delete Uploaded File Geodatabase
    print("Deleting uploaded File Geodatabase")
    fgd_item.delete()

    # Delete temporary File Geodatabase and zip file
    print("Deleting temporary FGD and zip file")
    arcpy.Delete_management(gdb)
    os.remove(gdb + ".zip")

    endTime = time.time()
    elapsedTime = round((endTime - startTime) / 60, 2)
    print("Script finished in {0} minutes".format(elapsedTime))
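The OBJECTID windows used when deleting in batches can be generated and checked on their own, without calling the service. This is a minimal sketch rather than the script's own code: `objectid_windows` is a hypothetical helper, and it clamps the last window to the maximum OBJECTID.

```python
def objectid_windows(min_oid, max_oid, size=2000):
    """Yield inclusive (start, end) OBJECTID ranges that cover min_oid..max_oid."""
    start = min_oid
    while start <= max_oid:
        end = min(start + size - 1, max_oid)
        yield start, end
        start += size


# Each window becomes one WHERE clause for a batched delete
for start, end in objectid_windows(1, 4001):
    print(f"OBJECTID >= {start} AND OBJECTID <= {end}")
```

Listing the windows for a known range is a quick way to confirm that the first and last OBJECTID both fall inside a window.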

Updates

3/3/2023: Added the ability to add/remove fields from feature service keeping schemas in-sync. For example, if a field(s) is added/removed from the feature class, it will also add/remove the field(s) from the feature service

10/17/2024: Added upsert functionality. Deleted features/rows from the source feature class/table will also be deleted from feature service. This is helpful if you do not want your feature service truncated at all. In the event of a failed append, the feature service will still contain data. A prerequisite for this is for the data to have a unique id field.
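The delete step that follows an upsert comes down to a set difference on the unique field, then one WHERE clause per chunk of removed keys. The sketch below shows that logic with plain lists. `removed_keys` and `in_clauses` are hypothetical names, and quoting strings by doubling single quotes is an assumption about the SQL dialect.

```python
def removed_keys(source_keys, service_keys):
    """Return keys that exist in the service but no longer exist in the source."""
    source = set(source_keys)
    return [key for key in service_keys if key not in source]


def in_clauses(field, keys, chunk_size=1000):
    """Yield 'field IN (...)' clauses, each covering at most chunk_size keys."""
    for i in range(0, len(keys), chunk_size):
        literals = []
        for key in keys[i:i + chunk_size]:
            if isinstance(key, str):
                # Double any single quotes so the literal stays well-formed
                escaped = key.replace("'", "''")
                literals.append(f"'{escaped}'")
            else:
                literals.append(str(key))
        yield f"{field} IN ({', '.join(literals)})"


# Hypothetical keys: 'C' was deleted from the source feature class
stale = removed_keys(["A", "B"], ["A", "B", "C"])
for clause in in_clauses("PIN", stale):
    print(clause)
```

Building the list of literals explicitly also covers a chunk that holds a single key, where formatting a Python tuple directly would leave a trailing comma in the clause.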

The issue that I experience is that at the end of the process, the zip file won't delete. It says that it is still in use. Any thoughts?

"PermissionError: [WinError 32] The process cannot access the file because it is being used by another process: 'C:\\Users\\rossch\\Downloads\\996470ae-bd03-11ee-aaba-70d823122144.gdb.zip'"

@ChrisJRoss13 it could be a permissions issue for the scratch directory. You can change this to another directory by updating line 43. For example, change the below:

gdb = arcpy.CreateFileGDB_management(arcpy.env.scratchFolder, gdbId)[0]

to something such as:

gdb = arcpy.CreateFileGDB_management(r"C:\temp", gdbId)[0]

Hi @JakeSkinner , I had actually changed the original scratch folder input to another directory, like you had indicated. This error is still occurring.

@ChrisJRoss13 did the error occur for both the scratch and other directory specified?

@JakeSkinner I'm running into an issue with the append step where no features are added, leaving the feature service empty. Everything else seems to work, though I did have to note out the same line that @ChrisJRoss13 had trouble with. If I note out the delete steps and check the file GDBs, everything is intact. I can "Publish" from the zipped GDB from the Portal item page and the layer it creates matches and has all of the features.

I did double check that the feature service supports appends which was set to true but noticed that it doesn't list a GDB as an accepted type:

"supportedAppendFormats": "shapefile,geojson,csv,featureCollection,excel,jsonl"

Would this cause the Append step to not add any features?

I can't say for sure why this is occurring, but if you are working with Portal, it may be just as fast to execute ArcGIS Pro's Delete Features and Append tools.
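Since the layer advertises its accepted append formats, a guard could run before the truncate step so that the service is not emptied when filegdb is not listed. This is a sketch only: `supports_append_format` is a hypothetical helper, and `layer_properties` is a plain dict standing in for the layer's properties, using the `supportsAppend` and `supportedAppendFormats` keys.

```python
def supports_append_format(layer_properties, upload_format="filegdb"):
    """Return True if the layer supports append and lists the given upload format."""
    if not layer_properties.get("supportsAppend", False):
        return False
    formats = layer_properties.get("supportedAppendFormats", "")
    accepted = {name.strip().lower() for name in formats.split(",") if name.strip()}
    return upload_format.lower() in accepted


# Properties as reported in the comment above (no filegdb in the list)
portal_layer = {
    "supportsAppend": True,
    "supportedAppendFormats": "shapefile,geojson,csv,featureCollection,excel,jsonl",
}

if not supports_append_format(portal_layer):
    print("filegdb is not an accepted append format; skipping truncate")
```

Running the check first leaves the existing features in place when the append could not succeed anyway.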

@JakeSkinner @MelissaJohnson @ashleyf_lcpud Sorry it took me a bit to respond!

My issue seemed to be that line 141 of the script excludes fields with "Shape_" in the name. At least in my case, my SDE database does not have an underscore in the Shape field name. This was causing issues with sync and append of the shape fields.

Since the script plays nicer with layers from a file geodatabase, I used the existing temp gdb to compare schema with ArcGIS Online. I also had to add a dictionary entry to account for SmallInteger field types. Here is my modified Schema Sync section, picking up at Line 122 of the original script:

# Schema Sync
if updateSchema == True:
    # Get feature service fields
    print("Get feature service fields")
    featureServiceFields = {}
    for field in fLyr.manager.properties.fields:
        if field.type != 'esriFieldTypeOID' and 'Shape_' not in field.name and 'GlobalID' not in field.name:
            featureServiceFields[field.name] = field.type

    # Get feature class/table fields
    print("Get feature class/table fields")
    featureClassFields = {}
    arcpy.env.workspace = gdb
    fc_export = os.path.join(gdb, fcName) ## Added variable for temp fGDB feature class
    if hostedTable == True:
        for field in arcpy.ListFields(fc_export): ## using fgdb feature class rather than SDE fc
            if field.type != 'OID' and field.type != 'Geometry' and 'GlobalID' not in field.name:
                featureClassFields[field.name] = field.type
    else:
        for field in arcpy.ListFields(fc_export): ## using fgdb feature class rather than SDE fc
            if field.type != 'OID' and field.type != 'Geometry' and 'Shape_' not in field.name and 'GlobalID' not in field.name:
                featureClassFields[field.name] = field.type

    minusSchemaDiff = set(featureServiceFields) - set(featureClassFields)
    addSchemaDiff = set(featureClassFields) - set(featureServiceFields)

    # Delete removed fields
    if len(minusSchemaDiff) > 0:
        print("Deleting removed fields")
        for key in minusSchemaDiff:
            print(f"\tDeleting field {key}")
            remove_field = {
                "name": key,
                "type": featureServiceFields[key]
            }
            update_dict = {"fields": [remove_field]}
            fLyr.manager.delete_from_definition(update_dict)

    # Create additional fields
    fieldTypeDict = {}
    fieldTypeDict['Date'] = 'esriFieldTypeDate'
    fieldTypeDict['Double'] = 'esriFieldTypeDouble'
    fieldTypeDict['Integer'] = 'esriFieldTypeInteger'
    fieldTypeDict['SmallInteger'] = 'esriFieldTypeSmallInteger' ## Added dict entry for SmallInteger
    fieldTypeDict['String'] = 'esriFieldTypeString'

    if len(addSchemaDiff) > 0:
        print("Adding additional fields")
        for key in addSchemaDiff:
            print(f"\tAdding field {key}")
            if fieldTypeDict[featureClassFields[key]] == 'esriFieldTypeString':
                new_field = {
                    "name": key,
                    "type": fieldTypeDict[featureClassFields[key]],
                    "length": [field.length for field in arcpy.ListFields(fc_export, key)][0] ## using fgdb feature class rather than SDE fc
                }
            else:
                new_field = {
                    "name": key,
                    "type": fieldTypeDict[featureClassFields[key]]
                }
            update_dict = {"fields": [new_field]}
            fLyr.manager.add_to_definition(update_dict)

Jake, I'd be happy to share a dataset with you, but I believe my issues stemmed from using an SDE layer as the input, so I'm fairly confident that exporting my SDE data to a file gdb would mask the problem.

Hope this helps!
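A field type that is missing from the lookup dictionary surfaces as a bare KeyError in the middle of the schema sync. One option is to wrap the lookup so the message names the unmapped type. The sketch below uses only the five mappings already shown in this thread, and `to_esri_field_type` is a hypothetical helper name.

```python
# Mappings taken from the script plus the SmallInteger entry added above
ARCPY_TO_ESRI_FIELD_TYPE = {
    "Date": "esriFieldTypeDate",
    "Double": "esriFieldTypeDouble",
    "Integer": "esriFieldTypeInteger",
    "SmallInteger": "esriFieldTypeSmallInteger",
    "String": "esriFieldTypeString",
}


def to_esri_field_type(arcpy_type):
    """Translate an arcpy field type name, failing with a descriptive error if unmapped."""
    try:
        return ARCPY_TO_ESRI_FIELD_TYPE[arcpy_type]
    except KeyError:
        raise ValueError(
            f"No esriFieldType mapping for arcpy field type '{arcpy_type}'; "
            "add it to ARCPY_TO_ESRI_FIELD_TYPE before syncing the schema"
        ) from None


print(to_esri_field_type("SmallInteger"))
```

Resolving every new field's type before any `add_to_definition` call means an unmapped type stops the run before the service schema has been changed.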

@ChrisJRoss13 I am running into the same issue you are. Did you ever figure it out? I am currently using this to overwrite four feature services. Two of the four scripts run with no error, but the other two also get the PermissionError.

I can't figure out why two of them fail; there is no reason that I can see. The script does still append and do everything else fine, it just leaves a scratch gdb at the end. It just makes it difficult to automate: since the script fails, Task Scheduler gives me an error code and I have to manually check to be sure the append succeeded.
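For scheduled runs, one way to keep a cleanup failure from failing the whole job is to retry the delete a few times and then report a warning instead of raising. This is a minimal sketch and not a confirmed fix for the lock described here: `remove_with_retry` is a hypothetical helper, and the attempt count and delay are arbitrary defaults.

```python
import os
import tempfile
import time


def remove_with_retry(path, attempts=5, delay_seconds=2.0):
    """Try to delete a file several times; return True once it is gone, False otherwise."""
    for attempt in range(1, attempts + 1):
        try:
            os.remove(path)
            return True
        except FileNotFoundError:
            # Already gone, so there is nothing left to clean up
            return True
        except PermissionError:
            # On Windows this is raised while another process still holds the file
            if attempt < attempts:
                time.sleep(delay_seconds)
    print(f"Warning: could not delete {path}; remove it manually")
    return False


# Demonstration with a throwaway file
handle, demo_path = tempfile.mkstemp(suffix=".zip")
os.close(handle)
print(remove_with_retry(demo_path, attempts=2, delay_seconds=0.1))
```

In the script, the final `os.remove(gdb + ".zip")` call could be swapped for this helper so the exit code reflects the append rather than the cleanup.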

@Chase_RSO try deleting the scratch folder at C:\Users\<useraccount>\appdata\local\temp. Recreate this directory, and then add Full Control to your account to the scratch folder by right-clicking on the folder > Properties > Security.

@JakeSkinner Thank you for the suggestion. I tried that with no luck; I continue to get the same error message.

@JakeSkinner and @Chase_RSO I am getting that same error as well. Changed the scratch directory to c:\temp. . . same error, I have full privileges on my machine.

@Chase_RSO @SallyBickel what version of Pro are you running?

@SallyBickel @Chase_RSO what IDE are you using to execute the script? Try running the IDE as an Administrator (i.e. right-click on IDE > Run As Administrator). Do you still receive the same error?

@JakeSkinner using PyScripter here and yes same results Run as Administrator.

@JakeSkinner Visual Studio Code. And also the same result when I run as administrator as well.

Anybody figure this out yet? It's now happening to me, on processes that I have had running with no problems for over a year.

os.remove(os.path.join(arcpy.env.scratchFolder, "TempGDB.gdb.zip"))

PermissionError: [WinError 32] The process cannot access the file because it is being used by another process:

@Levon_H @Chase_RSO do you get an error if you manually delete the zip file? Instead of deleting, are you able to move the zip file? Ex:

shutil.move(os.path.join(arcpy.env.scratchFolder, "TempGDB.gdb.zip"), os.path.join(r"C:\temp\TempGDB.gdb.zip"))

I'm wondering if you try moving the file to another directory first, you can then delete it using the os.remove command.

@JakeSkinner Good morning Jake. Great idea. However, it won't let me move it for same permission reason. It just doesn't like that location.

shutil.move(os.path.join(arcpy.env.scratchFolder, "TempGDB.gdb.zip"), os.path.join(r"C:\temp\TempGDB.gdb.zip"))

File "C:\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\shutil.py", line 846, in move

os.unlink(src)

PermissionError: [WinError 32] The process cannot access the file because it is being used by another process: 'C:\\Users\\chjones\\AppData\\Local\\Temp\\scratch\\TempGDB.gdb.zip'

@Levon_H do you get the same error when trying to delete the zip file manually?

@JakeSkinner Hey Jake. Yep, I can delete it by hand.

@Levon_H try adding the following line after the Geodatabase is deleted, but before it attempts to remove the zipped geodatabase:

arcpy.management.ClearWorkspaceCache(arcpy.env.scratchFolder)

Ex:

# Delete temporary File Geodatabase and zip file

print("Deleting temporary FGD and zip file")

arcpy.Delete_management(gdb)

arcpy.management.ClearWorkspaceCache(arcpy.env.scratchFolder)

os.remove(gdb + ".zip")

Cool, thanks. I tried it. I'm getting the infamous 999999 error.

File "C:\ArcGIS\Pro\Resources\ArcPy\arcpy\management.py", line 22276, in ClearWorkspaceCache

arcgisscripting.ExecuteError: ERROR 999999: Something unexpected caused the tool to fail. Contact Esri Technical Support (http://esriurl.com/support) to Report a Bug, and refer to the error help for potential solutions or workarounds.

Failed to execute (ClearWorkspaceCache).

I've been using your script for a few months now , but I am now receiving:

Exception: Unable to delete feature service layer definition.

Invalid definition for System.Collections.Generic.List`1[ESRI.ArcGIS.SDS.FieldInfo]

Invalid definition for System.Collections.Generic.List`1[ESRI.ArcGIS.SDS.FieldInfo]

(Error Code: 400)

And the service becomes empty.

Has any one else had this issue when trying to run the script ?

@Le1Hod can you share the hosted feature service you are updating with me to an AGOL Group? You can invite my AGOL account, jskinner_rats, to this Group.

@JakeSkinner , thanks for posting this. I'm not sure why all the developer information I came across provided examples for .csv exclusively. I ran this on a number of feature layers from an ArcGIS Notebook and it worked out of the box. I have a Pro Project with many of the layers I publish to AGO. When I publish a new layer to AGO, I add a cell in the notebook to overwrite it. Then I just run that cell whenever the feature class gets updated.

The schema update portion is awesome!

I'm also getting the following error relating to the os.remove(gdb + ".zip") line:

"PermissionError: [WinError 32] The process cannot access the file because it is being used by another process:"

I'm able to run this for a few other sde feature classes successfully, but when I try to run it for a larger feature class (parcels), it is unable to delete the zipfile in the scratch folder. This would suggest there is something other than a permissions issue going on.

Hi,

I tried to run the code and it was working until at some point it stopped and threw an error (after deleting the fields, if I'm not wrong). Then I read that the FGDB (which is the format used in the script) is not an accepted format?

I then tried to Frankenstein it a bit to change it to shapefile, but it gives me an error while deleting the fields, saying that one of them is used as display layer and can't be deleted. Any thoughts? I need to update a layer.

@nbrown

Hi. I was able to work around it by running the script as a .cmd or .bat, and adding the "del" command with force "/f" after the script. My cmd looks something like this:

C:\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\python.exe \\Scripts\Beta\TuncAppend.py

del /F "C:\Users\levon\AppData\Local\Temp\scratch\TempGDB.gdb.zip"

Good morning @JakeSkinner . I've been running this script for a couple of years now on many layers without any real issues.

This morning, however, it's getting hung up on the Append portion. It doesn't want to fully append...it just spins and spins.

Obviously something changed somewhere. Just checking to make sure it's not on the AGOL side. Any other thoughts?? Thanks!!!!

@JakeSkinner Recently, my notebooks are hanging up while appending features. Previously, the cells containing the Truncate/Append script complete in less than a minute. Yesterday and today, they'll get through export/zip/upload, truncating the feature layer, and the schema sync. It won't get past flyr.append (currently sitting for about 2 hours). Truncate worked, my feature layers have no features, but nothing is being appended. Not sure how to troubleshoot without any errors or indication of what's failing. Any suggestions?
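When a call hangs without raising, a hard time limit at least turns the silence into an error that a notebook or scheduled task can report. The sketch below runs any callable in a daemon thread and gives up after a timeout. `run_with_timeout` and `slow_append` are hypothetical names, with `slow_append` standing in for a call such as the layer append. The helper does not cancel the work, it only stops waiting for it.

```python
import threading
import time


def run_with_timeout(func, timeout_seconds, *args, **kwargs):
    """Run func in a daemon thread; raise TimeoutError if it has not finished in time."""
    outcome = {}

    def worker():
        try:
            outcome["value"] = func(*args, **kwargs)
        except Exception as exc:
            outcome["error"] = exc

    # A daemon thread does not keep the interpreter alive if the call never returns
    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    thread.join(timeout_seconds)
    if thread.is_alive():
        raise TimeoutError(f"call did not finish within {timeout_seconds} seconds")
    if "error" in outcome:
        raise outcome["error"]
    return outcome["value"]


def slow_append():
    """Stand-in for a long-running request."""
    time.sleep(0.3)
    return True


try:
    run_with_timeout(slow_append, 0.05)
except TimeoutError as exc:
    print(f"Append step timed out: {exc}")
```

A timeout here only means the script stopped waiting. The service may still be processing the request, so the feature count is worth checking before retrying.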

@Levon_H Guess, I'm glad that I'm not the only one having this issue today. community.esri.com has been really slow for me this morning and I didn't see your post when I posted ~10 minutes later. In order to get my feature layer populated, I tried using the Update Data function on the layer's detail page with the temp zipped .gdb that the script generates. That method also hangs up in AGO, and based on the message is also using Append. It seems like there is an issue with Append in AGO.

Please post if you're able to find any resolution.

@BrianShepard @JakeSkinner So, we've tested everything this morning, and have come to the conclusion that "tempFGD" on AGOL is not appending the truncated Feature Layer.

@Levon_H, I spoke with Esri Support and there is a known issue that's still being evaluated. I don't know when it'll be resolved, but I'm supposed to be notified when it is. The information I got is:

This behavior is consistent with an identified issue in ArcGIS Online that involves asynchronous operations such as:

* append

* createReplica

* Sync

* addToDefinition

* deleteFromDefinition

* publishing

Our team is working on resolving the issue, which was reported to have started occurring on August 9th, 2024.

@BrianShepard Good to know Brian, thank you very much for posting this information.

@Levon_H @BrianShepard I tested the script this morning and everything executed successfully. Can you test again on your end?

@JakeSkinner Our scripts are up and running.

Thank you for this script, responding to almost everyone over time, creating workarounds and troubleshooting since its inception.

That said, we are heavily dependent upon hosted feature layers for several critical business layers. If ESRI would have given us any kind of heads up that it was their servers (or AWS or whatever caused the issue), that would have been nice. We spent an entire day scrambling and troubleshooting. It was big deal. Only to find out here (social media/message boards) that it was an ESRI issue.

Feel free to share my concerns with your powers that be. And thanks again for everything!

@JakeSkinner It's working again for me. Thanks for following up.

@Levon_H I mentioned to Esri support that it would be good to know about these issues, especially since I found out I couldn't append data after it had been truncated. It looks like they did update the issue on the ArcGIS Online Health Dashboard, but not for a couple of days after it was known. Since there is a dedicated site for AGO health, I'd expect to see issues reported there when I experience them. Would have saved some time in troubleshooting an issue I couldn't resolve.

@BrianShepard Same here, I mentioned it to ESRI support as well. If known, I would have been able to mitigate expectations to our employees and customers last week. Instead, my entire team was sent scrambling. It was a very disappointing experience.

@BrianShepard Do you have a case number for the append issues? We are experiencing the same behavior.

@SallyBickel I didn't have any issues during my monthly update a couple of weeks ago. The previous incident Esri identified was "INC-001547: Asynchronous operations are slow to complete". It was noted on the ArcGIS Online Health Dashboard, but I don't see anything similar when I checked this morning. Since I won't be able to repopulate my data if there is an issue, it's not something I'm tempted to see if it's still working for me. I'd recommend contacting support if you're still having issues.

I was doing the append with the upload_format='filegdb' and got this error:

Exception: 'appendUploadFormat' must be one of the following: shapefile, geojson, csv, featureCollection, excel.

(Error Code: 500)

What kind of joke is this? ESRI doesn't support their own proprietary format for doing stuff?

@Iron_Mark were you using the script in this document? filegdb is definitely supported.