
ArcGIS Enterprise Content Promotion


Esri provides a concept paper on architecture best practices. One of its architectural recommendations is to deploy ArcGIS Enterprise with "Environment Isolation". The white paper defines the concept as: “Isolating computing environments is an approach to maintaining system reliability and availability. This approach involves creating separate systems for production, testing, and development activities. Environment isolation reduces risk and protects operational systems from unintentional changes that negatively impact the business.”

In architectural conversations with customers, especially those with large-scale deployments that manage systems of record or systems of engagement, environment isolation comes up often. Typical questions include:

- What are the recommendations and best practices for promoting content from a lower environment to a higher one?

- Should content promotion be automated, and if so how, or should it be manual?

- What constraints should be taken into consideration in this process?

We can address these questions by designing an automation workflow. In general, the best practices (security and infrastructure constraints) for environment isolation are:

- As the name suggests, lower environments and the production environment should be completely isolated, with no cross talk between them.

- Data owner credentials for the database should not be shared with publishers.

- The data owner password should differ across environments.

- Similarly, ArcGIS Enterprise administrator credentials, which carry elevated privileges, should not be shared across the organization.
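One way to respect these constraints in automation scripts is to keep each environment's credentials out of the code and to check that they are not reused between environments. The sketch below assumes credentials are supplied as environment variables following a hypothetical naming scheme (`STAGING_PORTAL_URL`, `PROD_DATA_OWNER_PASSWORD`, and so on); `EnvironmentConfig`, `load_environment`, and `check_isolation` are illustrative names, not part of any Esri API.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentConfig:
    """Settings for one isolated environment (hypothetical structure for this sketch)."""
    name: str
    portal_url: str
    admin_user: str
    admin_password: str
    data_owner_password: str


def load_environment(name: str, env=os.environ) -> EnvironmentConfig:
    """Read one environment's settings from variables such as STAGING_PORTAL_URL.

    The <NAME>_<SETTING> variable naming scheme is an assumption of this sketch.
    """
    prefix = name.upper()
    return EnvironmentConfig(
        name=name,
        portal_url=env[f"{prefix}_PORTAL_URL"],
        admin_user=env[f"{prefix}_ADMIN_USER"],
        admin_password=env[f"{prefix}_ADMIN_PASSWORD"],
        data_owner_password=env[f"{prefix}_DATA_OWNER_PASSWORD"],
    )


def check_isolation(configs):
    """Raise ValueError if two environments share a data owner password or a portal URL."""
    seen_passwords = {}
    seen_urls = {}
    for cfg in configs:
        if cfg.data_owner_password in seen_passwords:
            raise ValueError(
                f"{cfg.name} reuses the data owner password of "
                f"{seen_passwords[cfg.data_owner_password]}"
            )
        if cfg.portal_url in seen_urls:
            raise ValueError(f"{cfg.name} points at the same portal as {seen_urls[cfg.portal_url]}")
        seen_passwords[cfg.data_owner_password] = cfg.name
        seen_urls[cfg.portal_url] = cfg.name
```

In practice the variables would be populated on the orchestration machine from a secrets store rather than typed into scripts.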

Now let's consider a simple use case that needs to be promoted in an automated way:

- A web mapping application needs to be:

  - developed and configured in the development environment,

  - validated in a lower (staging) environment, and

  - once validated, promoted to the production environment to go live.

- The application is used for data editing workflows.

- The application is built with Web AppBuilder.

- The application must be shared with a user group.

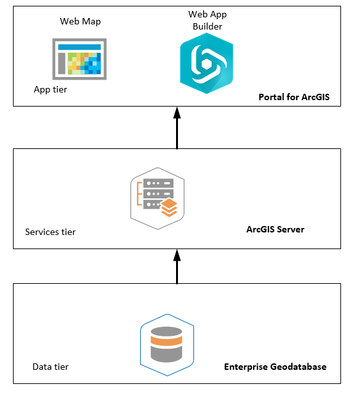

Let's decompose this application into its subcomponents, as shown in Figure 1:

Figure 1: Application subcomponents

To promote this application from the development environment to production successfully, every subcomponent in every tier must be taken into account. An organization that wants to automate this process needs automation for all tiers. Let's walk through the implementation workflow:
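Because each tier depends on the one below it, the promotion order can be derived from the dependencies between components. The following is a minimal sketch using Python's standard `graphlib` module (Python 3.9+); the component labels are illustrative and mirror the tiers of this use case, including sharing with the user group.

```python
from graphlib import TopologicalSorter

# Each component maps to the set of components it depends on.
# The labels are illustrative, not ArcGIS identifiers.
DEPENDENCIES = {
    "data model (enterprise geodatabase)": set(),
    "feature service (ArcGIS Server)": {"data model (enterprise geodatabase)"},
    "web map (portal item)": {"feature service (ArcGIS Server)"},
    "web mapping application (portal item)": {"web map (portal item)"},
    "group sharing": {"web mapping application (portal item)"},
}


def promotion_order(dependencies):
    """Return components ordered so every dependency is promoted before its dependents."""
    return list(TopologicalSorter(dependencies).static_order())


for step, component in enumerate(promotion_order(DEPENDENCIES), start=1):
    print(f"{step}. {component}")
```

Expressing the order as data rather than hard-coding it makes it easier to add components later (for example a second web map) without reordering scripts by hand.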

- Esri provides APIs to automate content promotion, including:

  - ArcGIS API for Python: a Python API that wraps the REST API available with Portal for ArcGIS. It can be used to automate the app tier.

  - ArcPy: a Python site package installed with ArcGIS Pro and ArcGIS Server. It is used to perform database operations and to publish non-hosted services.

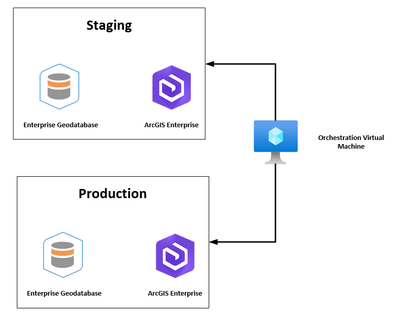

- Let's consider a simple scenario for automating content promotion, shown in Figure 2. Assume the current deployment has two environments: staging and production.

Figure 2: Automated Content promotion scenario

- The two environments (staging and production) are completely isolated. They are linked only through an orchestration virtual machine (the orchestration box), which should have the following components installed:

  - ArcGIS Pro (or at least a licensed ArcGIS Server) to provide ArcPy, which automates data promotion operations and publishes non-hosted services.

  - ArcGIS API for Python to automate all app tier operations. The API is generally installed with ArcGIS Pro (and ArcGIS Server), but the latest version can also be installed using conda.
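Before running any promotion scripts, it can help to confirm that the orchestration box actually provides both components. This small sketch uses `importlib.util.find_spec`, which reports whether a package can be imported without importing it; `arcpy` and `arcgis` are the import names of ArcPy and ArcGIS API for Python, while `check_orchestration_box` is a hypothetical helper name.

```python
import importlib.util

# Import names of the components the orchestration box is expected to provide.
REQUIRED_PACKAGES = {
    "arcpy": "ArcPy (ArcGIS Pro or ArcGIS Server)",
    "arcgis": "ArcGIS API for Python",
}


def check_orchestration_box(required=REQUIRED_PACKAGES):
    """Return {import_name: True/False} depending on whether each package is installed."""
    return {name: importlib.util.find_spec(name) is not None for name in required}


if __name__ == "__main__":
    for name, found in check_orchestration_box().items():
        status = "found" if found else "MISSING"
        print(f"{REQUIRED_PACKAGES[name]}: {status}")
```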

- Once both environments are up and running and the orchestration box is deployed and configured properly, let's talk about the application components:

- Data tier: Developers work on the data in the development environment. Data-oriented tasks can include:

  - Design the data model

  - Create feature classes

  - Create tables

  - Create indexes

  - Add other functionality if required (relationship classes, domains, etc.)

Once all requirements are implemented in the lower environment, the data model can be delivered as a file geodatabase, which becomes the data exchange format between all environments. ArcPy can load the data model from this single file geodatabase source into each environment's enterprise geodatabase, ensuring that the data stays in sync and that no discrepancies are introduced by manual intervention. Tools from the Data Management toolbox in ArcPy can be used for this scenario. Some examples are:

- `arcpy.management.CopyFeatures`

- `arcpy.management.AddIndex`

- `arcpy.management.AddRelate`
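As an illustration, loading the data model from the file geodatabase into each environment could be organized as a reviewable plan followed by an ArcPy step. This is a sketch under assumptions: the `.gdb` path, feature class names, and `.sde` connection file names are hypothetical, and only `arcpy.management.CopyFeatures` is called, so indexes, domains, and relationship classes would still need their own tools. The plan builder runs without ArcPy; `run_copy_plan` needs ArcGIS Pro or ArcGIS Server.

```python
import os

# Hypothetical inputs: replace the file geodatabase path, feature class names,
# and per-environment .sde connection files with your own.


def build_copy_plan(source_gdb, feature_classes, target_connections):
    """Pair each feature class in the file geodatabase with its target in every environment.

    target_connections maps an environment name to that environment's .sde
    connection file (each connecting as that environment's own data owner).
    """
    plan = []
    for env_name, sde_file in target_connections.items():
        for fc in feature_classes:
            plan.append((env_name, os.path.join(source_gdb, fc), os.path.join(sde_file, fc)))
    return plan


def run_copy_plan(plan):
    """Execute the plan with the Copy Features tool (requires ArcPy)."""
    import arcpy  # imported lazily so the plan can be built and reviewed without ArcPy

    for env_name, source, target in plan:
        print(f"[{env_name}] {source} -> {target}")
        # Copy Features fails if the target exists unless arcpy.env.overwriteOutput is True
        arcpy.management.CopyFeatures(source, target)
```

Separating the plan from its execution lets the list of copies be reviewed (or logged) before anything touches an enterprise geodatabase.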

- Services tier: ArcGIS Pro is used to read data from the database, add symbology, and create definition queries. When the data is ready, it is published to ArcGIS Server as a cartographic (map) service. Publishing can be done in two ways:

  - Manual publishing: This is done through ArcGIS Pro, which connects to both the database and ArcGIS Server; ArcGIS Server also needs to connect to the database. The publisher configures all required parameters for the service (e.g., enabling the feature access capability for data editing, choosing a dedicated or shared instance, setting the number of instances for a dedicated instance) and then publishes the cartographic service.

  - Using an SD file: Another pattern is to generate an intermediate file called a Service Definition (SD). This is partly a matter of opinion, but from an automation perspective I prefer this method. The publisher delivers an SD file that can be published to all environments. This ensures that the same service configuration is delivered everywhere, with no discrepancies from manual intervention. SD file generation can also be automated, but the file can simply be generated from ArcGIS Pro.
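To confirm that the same SD file really reaches every environment, one option is to record a checksum of the SD file when it is validated in staging and compare it before publishing to production. A minimal sketch using SHA-256 from Python's standard `hashlib`; `sd_checksum` and `verify_same_artifact` are hypothetical helper names.

```python
import hashlib
from pathlib import Path


def sd_checksum(sd_path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a Service Definition (.sd) file, read in chunks."""
    digest = hashlib.sha256()
    with open(sd_path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_same_artifact(sd_path, expected_checksum):
    """Raise ValueError if the SD file differs from the one validated in staging."""
    actual = sd_checksum(sd_path)
    if actual != expected_checksum:
        raise ValueError(f"{Path(sd_path).name} changed since validation: {actual}")
    return actual
```

The checksum recorded at staging time can be stored next to the SD file (or in the promotion log) and checked by the production publishing step.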

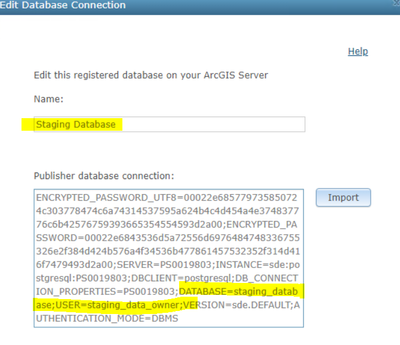

ArcGIS Server should be configured as follows:

- Lowest environment: Configure ArcGIS Server with a database connection that corresponds to that environment.

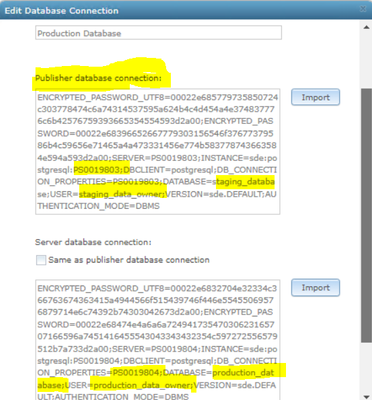

In this example, the staging database is named staging_database and uses the database user staging_data_owner.

The SD file is created from ArcGIS Pro using the same database connection.

The following ArcPy code can be used for publishing:

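```python
import arcpy

# Service Definition (.sd) file created in ArcGIS Pro
inSdFile = r"C:\install\socal_fire_infra.sd"

# ArcGIS Server connection file (.ags) for the staging environment
inServer = r"C:\install\staging_connection.ags"

# Upload the Service Definition and publish the service
arcpy.server.UploadServiceDefinition(inSdFile, inServer)
```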

ArcPy needs two input parameters: the ArcGIS Server connection file and the Service Definition created in ArcGIS Pro.
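Because the SD file and the connection file are the only inputs, the same call can be repeated for each environment. The sketch below assumes one `.ags` connection file per environment and accepts the upload function as a parameter, so the loop can be dry-run without ArcPy; `publish_to_environments` is a hypothetical helper. Following the workflow in this post, production should only be passed in after the staging service has been validated.

```python
def publish_to_environments(sd_file, server_connections, upload=None):
    """Upload the same Service Definition to each environment's ArcGIS Server.

    server_connections maps an environment name to its .ags connection file.
    `upload` defaults to arcpy.server.UploadServiceDefinition; passing a different
    callable (e.g., a recorder) allows a dry run on a machine without ArcPy.
    """
    if upload is None:
        import arcpy  # requires ArcGIS Pro or ArcGIS Server on the orchestration box

        upload = arcpy.server.UploadServiceDefinition

    published = []
    for env_name, ags_file in server_connections.items():
        print(f"Publishing {sd_file} to {env_name} via {ags_file}")
        upload(sd_file, ags_file)
        published.append(env_name)
    return published


# Example usage on the orchestration box (paths taken from the staging example):
# publish_to_environments(r"C:\install\socal_fire_infra.sd",
#                         {"staging": r"C:\install\staging_connection.ags"})
```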

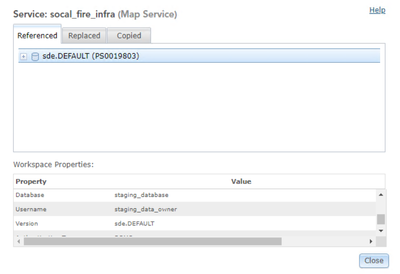

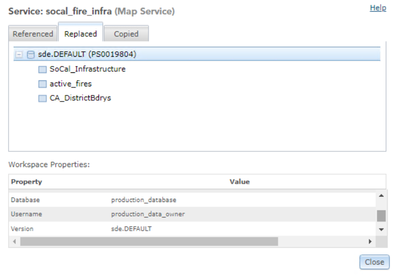

In the end, the service is published in the staging environment, in the root folder of ArcGIS Server.

The service is published with its data referenced from the registered database.

- Production environment: Configure ArcGIS Server as follows:

In the registered database, the publisher database connection should use the staging data owner's credentials, while the server database connection should use the production data owner's credentials.

Now, running the same ArcPy code against the production ArcGIS Server connection file publishes the service in the production environment. Because of this configuration, the data source is replaced during publishing: the staging connection is swapped for the production one.

- App tier: Once the data and services have been promoted, the next part is the app tier. There are two parts of the app tier to take care of:

- Web map: The web map can be created and configured in the lower environment. Once created, it can be cloned from the lower environment to production using ArcGIS API for Python. The code can be executed from the orchestration machine.

```python
from arcgis.gis import GIS

# Example credentials only; avoid hard-coding passwords in real scripts
sourcePortal = 'https://staging.esri.com/portal'
sourceUserName = 'portaladmin'
sourcePassWord = 'Source2018'

targetPortal = 'https://prod.esri.com/portal'
targetUserName = 'portaladmin'
targetPassWord = 'Target2018'

# verify_cert=False skips SSL certificate verification (e.g., self-signed certificates)
source = GIS(sourcePortal, sourceUserName, sourcePassWord, verify_cert=False)
print("Connected to Source Portal " + sourcePortal + " as " + sourceUserName)

target = GIS(targetPortal, targetUserName, targetPassWord, verify_cert=False)
print("Connected to Target Portal " + targetPortal + " as " + targetUserName)

# Map the source feature service item ID to the target feature service item ID
item_mapping = {}
item_mapping['d4038035da9c4850980d0eec723d5fc1'] = '6481d82b0597403ab31c2a68487e473d'

# Web map item in the source portal
source_item = source.content.get('d5de71d8fc69496c8359fde9b21b0a3f')

# Clone the web map; services are remapped rather than copied (copy_data=False)
target_item = target.content.clone_items(items=[source_item],
                                         owner=targetUserName,
                                         item_mapping=item_mapping,
                                         copy_data=False,
                                         search_existing_items=True,
                                         preserve_item_id=True)

print('Item is cloned with ID: ' + target_item[0].id)
```

We need three IDs to make this work:

- The item ID of the non-hosted feature service in the source environment, which in this example is 'd4038035da9c4850980d0eec723d5fc1'

- The item ID of the non-hosted feature service in the target environment, which in this example is '6481d82b0597403ab31c2a68487e473d'

- The item ID of the web map in the source environment, which in this example is 'd5de71d8fc69496c8359fde9b21b0a3f'

With preserve_item_id=True, the web map in the target portal is created with the same item ID as in the source environment.
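The IDs in `item_mapping` have to match exactly; a stray space picked up while copying an ID could keep the mapping from matching. Below is a small sketch that builds `item_mapping` from ID pairs and validates them, assuming portal item IDs are 32-character lowercase hexadecimal strings (as all IDs in this example are); `make_item_mapping` is a hypothetical helper.

```python
import re

# Assumed item ID format: 32 lowercase hexadecimal characters
ITEM_ID = re.compile(r"^[0-9a-f]{32}$")


def make_item_mapping(pairs):
    """Build a clone_items item_mapping dict from (source_id, target_id) pairs.

    Surrounding whitespace is stripped, and each ID is checked against the
    assumed format so typos fail early instead of during cloning.
    """
    mapping = {}
    for source_id, target_id in pairs:
        source_id, target_id = source_id.strip(), target_id.strip()
        for item_id in (source_id, target_id):
            if not ITEM_ID.match(item_id):
                raise ValueError(f"Not a valid item ID: {item_id!r}")
        mapping[source_id] = target_id
    return mapping


# Example: the feature service mapping used in the web map script
item_mapping = make_item_mapping(
    [("d4038035da9c4850980d0eec723d5fc1", "6481d82b0597403ab31c2a68487e473d")]
)
```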

- Web mapping application: The web mapping application can be created and configured in the lower environment. Once created, it can be cloned from the lower environment to production using ArcGIS API for Python. The code can be executed from the orchestration machine.

```python
from arcgis.gis import GIS

# Example credentials only; avoid hard-coding passwords in real scripts
sourcePortal = 'https://staging.esri.com/portal'
sourceUserName = 'portaladmin'
sourcePassWord = 'Source2018'

targetPortal = 'https://prod.esri.com/portal'
targetUserName = 'portaladmin'
targetPassWord = 'Target2018'

# verify_cert=False skips SSL certificate verification (e.g., self-signed certificates)
source = GIS(sourcePortal, sourceUserName, sourcePassWord, verify_cert=False)
print("Connected to Source Portal " + sourcePortal + " as " + sourceUserName)

target = GIS(targetPortal, targetUserName, targetPassWord, verify_cert=False)
print("Connected to Target Portal " + targetPortal + " as " + targetUserName)

# Map the source feature service item ID to the target feature service item ID
item_mapping = {}
item_mapping['d4038035da9c4850980d0eec723d5fc1'] = '6481d82b0597403ab31c2a68487e473d'

# Web mapping application item in the source portal
source_item = source.content.get('1e2d62518d8940dcaf5a077cf8f4e6db')

# search_existing_items=True reuses items (such as the web map) already cloned to the target
target_item = target.content.clone_items(items=[source_item],
                                         owner=targetUserName,
                                         item_mapping=item_mapping,
                                         copy_data=False,
                                         search_existing_items=True)

print('Item is cloned with ID: ' + target_item[0].id)
```

We need three IDs to make this work:

- The item ID of the non-hosted feature service in the source environment, which in this example is 'd4038035da9c4850980d0eec723d5fc1'

- The item ID of the non-hosted feature service in the target environment, which in this example is '6481d82b0597403ab31c2a68487e473d'

- The item ID of the web mapping application in the source environment, which in this example is '1e2d62518d8940dcaf5a077cf8f4e6db'
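The web map and web mapping application scripts differ only in the item ID and `preserve_item_id`, so the shared steps can be factored into one function. This is a sketch, not an Esri-provided helper: `promote_item` is a hypothetical name, and it assumes `source` and `target` are connected `arcgis.gis.GIS` objects created as in the scripts above. It passes the same `clone_items` arguments those scripts use.

```python
def promote_item(source, target, item_id, item_mapping, owner, preserve_item_id=False):
    """Clone one portal item from `source` to `target` and return the new item IDs.

    Services are remapped through item_mapping instead of being copied
    (copy_data=False), and items already cloned to the target are reused
    (search_existing_items=True), as in the scripts above.
    """
    source_item = source.content.get(item_id)
    if source_item is None:
        raise LookupError(f"Item {item_id} not found in the source portal")
    cloned = target.content.clone_items(
        items=[source_item],
        owner=owner,
        item_mapping=item_mapping,
        copy_data=False,
        search_existing_items=True,
        preserve_item_id=preserve_item_id,
    )
    return [item.id for item in cloned]


# Example usage, promoting the web map before the application that uses it:
# promote_item(source, target, 'd5de71d8fc69496c8359fde9b21b0a3f', item_mapping,
#              targetUserName, preserve_item_id=True)
# promote_item(source, target, '1e2d62518d8940dcaf5a077cf8f4e6db', item_mapping,
#              targetUserName)
```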

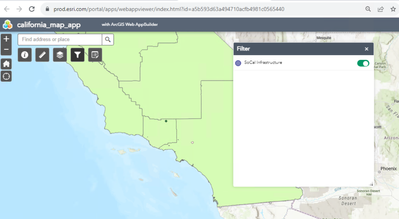

Congratulations! You have promoted the web mapping application from staging to production with an automation script, and the application is fully working, with all its widgets, in the production environment.

Takeaways:

- We have demonstrated fully automated content promotion for one workflow.

- You may face other scenarios that are more challenging and need additional or different validation steps.

- Esri provides the tools to implement these content promotion workflows; implementing them requires understanding the business objectives, the underlying requirements, and the time needed.

This blog focuses on a web mapping application. Since the future lies with ArcGIS Experience Builder, the next blog in this automation series will focus on Experience Builder.
