Some time ago I posted an article comparing the performance of older Esri SDKs (ArcObjects: .NET, C++) with newer ones (Pro, Enterprise, Runtime: .NET). The Pro and Enterprise SDKs barely performed better than their ArcObjects .NET counterparts: instead of being re-engineered from scratch, they obviously leveraged the older ArcObjects technology, and were bogged down by the same COM interop performance issues. Runtime, on the other hand, proved to be a true innovation and far outperformed all of the other SDKs.

As a fun exercise, I decided to compare the three flavors of Runtime 100.12 available for Windows desktop (.NET, Java, Qt). Again, I used the same purely computational benchmark: creating convex hulls for 100,000 random polygons. I built all three examples as standalone console applications (release builds), and executed them outside of their respective IDEs. I ran each benchmark five times, and picked the best time for each.
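For readers who want a feel for the shape of such a test, here is a minimal Java sketch of a best-of-five timing harness. It is not the attached benchmark code and does not call Runtime at all: the `convexHull` method is a plain monotone-chain stand-in for the SDK's geometry engine, and the class name, the 20-vertex polygon size, the unit-square coordinates, and the fixed seed are all assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Random;

public class HullBenchmark {
    // Cross product of (a - o) and (b - o); positive for a counter-clockwise turn.
    static double cross(double[] o, double[] a, double[] b) {
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0]);
    }

    // Stand-in convex hull (Andrew's monotone chain), NOT the Runtime geometry engine.
    public static List<double[]> convexHull(double[][] pts) {
        double[][] p = pts.clone();
        Arrays.sort(p, (u, v) -> u[0] != v[0]
                ? Double.compare(u[0], v[0]) : Double.compare(u[1], v[1]));
        int n = p.length;
        if (n < 3) return new ArrayList<>(Arrays.asList(p));
        double[][] h = new double[2 * n][];
        int k = 0;
        for (int i = 0; i < n; i++) {                 // lower hull
            while (k >= 2 && cross(h[k - 2], h[k - 1], p[i]) <= 0) k--;
            h[k++] = p[i];
        }
        for (int i = n - 2, t = k + 1; i >= 0; i--) { // upper hull
            while (k >= t && cross(h[k - 2], h[k - 1], p[i]) <= 0) k--;
            h[k++] = p[i];
        }
        // The last point repeats the first one, so drop it.
        return new ArrayList<>(Arrays.asList(h).subList(0, k - 1));
    }

    // Hypothetical generator: a "random polygon" is just random vertices in the unit square.
    public static double[][] randomPolygon(Random rng, int vertices) {
        double[][] pts = new double[vertices][];
        for (int i = 0; i < vertices; i++) {
            pts[i] = new double[] { rng.nextDouble(), rng.nextDouble() };
        }
        return pts;
    }

    // Runs the task `runs` times and returns the best (minimum) wall-clock time in seconds.
    public static double bestOf(int runs, Runnable task) {
        long best = Long.MAX_VALUE;
        for (int r = 0; r < runs; r++) {
            long start = System.nanoTime();
            task.run();
            best = Math.min(best, System.nanoTime() - start);
        }
        return best / 1e9;
    }

    public static void main(String[] args) {
        final int polygons = 100_000;
        final int vertices = 20; // assumption: the post does not state the vertex count
        double secs = bestOf(5, () -> {
            Random rng = new Random(42); // same seed each run, so every run does identical work
            long total = 0;
            for (int i = 0; i < polygons; i++) {
                total += convexHull(randomPolygon(rng, vertices)).size();
            }
            // Use the result so the JIT cannot discard the loop.
            if (total == 0) throw new IllegalStateException("no hulls computed");
        });
        System.out.printf("best of 5: %.3f s%n", secs);
    }
}
```

Taking the minimum of several runs, as described above, filters out one-off disturbances such as JIT warm-up or background activity. Timings from this stand-in say nothing about Runtime itself, since the point of the original benchmark is the cost of many fine-grained calls into the SDK's libraries.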

Here's the benchmark comparison:

| Runtime SDK | Execution time (seconds) |
| --- | --- |
| .NET | 16 |
| Java | 19 |
| Qt (C++) | 33 |

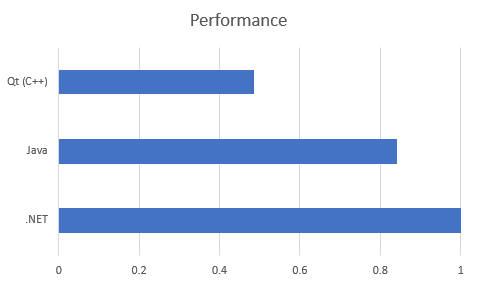

And here's the normalized performance index:
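One way such an index could be derived from the times in the table is sketched below. It assumes the index is the fastest time divided by each SDK's time, so the fastest SDK scores 1.0; the post does not state the normalization actually used, and the class and method names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class NormalizedIndex {
    // Assumed definition: index = fastest time / this time (1.0 = fastest, lower = slower).
    public static Map<String, Double> normalize(Map<String, Double> seconds) {
        double best = seconds.values().stream()
                .mapToDouble(Double::doubleValue).min().getAsDouble();
        Map<String, Double> index = new LinkedHashMap<>();
        for (Map.Entry<String, Double> e : seconds.entrySet()) {
            index.put(e.getKey(), best / e.getValue());
        }
        return index;
    }

    public static void main(String[] args) {
        // Execution times taken from the table above.
        Map<String, Double> seconds = new LinkedHashMap<>();
        seconds.put(".NET", 16.0);
        seconds.put("Java", 19.0);
        seconds.put("Qt (C++)", 33.0);
        normalize(seconds).forEach((sdk, idx) ->
                System.out.printf("%-10s %.2f%n", sdk, idx));
    }
}
```

Under that assumption, the table's times work out to roughly 1.00 for .NET, 0.84 for Java, and 0.48 for Qt.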

I expected .NET and Java to be pretty much neck-and-neck, since no COM interop is involved in the benchmark (COM interop performance is much worse in Java than in .NET). Qt was a bit of a surprise, although I've dabbled with Runtime for Qt in the past and noticed that fine-grained code seems not to be as fast as it could be. While C++ is my favorite programming language, and I admire Qt's "write once, deploy many" approach, it's obvious that the framework carries some baggage.

[See attachment for the code.]

Update:

This has certainly been a fascinating topic, and there's been some good participation and feedback. While the original purpose of the exercise was to compare the relative interop performance of the various flavors of Runtime when making a large number of fine-grained calls to the common libraries, it has since been demonstrated that tweaks to the logic can make a significant difference in performance. And in one case so far, the exercise has led Esri to discover and fix a bug. Kudos to everyone who participated.

Benchmarks_Round2_src.zip