Classify Pixels Deep Learning Package - Sentinel 2

Hi all,

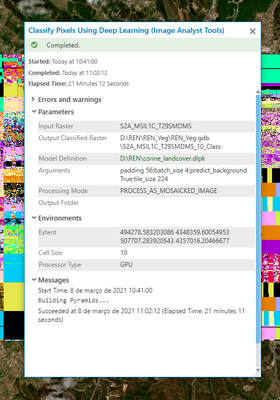

I've been trying to use the deep learning package corine_landcover.dlpk, available in Esri Living Atlas. I'm getting unexpected results from the deep learning process, with no warnings or errors reported by ArcGIS Pro. I've tested two approaches:

- Scenario 1: raster mosaic, Sentinel-2 type, with the all-bands template, using SRS WGS 1984 UTM Zone 29N.

- Scenario 2: raster dataset, Sentinel True Colour, exported as R: Band 4; G: Band 3; B: Band 2, SRS Web Mercator.

In Scenario 1, my first approach, I experimented with several batch_size values: 4, 8 and 16. I selected Mosaicked Image and Process All Rasters Single. The batch_size parameter did not affect the results. The processor type (GPU or CPU) did affect the results. Neither result makes sense to me.

All processing runs used the visible map area as the processing extent for inferencing.

Starting point:

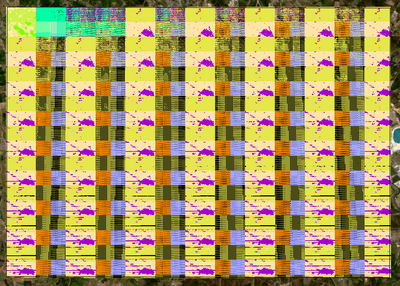

Scenario 1 - GPU - Mosaicked Image / Batch size 4/GPU:

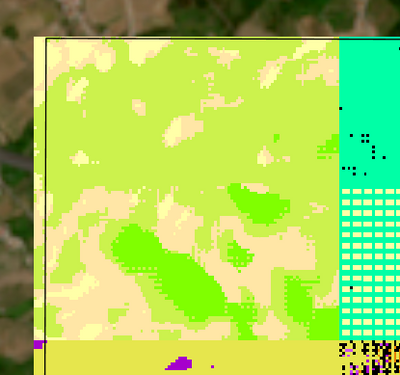

Scenario 1 - CPU - Mosaicked Image / Batch size 4/CPU:

Scenario 2 gave the same results on both GPU and CPU.

For the GPU case I have no clue what the issue is. I have ArcGIS Pro Advanced 2.7.1, the Image Analyst extension and the Deep Learning Framework (with all the requirements, including Visual Studio). My GPU is an RTX 3070 with 8 GB, which I consider powerful enough to do the math. No errors or warnings are reported during inference.

For the CPU, the results are clearly not adequate. The test area is about 145.6716 km². Most of it is classified as Inland Waters, which doesn't make any sense, as you can see in the Sentinel-2 scene above. I know the model is a U-Net and should be run on the GPU, but nevertheless I thought the SRS (WGS 1984 UTM Zone 29N) could be the issue.

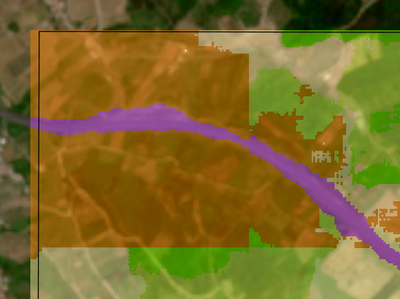

I tested scenario 2 again; the image below is from GPU processing.

CPU mode gave about the same result.

I understand that the PyTorch version used in ArcGIS Pro supports only CUDA 10.2, not GPUs that need CUDA 11.x. I've seen similar issues in other threads, with different graphics cards (Turing and Pascal), where this type of problem happens in pixel classification using deep learning.
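The CUDA question can be checked mechanically. A CUDA binary compiled for compute capability X.y (SASS, listed as `sm_Xy`) only runs on GPUs with the same major version X and a minor version of at least y, while embedded PTX (`compute_Xy`) can be JIT-compiled by the driver for any GPU of equal or higher capability. The sketch below applies those two rules. It assumes PyTorch-style target names; newer PyTorch releases report them through `torch.cuda.get_arch_list()` and the GPU's value through `torch.cuda.get_device_capability()`, but whether the PyTorch build shipped with ArcGIS Pro 2.7 exposes `get_arch_list()` is not confirmed here. An RTX 3070 reports compute capability 8.6.

```python
def parse_target(target):
    """Split a target name such as 'sm_86' into ('sm', 8, 6)."""
    kind, digits = target.split("_")
    return kind, int(digits[:-1]), int(digits[-1])


def can_run(device_capability, arch_list):
    """Return True if any compiled target can run on a GPU with this (major, minor) capability."""
    major, minor = device_capability
    for target in arch_list:
        kind, t_major, t_minor = parse_target(target)
        if kind == "sm" and t_major == major and t_minor <= minor:
            # SASS is binary compatible only within the same major version.
            return True
        if kind == "compute" and (t_major, t_minor) <= (major, minor):
            # PTX can be JIT-compiled by the driver for equal or newer GPUs.
            return True
    return False


# Hypothetical inputs: RTX 3070 (compute capability 8.6) and an illustrative
# CUDA 10.2-era target list with no PTX; not the actual ArcGIS Pro build.
print(can_run((8, 6), ["sm_37", "sm_50", "sm_60", "sm_70", "sm_75"]))  # False
```

Adding a PTX entry such as `"compute_75"` to that hypothetical list would make `can_run` return `True` through JIT compilation. A `True` result only means the kernels can load; it does not rule out other causes of the results in this thread.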

I don't understand the CPU results. I have an i5-10600K CPU with 32 GB RAM.

Any comments on this?

Hi @TiagoCarvalho1979,

I would recommend that you:

1. Use the Manage Sentinel-2 imagery (arcgis.com) tool to create your mosaic layer. It expects Sentinel-2 Level-1C data.

2. Change the processing template of the newly created layer to None (right-click the layer > Properties > Processing template).

3. Try using the layer with the model.

Let me know if you face any issues.

Hi @Anonymous User

With GPU processing I got the same result.

To use the tool I had to install ArcMap. My understanding is that ArcGIS Pro can already create a mosaic with a Sentinel-2 template and import the data, but I followed all the steps just as a precaution.

The first import option in the tool (import 10 m bands) didn't work with the deep learning model, because the model tries to get the index bands for inference and fails if not all bands are present. So I had to select the multispectral option in the tool.

With the multispectral option, the model ran without errors.

But as you can see above, the results are still the same.

Any clues?
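The band problem described in this post can be caught before running inference. The sketch lists the 13 Sentinel-2 MSI bands delivered in Level-1C products and reports which ones an input raster lacks. It assumes the model needs all of them, in this order (as it would if it reads bands by position), and that band names follow the `B01` to `B12` convention; the band list corine_landcover.dlpk actually expects should be confirmed from the model's own documentation.

```python
# Sentinel-2 MSI bands in a Level-1C product (13 bands, including B8A and B10).
SENTINEL2_L1C_BANDS = [
    "B01", "B02", "B03", "B04", "B05", "B06", "B07",
    "B08", "B8A", "B09", "B10", "B11", "B12",
]


def missing_bands(input_bands, required=SENTINEL2_L1C_BANDS):
    """Return the required bands that are absent from the input, in required order."""
    present = set(input_bands)
    return [band for band in required if band not in present]


def band_order_matches(input_bands, required=SENTINEL2_L1C_BANDS):
    """True only if the input has exactly the required bands in the same order."""
    return list(input_bands) == list(required)


scenario_2 = ["B04", "B03", "B02"]  # the R/G/B export from Scenario 2
print(missing_bands(scenario_2))
```

For the Scenario 2 export, `missing_bands` reports 10 missing bands, so a true-colour raster cannot supply what a model expecting all bands needs.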

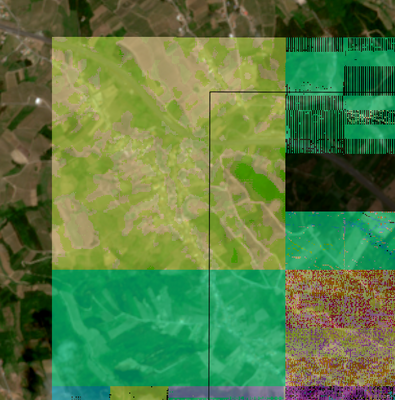

@Anonymous User one thing: not all of the processing was wrong. See the upper-left corner in the image.

Could it be the tiles? Any thoughts on this?

Thanks
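To look at the tile question, it helps to estimate how many tiles and batches an extent produces. The test area of 145.6716 km² at 10 m resolution is 145,671,600 m² / 100 m² = 1,456,716 pixels, roughly 1207 × 1207 pixels if the AoI were square (its shape is not stated). The 256-pixel tile size and the rule that each tile keeps only its central `tile_px - 2 * padding_px` pixels are assumptions for illustration; the real tile size comes from the model definition.

```python
import math


def pixel_count(area_km2, resolution_m):
    """Number of square pixels of the given resolution covering an area."""
    return round(area_km2 * 1_000_000 / resolution_m ** 2)


def tile_grid(width_px, height_px, tile_px=256, padding_px=0):
    """(columns, rows) of tiles, assuming each tile contributes tile_px - 2 * padding_px pixels."""
    stride = tile_px - 2 * padding_px
    if stride <= 0:
        raise ValueError("padding is too large for the tile size")
    return math.ceil(width_px / stride), math.ceil(height_px / stride)


def batch_count(n_tiles, batch_size):
    """Number of inference batches needed to process n_tiles."""
    return math.ceil(n_tiles / batch_size)


pixels = pixel_count(145.6716, 10)
side = math.ceil(math.sqrt(pixels))  # hypothetical square AoI
cols, rows = tile_grid(side, side)
print(pixels, side, cols * rows, batch_count(cols * rows, 4))
```

Under these assumptions the square extent gives 5 × 5 = 25 tiles with no padding (7 batches at batch size 4, 2 at 16, 25 at 1) or 10 × 10 = 100 tiles with 64 px padding. Comparing the size of the correctly classified upper-left area with one tile, or one batch of tiles, is a cheap way to test whether the failure lines up with tile boundaries.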

I also did a CPU-based inference. The results are less granular, even though I limited the inference to a polygon of a predefined AoI. The results:

Now, for the upper-left corner from my previous post, here is a comparison between GPU and CPU processing:

Hi @TiagoCarvalho1979,

This tool here https://www.arcgis.com/home/item.html?id=e6e1f20cb0374d28a6eed24f5c2ff51b works in ArcGIS Pro too.

Then you need to use the multispectral layer with the processing template set to None.

If you think it works well on the CPU, the problem might be related to the GPU. I recommend setting the batch size to 1 and running on the GPU, just to test it.

Thanks

Hi @Anonymous User,

The link above is not working:

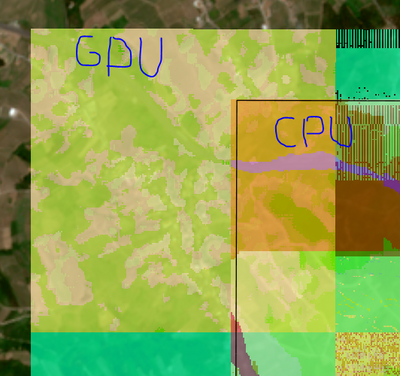

I tested with batch size = 1. The result is below:

Again, the upper-left corner worked:

My issue with CPU processing is that the output is less granular. See below the same area from CPU inference:

The classification is not the same.
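To put a number on how different the two classifications are, the GPU and CPU outputs can be compared pixel by pixel. The sketch assumes both outputs are already read into same-shaped 2-D lists of class codes on the same grid (for example with `arcpy.RasterToNumPyArray(...).tolist()`); it returns the overall agreement and a count of (GPU class, CPU class) pairs, which shows which classes are being swapped. The class codes in the example are hypothetical.

```python
from collections import Counter


def compare_classifications(gpu, cpu):
    """Compare two same-shaped 2-D grids of class codes.

    Returns (agreement_ratio, pair_counts), where pair_counts maps
    (gpu_class, cpu_class) to the number of pixels with that combination.
    """
    if len(gpu) != len(cpu) or any(len(a) != len(b) for a, b in zip(gpu, cpu)):
        raise ValueError("both rasters must have the same shape")
    pairs = Counter()
    for row_gpu, row_cpu in zip(gpu, cpu):
        pairs.update(zip(row_gpu, row_cpu))  # count each (gpu, cpu) class pair
    total = sum(pairs.values())
    if total == 0:
        raise ValueError("rasters are empty")
    agree = sum(n for (g, c), n in pairs.items() if g == c)
    return agree / total, pairs


# Hypothetical 2 x 3 outputs with made-up class codes.
ratio, pairs = compare_classifications([[1, 1, 2], [2, 3, 3]], [[1, 2, 2], [2, 3, 1]])
print(round(ratio, 3), pairs.most_common(3))
```

A large count for pairs that map a land class to Inland Waters would match the CPU behaviour described at the start of the thread.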

@Anonymous User, do you think that, since PyTorch 1.4.0 does not support CUDA 11.x, this issue is related to the more recent Ampere architecture GPUs?

Thank you for your time.

Hi @TiagoCarvalho1979,

I am not sure about your GPU-related question because I have not tested with that specific GPU. Regarding granularity, you can check the resolution of the raster. I have also fixed the link; it is the same tool, and it works with ArcGIS Pro too. You don't need ArcMap for it if you are already using ArcGIS Pro.

Thanks

Hi @Anonymous User

Both rasters have the same pixel size, 10 meters. So my conclusion is that it comes down to how the built-in model libraries handle the data in the different cases. Since I don't have another GPU (Turing or Pascal) available, I cannot test the model on another type of GPU.

Hi all,

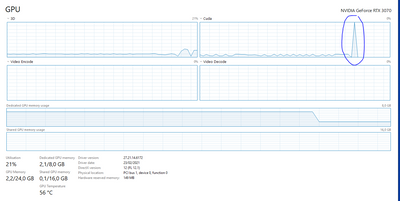

New feedback for the discussion. What happens is something like this:

In the example above the GPU is working. I was checking whether the problem was related to the predefined processing extent. Looking at the CUDA chart, the GPU is processing without any memory or GPU problems. Then a spike happens in CUDA and the processing breaks, producing the results shown above. This is strange behaviour. I use my GPU for 3D rendering and analysis and nothing like this happens, and all my drivers are up to date.
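To record the CUDA spike outside ArcGIS Pro's chart, `nvidia-smi` can log GPU memory once per second while inference runs, for example `nvidia-smi --query-gpu=timestamp,memory.used,memory.total,utilization.gpu --format=csv,noheader,nounits -l 1 > gpu_log.csv`. The sketch parses lines in that format (memory in MiB, utilization in %) and flags jumps in used memory between consecutive samples; the 1024 MiB threshold is an arbitrary assumption, and the sample lines below are invented for illustration.

```python
def parse_gpu_log(lines):
    """Parse nvidia-smi CSV lines: timestamp, memory.used, memory.total, utilization.gpu."""
    samples = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        timestamp, used, total, util = (field.strip() for field in line.split(","))
        samples.append((timestamp, int(used), int(total), int(util)))
    return samples


def find_spikes(samples, jump_mib=1024):
    """Timestamps where memory.used rose by at least jump_mib since the previous sample."""
    return [cur[0] for prev, cur in zip(samples, samples[1:]) if cur[1] - prev[1] >= jump_mib]


def peak_fraction(samples):
    """Highest memory.used / memory.total seen in the log."""
    return max(used / total for _, used, total, _ in samples)


# Invented sample lines in the noheader,nounits format.
log = [
    "2021/03/12 10:15:01.000, 3000, 8192, 40",
    "2021/03/12 10:15:02.000, 3100, 8192, 42",
    "2021/03/12 10:15:03.000, 7900, 8192, 99",
]
samples = parse_gpu_log(log)
print(find_spikes(samples), round(peak_fraction(samples), 3))
```

Lining up the flagged timestamps with the moment the output breaks would show whether the failure coincides with a memory jump.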

Any comments on this?