
Exporting GA Layer to Grid: Cell Size & Accuracy


I've used Geostatistical Analyst cokriging (rainfall + elevation) to create a MAP (Mean Annual Precipitation) surface. The GA layer produced by the analysis can't be used in any of the other ArcGIS tools for further processing; it first has to be converted to a grid with the GA Layer to Grid tool.

The problem is that when I try to convert my GA layer to a grid with a cell size of 30m, based on the resolution of the DEM, the tool runs forever without completing. I left it running for 48 hrs without any sign that it was about to finish. There seems to be no way of telling what the current cell size of the GA layer is, which would help me understand why the conversion is not working. I don't want to use a coarser cell size, as this would reduce the accuracy of the predicted surface, and I have spent considerable time getting the input variables and models set up correctly. Any help as to why GA Layer to Grid is unable to export this grid (30m cell size) would be appreciated.

It would help going forward if the GA layer could be used as input to other ArcGIS tools, to avoid having to convert the results to a grid unnecessarily.

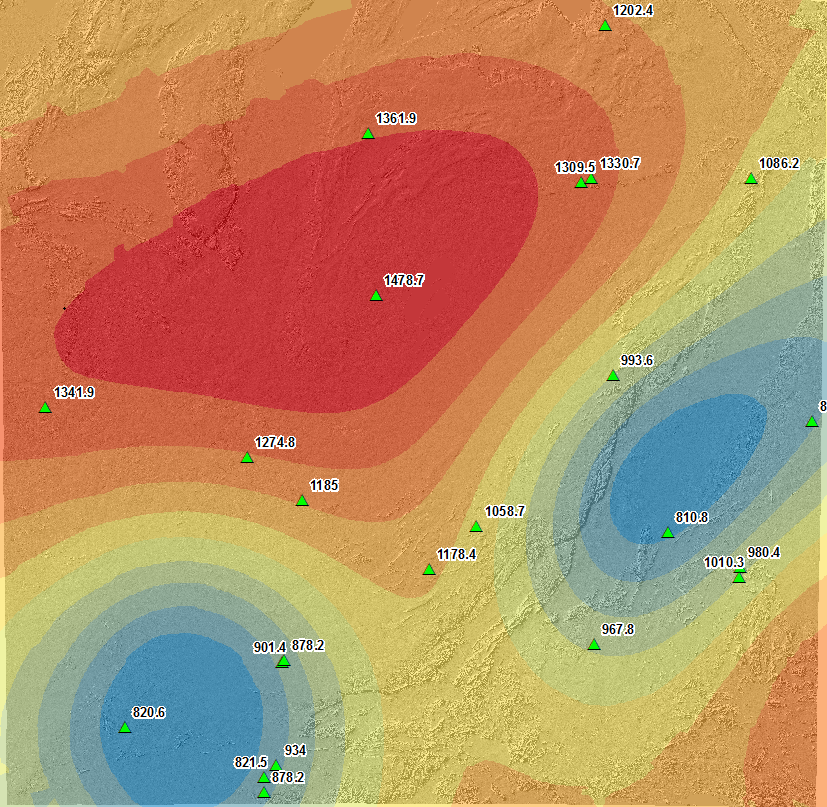

Geostatistical Layer: Cokriging Result

I've attached my geostatistical model xml file.


Peter

How many columns and rows will the output raster have at 30m?

How long does it take when you use the default cell size?

Have you set any environments?

Are you using ArcMap or Pro?

Using Task Manager, how many processors are being used?

Regards

-Steve


Hi Steve

Thanks for getting back to me. My answers, in the order of your questions:

- There are 26821 columns and 26161 rows, which equates to 701 664 181 cells.

- 5 min, but the default cell size is 3160 and the accuracy of the result is lost through averaging over the study area.

- None

- ArcMap 10.3.1

- All my cores are being used: X6 2.8 GHz

How is one meant to maintain the accuracy of the output GA layer without having to use a coarser cell size when exporting the result to a raster? Is there a way of determining the cell size of the existing GA layer, so that the accuracy is maintained in the raster produced by the conversion?

Thanks for helping with the following.
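As a quick sanity check on the figures quoted in this reply, the cell count and the ground extent it implies can be reproduced with a few lines of Python. This is only arithmetic on the numbers given above; the assumption that the 30m cell size is in metres (matching the DEM) is mine, and the variable names are illustrative.

```python
# Figures quoted in the reply above.
columns = 26821
rows = 26161
cell_size = 30  # assumed to be metres, matching the 30m DEM

# Total number of cells the output raster would contain.
total_cells = columns * rows

# Ground extent implied by the grid dimensions at this cell size.
width_km = columns * cell_size / 1000.0
height_km = rows * cell_size / 1000.0

print("{:,} cells".format(total_cells))
print("extent: {:.2f} km x {:.2f} km".format(width_km, height_km))
```

The product matches the 701 664 181 cells stated above, so the tool is being asked to make a prediction at roughly 700 million locations.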


And how much memory do you have?


Hi Dan

My notebook has 32 GB of memory.

Regards


I guess I was just trying to figure out whether GA runs as 64-bit and can use all available memory, or whether it is limited to a smaller amount. I can't find any direct references, so perhaps Steve knows.


Peter

From the run with the default cell size you know how long it takes to do 1 pixel; multiply that by 700 million to get an estimate of the time yours will take.
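That estimate can be sketched with the numbers reported earlier in the thread (5 min at a cell size of 3160, target cell size 30). The sketch assumes both cell sizes are in the same units, that run time scales linearly with the number of cells, and that the 5 min contains no fixed start-up overhead; none of this is confirmed behaviour of GA Layer to Grid, and the function name is hypothetical.

```python
def estimate_export_seconds(baseline_seconds, baseline_cell_size, target_cell_size):
    """Scale a measured run time by the change in cell count.

    Assumes time is proportional to the number of cells, which grows
    with the square of the ratio between the two cell sizes.
    """
    cell_ratio = (baseline_cell_size / float(target_cell_size)) ** 2
    return baseline_seconds * cell_ratio


# 5 minutes at cell size 3160, extrapolated to cell size 30.
seconds = estimate_export_seconds(5 * 60, 3160, 30)
days = seconds / 86400.0

print("estimated run time: {:.1f} days".format(days))
```

Under those assumptions the estimate comes out at several weeks rather than hours, so a run that shows no sign of finishing after 48 hrs is not surprising. Any fixed overhead inside the 5 min baseline would make this an overestimate.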

All processing in Desktop is done in a 32-bit environment and is limited to 3 or 4 GB of memory.

-Steve
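To put the 3 or 4 GB limit mentioned above in context, here is a rough sketch of the uncompressed size of a raster with the cell count quoted earlier in the thread. The pixel types (4-byte and 8-byte floats) are assumptions, and whether GA Layer to Grid ever holds the whole raster in memory is not stated anywhere in this thread, so this is only an indication of scale.

```python
total_cells = 26821 * 26161  # columns x rows quoted earlier in the thread

BYTES_PER_GIB = 1024 ** 3

# Assumed pixel types; the actual output type is not stated in the thread.
bytes_float32 = total_cells * 4
bytes_float64 = total_cells * 8

gib_float32 = bytes_float32 / float(BYTES_PER_GIB)
gib_float64 = bytes_float64 / float(BYTES_PER_GIB)

print("32-bit float: {:.2f} GiB".format(gib_float32))
print("64-bit float: {:.2f} GiB".format(gib_float64))
```

Even at 4 bytes per cell the raw values alone are in the same range as the memory available to a 32-bit process, regardless of the 32 GB installed in the machine.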