- Home

- :

- All Communities

- :

- Products

- :

- ArcGIS Enterprise

- :

- ArcGIS Enterprise Questions

- :

- Re: Multiple GDBs vs Single GDB

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Multiple GDBs vs Single GDB

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Our production enterprise system is currently 1 sde gdb (Sql Server 2014) with several (45 to be exact) datasets and 9 editable versions (based on department). The sheer number of datasets makes it a little cumbersome for users to find what they may be searching for.

I was wondering if anyone has tried breaking down a large sde gdb into several smaller gdbs, perhaps 1 sde gdb per department? If so, is there is a performance advantage of having 1 large sde gdb versus several smaller sde gdbs? Is response time better for smaller gdb versus larger gdbs? Would reducing the number of versions have a benefit on performance?

I'm hoping to get some pros / cons of having 1 large sde gdb which is like a one-stop-shop for any GIS needs or several smaller sde gdbs meant for departmental needs.

Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Great question,

I work for a medium size municipality and had tried a department split by database, so that our Utilities was in one, Public Works in another, Raster in another, and general edit database for everything else. Then replicate all to a production database that all others (non editors, public) could get current info from. It was all still on one SQL instance. This worked to isolate editing, so that utilities editors could edit utilities data and so forth, but limited use for other editors. It also increased the amount of management for all the databases.

We have since moved to the Local Government model for all edits. Similar set up to what James Crandall discussed. We have one database for all edits, that replicate to a production database (view only), with a static database for raster / contour info, a back up, and test database. The replication is handled on a nightly sync that is automated, so that production is only 1 day older than edit. All services are pulled form production. It is working well, with less management, and other editors are not limited by what they have access to. We have started utilizing table views to stream line what some users need in production. I will say its a bit slower than how it was, but for me only managing it in my spare time, it is a better fit. However we are thinking of now isolating our parcel fabric to its own database because of the sheer volume of records, and transactions. Hope this is helpful for you Matt.

Thank you Vince I was not aware how the RDBMS handle datasets I will consider this for future

Regards

Allen

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Matthew,

There is one part of your question that stands out to me beyond the organization and number of databases. No matter what ends up working best for your organization from a backup and database organizational stand point, the behind the scenes parts, is the focus on the customer. You said, "The sheer number of datasets makes it a little cumbersome for users to find what they may be searching for". We have a large vector database for view/query, a number of raster databases, and a few editing databases. But we guide staff away from "digging" in the databases. With the last upgrade to 10.3 we avoided even giving them connection files. We provide group layer files, by theme. In our case they go to an internal webpage and find the theme they have interest in and load that layer file into Desktop. These layer files also relate directly to a webapp we have for staff and citizens. So if you use one to explore data, you can use the other.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That is very interesting!...and something that hadn't crossed my mind. Providing them themed layer files will not only avoid them having to search for data, but will take care of 'standardizing' symbology and labeling. You could even go so far as to create a python add-in and have buttons or a pick list that would load in the themes for users without them having to navigate a directory.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm not the DBA here, but I can maybe shed some light on our practice. Our county has ~400K people. A large percent of the county, city and utility data is in one large Oracle SDE. We have 85 feature datasets and then on top of that 815 objects that are outside of (excluding) the objects in those datasets. There's probably 70 or so versions, but I think most are direct children of the default version. Every 8 - 12 weeks, we do get an email from the DBA asking everyone to post their versions so he can compress the database states down to "zero". But on most given days, there are data in a large number of versions. As a frequent data reader and less frequent data editor, I've never experienced unacceptable latency in the 8-ish years that we've operated this way. I think this one gigantic database probably simplifies back up and restore, since it's only one database.

The consultants that helped up migrate a lot of the county's workflow from Microstation to ArcGIS suggested we organize every based on this GIS for the nation data model. Every feature/object class that deals with land use starts with "LU", such as "LU_Zoning", "LU_Parks". So it's not so bad finding the feature class that we're looking for in ArcCatalog.

For our users that are not full time GIS staff, we create layer files so they can just drop them into ArcMap. This allows us to make recommendations about which features should be hidden (inactive features), how the data should be symbolized, and which fields are the most appropriate for generating labels.

I'm not saying that you should set up your system like we have, but so far it's worked well.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have abandoned Datasets as a way of organizing a GDB. I feel the performance and usability are awful. I have all feature classes together at the "root" of the GDB, and organized by using a prefix, either by domain (publicworks_*, hydro_*, etc.), or by department. But prefer by domain. Users can easilly browse the entire GDB alphabetically, and zoom in to the domain/dept. they're looking for. It seems counter intuitive but this really seems to work faster, at the software level as well as for the users.

Also, having several GDBs is an awkward solution for me as I imagine there will be a huge increase in connection files for the users, and connections to the databases in the server(s), since arcmap and arcgis server will require new connections for each database referenced in a mxd. Also, I am not sure how the rdbms server can optimize its cache mechanism when the data is divided into several databases. Maybe SQLServer has this solved, I am not sure.

To find and load data, we are using a more friendly approach for the users, by pre-producing lyr files. On 90% of the use-cases users don't have to connect and look for the correct feature class, and choose an appropriate symbology and/or definition queries. They just load a certain lyr with an easy to identify name. Actually we have an addon in ArcMap that shows a tree of themes. The users double click one theme in the tree and the corresponding lyr is loaded.

Getting back to dividing data according to editing rights, I feel that arcgis would benefit from supporting inheritance in Feature Classes. Many times editing responsabilites on a single FC are geographically defined. This makes it really difficult to have a good solution, that is not a maintenance nightmare. Having spatial inheritance would be a really cool addition to the GDB capabilities.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Good point on the number of connection files that you'll have to maintain and "install" on every client's computer. A large number of those (or even a few more than several), would not be fun to maintain and distribute.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You've beat me about the lyr point... didn't see it until after I posted...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I would say that in the grand scheme of things you do not have a lot of datasets. We have split ours into two geodatabases. One for editable data and one for non changing data. In the first database all of our lidar and orthos and data received via third party that we will never be edited goes in one. Everything else in another. For us it has allowed us to change our backup routines and saved a lot of tape and SAN storage and more importantly reduced the amount of time it was taking to back up the data.

In addition we have published all of the layers as services and for the most part we have found a number users have started consuming the services rather than the dataset itself. The real power users that need actual access to the SDE know where the information/layers are that they want!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

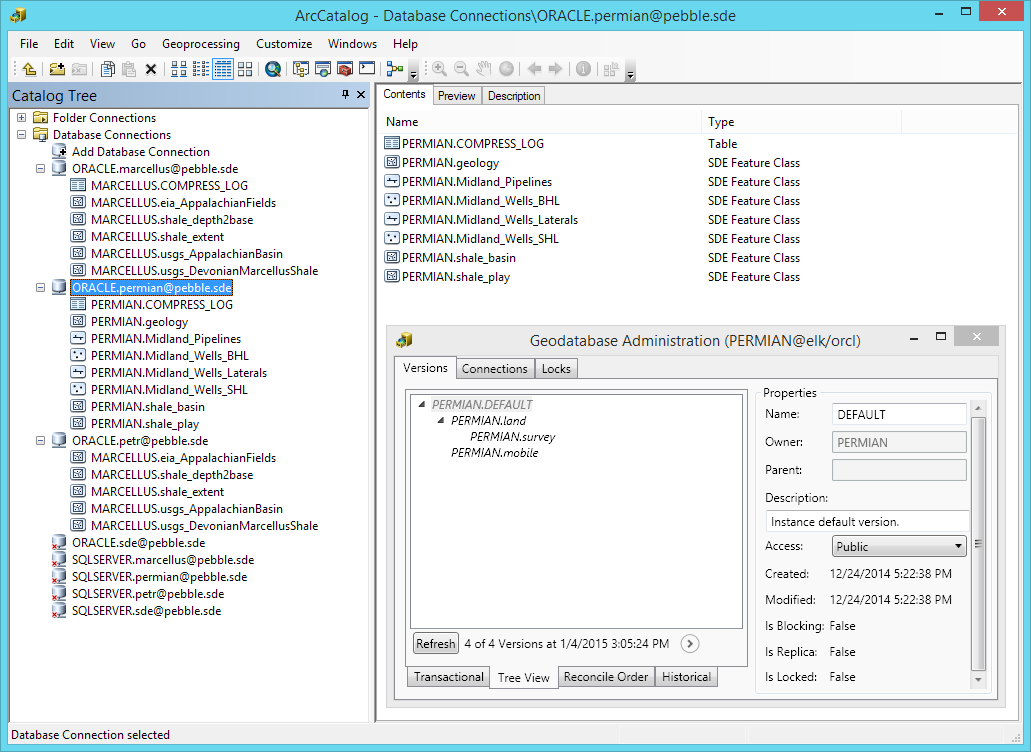

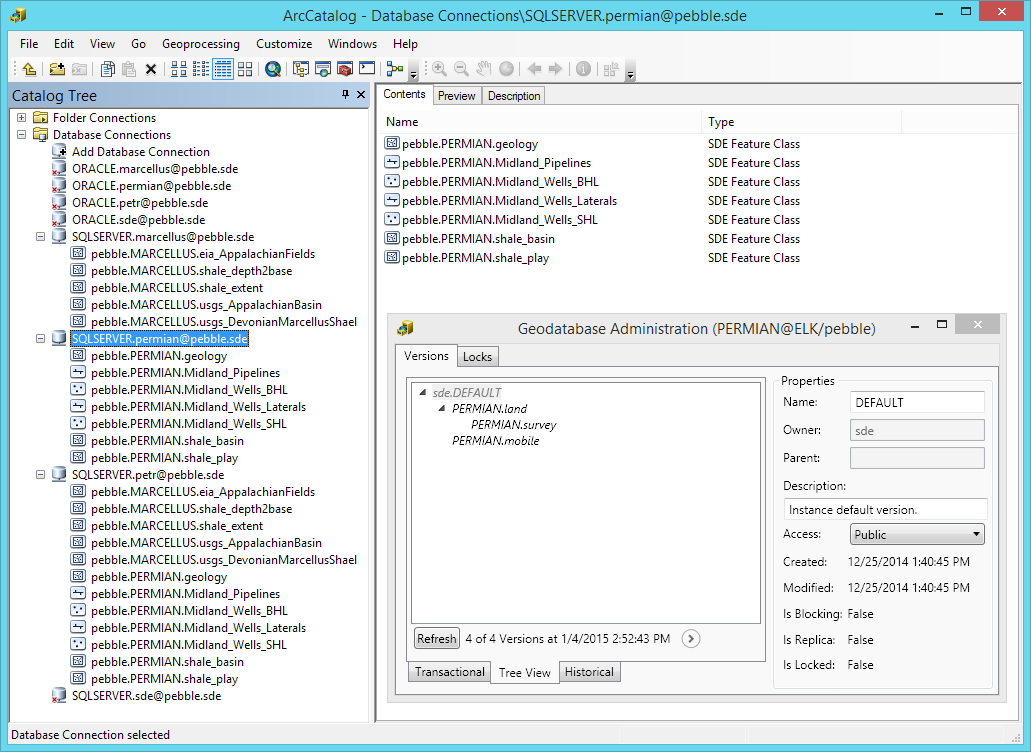

I would 1) put raster data in an enterprise geodatabase, vector data in another enterprise geodatabase; and 2) use database's schema to contain different kinds of vector data, and further use prefix for feature class. In this screen-shot, I use PERMIAN and MARCELLUS schema, inside the PERMIAN schema, I further use Midland prefix and shale prefix. Have fun!