- Home

- :

- All Communities

- :

- Developers

- :

- Python

- :

- Python Questions

- :

- Re: How to improve arcpy.da.UpdateCursor / InsertC...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

How to improve arcpy.da.UpdateCursor / InsertCursor performance?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello,

I am running a script via the ArcPro python terminal, with the layers I am using in my script open in the project. sTabName is a string which contains the name of the table I have opened in ArcPro.

I have a table which has 200k records, I wish to update and insert <20 items within this table.

I need to firstly use an UpdateCursor to update a few rows, then a InsertCursor to insert some rows to replace those I updated.

My code currently:

# Create a table view to use within the edit session

arcpy.SelectLayerByAttribute_management(sTabName, "NEW_SELECTION", where_clause=sOldWhere)

TableViewCount = int(arcpy.GetCount_management(sTabName).getOutput(0))

print(f"sTabName with sql querey: {sOldWhere}, applied. TableViewCount contains: {TableViewCount} rows")

# Start editing session

edit = None # Initialize edit variable

edit = arcpy.da.Editor(SDE_Workspace_Path)

edit.startEditing(False, True)

edit.startOperation()

# Retire the old assessments

print("Retiring old assessment(s)...")

count = 0

with arcpy.da.UpdateCursor(sTabName, ("RecordStatus", "Retired")) as oRows:

for oRow in oRows:

oRow[0] = "Retired"

sretired = "Title retired & replaced by {0}".format(",".join(aNewSIDs))

if len(sretired) > 250:

oRow[1] = 'Title retired & replaced by Multiple parcels'

else:

oRow[1] = sretired

oRows.updateRow(oRow)

count += 1

print(f"retiring item {count} of {TableViewCount}")

The Problem:

This seems to take a very long time to open up the UpdateCursor, >1-2 minutes, and the search cursor which comes after it (not included in code sample).

How can I improve efficiency given that I am only updating the specific items from where_clause=sOldWhere?

I have tried using:

arcpy.MakeTableView_management(sTable_path, "TableView", where_clause=sOldWhere)

instead of what is at Line 2, which does seem to run faster, but this creates a tableview item which I then have to remove (to clean up) which adds more processing time once again.

I wish to reduce the processing time of this script as much as possible as it is manually run multiple times over a day to complete a task.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @EdX1,

Is your feature class registered as versioned? If it is, has your geodatabase been compressed recently? The compress will clear the A&D (delta) tables, which will improve performance of the feature class. You will want to make sure there are no locks on the feature class when performing the compress. For example, make sure all services are stopped, and all users are disconnected.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That is definitely what I would do first. You could also try to rebuild the indexes.

Also add the layer to a map and running some gp tool on it. If it's also slow there, you could try deleting geoprocessing history from the metadata. Or you could copy the features into a new feature class for testing and run your script on the new feature class to see if the performance is any different.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@BlakeTerhune Would you recommend rebuilding the indexes on a regular basis? Is there any possible repercussions with deleting geoprocessing history from the metadata? This table I am editing contains critical data.

Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@EdX1 wrote:@BlakeTerhune Would you recommend rebuilding the indexes on a regular basis? Is there any possible repercussions with deleting geoprocessing history from the metadata? This table I am editing contains critical data.

Thanks!

Yes, you should compress, update statistics, and rebuild indexes on a regular basis.

Enterprise geodatabase maintenance tasks—ArcGIS Pro | Documentation

The only down side to removing geoprocessing history is that you have to have a process to do it and you won't have a history of geoprocessing operations run on the data. If neither of those things are trouble for you or your organization, then go for it.

For me, I try to include the option to not record the gp history in metadata when I run scripts.

arcpy.SetLogMetadata(False)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@JakeSkinner Thanks for the suggestion! After some investigation it looks like that db is compressed daily, via a script which runs overnight 🙂

I wasn't aware of this process or what the compressing process was so I still learned something! Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I would verify the feature classes' A&D tables are cleared after the compress. If there is a lock on the feature class, the compress will skip over the feature class. If your database is SQL Server you can find the correct A&D table with the following:

- query the sde.sde_table_registry table to get the registration_id. Ex:

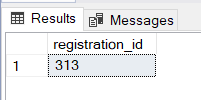

select registration_id from dbo.SDE_table_registry where table_name = 'Airports'This will return the registration_id:

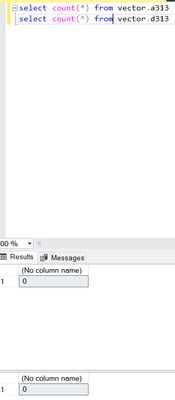

This will indicate which A&D tables to query. Ex:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@JakeSkinner wrote:I would verify the feature classes' A&D tables are cleared after the compress. If there is a lock on the feature class, the compress will skip over the feature class. If your database is SQL Server you can find the correct A&D table with the following:

- query the sde.sde_table_registry table to get the registration_id. Ex:

@JakeSkinner

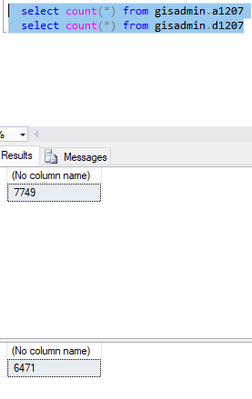

I'm glad you posted a follow up to this! It looks like it isn't being compressed as the a/d tables contain:

Which is possibly several years worth of changes!!!

Thank you! In the script that automates the compress it looked like it disconnected users and ran the compress but maybe it doesn't do this table, even though this table is in the same SDE its pointed to do a compress on.

I'd better do some deeper investigation...

Here is the code which should be doing the compress, sdeWorkspace variable contains a .sde connection with a massive amount of data, along with the table which the original question was about.

def compress():

#block new connections to the database.

triLogger.info("Stopping new user connections")

triLogger.info("Workspace: {0}".format(sdeWorkspace))

arcpy.AcceptConnections(sdeWorkspace, False)

sleep(120) # ____ 03/28/2023 wait until connections fail. https://support.esri.com/en/technical-article/000018531

try:

# Disconnect users for a full compress

triLogger.info("Disconnecting users")

arcpy.DisconnectUser(sdeWorkspace, "ALL")

# Run the compress tool.

triLogger.info("Compressing (this may take a while)")

arcpy.Compress_management(sdeWorkspace)

except arcgisscripting.ExecuteError:

triLogger.error(arcpy.GetMessages())

except:

triLogger.error("Unexpected error:" + sys.exc_info()[0])

finally:

# Allow the database to begin accepting connections again - this is critical so try twice!!!

triLogger.info("Allowing user connections")

try:

arcpy.AcceptConnections(sdeWorkspace, True)

except arcgisscripting.ExecuteError:

triLogger.error(arcpy.GetMessages())

sleep(600) # 10 minutes

arcpy.AcceptConnections(sdeWorkspace, True)

except:

triLogger.error("Unexpected error:", sys.exc_info()[0])

sleep(600) # 10 minutes

arcpy.AcceptConnections(sdeWorkspace, True)

This script runs every day and it looks like sometimes it only takes 1 minute or so to run the compress, which is conflicting with the comment "(this may take a while)". It obviously isn't compressing the table i'm interested in!

My question:

Given the code above, should all items within the sdeWorkspace be getting compressed?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Have not tested this with 3.x, but with 2.7, if I commented out any print statements, my scripts will run in a fraction of the time.

So, in most all my scripts, I set a debugPrint variable at the top, then I have replaced the print statements with:

debugPrint = True

.

.

.

if debugPrint:print('What ever you want')

So, normally, debugPrint = False so that the script runs much faster, but If I'm having issues, I toggle it to True so I can get the printouts.

R_