- Home

- :

- All Communities

- :

- Products

- :

- Data Management

- :

- Data Management Blog

- :

- More From The Duck Pond - Using DuckDB In ArcGIS P...

More From The Duck Pond - Using DuckDB In ArcGIS Pro

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

In an earlier post I got my feet wet using DuckDB in a hosted notebook in ArcGIS Online. That post highlighted reading GeoParquet files in an object store to maintain a feature service. That's a powerful workflow but it is much more likely your daily work involves wrangling common file formats which take up way too much time to ingest - like CSV, Excel and JSON. This post is about making your life easier by showing how DuckDB enables SQL for any file format and also supports simple conversion to Esri formats. If you have basic SQL and Python skills - as in you can read this sample and the web help - you will be fine.

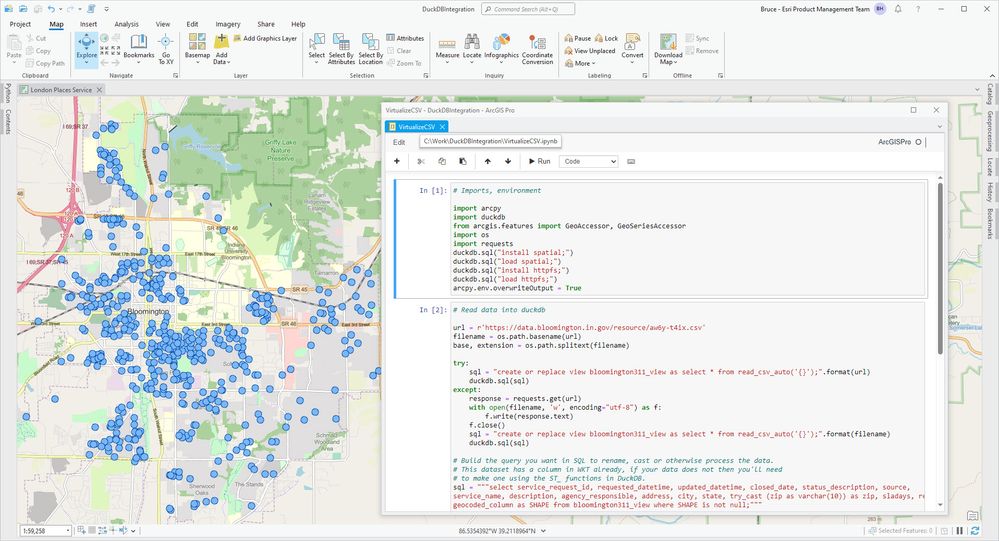

Here is some live action, Bloomington Indiana open 311 incidents migrated to a feature class in seconds.

The notebook (in the blog download) reads a CSV file at a public URL and writes a feature class into the project default geodatabase. Notebooks are a good place to start compared to a script tool because you can work in a cell interactively as you figure out your SQL. Once things are working you might copy the code to a script tool for easy automation on a schedule.

But first you need to install DuckDB! The usual route to adding a Python package to your environment is to clone the arcgispro-py3 environment then add a package in the Package Manager UI backstage in Pro. You would look for a package named python-duckdb. This errored for me (it happens) so I did a manual install. I cloned my default environment to one I named arcgispro-py3-quack and installed DuckDB by opening the Python Command Prompt from the ArcGIS program group then running this command ('username' will be yours):

conda install -c conda-forge python-duckdb=0.9.2 -p "C:\Users\username\AppData\Local\ESRI\conda\envs\arcgispro-py3-quack" --yes

Make sure to get the latest DuckDB distribution, at writing it is 0.9.2.

Let's walk through each notebook cell. The first one is pretty simple, the imports and some calls to duckdb to set up the environment.

# Imports, environment

import arcpy

import duckdb

from arcgis.features import GeoAccessor, GeoSeriesAccessor

import os

import requests

duckdb.sql("install spatial;")

duckdb.sql("load spatial;")

duckdb.sql("install httpfs;")

duckdb.sql("load httpfs;")

arcpy.env.overwriteOutput = True

The second cell makes a pandas dataframe by reading the CSV data while automatically inferring data types - such a big help. You'll notice I code defensively for the situation the server I'm hitting doesn't support HTTP range request access like DuckDB wants - I download the data - which in this case was necessary.

url = r'https://data.bloomington.in.gov/resource/aw6y-t4ix.csv'

filename = os.path.basename(url)

base, extension = os.path.splitext(filename)

try:

sql = "create or replace view bloomington311_view as select * from read_csv_auto('{}');".format(url)

duckdb.sql(sql)

except:

response = requests.get(url)

with open(filename, 'w', encoding="utf-8") as f:

f.write(response.text)

f.close()

sql = "create or replace view bloomington311_view as select * from read_csv_auto('{}');".format(filename)

duckdb.sql(sql)

# Build the query you want in SQL to rename, cast or otherwise process the data.

# This dataset has a column in WKT already, if your data does not then you'll need

# to make one using the ST_ functions in DuckDB.

sql = """select service_request_id, requested_datetime, updated_datetime, closed_date, status_description, source,

service_name, description, agency_responsible, address, city, state, try_cast (zip as varchar(10)) as zip, sladays, request_complete_days, sla_diff_days,

geocoded_column as SHAPE from bloomington311_view where SHAPE is not null;"""

df = duckdb.sql(sql).df()

Note the point about using or creating WKT values for your geometry. The spatial extension for DuckDB has a rich set of spatial functions.

Now convert the dataframe to a feature class. You have other conversion options. Note that the arcgis Python API has no idea where the dataframe came from - DuckDB will do fine! - so I spatially enable the dataframe and bounce the data through a FeatureSet to get it into a geodatabase feature class. No wrangling of attribute field properties, they just work (see the SQL above, the classic situation of casting ZIP codes to text came up). The spatial reference got lost somewhere but I just reapplied it to the output.

I think I found a bug in the expression df.spatial.to_featureclass(), I'll look into it.

# Write the feature class

df.spatial.set_geometry("SHAPE",sr=4326,inplace=True)

aprx = arcpy.mp.ArcGISProject("CURRENT")

gdb = aprx.defaultGeodatabase

out_fc = arcpy.ValidateTableName(base,gdb)

location = os.path.join(gdb,out_fc)

#df.spatial.to_featureclass(location=location,overwrite=True,sanitize_columns=False)

fs = df.spatial.to_featureset()

fs.save(gdb,out_fc)

sr = arcpy.SpatialReference(4326)

arcpy.management.DefineProjection(in_dataset=location,coor_system=sr)

Lastly, announcement!

print("Created feature class {}".format(location))

That's it, fast, simple, smart migration of data with some help from DuckDB!

The notebook is in the blog download, let us know how you get on.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.