How to create Hosted feature layer backups periodically

Hello everyone,

How do you recommend running periodic backups of a hosted feature layer?

Several users are editing a group of layers and we need to make backups.

Thanks

Geodatabase Backups

I recommend adding a tag to any content you want to back up.

Then a Python script can download all content with that tag to a file geodatabase, and you can schedule the script (via Task Scheduler) to run daily or weekly as needed.

I use the tag: GDBNightlyBackup

    import datetime
    import os

    from arcgis.gis import GIS

    startTime = datetime.datetime.now()
    print(startTime)

    # Enter AGOL sign-in credentials
    gis = GIS("https://yourorg.maps.arcgis.com", "username", "password", verify_cert=False)

    # One dated folder (YYYY-MM-DD) per run
    folderName = startTime.strftime("%Y-%m-%d")
    parent_dir = r"C:\ArcGISOnline\BackupFiles\FeatureLayers"
    path = os.path.join(parent_dir, folderName)

    # Create the folder if it doesn't exist
    if not os.path.isdir(path):
        os.makedirs(path)
        print("Directory '%s' created" % folderName)


    def downloadItems(downloadFormat):
        try:
            download_items = gis.content.search(query="tags: = 'GDBNightlyBackup'",
                                                item_type='Feature Layer',
                                                max_items=-1)
            # Loop through each item and, if it is a feature service, download it
            for item in download_items:
                if item.type == 'Feature Service':
                    print(item)
                    # Export creates a temporary item in AGOL
                    result = item.export(f'{item.title}', downloadFormat)
                    # Download the export to the dated backup folder
                    result.download(path)
                    # Delete the exported item after it downloads to save on space
                    result.delete()
        except Exception as e:
            print(e)


    downloadItems(downloadFormat='File Geodatabase')

    endTime = datetime.datetime.now()
    td = endTime - startTime

    print("")
    print("Done!")
    print("")
    print("Ended at " + str(endTime))
    print("Time elapsed " + str(td))

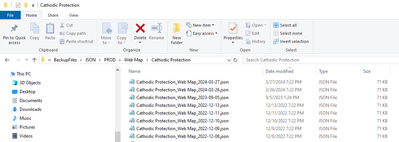

The result is a dated backup folder for each day, containing every hosted layer the script downloaded.
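The script above wraps the whole loop in a single try/except, so the first failed export ends the run for the remaining layers. Here is a minimal sketch of a per-item variant. The function name `backup_items` and its return value are my own illustration, not part of the original script; it only assumes that each item offers `export(title, format)` and that the exported item offers `download(folder)` and `delete()`, as used above.

```python
def backup_items(items, out_dir, export_format="File Geodatabase"):
    """Export and download each item; return (title, error) pairs for failures."""
    failures = []
    for item in items:
        exported = None
        try:
            # export() creates a temporary export item in the portal
            exported = item.export(item.title, export_format)
            exported.download(out_dir)
        except Exception as exc:
            # Record the problem and keep going with the next item
            failures.append((item.title, str(exc)))
        finally:
            # Remove the temporary export item even if the download failed
            if exported is not None:
                try:
                    exported.delete()
                except Exception as exc:
                    failures.append((item.title, f"cleanup failed: {exc}"))
    return failures
```

It could be called as `backup_items(download_items, path)` in place of the loop, and the returned list printed or logged at the end of the run.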

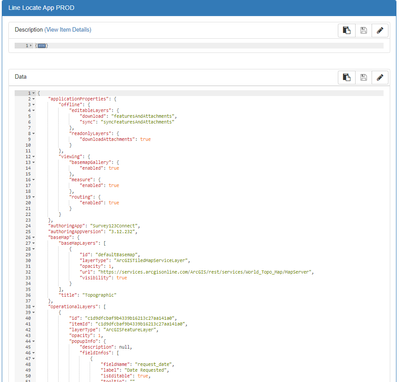

JSON backups

Similarly, I also back up the content JSON files in case the schema of a hosted layer gets changed or a web map, dashboard, or app is inadvertently changed or broken.

Esri web maps, dashboards, etc. have two parts to their JSON data:

- Description

- Data

The Data is where all the customizations you’ve made to the content are stored.

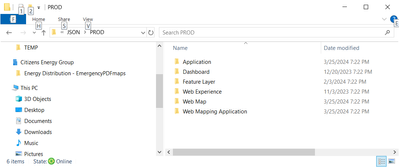
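To make the two parts concrete, here is a rough sketch that collects both into one dictionary and writes them to a file. The helper names `item_json_parts` and `save_item_json` are hypothetical. The sketch assumes the item behaves like a dictionary of its Description properties and that `get_data(try_json=True)` returns the parsed Data part, so treat it as an illustration rather than the way the script in this post works (that script saves only the Data part).

```python
import json


def item_json_parts(item):
    """Return the Description and Data parts of an item as plain Python objects."""
    return {
        # Assumption: the item is dict-like and holds its description properties
        "description": dict(item),
        # Assumption: get_data(try_json=True) returns the parsed Data JSON, or None
        "data": item.get_data(try_json=True),
    }


def save_item_json(item, file_path):
    """Write both parts to one JSON file."""
    with open(file_path, "w", encoding="utf-8") as fh:
        # default=str keeps the dump from failing on values json cannot encode
        json.dump(item_json_parts(item), fh, indent=2, default=str)
```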

The script is set to download the JSON Data for the following item types in your Portal:

- Web Map

- Web Mapping Application

- Feature Layer

- Application

- Dashboard

- Web Experience

These types are defined in the script's item_types set.

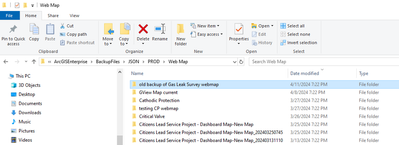

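If a new item of one of those types is added to the Portal, a new folder named after the item is created.

A new JSON file is saved when the item has no saved JSON yet or was modified after the most recent saved file, which amounts to the last 24 hours when the script runs daily.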

    import datetime
    import glob
    import json
    import os

    from arcgis.gis import GIS

    gis = GIS("https://yourorg.maps.arcgis.com", "username", "password")

    now = datetime.datetime.now()
    dateString = now.strftime("%Y-%m-%d")

    folder_location = r"C:\ArcGISOnline\BackupFiles\JSON"
    item_types = {'Web Map', 'Web Mapping Application', 'Feature Layer', 'Application', 'Dashboard', 'Web Experience'}

    # Characters stripped from item titles before they are used in folder and file names
    removestring = "%:/,.\\[]<>*?$"

    for itemType in item_types:
        # One subfolder per item type
        subfolder_path = os.path.join(folder_location, itemType)
        os.makedirs(subfolder_path, exist_ok=True)
        print("\n" + itemType)

        itemType_items = gis.content.search(query="*", item_type=itemType, max_items=10000)
        listOfItemIDs = [item.id for item in itemType_items]

        for iid in listOfItemIDs:
            my_item = gis.content.get(iid)
            item_title = ''.join([c for c in my_item.title if c not in removestring]).strip()
            file_name = item_title + "_" + itemType + "_" + dateString + ".json"
            file_path_item_folder = os.path.join(subfolder_path, item_title)
            full_path = os.path.join(file_path_item_folder, file_name)

            item_data = my_item.get_data(try_json=True)
            if item_data:  # only proceed if the JSON data is not empty
                # One folder per item, created the first time the item is seen
                os.makedirs(file_path_item_folder, exist_ok=True)

                # Get the date of the newest file in the item folder (0 if there is none)
                list_of_files = glob.glob(os.path.join(file_path_item_folder, "*"))
                if len(list_of_files) > 0:
                    latest_file = max(list_of_files, key=os.path.getmtime)
                    newestFileDate = os.path.getmtime(latest_file)
                else:
                    newestFileDate = 0

                # Save if the folder has no JSON yet or the item was modified after the newest file
                # (my_item.modified is in milliseconds, getmtime is in seconds)
                if newestFileDate == 0 or my_item.modified / 1000 > newestFileDate:
                    print("\t" + item_title)
                    print(full_path)
                    with open(full_path, "w") as file_handle:
                        file_handle.write(json.dumps(item_data))

    print("\n\nCompleted")
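The "only save when modified" check is the part of this script most worth testing on its own, so here is a small sketch that isolates it. The function name `needs_new_backup` is hypothetical. It assumes, as the script does, that the item's modified time is in milliseconds since the epoch, and unlike the script it only looks at `*.json` files in the item folder.

```python
import glob
import os


def needs_new_backup(item_folder, modified_ms):
    """Return True if no backup exists yet or the item changed after the newest one."""
    existing = glob.glob(os.path.join(item_folder, "*.json"))
    if not existing:
        # Nothing saved yet (or the folder does not exist): always back up
        return True
    newest_backup = max(os.path.getmtime(p) for p in existing)
    # The modified time is in milliseconds, while getmtime returns seconds
    return modified_ms / 1000 > newest_backup
```

Inside the loop this would be used as `if needs_new_backup(file_path_item_folder, my_item.modified):` before writing the file.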