GPU not working

Hi,

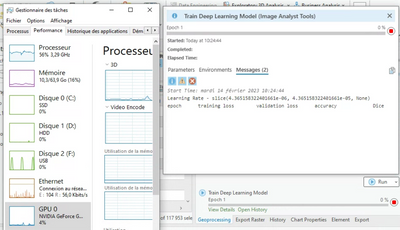

I'm trying to use the GPU to train a deep learning model in ArcGIS Pro with the 'Train Deep Learning Model' tool. I used the MSI installer to install the deep learning capabilities. It's working fine, but it is so slooooooow!

Following other posts, I checked that CUDA is properly installed and that the Python command torch.cuda.is_available() returns True.

I've set the processor type to GPU in the tool's environment settings, but looking at Task Manager, neither my GPU nor my CPUs seem to be working hard.
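For anyone scripting this instead of using the tool dialog, the processor type setting described above can also be set from Python. This is only a minimal sketch, assuming the `arcpy.env.processorType` and `arcpy.env.gpuId` environment settings behave as in recent ArcGIS Pro releases. `configure_gpu` is a hypothetical helper name, not part of ArcPy, and GPU index 0 is an assumption about the machine.

```python
def configure_gpu(gpu_id=0):
    """Ask geoprocessing tools to run on the GPU; returns False outside ArcGIS."""
    try:
        # arcpy only exists in an ArcGIS Pro / ArcGIS Notebooks Python environment
        import arcpy
    except (ImportError, RuntimeError):
        return False
    # Same setting as Environments > Processor Type in the tool dialog
    arcpy.env.processorType = "GPU"
    # Assumption: index 0 is the NVIDIA card that should be used
    arcpy.env.gpuId = gpu_id
    return True


if __name__ == "__main__":
    print("GPU environment set:", configure_gpu(0))
```

Setting the environment only requests the GPU; it does not prove the tool is actually using it, which is what the rest of this thread tries to verify.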

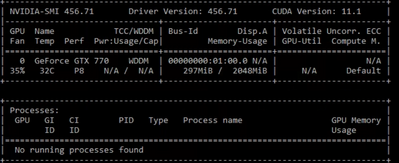

I also checked with the nvidia-smi monitoring tool, which confirms that no running processes are found.

I'm using a GeForce GTX 770. Maybe this model is not compatible? It's not a brand-new NVIDIA card.

Best regards

Adrien

Accepted Solutions

Thanks

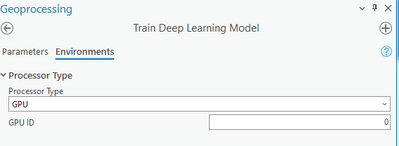

@PavanYadav: yes, I was setting the environment just like that.

I chose to buy a new GPU, an NVIDIA GeForce RTX 4090.

That helped a lot 🙂

But I'm facing other problems... If you want to have a look here :

Have you checked the FAQ?

Deep learning frequently asked questions—ArcGIS Pro | Documentation

... sort of retired...

Yes I did

I think this is probably due to my old GPU, but all the tests and checks I found online came out positive, so I have no confirmation that this is actually the issue.

@DanPatterson thank you for sharing the FAQs link.

I'm copying the relevant info from the link above in case other users refer to this thread:

The recommended VRAM for running training and inferencing deep learning tools in ArcGIS Pro is 8GB. If you are only performing inferencing (detection or classification with a pretrained model), 4GB is the minimum required VRAM, but 8GB is recommended.

If you do not have the required 4–8GB VRAM, you can run the tools on the CPU, though the processing time will be longer.

@AdrienMichez it looks like the GeForce GTX 770 has 2 GB of memory (https://www.techpowerup.com/gpu-specs/geforce-gtx-770.c1856).

Product Engineer at Esri

AI for Imagery

Connect with me on LinkedIn!

Contact Esri Support Services
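The VRAM figures quoted from the FAQ above (4 GB minimum for inferencing, 8 GB recommended) can be compared against what PyTorch reports for the card. The following is a rough sketch, not an Esri-provided check: `vram_verdict` and its labels are hypothetical, and rounding to the nearest GiB is an assumption made because the reported total is often slightly below the marketed size.

```python
GIB = 1024 ** 3


def vram_verdict(total_bytes, min_gib=4, recommended_gib=8):
    """Classify a VRAM size against the thresholds quoted from the FAQ."""
    # Round to the nearest GiB: an "8 GB" card may report a little under 8 GiB
    gib = round(total_bytes / GIB)
    if gib < min_gib:
        return "below minimum"
    if gib < recommended_gib:
        return "inference only"
    return "recommended"


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        torch = None
    if torch is not None and torch.cuda.is_available():
        props = torch.cuda.get_device_properties(0)
        size_gib = round(props.total_memory / GIB, 1)
        print(props.name, size_gib, "GiB:", vram_verdict(props.total_memory))
    else:
        print("No CUDA device visible to PyTorch")
```

Run from the ArcGIS Pro Python environment, a 2 GB card such as the one discussed here would fall into the "below minimum" branch.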

@AdrienMichez I am curious whether you were setting GPU as the Processor Type.

You may also want to run the following checks:

    >>> import torch
    >>> torch.cuda.is_available()
    True
    >>> torch.cuda.device_count()
    1
    >>> torch.cuda.current_device()
    0
    >>> torch.cuda.device(0)
    <torch.cuda.device at 0x7efce0b03be0>
    >>> torch.cuda.get_device_name(0)
    'GeForce GTX 950M'

With only a 2 GB GPU, though, you might not see much of a performance improvement. I just wanted to share the above, and you should be able to use it.
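One further check that may help alongside those above: `torch.cuda.is_available()` returning True only shows that a driver and a device are visible. It does not by itself show that the installed PyTorch build includes kernels for the card's compute capability, which can matter for older GPUs. The sketch below compares `torch.cuda.get_device_capability()` with `torch.cuda.get_arch_list()`. `arch_supported` is a hypothetical helper, and its matching rule (same major version for `sm_` binaries, any lower-or-equal version for `compute_` PTX) is a simplified reading of CUDA's compatibility rules, not something stated in this thread.

```python
import re


def arch_supported(capability, arch_list):
    """Return True if any architecture in arch_list can run on this device."""
    major, minor = capability
    for arch in arch_list:
        match = re.fullmatch(r"(sm|compute)_(\d+)(\d)", arch)
        if match is None:
            continue  # skip entries this sketch does not understand
        kind = match.group(1)
        a_major, a_minor = int(match.group(2)), int(match.group(3))
        # Compiled binaries run on the same major version with an equal or newer minor
        if kind == "sm" and a_major == major and a_minor <= minor:
            return True
        # PTX can be JIT-compiled for any equal or newer device
        if kind == "compute" and (a_major, a_minor) <= (major, minor):
            return True
    return False


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        torch = None
    if torch is not None and torch.cuda.is_available():
        cap = torch.cuda.get_device_capability(0)
        archs = torch.cuda.get_arch_list()
        print("device capability:", cap, "build targets:", archs)
        print("supported by this build:", arch_supported(cap, archs))
    else:
        print("No CUDA device visible to PyTorch")
```

If this prints False, the GPU may be visible yet unusable for training with that build, which would be consistent with all the availability checks passing.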

@AdrienMichez thanks for sharing. For the other issue, I discussed it with my coworkers. I see one of them has already responded to you in that thread. I hope what she shared helps resolve the issue.

Hi @PavanYadav, I have the same problem. I have an NVIDIA RTX 5000 graphics card, but when I perform object detection using deep learning, the card doesn't seem to be working properly. It does eventually complete the task, but it's incredibly slow.

Possible Reasons for GPU Underutilization:

- Small Batch Size: If the batch size is too small for the available GPU VRAM, the GPU may not be fully utilized. Try increasing the batch size to see if this improves performance.

- CPU-GPU Synchronization: The GPU might be waiting for the CPU to send data, leading to fluctuating GPU usage. Monitor CPU and GPU usage to identify any synchronization issues.

- Software Bug: As of today, we are not aware of any bugs related to GPU use in this tool.

- Small Input Rasters: If the input rasters are very small (e.g., 1024x1024), the GPU and CPU will give you about the same performance.
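To watch for the fluctuating GPU usage described in the list above, utilization can be sampled from `nvidia-smi` while the tool runs. This is a hedged sketch: it assumes `nvidia-smi` is on the PATH and relies on its `--query-gpu` CSV output. `parse_gpu_line` and `sample_gpu` are hypothetical helper names.

```python
import shutil
import subprocess
import time

QUERY = "utilization.gpu,memory.used,memory.total"


def to_int(field):
    """Convert a CSV field to int; values like '[N/A]' become None."""
    try:
        return int(field.strip())
    except ValueError:
        return None


def parse_gpu_line(line):
    """Parse one 'util, used, total' CSV line from nvidia-smi."""
    util, used, total = (to_int(field) for field in line.split(","))
    return {"util_pct": util, "mem_used_mib": used, "mem_total_mib": total}


def sample_gpu():
    """Return one reading per GPU, or an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True,
    )
    if result.returncode != 0:
        return []
    return [parse_gpu_line(ln) for ln in result.stdout.splitlines() if ln.strip()]


if __name__ == "__main__":
    # Take a few one-second samples while the geoprocessing tool is running
    for _ in range(5):
        readings = sample_gpu()
        if not readings:
            print("nvidia-smi not available")
            break
        print(readings)
        time.sleep(1)
```

Utilization that stays near zero throughout a run suggests the work is not reaching the GPU at all, while large swings point more toward the GPU waiting on the CPU for data.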

Additional Tests for GPU Verification:

- CPU vs. GPU Performance Comparison: A significant difference in processing time (3-4x to 20x) between CPU and GPU should be observed for large enough input rasters. Compare the processing times to determine if the GPU is working as expected.

- Large Batch Size Test: Setting a very large batch size (e.g., 1024) should result in a "CUDA out of memory" error if the GPU is functioning correctly. If you see a "CPU out of memory" error, try a smaller batch size like 512.
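The CPU vs. GPU comparison above can be tried outside ArcGIS as a quick sanity check. The sketch below times a matrix multiplication in PyTorch on each device. This is only a stand-in workload, so the 3-4x to 20x range quoted for the tool on large rasters does not carry over directly. `time_matmul` and `speedup` are hypothetical names, and the matrix size and repeat count are arbitrary choices.

```python
import time


def time_matmul(device, size=2048, repeats=5):
    """Average seconds per (size x size) matrix multiply on the given device."""
    import torch  # raises ImportError where PyTorch is not installed

    a = torch.rand(size, size, device=device)
    b = torch.rand(size, size, device=device)
    a @ b  # warm-up so one-time initialization is not timed
    if device == "cuda":
        torch.cuda.synchronize()  # GPU calls are asynchronous; wait before timing
    start = time.perf_counter()
    for _ in range(repeats):
        a @ b
    if device == "cuda":
        torch.cuda.synchronize()
    return (time.perf_counter() - start) / repeats


def speedup(cpu_seconds, gpu_seconds):
    """How many times faster the GPU run was than the CPU run."""
    return cpu_seconds / gpu_seconds


if __name__ == "__main__":
    try:
        import torch
        cpu_t = time_matmul("cpu")
        print(f"CPU: {cpu_t:.4f} s per multiply")
        if torch.cuda.is_available():
            gpu_t = time_matmul("cuda")
            print(f"GPU: {gpu_t:.4f} s per multiply")
            print(f"speedup: {speedup(cpu_t, gpu_t):.1f}x")
        else:
            print("No CUDA device visible to PyTorch")
    except ImportError:
        print("PyTorch is not installed in this environment")
```

A speedup close to 1x on a workload of this size would be a hint that the GPU path is not doing the work, in the same spirit as the comparison suggested above.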

I’m using ArcGIS Notebooks in ArcGIS Online with GPU support to train a deep learning model (MaskRCNN) and publish the package (.dlpk). The training and publishing work well, but object detection with DetectObjectsUsingDeepLearning in ArcPy takes about 15 hours, even with GPU.

Questions:

- Web Applications: Is it possible to use the deep learning package in web applications for real-time object detection?

- Optimization: How can I reduce the detection time? Is it normal for it to take this long even with GPU?

- Recommended Workflow: What is the most efficient workflow for object detection with ArcGIS Online?

Thank you for your assistance.

Best regards,