Using a partial GeoEvent Definition to update feature records

One of the first contributions I made to the GeoEvent space on GeoNet was a blog titled https://community.esri.com/community/gis/enterprise-gis/geoevent/blog/2015/06/05/understanding-geoev.... Technical workshops and best practice discussions for years have recommended that, when you want to use data from event records to add or update feature records in a geodatabase, you start by importing a GeoEvent Definition from the targeted feature service. This allows you to explicitly map an event record’s structure as the last processing step before an add / update feature output. The field mapping guarantees that service requests made by GeoEvent Server match the schema expected by the feature service.

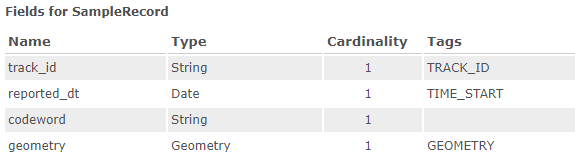

In this blog I would like to expand upon this recommendation and introduce flexibility you may not realize you have when working with feature records in both feature services and stream services. Let's begin by considering a relatively simple GeoEvent Definition describing the structure of a "sample" event record:

Different types of services will have different schema

I could use GeoEvent Manager and the event definition above to publish several different types of services:

- A traditional feature service using my GIS Server's managed geodatabase (a relational database).

- A hosted feature service using a spatiotemporal big data store configured with my ArcGIS Enterprise.

- A stream service without any feature record persistence and no associated geodatabase.

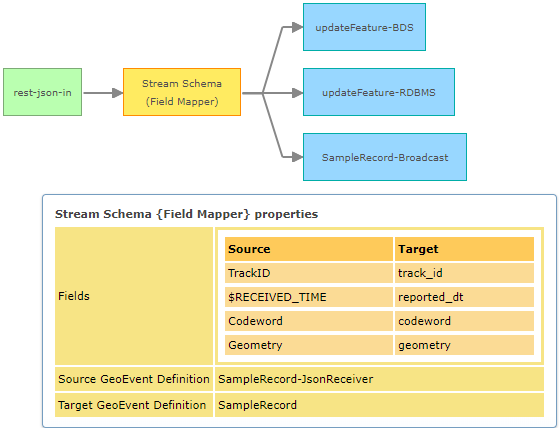

Following the best practice recommendation, a Field Mapper Processor should be used to explicitly map an event record structure and ensure that event records routed to a GeoEvent Server output match the schema expected by the service. The GeoEvent Service illustrated below can be used to successfully store feature records in my GIS Server's managed geodatabase. The same feature records can be stored in my ArcGIS Enterprise's spatiotemporal big data store with copies of the feature records broadcast by a stream service:

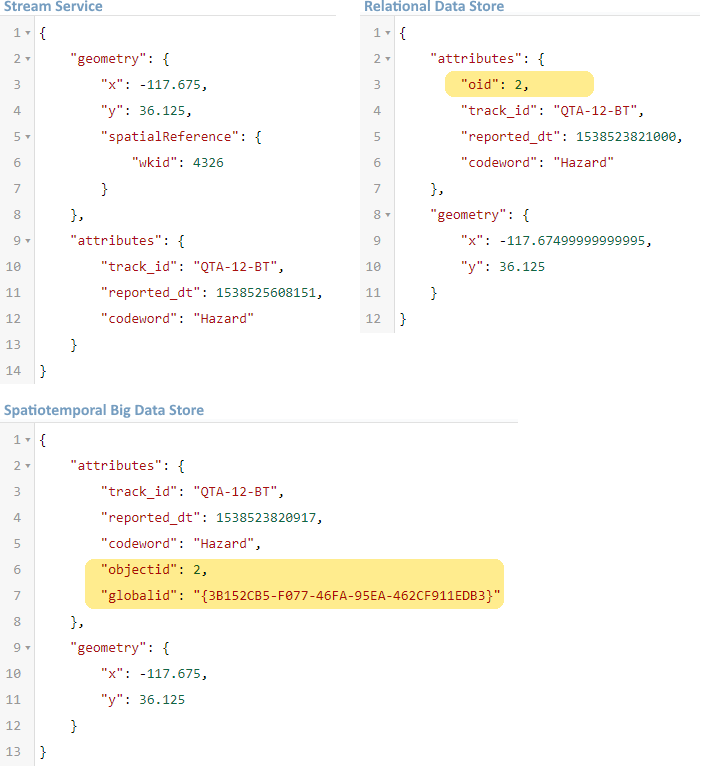

But if you compare the feature records broadcast by the stream service with feature records queried from the different feature services and data stores you should notice some subtle differences. The schema of the various feature records is not the same:

You might notice that the stream service's geometry is "complete". It has both the coordinate values for the point geometry and the geometry's spatial reference, but this is not what I want to highlight. The feature services also have the spatial reference, they just record it as part of the overall service's metadata rather than including the spatial reference as part of each feature record.

What I want to highlight are the attribute values in the relational data store's feature record and spatiotemporal big data store's feature record which are not in the stream service's feature record. These additional identifier values are created and maintained by the geodatabase and you cannot use GeoEvent Server to update them.

Recall that the SampleRecord GeoEvent Definition illustrated at the top of this article was successfully used to add and update feature records in the different data stores. If new GeoEvent Definitions were imported from each feature service, however, the imported event definitions would reflect the actual schema of their respective feature classes:

Since the highlighted attribute fields are created and maintained by the geodatabase and cannot be updated, the best practice recommendation is to delete them from the imported GeoEvent Definitions. Even if event records you ingest for processing happen to have string values you think appropriate to use as a globalid for a spatiotemporal feature record, altering the database's assigned identifier would be very bad.
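As a rough illustration of that cleanup step, the sketch below removes geodatabase-managed identifier fields from a list of field names standing in for an imported GeoEvent Definition. The field names (`objectid`, `globalid`, `reported_dt`) and the helper `strip_managed_fields` are assumptions made for this example; GeoEvent Manager is where you would actually edit the definition.

```python
# Identifier fields assumed to be created and maintained by the geodatabase.
# These names are illustrative; check the schema of your own feature service.
MANAGED_FIELDS = {"objectid", "globalid"}


def strip_managed_fields(field_names):
    """Return the field names with geodatabase-managed identifiers removed."""
    return [name for name in field_names if name.lower() not in MANAGED_FIELDS]


# Hypothetical field list of a definition imported from a feature service.
imported_definition = ["objectid", "track_id", "reported_dt", "codeword", "globalid"]
cleaned_definition = strip_managed_fields(imported_definition)
print(cleaned_definition)
```

The comparison is case-insensitive here only because different data stores may report identifier names with different casing; that too is an assumption of the sketch.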

But if I delete the fields from the imported GeoEvent Definitions ...

Exactly. The simplest way to convey the best practice recommendation to import a GeoEvent Definition from a feature service is to say that this ensures event records mapped to the imported event definition will exactly match the structure expected by the feature service. In service-oriented architecture (SOA) terminology this is "honoring the service's contract."

Maybe you did not know that the identifier fields could be safely deleted from the imported GeoEvent Definition, and so chose to keep them, but leave them unmapped when configuring your final Field Mapper Processor. The processor will assign null values to any unmapped attribute fields, and the feature service knows to ignore attempts to update the values that are created and maintained by the geodatabase, so there is really no harm in retaining the unneeded fields. But unless you want a Field Mapper Processor to place a null value in an attribute field, it is best not to leave attribute fields unmapped.
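The null-filling behavior described above can be modeled in a few lines. This is a toy model of a Field Mapper, not GeoEvent Server code: the function `field_mapper`, the event fields, and both definitions are hypothetical. It shows why mapping to a trimmed definition avoids sending nulls at all.

```python
def field_mapper(event, target_fields, mapping):
    """Build an output record containing every field of the target definition.

    mapping: target field name -> source field name. Target fields that are
    missing from the mapping are treated as unmapped and receive None (null).
    """
    output = {}
    for target in target_fields:
        source = mapping.get(target)
        output[target] = event.get(source) if source is not None else None
    return output


event = {"vehicle": "V-12", "codeWord": "alpha"}
mapping = {"vehicle": "vehicle", "codeword": "codeWord"}

# Imported definition that still carries the identifier fields (left unmapped).
full_definition = ["objectid", "vehicle", "codeword", "globalid"]
# The same definition after the identifier fields have been deleted.
partial_definition = ["vehicle", "codeword"]

with_nulls = field_mapper(event, full_definition, mapping)
without_nulls = field_mapper(event, partial_definition, mapping)
print(with_nulls)
print(without_nulls)
```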

Is it OK to use a partial GeoEvent Definition when adding or updating feature records?

Yes, though you generally only do this when updating existing feature records, not when adding new feature records.

Say, for example, you had published a feature service which specified the codeword attribute could not be null. While such a restriction cannot be placed on a feature service published using GeoEvent Manager, you could use ArcGIS Desktop or ArcGIS Pro to place a restriction nullable: false on a feature class's attribute field to specify that the field's value may not be assigned a null value.

If you use GeoEvent Server to add new feature records to the feature class and, in the final Field Mapper, leave unmapped one or more attribute fields whose values are not allowed to be null, the feature service will reject the requests from GeoEvent Server: the add record request does not include sufficient data to satisfy all the restrictions specified by the feature service.

Feature services which have nullable: false restrictions on attribute fields normally also specify a default value to use when a data value is not specified. Assuming the event record you were processing did not have a valid codeword, you could simply delete that attribute field from the Target GeoEvent Definition used by your final Field Mapper and allow the feature service to supply a default value for the missing, yet required, attribute. If the feature service spec does not include default values for required fields, well then, the processing you do within your GeoEvent Service will have to come up with a codeword value.
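The three cases just described (an explicit null is rejected, a missing field with a default is filled in, a missing required field without a default is rejected) can be sketched as a small validation routine. This is a simplified model of the behavior described in this article, not the feature service's actual implementation; the schema layout, the `UNKNOWN` default, and the function `add_record` are assumptions for illustration.

```python
def add_record(attributes, schema):
    """Model an 'add feature' request against nullable/default restrictions.

    schema: field name -> {"nullable": bool, optional "default": value}
    """
    record = {}
    for name, spec in schema.items():
        if name in attributes:
            value = attributes[name]
            if value is None and not spec["nullable"]:
                # An explicit null was sent for a field that forbids nulls.
                raise ValueError(f"{name} may not be null")
            record[name] = value
        elif "default" in spec:
            # Field omitted from the request: the service supplies its default.
            record[name] = spec["default"]
        elif spec["nullable"]:
            record[name] = None
        else:
            raise ValueError(f"{name} is required and has no default")
    return record


# Hypothetical feature class schema.
schema = {
    "track_id": {"nullable": False},
    "codeword": {"nullable": False, "default": "UNKNOWN"},
}

# codeword removed from the target definition: the default is applied.
accepted = add_record({"track_id": "A1"}, schema)
print(accepted)

# codeword left unmapped, so a null is sent: the request is rejected.
try:
    add_record({"track_id": "A1", "codeword": None}, schema)
    rejected = False
except ValueError:
    rejected = True
print(rejected)
```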

The point is, if you do not want to attempt to update a particular attribute value in a feature record, either because you do not have a meaningful value, or you do not want to push a null value into the feature record, you can simply not include that attribute field in the structure or schema of event records you route to an output.

Examples where feature record flexibility might be useful

I have worked with customers who use feature services to compile attribute data collected from different sensors. One type of sensor might provide barometric pressure and relative humidity. Another type of sensor might provide ambient temperature and yet another a measure of the amount of rainfall. No single sensor is supplying all the weather data, so no single event record will have all the attribute values you want to include in a single feature record. Presumably, the different sensor types are all associated with a single weather station, whose name could be used as the TRACK_ID for adding and updating feature records, so we can create partial GeoEvent Definitions supporting each type of sensor and update only the specific attribute fields of a feature record with the data provided by a particular type of sensor installed at the weather station.
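A minimal sketch of the weather station scenario follows, assuming one partial definition per sensor type and the station name as the TRACK_ID. The sensor type names, field names, and the in-memory `feature_store` dictionary are all hypothetical stand-ins for GeoEvent Definitions and a real feature service.

```python
# One partial definition per sensor type; each includes the TRACK_ID field.
PARTIAL_DEFINITIONS = {
    "barometer": ["station", "pressure_hpa", "humidity_pct"],
    "thermometer": ["station", "temperature_c"],
    "rain_gauge": ["station", "rainfall_mm"],
}

# Stand-in for the feature service: one feature record per TRACK_ID.
feature_store = {}


def process_event(sensor_type, event):
    """Update only the fields named in the sensor's partial definition."""
    fields = PARTIAL_DEFINITIONS[sensor_type]
    update = {name: event[name] for name in fields if name in event}
    record = feature_store.setdefault(update["station"], {})
    record.update(update)  # fields absent from the update are left untouched
    return record


process_event("barometer", {"station": "Station-7", "pressure_hpa": 1012.3, "humidity_pct": 64})
process_event("thermometer", {"station": "Station-7", "temperature_c": 18.5})
process_event("rain_gauge", {"station": "Station-7", "rainfall_mm": 2.0})
print(feature_store["Station-7"])
```

Because each update carries only its own fields, a temperature reading never overwrites the pressure or rainfall values already stored for the station.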

Another example might be when data records arrive with different frequency. Consider an automated vehicle location (AVL) solution which receives data every two minutes reporting a vehicle's last observed position and speed. A different data feed might provide information for that same vehicle when the vehicle's brakes are pressed particularly hard (signaling, perhaps, an aggressive driving incident). You do not receive "hard brake" event records as frequently as you receive "vehicle position" event records, and you do not want to push null values for speed or location into a feature record whenever an event record signaling aggressive driving is received, so you prepare a partial GeoEvent Definition for the "hard brake" event records and only update that portion of a vehicle's feature record when that type of data is received.

A third example where using a GeoEvent Definition which either deliberately includes or excludes an attribute value may be helpful is described in the thread Find new entries when streaming real-time data.

Are stream services as flexible as feature services?

They did not use to be, no, but changes made to stream services in the ArcGIS 10.6 release relaxed their event record schema requirements. You should still use a Field Mapper Processor to make sure that the spelling and case of your event record's attribute field names match those in the stream service's specification. Stream services cannot transfer an attribute value from an event field named codeWord into a field named codeword, for example, but you can now send event records whose structure is a subset of the stream service's schema to a Send Features to a Stream Service output. The output will attempt to handle any necessary data conversions, broadcasting a long integer value when a short integer is received, or broadcasting a string equivalent when a date value is received. The output will also omit any attribute value(s) from the feature record(s) it broadcasts when it does not receive a data value for a particular attribute.
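The sketch below models these stream service rules in plain Python: exact, case-sensitive field name matching, conversion to the declared type, and omission of attributes that have no value. It is only an approximation for illustration. The schema, field names, and the function `to_stream_record` are hypothetical, and silently skipping a field whose name does not match is an assumption of this sketch rather than documented behavior.

```python
from datetime import datetime

# Hypothetical stream service schema: field name -> type to broadcast.
STREAM_SCHEMA = {"track_id": str, "codeword": str, "speed": int, "reported": str}


def to_stream_record(event):
    """Return the attributes that would be broadcast for an event record."""
    record = {}
    for name, value in event.items():
        if name not in STREAM_SCHEMA:
            # Case-sensitive comparison: "codeWord" does not match "codeword".
            continue
        if value is None:
            # No data value received, so the attribute is omitted entirely.
            continue
        record[name] = STREAM_SCHEMA[name](value)  # e.g. date -> string
    return record


event = {
    "track_id": "A1",
    "codeWord": "alpha",  # wrong case, does not reach "codeword"
    "speed": None,  # no value received
    "reported": datetime(2018, 1, 2, 3, 4, 5),
}
broadcast = to_stream_record(event)
print(broadcast)
```

Running a Field Mapper ahead of the output, so that `codeWord` is renamed to `codeword`, is what would let that value through.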

Hopefully the additional detail and examples in this discussion illustrate the flexibility you have when working with feature records in both feature services and stream services, and help clarify the best practice recommendation to use a Field Mapper Processor so that event records sent to either a feature service or stream service output have a schema compatible with the service's specification. You can use partial GeoEvent Definitions which model a subset of a feature record's complete schema to avoid pushing null values into a data record and/or avoid attempting to update attribute values you do not want to update (or are not allowed to update).

- RJ
