
Frustrating .lock files


I have created a Python package for use with Python toolboxes (.pyt) that handles much of the file-management and parameter-management grunt work my tools need. It greatly simplifies file naming and working with directories, and it lets me access parameter values by name rather than by index; all of this works well. The user selects an output workspace, and depending on the other parameters the user sets and the nature of that workspace, a file GDB is created. An external script is then called and the GDB is passed to it. All "intermediate" files (outputs of other ESRI tools called in the script that are not intended to be the script's final output) are stored in this file GDB. When the script completes, the final outputs are written to disk, and the script then tries to clean up by deleting all of the files in the GDB and removing the GDB itself. The point is to give the user the option of keeping these files or deleting them if they are not needed, since many of them are useful for reviewing the output or for further geoprocessing.
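Accessing parameters by name, as described above, can be done with a dictionary keyed on each parameter's `name`. In a .pyt, `execute(self, parameters, messages)` receives the parameters as a list of arcpy.Parameter objects; the sketch below uses `SimpleNamespace` stand-ins for those so it runs without arcpy, and the parameter names and paths are made up for illustration.

```python
from types import SimpleNamespace


def params_by_name(parameters):
    """Map each parameter's .name to the parameter object."""
    return {p.name: p for p in parameters}


# Stand-ins for the arcpy.Parameter objects a .pyt execute() method receives;
# the names and values here are hypothetical.
parameters = [
    SimpleNamespace(name="in_dem", valueAsText=r"C:\data\dem.tif"),
    SimpleNamespace(name="out_workspace", valueAsText=r"C:\output"),
    SimpleNamespace(name="keep_intermediate", valueAsText="true"),
]

params = params_by_name(parameters)
print(params["out_workspace"].valueAsText)  # C:\output
```

Inside a real `execute()`, the same one-liner would run on the `parameters` argument directly.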

The problem is that sometimes the file GDB is NOT completely deleted. All of the actual data in the GDB is removed, as are all of the files the GDB needs to function as a GDB, but a few .lock files belonging to a single raster dataset produced in the workflow remain, along with the .gdb folder itself. It seems to depend on which arcpy functions I use to create these intermediate files, as the outputs of some functions just do not like being deleted.

I have attached images of the problem below. (Is there a way to post code?)

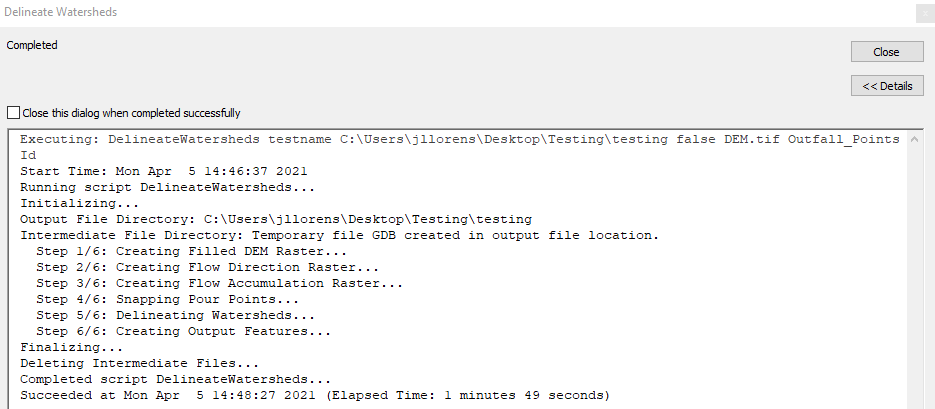

In the image below, you can see that the script produces no errors and completes successfully. This is an example of running a simple script that uses this API to perform the standard workflow for producing watersheds from a DEM raster and a pour points feature class. The outputs (a watersheds polygon feature class and a pour points feature class) look fine and are created properly.

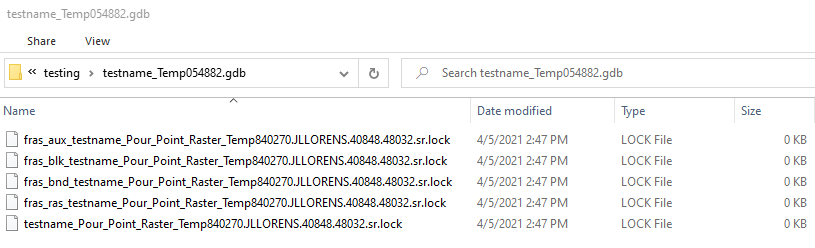

In the image below, you can see that the file GDB is not entirely removed: a nonfunctional "remnant" .gdb folder is left with a few .lock files inside. All of these .lock files belong to a Pour_Point_Raster dataset that was saved to this file GDB during the script. The dataset itself was removed successfully, along with all of the other "intermediate" datasets, yet the .lock files for this particular dataset remain. Closing ArcMap makes these .lock files disappear, after which the .gdb folder can be removed.
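If you want the script to tell the user what was left behind instead of leaving a silent remnant, a small check like the one below could list any remaining .lock files. This is a plain-Python sketch using pathlib; `leftover_locks` is a hypothetical helper, and it only looks at file names, so it cannot tell which process holds the lock.

```python
import tempfile
from pathlib import Path


def leftover_locks(gdb_path):
    """Return the names of any *.lock files still present in a .gdb folder."""
    gdb = Path(gdb_path)
    if not gdb.is_dir():
        return []  # folder already gone, nothing left behind
    return sorted(p.name for p in gdb.glob("*.lock"))


# Demo on a fake folder; the file names below are made up for illustration.
with tempfile.TemporaryDirectory() as tmp:
    gdb = Path(tmp) / "Intermediate.gdb"
    gdb.mkdir()
    (gdb / "example.sr.lock").touch()
    (gdb / "example.rd.lock").touch()
    print(leftover_locks(gdb))  # ['example.rd.lock', 'example.sr.lock']
```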

---

Posting code....

Code formatting ... the Community Version - Esri Community

File locks... hmmmm. Save, shut down, reboot, and delete it if it exists at the beginning of the script.

File locks are always a big issue with no definitive workarounds. Simple things like layers being added to a map... even if removed ... keep a lock.

... sort of retired...
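The "delete it if it exists at the beginning of the script" suggestion could look roughly like this. It is a plain-filesystem sketch using shutil.rmtree; inside ArcGIS you would more likely use arcpy.Exists and arcpy.Delete_management, which understand geodatabases. `remove_stale_gdb` is a hypothetical name, and the helper reports failure instead of raising, because a lock held by another process (ArcMap, for example) will still block deletion.

```python
import shutil
from pathlib import Path


def remove_stale_gdb(gdb_path):
    """Delete a leftover .gdb folder from a previous run, if present.

    Returns True if the folder is gone afterwards, False if something
    (for example a lock held by another process) prevented deletion.
    """
    gdb = Path(gdb_path)
    if not gdb.exists():
        return True  # nothing to clean up
    try:
        shutil.rmtree(gdb)
    except OSError as exc:  # PermissionError is a subclass of OSError
        print(f"Could not remove {gdb}: {exc}")
        return False
    return True


# Usage at the top of a script (the path is hypothetical):
# if not remove_stale_gdb(r"C:\output\Intermediate.gdb"):
#     raise RuntimeError("Close whatever holds the lock and try again")
```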

---

There may be hope because you have identified the items (rasters) that are holding the file lock. Knowing that, I would go through your code and make sure you explicitly delete any layers or raster objects pointing to data before you delete the datasets themselves. It gets stickier if the layers are actually added to a map because then the application itself (not your script) may establish a file lock.

I have developed code patterns where I initialize variables for layers and datasets to None at the beginning of a script (or function) and, at the end, delete any that are not None in a finally block. Layers first, then datasets.
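A minimal sketch of that pattern in plain Python follows. `Handle`, `run_tool`, and `release()` are hypothetical stand-ins for arcpy layer and raster objects and for whatever call actually releases them; the point is the shape: initialize to None, do the work in `try`, release in `finally`, layers before datasets.

```python
class Handle:
    """Stand-in for a layer or raster object that keeps a lock until released."""

    def __init__(self, name, log):
        self.name = name
        self.log = log

    def release(self):
        # In arcpy this step would be something like `del` on the object or
        # arcpy.Delete_management() on a layer; here we only record it.
        self.log.append(f"released {self.name}")


def run_tool(log, fail=False):
    # Initialize lock-holding variables to None so the finally block
    # knows which ones were actually created.
    dataset = None
    layer = None
    try:
        dataset = Handle("dataset", log)
        layer = Handle("layer", log)
        if fail:
            raise RuntimeError("tool failed")
        log.append("work done")
    finally:
        # Release layers first, then the datasets they point to.
        if layer is not None:
            layer.release()
        if dataset is not None:
            dataset.release()


log = []
run_tool(log)
print(log)  # ['work done', 'released layer', 'released dataset']
```

Because the cleanup sits in `finally`, it also runs when the tool raises partway through.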

Finally, a suggestion: from your code it looks like you are doing a lot of Spatial Analyst raster processing. Based on my own experience, if you aren't already doing so, I highly recommend using .tif files for your rasters instead of a file GDB, for performance reasons.
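If you follow the .tif suggestion, the output format is chosen by the output path itself: in a file geodatabase a raster name has no extension, while in a plain folder the .tif extension asks for a GeoTIFF. The hypothetical helper below just builds the path accordingly; it assumes that a workspace whose name ends in .gdb is a file geodatabase and that anything else is a folder.

```python
import os


def raster_output_path(workspace, name):
    """Build an output raster path whose format follows the workspace type."""
    if workspace.lower().endswith(".gdb"):
        # File geodatabase rasters are written without an extension.
        return os.path.join(workspace, name)
    # In a folder, the .tif extension selects the GeoTIFF format.
    return os.path.join(workspace, name + ".tif")


print(raster_output_path("C:/work", "FlowDir"))
```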

Good luck chasing down those file locks, you may need it.

---

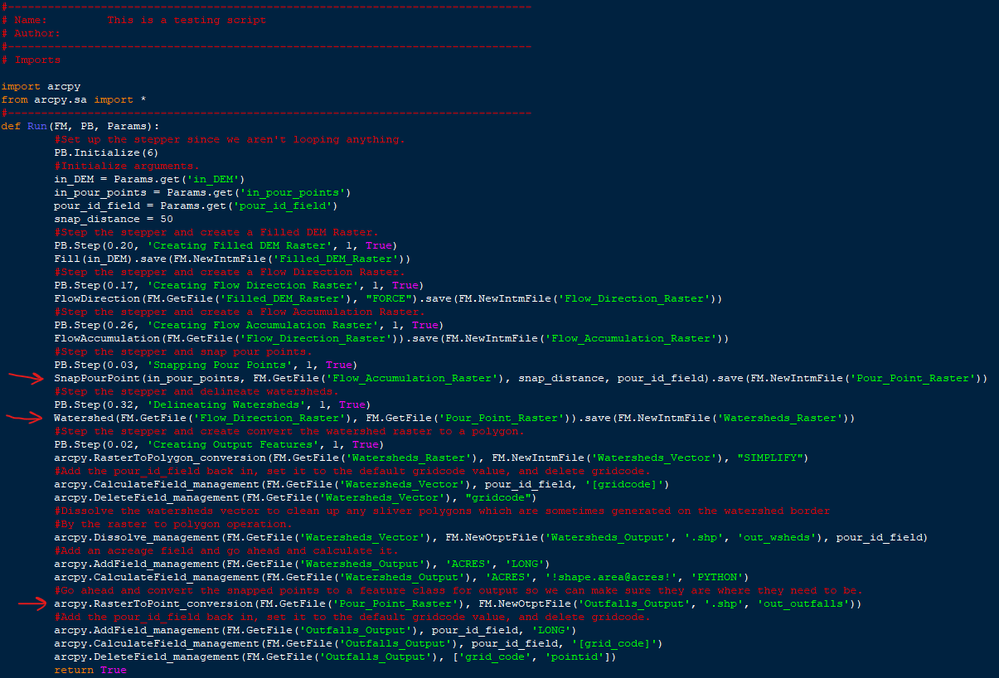

The specific file in question is created in a file GDB by the SA SnapPourPoint function; it is then used by the Watershed and RasterToPoint functions and never used or looked at again. No layers are created from this file. It serves no purpose other than being fed into the Watershed function.

---

Interesting. Perhaps I shouldn't be calling .save directly on the results of the Spatial Analyst functions? (These functions return a raster object, unlike other arcpy functions.) Maybe I should assign these raster objects to a variable that I can call del on afterwards?
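One detail worth keeping in mind before relying on `del`: in Python, `del` removes a name, not an object, and the object is freed only when its last reference goes away. The sketch below uses a hypothetical `RasterStandIn` class and `weakref` to show this in CPython; whether the underlying raster library then releases its file lock is a separate question that Python itself cannot answer.

```python
import weakref


class RasterStandIn:
    """Stand-in for the raster object a Spatial Analyst function returns."""

    def save(self, path):
        self.path = path


raster = RasterStandIn()       # e.g. raster = SnapPourPoint(...)
alive = weakref.ref(raster)    # lets us ask whether the object still exists
raster.save("snap_pour_point")

cache = [raster]               # a second reference held somewhere else
del raster                     # removes the name `raster`, not the object
print(alive() is not None)     # True: `cache` still holds the object

cache.clear()                  # drop the last remaining reference
print(alive() is None)         # True in CPython: freed once the refcount hits 0
```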

---

I haven't seen this workflow in the help; the normal way I do it is one of these patterns:

    import arcpy
    from arcpy.sa import SnapPourPoint

    # map algebra: the normal way to do it (as usually shown in the help)
    pp = SnapPourPoint(....)
    pp.save("snap_pour_point")
    # then later you can re-use the pp variable
    # and later delete the raster this way:
    arcpy.Delete_management(pp)

    # this pattern avoids creating a raster object
    arcpy.gp.SnapPourPoint_sa(input1, input2, output)

I think your current pattern may be putting some raster objects into "space" (not assigning them to variables), which could be the source of your file lock issues. Or you are running into some bugs.

---

See the example; it uses the code pattern I shared with you. I try to do things the way the help demonstrates them, to avoid problems. Again, rasters saved to a file GDB are s l o w.

It looks like you're using ArcMap, because your Calculate Field uses VB syntax. I recommend Python syntax for Calculate Field so it will work in 64-bit and in Pro.
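For reference, the most visible difference between the two syntaxes is how fields are referenced: VB expressions wrap field names in square brackets ([Field]), while Python expressions use exclamation points (!Field!) together with a Python expression type (PYTHON_9.3 in ArcMap, PYTHON3 in Pro). The hypothetical helper below converts only bare field references; VB functions and operators (such as & for string concatenation) still have to be rewritten by hand.

```python
import re


def vb_fields_to_python(expression):
    """Rewrite VB-style field references ([Field]) as Python-style (!Field!)."""
    return re.sub(r"\[([A-Za-z_][A-Za-z0-9_]*)\]", r"!\1!", expression)


print(vb_fields_to_python("[Shape_Area] / 10000"))  # !Shape_Area! / 10000
```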

---

Thanks for the tips, I had thought I was using Python syntax so that's a good catch.

In my opinion, this is starting to look more and more like a bug.

I would expect that calling .save directly on the raster object returned by SnapPourPoint would let Python's garbage collection destroy that object, and free up memory, immediately after the .save method completes, since I assume there would be no remaining references to that Raster object beyond whatever "self" variables live inside the .save method definition. In fact, I wrote it this way explicitly to try to do that. I give the user of the script the option to keep these "intermediate" files, so since I am writing them to disk anyway, I saw no reason to write extra lines of code to keep track of variables I don't need beyond the next line. I have since changed this to make the script more flexible; I'll explain below. I am 99% certain that my script holds no references to this object after the .save method, unless I am misunderstanding how Python works.
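The Python side of this reasoning can be checked with a stand-in object. In CPython, a temporary that is never bound to a name is freed as soon as the statement using it finishes, so a chained `.save()` call leaves no live Python reference behind. `TempRaster` and `snap_pour_point` are hypothetical; a real arcpy Raster wraps native code, and freeing the Python wrapper says nothing about when that code removes its .lock files.

```python
freed = []


class TempRaster:
    """Stand-in for a Spatial Analyst result that records when it is freed."""

    def __init__(self, name):
        self.name = name

    def save(self, path):
        self.path = path

    def __del__(self):
        freed.append(self.name)


def snap_pour_point():
    # Hypothetical stand-in for arcpy.sa.SnapPourPoint(...)
    return TempRaster("snap")


snap_pour_point().save("snap_pour_point")  # result never bound to a name
print(freed)  # ['snap'] in CPython
```

One caveat: if `save()` raises, the traceback keeps the method's frame, and with it the object, alive until the exception is handled.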

All this being said, I really don't think it has to do with Raster class objects. I think .save, Watershed, or RasterToPoint has some sort of bug where .lock files are created when it accesses the file but are not removed once it is done with the file.

Another reason I think so is that I can delete the specific file without errors, either with Delete_management or manually by right-clicking it in the Catalog in ArcMap, and either method removes the data but not the .lock files. The .lock files themselves are locked by the operating system as read-only until ArcMap is closed.

Anyway, I fixed all this with a combination of things. First, I dodged the problem by defaulting to the ScratchGDB if the user does not wish to keep the intermediate files. Any files created there are deleted by the script on completion, and it doesn't matter if .lock files remain, because I no longer try to delete the entire file GDB when the script completes. Second, the result of any SA function (like SnapPourPoint) that returns an actual raster object is now assigned to a variable, and I follow these functions with:

    flowdir = FlowDirection(fill, "FORCE")
    if FM.IntmRetain:
        flowdir.save(FM.NewIntmFile('FlowDir'))
    flowacc = FlowAccumulation(flowdir)
    if FM.IntmRetain:
        flowacc.save(FM.NewIntmFile('FlowAcc'))

Here IntmRetain is True if the user wants to keep these intermediate files. Then, if I need that raster as an input to anything later in the script, it should already be loaded in memory, and I am not even writing the rasters to disk if the user doesn't want to keep them, so they never have to be deleted later.