Deep Learning - data.show - depleted VRAM

Dear Imagery and Remote Sensing,

I am trying the Esri example https://developers.arcgis.com/python/sample-notebooks/automate-building-footprint-extraction-using-i... on Czech data.

I am stuck right at the beginning, at the command data.show_batch(rows=4).

I am getting an error:

CUDA out of memory. Tried to allocate 88.00 MiB (GPU 0; 4.00 GiB total capacity; 483.95 MiB already allocated; 64.31 MiB free; 500.00 MiB reserved in total by PyTorch)

My GPU has 4 GB of VRAM, and almost 75% of it is allocated by the data.show_batch command. It stays allocated even after the result is displayed. Deleting the cell did not help.

I would like to ask for help with three questions:

1) Is there a way to free VRAM after displaying data.show_batch(rows=4)?

2) Is there a way to use RAM to help the VRAM?

3) What is the recommended VRAM size?

Thank you,

Vladimir

Accepted Solutions

Hi Vladimir,

CUDA out of memory. Tried to allocate 88.00 MiB (GPU 0; 4.00 GiB total capacity; 483.95 MiB already allocated; 64.31 MiB free; 500.00 MiB reserved in total by PyTorch)

The above error indicates that your GPU does not have enough free memory to train the model.

In that case, you can reduce the batch size to 2 or 4 and use a smaller chip size (224 is the minimum, though) and see if it helps.

Alternatively, train on a CPU (it will be very slow) or get a GPU with more memory.

If you already have a GPU with sufficient memory, restart or shut down all notebooks and apps that are using the GPU so that GPU memory can be freed up.
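As a rough illustration of how to read the error message quoted above, the sketch below pulls the figures out of the message text and converts them to MiB. The function name `parse_oom` and the regular expressions are assumptions written for this particular message format, not part of PyTorch or arcgis.learn.

```python
import re

MESSAGE = (
    "CUDA out of memory. Tried to allocate 88.00 MiB (GPU 0; 4.00 GiB total capacity; "
    "483.95 MiB already allocated; 64.31 MiB free; 500.00 MiB reserved in total by PyTorch)"
)

_UNIT_TO_MIB = {"KiB": 1 / 1024, "MiB": 1.0, "GiB": 1024.0}


def parse_oom(message):
    """Return the figures found in a CUDA out-of-memory message, converted to MiB."""
    patterns = {
        "requested": r"Tried to allocate ([\d.]+) (KiB|MiB|GiB)",
        "total": r"([\d.]+) (KiB|MiB|GiB) total capacity",
        "allocated": r"([\d.]+) (KiB|MiB|GiB) already allocated",
        "free": r"([\d.]+) (KiB|MiB|GiB) free",
        "reserved": r"([\d.]+) (KiB|MiB|GiB) reserved",
    }
    figures = {}
    for name, pattern in patterns.items():
        match = re.search(pattern, message)
        if match:
            figures[name] = float(match.group(1)) * _UNIT_TO_MIB[match.group(2)]
    return figures


figures = parse_oom(MESSAGE)
# Memory that is neither free nor reserved by this PyTorch process.
held_elsewhere = figures["total"] - figures["free"] - figures["reserved"]
print(figures)
print(f"Not free and not reserved by this process: {held_elsewhere:.2f} MiB")
```

In this message the request (88 MiB) is larger than what is free (64.31 MiB), while PyTorch itself has reserved only 500 MiB of the 4 GiB. The message does not say what holds the remainder; other applications, other notebooks, or the display are possible candidates, which is consistent with the advice to close everything else that uses the GPU.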

You wrote: "My GPU has 4GB of VRAM and almost 75% is allocated by the data.show command. And it's still allocated even after the result is displayed. Deleting the cell did not help."

1) Is there a way to free VRAM after displaying data.show_batch(rows=4)?

data.show_batch() and model.lr_find() use the GPU in such a way that, once these functions have finished, PyTorch does not release the memory, but the memory is still reusable for further operations such as model.fit().
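For anyone who still wants to hand the cached memory back (for example, so that another process can use it), a minimal sketch using PyTorch's own calls is shown below. The helper name `release_cached_vram` is an assumption; `torch.cuda.empty_cache()`, `torch.cuda.memory_allocated()` and `torch.cuda.memory_reserved()` are standard PyTorch functions.

```python
import gc


def release_cached_vram():
    """Ask PyTorch to return unused cached GPU blocks to the driver.

    Returns (allocated_mib, reserved_mib) after the call, or None when
    PyTorch or a CUDA device is not available.
    """
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    gc.collect()              # drop unreachable Python objects that still hold tensors
    torch.cuda.empty_cache()  # return cached, currently unused blocks to the driver
    mib = 1024 ** 2
    return (torch.cuda.memory_allocated() / mib, torch.cuda.memory_reserved() / mib)


print(release_cached_vram())
```

Memory held by objects that are still referenced, such as the data object or a model, stays allocated; only the unused cache is returned. Because that cache is reusable by PyTorch anyway, this mainly helps other processes, not the next training call in the same notebook.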

2) Is there a way to use RAM to help the VRAM?

The preprocessing code in prepare_data already uses RAM. If you also want show_batch to use RAM, you can set this with the command: %env CUDA_VISIBLE_DEVICES=''. Once you set this environment variable, however, training will also run on the CPU until you restart your notebook.
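Outside a notebook, the same effect can be sketched in plain Python. This assumes the variable is set before any library initializes CUDA in the process; the helper `cuda_visible` is a hypothetical name used only for the check.

```python
import os

# Hide all CUDA devices from libraries that initialize CUDA after this point.
os.environ["CUDA_VISIBLE_DEVICES"] = ""


def cuda_visible():
    """Report whether PyTorch can see a CUDA device (False if PyTorch is missing)."""
    try:
        import torch
    except ImportError:
        return False
    return torch.cuda.is_available()


print(cuda_visible())
```

Setting the variable after CUDA has already been initialized in the same process generally has no effect, which is why the setting lasts until the notebook kernel is restarted.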

3) What is the recommended VRAM size?

It depends on the model that you intend to use. An object detection model such as SingleShotDetector can work with 4 GB of VRAM at a batch size of about 4 and a chip size of 224. For an instance segmentation model like MaskRCNN, however, 4 GB of GPU memory can be insufficient, depending on the batch size and chip size. I therefore suggest reducing the chip size and batch size in order to work with it.
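A minimal sketch of passing these two settings to `arcgis.learn.prepare_data` is shown below. The values are conservative starting points taken from the advice above, not official requirements, and the wrapper `load_training_data` and the folder path are hypothetical.

```python
# Conservative starting values for a 4 GB GPU; adjust after testing.
LOW_VRAM_SETTINGS = {"chip_size": 224, "batch_size": 2}


def load_training_data(chips_folder, settings=None):
    """Load exported training chips with a small chip size and batch size.

    chips_folder is a hypothetical path to the exported training data folder.
    """
    # Imported lazily so the settings can be inspected without ArcGIS installed.
    from arcgis.learn import prepare_data

    options = dict(LOW_VRAM_SETTINGS if settings is None else settings)
    return prepare_data(chips_folder, **options)


# Example call (the path is a placeholder):
# data = load_training_data(r"C:\data\building_chips")
# data.show_batch(rows=2)
```

If training still runs out of memory, the batch size can be lowered further by passing, for example, `settings={"chip_size": 224, "batch_size": 1}`.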

Thanks,

Priyanka

4 GB is the "Can you run it" minimum, but that is for dedicated video RAM.

How much RAM do you actually have, and how does it stack up against the "Recommended" specs?

ArcGIS Pro 2.6 system requirements—ArcGIS Pro | Documentation

... sort of retired...

Dear Dan Patterson,

The VRAM is dedicated. The i7 CPU and 45 GB of RAM (half of it free) seem fine for Pro.

The problem is with VRAM only. I am using an NVIDIA T2000 GPU.

Vladimir

Does it work with the sample data? If so, how does your data compare to it with respect to size and type?

I didn't see any specific errors on GitHub:

GitHub - Esri/arcgis-python-api: Documentation and samples for ArcGIS API for Python

Nor did I see any recommendations on hardware requirements beyond those referenced before.

... sort of retired...

Dear Imagery and Remote Sensing, Dear Dan Patterson, Dear Python team,

I have dug a little deeper, and it seems to me that there is some problem with MaskRCNN or with Jupyter's video RAM management in general. I tried closing everything that could use VRAM, and I was then able to run data.show, but the RAM was almost full afterwards.

Then I tried skipping data.show and model.lr_find(), and it ended with the same VRAM error.

See my video (ftp://ftp.arcdata.cz/outgoing/holubec/VRAM.mp4) for the full workflow. What is weird is that GPU shared memory stays untouched; the functions use only dedicated VRAM.

OK, my machine is not a hyper-mega workstation, but 6 physical cores with 48 GB of RAM and 4 GB of dedicated VRAM on the GPU seem fine to me (it is the minimum recommended size, as Dan Patterson wrote).

Do you think changing the size of the training chips would help? I have 515 TIFF chips (400x400) created from 1973 testing parcels (not much compared with the Berlin data for the demo).

I would be grateful for any idea that could help me get the Deep Learning demo running.

Thank you.

Vladimir

Dear Priyanka Tuteja,

Thank you very much for the reply. Just as a test, I set the batch size to 1; it helps a lot, and now the model is learning.

Would it be possible to publish somewhere (blog or documentation) the HW requirements for each model used by Esri? It would help a lot to better understand what to expect from each model.

It would also be great if there were a way to use GPU shared memory as well (not just RAM and the CPU, as you mentioned above), which in my case is 6 times larger than the VRAM and almost empty. The GPU, if needed, should be able to use it as its own VRAM. Is it possible to set this somewhere, or is this a limit for now?

Is there some size unit for a batch, like kB/MB?

Thank you.

Vladimir

Hi @VHolubec,

You're most welcome! Hope you got some good results after training a model.

Your suggestion has been noted. You can read this blog to learn about GPU recommendations, specifications, etc.

Deep Learning with ArcGIS Pro Tips & Tricks: Part 1

Additionally, since a deep learning model has various parameters that determine how much GPU memory will be used (for example batch size, chip size, and transforms), it is not possible to give a size unit for a batch.
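The one part of a batch that does have a fixed size is the input tensor itself, and a back-of-the-envelope sketch shows why that number alone is not useful. The function below assumes square chips stored as 32-bit floats with 3 bands; these are illustrative assumptions, not values read from arcgis.learn.

```python
def input_batch_mib(batch_size, chip_size, channels=3, bytes_per_value=4):
    """Size in MiB of one batch of square float32 image chips (input tensor only)."""
    values = batch_size * channels * chip_size * chip_size
    return values * bytes_per_value / 1024 ** 2


for batch_size, chip_size in [(1, 400), (4, 224), (4, 400)]:
    print(batch_size, chip_size, round(input_batch_mib(batch_size, chip_size), 2))
```

Even a batch of four 400x400 chips comes to only a few MiB as raw input. Most of the GPU memory used during training goes to the model's weights, intermediate activations, and gradients, which depend on the architecture and scale with batch size and chip size in model-specific ways.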

Regards,

Priyanka

Hi @PriyankaTuteja,

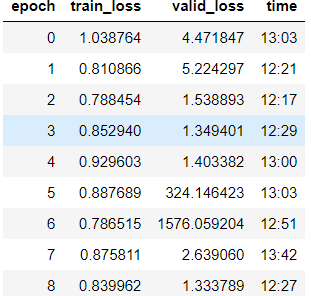

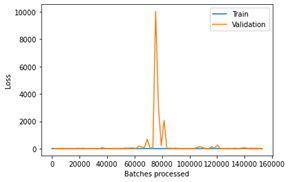

I have tried developing a few models based on the Python API samples, such as road crack detection and car detection, but I am still struggling to get good results. The problem is my HW: I still have only 4 GB of VRAM available, which is not much, and lowering the batch size or chip size led to unstable/inaccurate models.

So we are discussing how to strengthen our HW, with a better GPU or Azure (the article mentions double my VRAM, so I am completely out for now). Once we have solved this question, I will start again from scratch.

Thank you so much for this article! It seems to be a great source for restarting my testing. Maybe a stupid question, but would it be possible to add to the Python API a calculator that could estimate, based on your GPU, the probable best chip size and batch size for a particular model? It would take input parameters such as the model type, the VRAM, and either the batch size or the chip size, and estimate the other of the two.

Sorry if this question is complete nonsense; I know you wrote that there are a lot of variables. It is just an idea, because it has often happened to me that the fit function started fine and the first half or the first two epochs of training also worked fine, but in the third epoch the GPU memory was depleted. Such a calculator/estimator would save time on preparation.
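No such calculator is described in this thread, but a trial-and-error search can stand in for one. The sketch below is not an estimator: it simply halves the batch size until a trial run finishes without a CUDA out-of-memory error. `largest_batch_size`, `try_fit` and `fake_fit` are hypothetical names; in practice `try_fit` would prepare the data with the given batch size and train briefly.

```python
def largest_batch_size(try_fit, start=16, minimum=1):
    """Halve the batch size until try_fit(batch_size) finishes without a CUDA OOM error.

    try_fit is a hypothetical callable supplied by the user.
    Returns the first batch size that worked, or None if none did.
    """
    batch_size = start
    while batch_size >= minimum:
        try:
            try_fit(batch_size)
            return batch_size
        except RuntimeError as error:
            if "out of memory" not in str(error):
                raise              # unrelated failure: do not hide it
            batch_size //= 2       # out of memory: retry with half the batch
    return None                    # even the minimum did not fit


def fake_fit(batch_size):
    """Stand-in for a real training run: anything above 2 chips runs out of memory."""
    if batch_size > 2:
        raise RuntimeError("CUDA out of memory.")


print(largest_batch_size(fake_fit))
```

A short trial will not catch the case described above, where memory runs out only in a later epoch, so it may be safer to pick a value one step below what the search reports.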

@PriyankaTuteja thank you again for your help!

@NatalieDobbs1 what's your issue with the DL?

Hi @VHolubec,

The article helped me identify that my machine's dedicated 4 GB GPU may be an issue for running the model, since the recommendation is a dedicated GPU with 8 GB or more.

@PriyankaTuteja, thanks for sharing the helpful article. I will look out for the other parts in the series.

Kind regards,

Natalie