Classify pixels using deep learning -- results not understandable

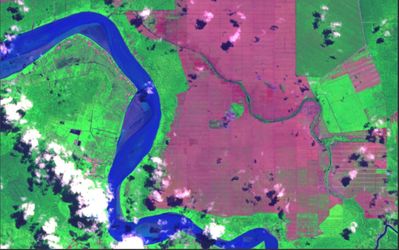

I've been trying to run a land cover classification using deep learning in ArcGIS Pro with Landsat-8 data, to no avail. The results look incomplete and I have been wondering what I did wrong. I started off with an attempt using multiple class labels, but it did not classify the image fully: just small patches here and there, despite a few hundred training samples created with the 'Training Samples Manager'. Refer to the screenshot below of the classified image and the real Landsat-8 image.

The classified patches overlay the Landsat-8 image perfectly and are accurate, but why is the output in patches like that instead of covering the whole image?

So I thought maybe it can only handle one class label at a time, so I made a single 'Forest' class in the Training Samples Manager, ran Export Training Data for Deep Learning, and trained the model in a Jupyter Notebook with the code I extracted from the technical talk 'Geospatial for Deep Learning'. I then used the Classify Pixels Using Deep Learning tool as outlined. In all instances, the tool took more than 3 hours. For this single class the result is worse: practically nothing was generated except some stray pixels; refer below.

Overall, I have been following the procedure to a T, but it's not producing the results I would expect to get out of the deep learning framework. Can someone give me some guidance on this? I've been at it for 2 weeks and I'm pretty close to throwing in the towel. There has to be a solution for this and I'm sure I'm just missing it.

Any help is greatly appreciated.

Azalea.

Accepted Solutions

@GraysonMorgan - we were able to resolve the issue over a call. We used a raster product and converted the bit depth to 8 bit on the fly. When running Export Training Data, the output format should be set to TIFF. @Anonymous User, could you share a brief write-up of the steps you took to fix your issues?

Heya @GraysonMorgan!

I am so sorry for the belated reply. As Vinay mentioned, we resolved it over a call. The issue was the pixel depth. It has to be 8-bit unsigned or it practically won't work. I was perhaps experiencing some sort of loophole when it 'worked' but came out warped with 32-bit floating point. It also took longer with 32-bit floating point.

I'm not sure about the issues you might be having, but if they are similar, I would suggest reducing the pixel depth to 8-bit unsigned before collecting the training samples and before proceeding any further. From my research, that is basically the core feature that really gives deep learning neural networks the kick they have over other machine learning methods.

Again, sorry for the late reply. The issues have been solved and I just managed to steal some time to summarize things. Feel free to reply if you still have problems. Would love to know what happened at your end.

Best,

Azalea

Which release are you on? Pro 2.7 enables you to work with sparse training samples and more than 3 bands (more than 3 bands was not supported before that). Next, here are some pointers for your workflow.

1. Capture training samples that are representative of your regions of interest, e.g.:

2. Ensure the output image format for your 'Export Training Data for Deep Learning' GP tool is TIFF. Your metadata format should be Classified Tiles.

3. When filling in the parameters for the 'Train Deep Learning Model' tool, set your model type to U-Net. Ensure you set ignore_classes = 0 (in the model arguments section).

4. Lastly, run the pixel classification tool and you should ideally get the results you need. You can go through the process again and increase the number of samples if needed.

If things still don't work, can you provide this information:

- How many bands does your input data have?

- What is the bit depth of your input data?

- Are you using CPU or GPU for computing?

- Which software release are you running this on?

- Did you try other models (DeepLab, for instance)?
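The pointers in this reply can be sketched as a small pre-flight check to run before training. This is only an illustration: the dictionary keys and the `preflight_issues` helper are hypothetical names, not ArcGIS Pro or `arcpy` parameters, and the expected values are simply the settings recommended above.

```python
def normalize(value):
    """Lowercase and drop spaces, hyphens and underscores so 'U-Net' matches 'unet'."""
    return "".join(ch for ch in str(value).lower() if ch not in " -_")


# Expected values come from the pointers above; the keys are hypothetical labels.
EXPECTED = {
    "image_format": "tiff",
    "metadata_format": "classifiedtiles",
    "model_type": "unet",
}


def preflight_issues(settings):
    """Return a list of human-readable mismatches found in a settings dict."""
    issues = []
    for key, expected in EXPECTED.items():
        actual = settings.get(key)
        if actual is None or normalize(actual) != expected:
            issues.append(f"{key}: expected {expected!r}, got {actual!r}")
    # The reply writes ignore_classes = 0; a one-item list is accepted too (assumption).
    if settings.get("ignore_classes") not in (0, [0]):
        issues.append("ignore_classes: expected 0")
    return issues


example = {
    "image_format": "PNG",
    "metadata_format": "Classified Tiles",
    "model_type": "U-Net",
    "ignore_classes": 0,
}
problems = preflight_issues(example)
for line in problems:
    print(line)
```

With the example settings, only the image format is flagged, because the chips were exported as PNG rather than TIFF.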

Hello Vinay,

Thank you so much for replying! I am using 2.7. I have been counting on being able to work with sparse training data. I am using Landsat-8, with all the bands composited into one raster. I've configured the RGB display to show just 3 bands, in case this is crucial information, and the raster is 32-bit floating point. I did try to export it to 8-bit unsigned, but it produces a black raster that I can't work with (maybe I'm doing it wrong?).

- Capture training samples that are representative of your regions of interest, e.g.:

Above is the training data that I used.

- Ensure the output image format for your 'Export Training Data for Deep Learning' GP tool is TIFF. Your metadata format should be Classified Tiles.

Yes. The training datasets are exported as 'Classified Tiles'.

- When filling in the parameters for the 'Train Deep Learning Model' tool, set your model type to U-Net. Ensure you set ignore_classes = 0 (in the model arguments section).

I was using a Jupyter Notebook for this, but maybe the tool is a good option.

I have a CUDA GPU installed, and the last time I trained the U-Net model in the Jupyter Notebook, it ran out of memory a few times. There are intermittent occasions where the GPU seemingly works fine, and that's when I get the model definition to classify the pixels. Is there any specific way to maximize the usage of the GPU? I am also curious as to how this process can be done in the Jupyter Notebook. I'm quite new to deep learning, but I'm slowly taking everything in.

Looking forward to hearing from you!

Landsat data should not be 32-bit floating point. At what point did it get converted? You can apply a stretch function to convert it to 8 bit, and then do all your processing in 8-bit space.
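As a rough illustration of what such a stretch does, here is a minimal pure-Python sketch of a linear percent-clip stretch. It is not the ArcGIS Stretch raster function itself; the `stretch_to_uint8` name and the sample values are made up for the example. It also shows one possible reason a direct export to 8-bit can come out black, assuming the floating-point values are small (for example reflectance between 0 and 1), which the thread does not confirm.

```python
def stretch_to_uint8(values, low_pct=0.5, high_pct=99.5):
    """Linearly rescale floats to 0-255, clipping at the given percentiles."""
    ordered = sorted(values)
    n = len(ordered)
    # Nearest-rank percentile lookup; adequate for a sketch.
    lo = ordered[int(round((n - 1) * low_pct / 100.0))]
    hi = ordered[int(round((n - 1) * high_pct / 100.0))]
    if hi == lo:
        # Constant input: there is no range to stretch.
        return [0 for _ in values]
    out = []
    for v in values:
        scaled = (v - lo) / (hi - lo)
        scaled = min(1.0, max(0.0, scaled))  # clip to the 0-1 range
        out.append(int(round(scaled * 255)))
    return out


# Hypothetical pixel values, assumed to be reflectance in the 0-1 range.
reflectance = [0.02, 0.05, 0.10, 0.20, 0.35]

naive = [int(v) for v in reflectance]          # truncation: every value becomes 0
stretched = stretch_to_uint8(reflectance, 0, 100)

print(naive)
print(stretched)
```

The naive conversion collapses every pixel to 0, while the stretched version spreads the same values across the full 8-bit range.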

I also noticed you forced a learning rate (for training). Any particular reason? The stride size is blank above; I'd recommend having some overlap. Regarding the out-of-memory issues, with a batch size of 2 and a tile size of 256, you shouldn't run into them. Which GPU do you have?
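To make the stride and overlap relationship concrete, the sketch below computes where tiles start along one image axis. The `tile_origins` helper is hypothetical, a stride of half the tile size is just one common choice for overlap, and the export tool may treat image edges differently.

```python
def tile_origins(length, tile_size=256, stride=128):
    """Return start offsets of tiles along one axis of `length` pixels."""
    if tile_size > length:
        return [0]
    origins = list(range(0, length - tile_size + 1, stride))
    # Add a final tile flush with the edge so no pixels are left uncovered.
    if origins[-1] != length - tile_size:
        origins.append(length - tile_size)
    return origins


# A 1000-pixel-wide image with 256-pixel tiles.
no_overlap = tile_origins(1000, tile_size=256, stride=256)
half_overlap = tile_origins(1000, tile_size=256, stride=128)

print(no_overlap)
print(half_overlap)
```

A stride equal to the tile size produces no overlap, while a smaller stride yields more tiles from the same image, each sharing pixels with its neighbours.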

If you still run into issues, we can connect directly and I can help out over a screenshare.

Thanks for your email. I am honestly quite vague about the functions, and the learning rate is based on the procedure I followed in the Jupyter Notebook, where we can select the 'optimal' learning rate.

The Landsat image was exported as a new raster composite when we attempted to clean out the clouds, but it didn't help much.

I've tried exporting it to an 8-bit unsigned raster, but exporting the training datasets for deep learning throws an error when I use that raster. The training dataset itself was created from the 32-bit floating point raster, though, so that could be the cause (?)

I would love to have a screenshare session with you to go through the steps properly. I am free from 9am to 4pm Malaysian local time and after 9pm. Please feel free to share your most convenient time with me too.

My email ID is vinayv@esri.com

Let's schedule a time for a screenshare.

I would love to know if there was a solution to this. I am having the same trouble, though my case uses 1 m resolution aerial data and 7 classes. I have been attempting a land cover pixel classification using DeepLabV3. Thank you!

Hi @VinayViswambharan, thank you for your help with this issue. I am having a very similar issue where the UNET model type does not classify the entire raster image. The prediction from the Classify Pixels tool is a very sparsely classified image with only two out of 10 classes mapped. It appears to be very similar to the issue @Anonymous User had. The only difference is that I am not using Landsat data but rather a georeferenced drone image. The image is an 8-bit unsigned, three-band raster. Additionally, I exported the training data with the output set to TIFF and Classified Tiles. Do you happen to know if there is an obvious solution to this? Thank you!

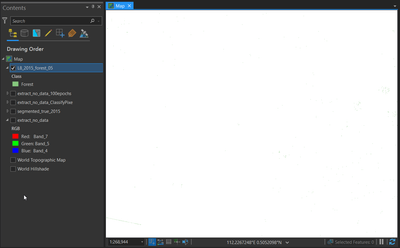

Hello John, I am facing a similar issue. I also have aerial imagery with a bit depth of 8 and followed the same model settings as recommended. The image didn't get classified, and the bit depth of the output image is 32 bit.

Were you able to resolve this issue?

![2021-04-10 09_56_20-MyProject [Backup In Progress] - Map - ArcGIS Pro.png 2021-04-10 09_56_20-MyProject [Backup In Progress] - Map - ArcGIS Pro.png](https://community.esri.com/t5/image/serverpage/image-id/10547i9A423CBA01B70D41/image-size/medium?v=v2&px=400)