Trained UnetClassifier Model and ran Classify Pixels Using Deep Learning tool to extract building footprints - Many pixel shapes are distorted

Hello Everyone,

I followed the steps in the 'Extracting Building Footprints From Drone Data' sample notebook available at https://developers.arcgis.com/python/sample-notebooks/extracting-building-footprints-from-drone-data.... I trained the UnetClassifier model and then ran the Classify Pixels Using Deep Learning tool. I get a final output feature class of building footprint pixels. However, many of the resulting shapes are inaccurate, as shown below:

After post-processing, the shapes that do not fully cover the building footprint do not improve much.

Any suggestions as to why I am not getting complete pixel coverage for some of the footprints?

Thank you

Your outputs are actually quite reasonable - it is generally just picking up buildings and nothing else. The problem is that your model is just not good enough yet - it probably requires extra training time or more training data to get more accurate. Deep learning requires a lot of work to get the best out of it, especially when you are trying to get the best results possible.

Also, a different model may provide better results. There is another sample notebook for building footprints which uses MaskRCNN instead of Unet:

You could also try the pre-trained Esri building footprint model - just download it and use Detect Objects on your image and see how it goes.

https://scrc.maps.arcgis.com/home/item.html?id=a6857359a1cd44839781a4f113cd5934

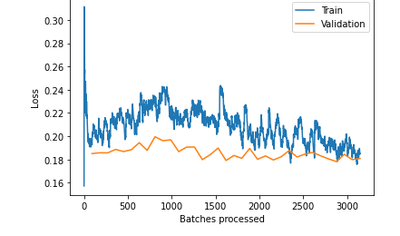

Thank you for the reply. For training data, more than 90% of the buildings in the raster dataset were provided as samples. As for training time, at 35 epochs, the train_loss and valid_loss are as below:

The accuracy is 92.42% and the Dice coefficient is 0.610.

My computer's GPU has 8 GB of memory, so I cannot train MaskRCNN. I also tried the pre-trained Esri MaskRCNN model, but it detects only around 50-60% of the building footprints in my study area (a small town with farmhouses). Microsoft building footprints perform slightly better. However, the Esri pre-trained model performed well in densely populated areas where buildings were within touching distance.

Have you tried reducing the batch_size for your MaskRCNN training? 8 GB should be fine. What are the dimensions of your training images? If reducing the batch size (even down to 1) still gives errors, then you may have to reduce the size of the input training images as well.
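
As a rough sketch of the suggestion above (not taken from the thread), the batch size and image size can both be passed when the training data is prepared. This assumes the `prepare_data` function and `MaskRCNN` class from `arcgis.learn`, with their `batch_size` and `chip_size` parameters; the helper names, the data path, and the values are hypothetical.

```python
def low_memory_settings(batch_size=1, chip_size=224):
    """Collect the two settings discussed above; the defaults are illustrative only."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return {"batch_size": batch_size, "chip_size": chip_size}


def train_maskrcnn(training_data_path, epochs=10, **settings):
    """Hypothetical helper: prepare the exported chips and train MaskRCNN."""
    # Imported inside the function so the sketch can be loaded
    # on a machine that does not have the ArcGIS API for Python.
    from arcgis.learn import prepare_data, MaskRCNN

    # Smaller batch_size and chip_size both lower GPU memory use per step.
    data = prepare_data(training_data_path, **settings)
    model = MaskRCNN(data)
    model.fit(epochs)
    return model


settings = low_memory_settings(batch_size=1)
# Hypothetical path to the exported training chips:
# model = train_maskrcnn("path/to/exported_chips", epochs=10, **settings)
```

If a batch size of 1 still runs out of memory, lowering `chip_size` in the same call is the next thing to try.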

Hi Tim,

Thanks for the reply. The training image dimensions were 258 X 258, batch_size was 3 and cell size 0.3. Today, I tried training the model with batch_size 1 and it seems to be working. Thank you.

So, for UnetClassifier model accuracy, I need to provide more training data. Are there other parameters that I need to consider? The model shows 92.42% accuracy and a Dice coefficient of 0.610. How can the Dice coefficient be improved? Also, I am using the RGB band combination for building extraction (also tried . Is there another band combination that might work better?

Thank you

Sorry, most of my experience in deep learning is with object detection and I haven't delved too deeply into pixel classification yet. The problem is that there is no real answer - it really comes down to trial and error when trying to improve the accuracy. So much depends on the actual data used to train the model - you could spend days adding more training data, training for many more epochs, or using different band combinations and find that 92% is the best you can do. While the accuracy figures from the validation set are a good indicator of the overall accuracy of the model, they can be misleading in some cases. It is definitely good practice to have a third set of labelled data (the test set) that you run the trained model against in order to get a more real-world idea of accuracy.
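
One way accuracy can mislead is class imbalance: when buildings cover only a small share of the pixels, a model can score high pixel accuracy while its Dice coefficient stays modest. The sketch below uses the standard definitions (accuracy as the fraction of matching pixels, Dice as 2·TP / (2·TP + FP + FN)) on an invented 100-pixel example. It assumes, without confirmation from the thread, that the reported figures follow these definitions; the numbers are made up to resemble, not reproduce, the ones quoted above.

```python
def pixel_accuracy(truth, pred):
    """Fraction of pixels where the prediction matches the label."""
    correct = sum(1 for t, p in zip(truth, pred) if t == p)
    return correct / len(truth)


def dice_coefficient(truth, pred):
    """Dice score for the building class (label 1)."""
    tp = sum(1 for t, p in zip(truth, pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(truth, pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(truth, pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    # If neither mask contains any building pixels, treat it as a perfect match.
    return 2 * tp / denom if denom else 1.0


# Invented example: 10 building pixels out of 100.
truth = [1] * 10 + [0] * 90
# The model finds 6 of the 10 building pixels, misses 4,
# and wrongly labels 4 background pixels as building.
pred = [1] * 6 + [0] * 4 + [1] * 4 + [0] * 86

print(f"accuracy = {pixel_accuracy(truth, pred):.2f}")
print(f"dice     = {dice_coefficient(truth, pred):.2f}")
```

Because background pixels dominate, the 86 correctly classified background pixels lift the accuracy even though 4 of the 10 building pixels are missed, which is why Dice is often the more informative figure for footprint extraction.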

ArcGIS also supports PSP-Net and DeepLabV3 models for pixel classification, so it would probably be worthwhile to try them for comparison. One of them may just work better with your source imagery.

Thanks very much, Tim. I have another question and hope you do not mind answering it in the same thread. I have trained an object detection model (MaskRCNN) using the arcgis.learn module. I want to train this model further using more training samples. Should I use the pretrained_path parameter or the load() function when retraining this already-trained model?

I had a quick look at the code, and it appears that when you use the pretrained_path parameter, it calls the same load function anyway. So should be good either way.
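
For illustration only, the two routes might look like the sketch below. It assumes the `MaskRCNN` class in `arcgis.learn` accepts a `pretrained_path` argument and exposes `load()` and `fit()` methods; the function names and the saved-model path are hypothetical, and `data` stands for the object returned by `prepare_data` on the new training samples.

```python
def continue_with_pretrained_path(data, saved_model_path, epochs=10):
    """Route 1: pass the saved model when constructing the new model."""
    # Imported inside the function so the sketch loads without ArcGIS installed.
    from arcgis.learn import MaskRCNN

    model = MaskRCNN(data, pretrained_path=saved_model_path)
    model.fit(epochs)
    return model


def continue_with_load(data, saved_model_path, epochs=10):
    """Route 2: construct the model first, then load the saved weights."""
    from arcgis.learn import MaskRCNN

    model = MaskRCNN(data)
    model.load(saved_model_path)
    model.fit(epochs)
    return model


# Hypothetical usage, with `data` prepared from the additional samples:
# model = continue_with_load(data, "path/to/saved_model", epochs=10)
```

Either way, the new training data should use the same classes as the original run so the loaded weights still match the model.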

Thanks Tim for getting back to me. I really appreciate your time and effort.