Copy Rows to Table tool produces different output between 10.3 and 10.3.1

I am using the Copy Rows to Table tool in a Python script to import a CSV table into a GDB. The table is more of a matrix, and therefore has text labels in certain cells. In 10.3 I am getting the text correctly; however, my colleague using 10.3.1 is getting null values for the text. Below are the results, and I have attached the CSV file.

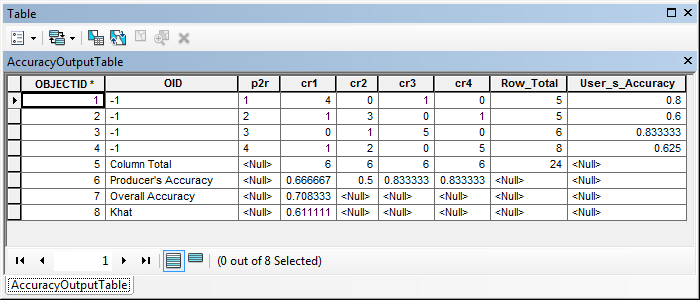

10.3 Output

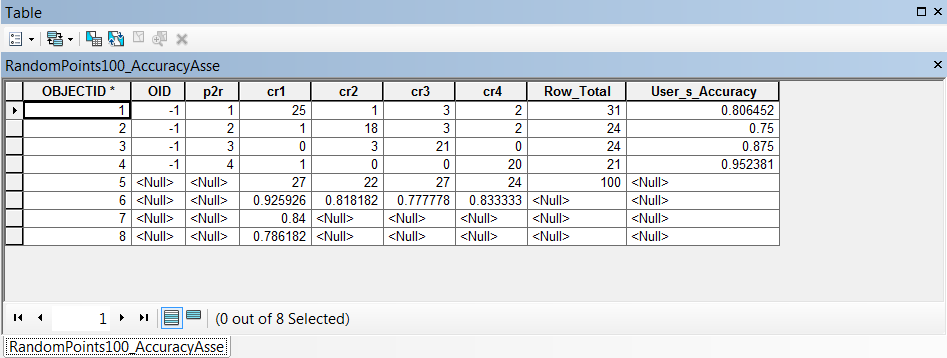

10.3.1 Output

Accepted Solutions

The behavior seen here is most likely related to the way the software interprets CSV and other text files. Typically, when a CSV or other text file is added to a map and the schema of the file cannot be reliably determined, a schema.ini file is created in the same directory to dictate how the file should be shown. In that case, the software attempts to create the file for you and specifies what it sees as the best fit, reading the first few rows to guess what type each field should be.

The schema.ini file can be overridden to specify explicitly how delimited text files are displayed within ArcGIS. One reason to use or modify the schema.ini file is that ArcGIS is misinterpreting a field type. The following example shows how to do this for a field called PLOTS, which should be displayed as type Text but is being interpreted as type Double, in a CSV file called Trees.

[Trees.CSV]

Col14=PLOTS Text

More information on this topic can be found here: Adding an ASCII or text file table - Overriding how text files are formatted: http://desktop.arcgis.com/en/desktop/latest/manage-data/tables/adding-an-ascii-or-text-file-table.ht...

The best recommendation in this case would be to see how the schema.ini file is being used in each case. Going by the two images alone, it would be best to create or modify your own schema.ini file, because the fields contain both text and numeric values. It would be easy for the software to interpret these fields as numeric, which would prevent the string information from being shown. Editing the schema.ini file so that these fields are set to Text should allow you to continue working as needed.
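A minimal sketch of generating such a schema.ini from Python, using only the standard library. The file name `matrix.csv`, the helper names, and the choice of declaring only OID as Text are assumptions for illustration; the column names are the ones from the CSV header quoted later in this thread. It declares every column in file order, with `Format` and `ColNameHeader` entries as in the Microsoft text driver's schema.ini format, and quotes names that contain spaces.

```python
import os


def build_schema_ini(csv_name, columns, text_columns):
    """Return schema.ini text that declares every column of csv_name.

    columns      -- column names in file order
    text_columns -- names to declare as Text; all others are declared Double
    """
    lines = ["[{}]".format(csv_name), "Format=CSVDelimited", "ColNameHeader=True"]
    for index, name in enumerate(columns, start=1):
        col_type = "Text" if name in text_columns else "Double"
        # Column names with embedded spaces are wrapped in double quotes.
        label = '"{}"'.format(name) if " " in name else name
        lines.append("Col{}={} {}".format(index, label, col_type))
    return "\n".join(lines) + "\n"


def write_schema_ini(csv_path, columns, text_columns):
    """Write schema.ini next to the CSV.

    Note: this overwrites an existing schema.ini in that folder, including
    any sections it holds for other text files.
    """
    folder, csv_name = os.path.split(os.path.abspath(csv_path))
    ini_path = os.path.join(folder, "schema.ini")
    with open(ini_path, "w") as handle:
        handle.write(build_schema_ini(csv_name, columns, text_columns))
    return ini_path


# Hypothetical file name; OID is assumed to be the column holding the labels.
columns = ["OID", "p2r", "cr1", "cr2", "cr3", "cr4", "Row Total", "User's Accuracy"]
schema_text = build_schema_ini("matrix.csv", columns, text_columns={"OID"})
print(schema_text)
```

Calling `write_schema_ini` with the real CSV path before running the import would place the file where the text driver looks for it; whether each ArcGIS version honors it identically is something to verify on both machines.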

Note the left and right alignment between your two examples.

In the OID column, the first is assumed to be text (left aligned); the second is right aligned and assumed to be numeric, so the text contents get dropped.

I presume the two tools read a different number of rows to decide whether the field is numeric or text.
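To illustrate that presumption, here is a toy model of row-sampled type guessing. It is not ArcGIS's actual algorithm: the function names and the scan sizes of 4 and 8 are made up for the example. It only shows how the same column can come out as text or as numeric-with-nulls depending on how many rows are sampled.

```python
def is_number(text):
    """True if the cell parses as a float."""
    try:
        float(text)
        return True
    except ValueError:
        return False


def guess_type(values, scan_rows):
    """Guess 'numeric' or 'text' from the first scan_rows non-empty values."""
    sample = [v for v in values if v != ""][:scan_rows]
    return "numeric" if sample and all(is_number(v) for v in sample) else "text"


def load_column(values, scan_rows):
    """Convert a column using the guessed type; unparseable cells become None."""
    if guess_type(values, scan_rows) == "text":
        return list(values)
    return [float(v) if is_number(v) else None for v in values]


# First column of a matrix-style CSV: numeric IDs followed by text labels.
oid_column = ["-1", "-1", "-1", "-1", "Column Total", "Producer's Accuracy"]

short_scan = load_column(oid_column, scan_rows=4)  # labels fall outside the sample
long_scan = load_column(oid_column, scan_rows=8)   # labels are seen, column stays text

print(short_scan)
print(long_scan)
```

With the short scan the labels are replaced by `None`, which matches the null values in the second screenshot; with the longer scan the whole column is kept as text.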

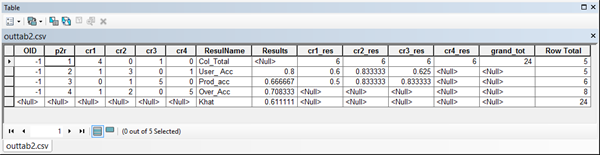

A tad confused, and I must be missing something... why is the whole file being read in as a table in the first place, when

- the first row is the column headings (OID,p2r,cr1,cr2,cr3,cr4,Row Total,User's Accuracy)

- rows 2-5 are the data

  - -1,1,4.000000000000000,0.000000000000000....

  - ....

  - -1,4,1.000000000000000,2.000000000000000....

- row 6 is the column totals (Column Total,,6.0,6.0,6.0,6.0,24.0), which will cause a problem: the text in the first column conflicts with the numeric values in the OID column, and a column has to have a common data type

- the rest (Producer's Accuracy,,0.666666666666...... / Overall Accuracy,,0.7083333333333334,,,,, / Khat,,) is the results of the Kappa analysis

I would only expect a spreadsheet or text editor to read the file in as it appears, and I would hope that a table or array would enforce the expectation of a common data type per column. Is this not the way that tables are used in GDBs?

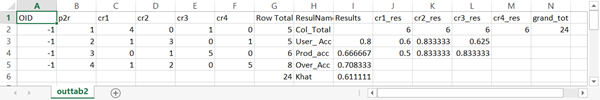

I started with a CSV file with the following values:

| OID | p2r | cr1 | cr2 | cr3 | cr4 |
| --- | --- | --- | --- | --- | --- |
| -1 | 1 | 4 | 0 | 1 | 0 |
| -1 | 2 | 1 | 3 | 0 | 1 |
| -1 | 3 | 0 | 1 | 5 | 0 |
| -1 | 4 | 1 | 2 | 0 | 5 |

Originally the values were copied and pasted into Excel to do the calculations, but in order to automate the process I used Python to make a series of calculations. I then combined the old values with the calculated values in a list, and the list was written to a CSV. As you can see, the table opens correctly in Excel. The only reason I was trying to bring it into a GDB is to better view it in ArcMap. The CSV is not read correctly, so I thought I would force it into a GDB to read it within the application. As stated above, our two applications are clearly reading the CSV differently as they create the GDB table. I am not sure whether that is a bug or expected behavior between the versions, but it seems the schema file would alleviate my issue and allow the CSV to open with the correct field types. Thanks for all the input!

Hi Timothy,

You may want someone in support to take a look at this. Spreadsheets and flat files (Excel, CSV, etc.) do not enforce field types. As such, ArcGIS should scan the first eight rows to determine the type of each field.

In your case the values are showing as NULL because the system determined that the field type is numeric. My guess is that this is related to the field names in your spreadsheet not being optimized for use in ArcGIS. I properly formatted the field names, and the data came across as you stated it did in 10.3.0.

Freddie, I wouldn't fix it. As I indicated in my post, a table is not the place to load spreadsheet data, because enforced column types are preferable. The values in rows 6 to the end of the file should be copied into new columns rather than remain in their current position. The spreadsheet could have been reformatted to produce this

from this

Although it works, it is cumbersome, and it demonstrates a good reason not to mix spreadsheets with ArcGIS.
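Whatever layout is chosen for the summary values, a first step is separating the consistently typed data rows from the summary rows. The sketch below does that with the standard `csv` module. The rule it uses (a row is data if its first cell parses as a number) is an assumption based on the file described in this thread, and the sample is a trimmed version of that file, not the attachment itself.

```python
import csv


def split_matrix_rows(csv_text):
    """Split CSV text into (header, data_rows, summary_rows)."""
    rows = list(csv.reader(csv_text.splitlines()))
    header, body = rows[0], rows[1:]
    data_rows, summary_rows = [], []
    for row in body:
        if not row:  # skip blank lines
            continue
        try:
            float(row[0])  # numeric first cell: treat as a data row
            data_rows.append(row)
        except ValueError:  # text label in the first cell: summary row
            summary_rows.append(row)
    return header, data_rows, summary_rows


# Trimmed, illustrative sample based on the values posted in this thread.
sample = (
    "OID,p2r,cr1,cr2,cr3,cr4\n"
    "-1,1,4,0,1,0\n"
    "-1,2,1,3,0,1\n"
    "-1,3,0,1,5,0\n"
    "-1,4,1,2,0,5\n"
    "Column Total,,6,6,6,6\n"
)
header, data_rows, summary_rows = split_matrix_rows(sample)
print(len(data_rows), "data rows;", len(summary_rows), "summary rows")
```

The data rows can then be written to their own CSV for import, with the summary rows stored separately or reshaped into extra columns.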

Hi Dan,

Thanks for the update, but I somewhat disagree with not mixing spreadsheets with database tables. Even outside of the ArcGIS platform there are plenty of valid workflows where users store data in a spreadsheet and later need to migrate that information into a database. I could replicate the behavior seen in this case within most relational databases (e.g. SQL Server, Oracle, PostgreSQL).

Although it may be cumbersome, certain practices must be followed in the creation of the spreadsheet to ensure that the data can easily be migrated. This is the reason why pages were created to assist users with this workflow.

Formatting a table in Microsoft Excel for use in ArcGIS

Understanding how to use Microsoft Excel files in ArcGIS

If you want to see an extreme, but valid, use of spreadsheets in ArcGIS, you should check out plug-in data sources.

Simple point plug-in data source

In a plug-in data source you can go so far as writing code that will get a text file to behave in the same manner of a feature class within ArcGIS. I'm still amazed by how many people would rather implement this instead of simply using data in a geodatabase.

Thanks Freddie... seen them, read them, try to get my students to read and follow them... but the reality is that, for the vast majority of people, their problems would be negligible if they followed these rules:

- keep the data, and only the data, on the first page, and follow the suggestions below

- analyses go on subsequent pages

- keep each column on the data page to one data type

- don't mix and match to make things look pretty

- format columns explicitly

- no blank rows

- there is no such thing as an empty cell... all cells are coded, for example:

  - not available: -888888

  - no value: -999999

  - no clue: -77777, or whatever is appropriate for your data type... you catch the drift

- if the table isn't used for analysis... what is it doing in ArcMap in the first place?

- if you really, really need it... hyperlink

Of course these have been my observations and not just with the Arc* things, but other software packages as well.
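A few of these rules (one data type per column, no blank rows, no empty cells) can be checked before the file ever reaches ArcMap. The following is a rough sketch with the standard `csv` module; the function names and the three-way number/text/empty classification are assumptions made for the example, not part of any ArcGIS tooling.

```python
import csv


def classify(cell):
    """Label a cell as 'empty', 'number', or 'text'."""
    if cell.strip() == "":
        return "empty"
    try:
        float(cell)
        return "number"
    except ValueError:
        return "text"


def check_columns(csv_text):
    """Return {column name: sorted cell kinds} for columns that break the rules.

    A column is reported if it mixes kinds or contains any empty cell.
    Blank rows count as an empty cell in every column.
    """
    rows = list(csv.reader(csv_text.splitlines()))
    header, body = rows[0], rows[1:]
    problems = {}
    for index, name in enumerate(header):
        kinds = {classify(row[index]) if index < len(row) else "empty" for row in body}
        if len(kinds) > 1 or "empty" in kinds:
            problems[name] = sorted(kinds)
    return problems


# Small illustrative sample with a label and a gap in the last row.
sample = (
    "OID,p2r,cr1\n"
    "-1,1,4\n"
    "-1,2,1\n"
    "Column Total,,6\n"
)
problems = check_columns(sample)
print(problems)
```

Here the OID column is flagged for mixing numbers and text and the p2r column for its empty cell, while cr1 passes.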

Couldn't agree more, Dan.

How many posts do we see here saying "I tried to get my horribly formatted piece of CSV / Excel / whatever into ArcGIS and it didn't work, what can I do..."?

I am an old timer, I know, but one thing I always emphasise with beginners is data, data and data: know what it is and what it means. Especially the coordinates.