Different-sized areas bias in thematic maps and possible solutions

In my organization, we are looking for a way to deal with the visual bias caused by differently sized areas in thematic maps at the census tract/block group level. We need to present our data as raw counts/totals, so normalization does not make sense. Other solutions, such as cartograms, don't make sense either. Any ideas?

We have two problems with large census tracts/block groups. First, larger areas naturally draw the map users' attention, especially if they are styled with darker colors. Second, the population in large census tracts is not as evenly distributed as it is in small census tracts. In large census tracts, the population is spatially clustered in a few small areas within the tract.

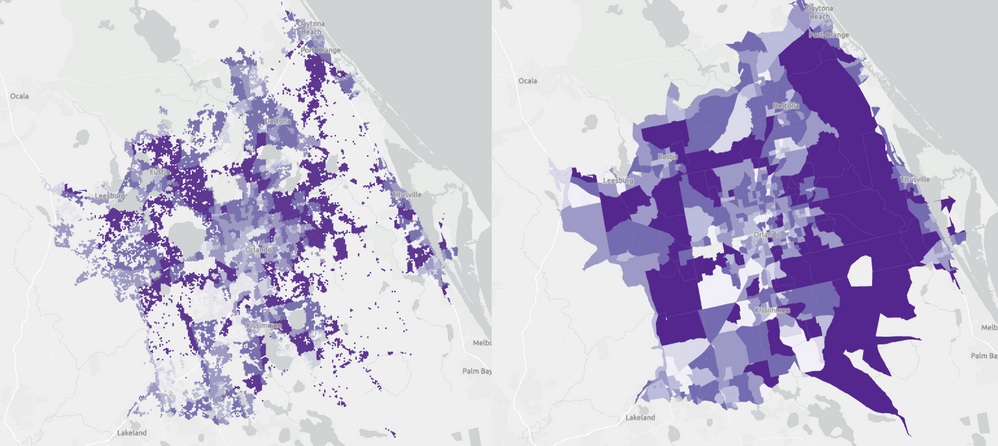

Here is my solution so far. I used the Microsoft building footprint data, which covers all building footprints in the United States, as a proxy for where the population is located within large census tracts. Then, I created a hexagonal tessellation in which each hexagon has a side length of 310 meters and an area of 0.25 km². The left-hand map shows the result for the Orlando (FL) area if I keep all hexagons with one or more building footprints inside. The right-hand map shows the original census tracts.

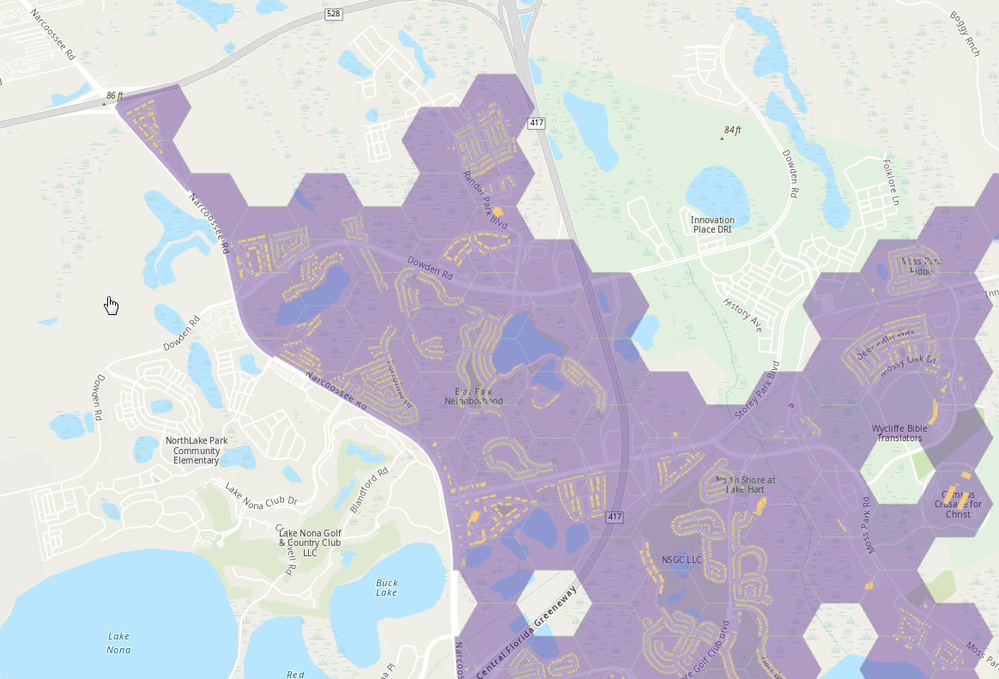
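As a quick check on the tessellation size mentioned above, here is a minimal sketch of the relation between the side length and the area of a regular hexagon, A = (3√3/2)·s². The function names are hypothetical and only for illustration.

```python
import math

def hexagon_area(side_m: float) -> float:
    """Area (in square meters) of a regular hexagon with the given side length."""
    return 3 * math.sqrt(3) / 2 * side_m ** 2

def hexagon_side(area_m2: float) -> float:
    """Side length (in meters) of a regular hexagon with the given area."""
    return math.sqrt(2 * area_m2 / (3 * math.sqrt(3)))

# A 310 m side gives roughly a quarter of a square kilometer.
area_km2 = hexagon_area(310) / 1_000_000
print(f"{area_km2:.4f} km^2")
```

Running the relation in the other direction (`hexagon_side`) is handy if you want to pick a target area first and derive the side length from it.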

In that area of Orlando, there are one million building footprints and 40,000 hexagons. The hexagons contain between 0 and 350 buildings. If I zoom in on the left-hand map, you can see that the hexagons only cover areas with buildings inside.

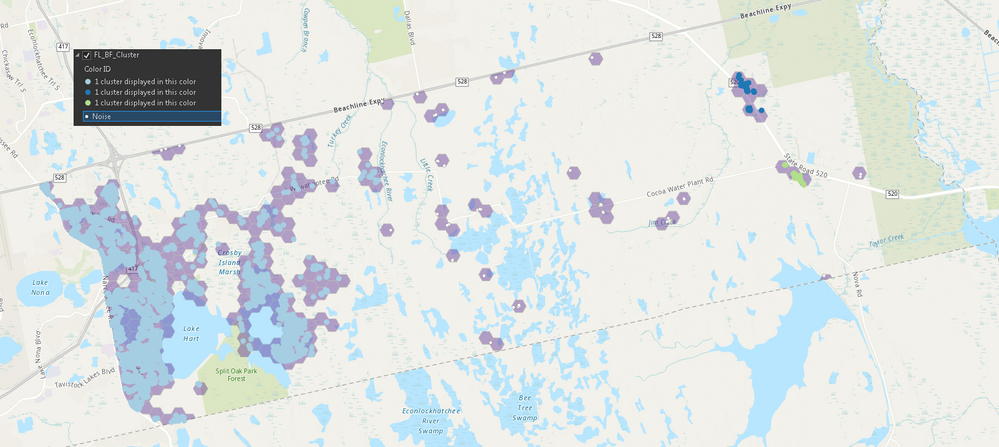
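The "keep only hexagons with at least one building" step can be sketched outside ArcGIS Pro as well. The example below is an illustration, not the workflow used for the maps above: it assumes projected coordinates in meters, building centroids instead of full footprints, and a pointy-top hexagon grid centered on the origin. The names `hex_cell` and `count_buildings` are hypothetical.

```python
import math
from collections import Counter

def hex_cell(x: float, y: float, side: float) -> tuple[int, int]:
    """Axial (q, r) index of the pointy-top hexagon that contains point (x, y)."""
    # Fractional axial coordinates.
    q = (math.sqrt(3) / 3 * x - y / 3) / side
    r = (2 / 3 * y) / side
    s = -q - r
    # Cube rounding: round all three, then fix the one with the largest error
    # so that q + r + s == 0 still holds.
    rq, rr, rs = round(q), round(r), round(s)
    dq, dr, ds = abs(rq - q), abs(rr - r), abs(rs - s)
    if dq > dr and dq > ds:
        rq = -rr - rs
    elif dr > ds:
        rr = -rq - rs
    return rq, rr

def count_buildings(centroids, side):
    """Count building centroids per hexagon; empty hexagons never appear."""
    return Counter(hex_cell(x, y, side) for x, y in centroids)

# Made-up centroids, in meters.
centroids = [(0, 0), (10, 5), (537, 0), (540, 3)]
counts = count_buildings(centroids, side=310)
print(counts)
```

Because the counter only ever receives hexagons that contain a centroid, hexagons with zero buildings are dropped implicitly, which mirrors the filtering described above.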

My next step will be to remove non-residential buildings using land-use data. Finally, I am thinking of using a density-based clustering algorithm to remove the noise, that is, isolated buildings that are not relevant to our business context:

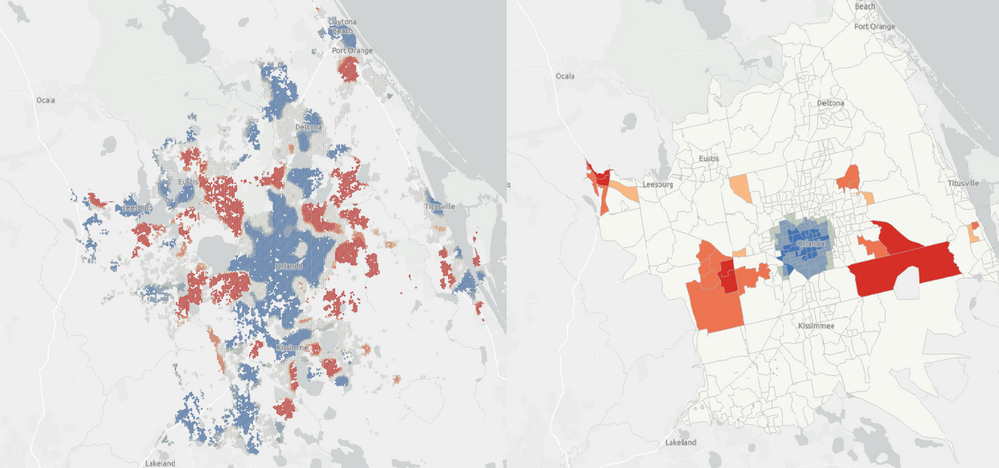
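To make the noise-removal idea concrete, here is a minimal pure-Python sketch of DBSCAN, one common density-based clustering algorithm. It is my assumption that something like DBSCAN is meant; the thread does not name a specific algorithm, and ArcGIS Pro has its own density-based clustering tool. The `eps` and `min_pts` values are illustrative, and the brute-force neighbor search is O(n²), so this is only suitable for small samples.

```python
import math

def dbscan_noise(points, eps, min_pts):
    """Return the indices of points that DBSCAN would label as noise."""
    def neighbors(i):
        # All points within eps of point i (including i itself).
        return [j for j, p in enumerate(points) if math.dist(points[i], p) <= eps]

    labels = {}  # index -> cluster id, or -1 for (provisional) noise
    cluster = 0
    for i in range(len(points)):
        if i in labels:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # not a core point; may later become a border point
            continue
        labels[i] = cluster
        queue = list(seeds)
        while queue:
            j = queue.pop()
            if labels.get(j) == -1:
                labels[j] = cluster  # former noise reached from a core point
            if j in labels:
                continue
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_pts:
                queue.extend(nbrs)  # j is a core point: keep expanding
        cluster += 1
    return {i for i, label in labels.items() if label == -1}

# Four buildings close together and one isolated building (made-up coordinates).
buildings = [(0, 0), (1, 0), (0, 1), (1, 1), (50, 50)]
noise = dbscan_noise(buildings, eps=1.5, min_pts=3)
print(noise)
```

The returned indices are the isolated buildings you would drop before counting footprints per hexagon.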

In the end, we are going to use hot spot analysis. Interestingly, the output of the hot spot analysis is quite different if we use hexagons instead of census tracts.

Do you have any ideas for improving my approach, or can you suggest a better one?

Thanks!

The most common way of displaying raw data without normalizing is to use proportional symbols.
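As a rough illustration of how proportional symbols are usually scaled, the sketch below sizes a circle so that its area, not its radius, is proportional to the raw count. The function name and the `max_radius` default are hypothetical, and ArcGIS Pro's proportional symbology handles this scaling for you; the snippet only shows the underlying arithmetic.

```python
import math

def symbol_radius(value: float, max_value: float, max_radius: float = 20.0) -> float:
    """Radius of a circle whose area is proportional to value.

    The largest value in the dataset (max_value) gets max_radius.
    """
    if value <= 0 or max_value <= 0:
        return 0.0
    return max_radius * math.sqrt(value / max_value)

# Made-up tract totals: a quarter of the maximum count gets half the radius.
for total in (10_000, 2_500, 625):
    print(total, symbol_radius(total, max_value=10_000))
```

Scaling by the square root keeps a tract with four times the count from looking sixteen times larger, and the symbol size no longer depends on the size of the tract polygon.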

Just some further thoughts - you have already recognized that disaggregating population data using building footprints has issues, because not all buildings are residential. Using land use to assist is good, but what happens when you get mixed land-use areas (which are really common), such as commercial/residential? One factor you may not have considered is that each footprint is not necessarily one family - what about high-density multi-story buildings with hundreds of people in a single footprint?

Your hexagons are very small relative to the overall area. The smaller you make them, the more chance of errors during the disaggregation. It wouldn't be hard to pick out some individual hexagons that are not correct and cast doubt on the entire map. It all comes down to the purpose of your mapping - if it is being used for policing, healthcare, policy making, strategic planning etc. then it might be quite important to ensure the results are accurate.

Thank you, all your comments are really helpful.

Regarding the mixed land uses, commercial/residential, I can assume that the land use is residential because I am interested only in residential buildings. Typically, when a building has mixed uses, the commercial space is on the ground floor and apartments above.

Regarding multi-story buildings with hundreds of people in a single footprint, I can use the building height and the footprint area that come with the data to calculate each building's volume. The volume should be a good proxy for the number of residents in the building, assuming that, on average, buildings are shaped like rectangular prisms. Using the total volume per hexagon, you can disaggregate to the hexagon level in proportion to the estimated number of residents in each hexagon. In the end, all people living in the same census tract/block group are assumed to have the same demographic characteristics; therefore, the larger the volume of a building, the higher the value of the analysis variable assigned to that building.
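The volume-weighted idea described above could be sketched as follows. This is only an illustration under stated assumptions: each building is a rectangular prism (volume = footprint area × height), residents are proportional to volume, and the record layout `(hex_id, footprint_area_m2, height_m)` and the function name are hypothetical.

```python
from collections import defaultdict

def allocate_by_volume(tract_total: float, buildings) -> dict:
    """Split a tract-level total across hexagons in proportion to building volume.

    buildings: iterable of (hex_id, footprint_area_m2, height_m) tuples
    for the residential buildings inside one census tract.
    """
    volume_by_hex = defaultdict(float)
    for hex_id, area_m2, height_m in buildings:
        volume_by_hex[hex_id] += area_m2 * height_m  # prism volume
    total_volume = sum(volume_by_hex.values())
    if total_volume == 0:
        return {}
    return {h: tract_total * v / total_volume for h, v in volume_by_hex.items()}

# Made-up tract: two small houses in hexagon A, one apartment block in hexagon B.
buildings = [("A", 100, 3), ("A", 100, 3), ("B", 200, 12)]
shares = allocate_by_volume(1000, buildings)
print(shares)
```

The hexagon shares always sum back to the tract total, so the tract-level figure is preserved.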

In any case, I am not trying to disaggregate to the hexagon level. I just want to reduce the visual bias produced by the large census tracts by removing the hexagons with zero buildings inside. If I overlay each census tract's outline on top of the hexagons, it will be clear where people live within a large census tract. My data is still at the census tract level.