Detect Objects Using Deep Learning Error with new RTX 3060

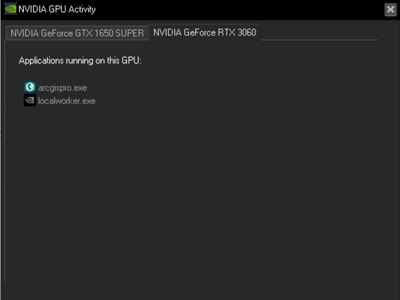

An error appears when I run the "Detect Objects Using Deep Learning" tool in ArcGIS Pro with a new RTX 3060 GPU. The model is usa_building_footprints.dlpk, and I am using ArcGIS Pro 2.7.3. The same model runs without issues on my GTX 1650 Super GPU on the same computer with the same settings. ArcGIS Pro does appear to engage the GPU, since the application is shown running on it:

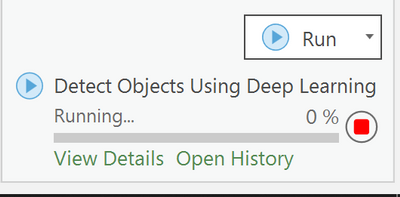

However, the program shows as "Running" but never progresses past 0%.

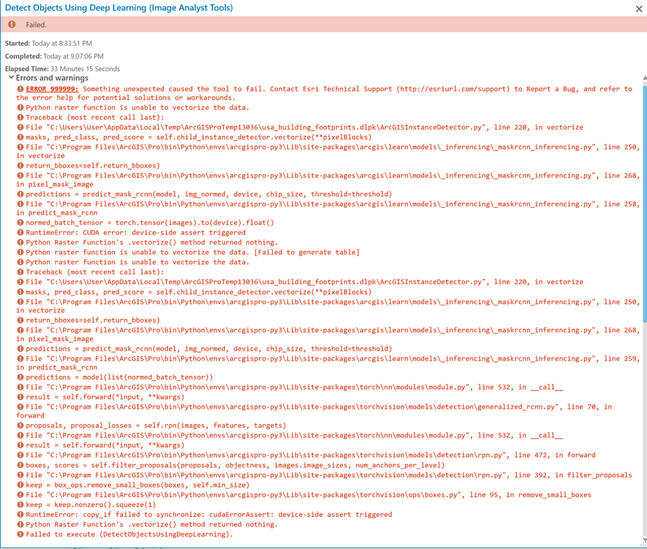

After about 30 minutes of "running", the following error message is returned:

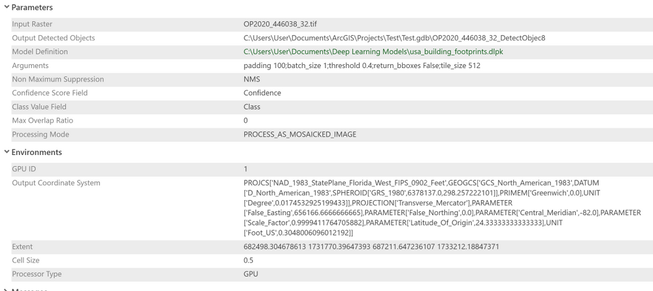

I used the Input Parameters and Environment below. Again, it runs fine with the GTX 1650 Super GPU. All drivers are up to date on the new RTX 3060.

Thank you for any help or troubleshooting ideas.

Have a look at the recent blogs on this and related topics, especially since your hardware is new:

Deep Learning with ArcGIS Pro Tips & Tricks: Part 1 (esri.com)

Deep Learning with ArcGIS Pro Tips & Tricks: Part 2 (esri.com)


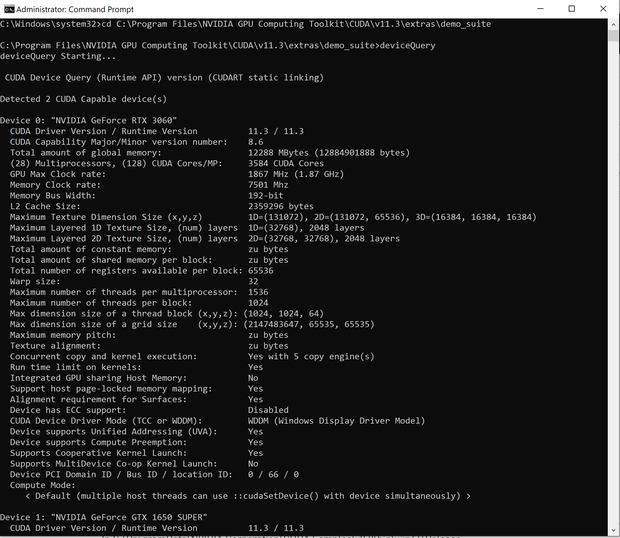

Thanks, Dan. I have worked through the recommended steps in the blogs. The CUDA Toolkit has been installed, and running deviceQuery shows that both cards are CUDA-capable and detected.

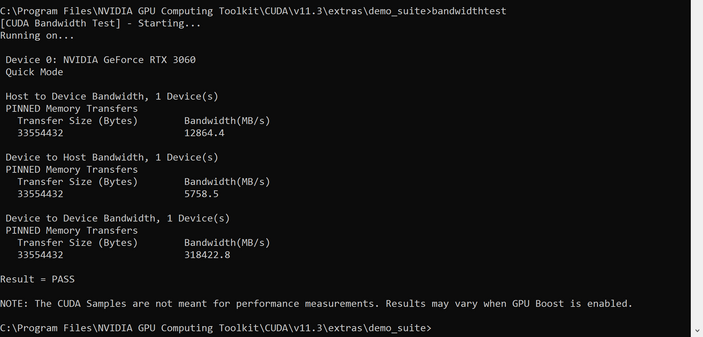

bandwidthTest also passed.

The Deep Learning Libraries for ArcGIS Pro have been installed. I am using the default arcgispro-py3 environment.

I am not sure what else to try. The out-of-box model works fine with the GTX 1650 Super, but not with the new RTX 3060.
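deviceQuery only confirms that the driver and CUDA Toolkit can see the cards, so it may also help to check what PyTorch itself reports from inside the active Python environment. The following is a minimal sketch rather than an Esri-provided diagnostic: `describe_torch_cuda` is a hypothetical helper name, and it assumes the Deep Learning Libraries installed PyTorch into the environment being used.

```python
def describe_torch_cuda():
    """Summarise what PyTorch can see, as a plain dict that is easy to paste into a post."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this environment.
        return {"torch_installed": False}

    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # CUDA version PyTorch was built against (None for CPU-only builds).
        "built_for_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
        "devices": [],
    }
    if info["cuda_available"]:
        for index in range(torch.cuda.device_count()):
            major, minor = torch.cuda.get_device_capability(index)
            info["devices"].append({
                "index": index,
                "name": torch.cuda.get_device_name(index),
                "compute_capability": f"{major}.{minor}",
            })
    return info


if __name__ == "__main__":
    for key, value in describe_torch_cuda().items():
        print(f"{key}: {value}")
```

If `built_for_cuda` reports a 10.x version while one of the listed devices is the RTX 3060, that would be consistent with the framework build predating the card.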

An issue was just posted to Esri's deep learning GitHub site about this (may be caused by a lack of CUDA 11 support in the underlying packages):

https://github.com/Esri/deep-learning-frameworks/issues/17

Probably worth adding your name to that as well - the more people raising it the more likely it might get fixed.

Thanks Tim. I added my name and issue to that post on GitHub.

Hi Hulseyj, I have just run into the same problem as you. Did you find a resolution?

Thanks Andrew. Glad to know I am not the only one experiencing the issue. No resolution yet.

Have you tried downloading this?

This is 100% caused by the lack of CUDA 11 support and the lack of updated libraries for PyTorch, TensorFlow, etc.

The latest RTX 30 series GPUs require CUDA 11.

Esri's current "Deep Learning Frameworks", as linked above, use CUDA 10.1.

Unfortunately, looking at the pre-release frameworks for ArcGIS Pro 2.8 (available here: https://anaconda.org/Esri/deep-learning-essentials/files) they have still not updated to CUDA 11, or updated PyTorch/TensorFlow. I do not understand why not. I think this comes down to demand.
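To make the mismatch concrete, here is a small sketch that compares a GPU's compute capability with the CUDA version a framework was built against. The lookup table is a hand-maintained assumption based on NVIDIA's public release notes, not an Esri or PyTorch API, and `toolkit_targets_gpu` is a hypothetical helper name. A `False` result means "not natively targeted by that toolkit" rather than "can never run".

```python
# First CUDA toolkit release with native support for each compute capability.
# Assumption: hand-copied from NVIDIA release notes; extend as needed.
FIRST_CUDA_FOR_CAPABILITY = {
    (7, 5): (10, 0),  # Turing, e.g. GTX 1650 Super, Quadro RTX 5000
    (8, 0): (11, 0),  # Ampere data-centre parts, e.g. A100
    (8, 6): (11, 1),  # Ampere GeForce, e.g. RTX 3060, RTX 3080
}


def parse_version(text):
    """Turn a version string such as '10.1' into a comparable (major, minor) tuple."""
    major, minor = text.split(".")[:2]
    return int(major), int(minor)


def toolkit_targets_gpu(capability, cuda_version):
    """Return True if the given CUDA version natively targets the compute capability."""
    first = FIRST_CUDA_FOR_CAPABILITY.get(tuple(capability))
    if first is None:
        raise KeyError(f"compute capability {capability} is not in the lookup table")
    return parse_version(cuda_version) >= first


if __name__ == "__main__":
    print("GTX 1650 Super with CUDA 10.1:", toolkit_targets_gpu((7, 5), "10.1"))
    print("RTX 3060 with CUDA 10.1:", toolkit_targets_gpu((8, 6), "10.1"))
```

This matches what is reported in this thread: the Turing card works with the CUDA 10.1 frameworks, while the RTX 3060 does not.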

Everyone who has this issue should be posting and making sure that ESRI knows this is a problem for an increasing number of users -- and will obviously only increase further as more people get the latest GPUs. With how much ESRI is touting ArcGIS deep learning capabilities, this slow response is quite surprising.

I am getting the same error (below) when training deep learning models in some instances. I am running an NVIDIA GeForce RTX 3080 (laptop). I can train the same models on a desktop Quadro RTX 5000 that has CUDA Toolkit 11.2 installed alongside an instance of CUDA 10.1. For what it's worth...

File "C:\Users\natep\AppData\Local\ESRI\conda\envs\DeepLearning\Lib\site-packages\torchvision\ops\boxes.py", line 95, in remove_small_boxes

keep = keep.nonzero().squeeze(1)

RuntimeError: copy_if failed to synchronize: cudaErrorAssert: device-side assert triggered

Failed to execute (TrainDeepLearningModel).
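CUDA errors such as "device-side assert triggered" are reported asynchronously, so the Python line in the traceback is not always the operation that actually failed. One general way to narrow it down is to set the standard `CUDA_LAUNCH_BLOCKING` environment variable before CUDA is initialised. The sketch below assumes training is run from a standalone script or notebook rather than from the geoprocessing pane; `enable_cuda_debug_env` is a hypothetical helper name, and the optional `CUDA_VISIBLE_DEVICES` setting is only useful on machines with more than one GPU.

```python
import os


def enable_cuda_debug_env(visible_devices=None):
    """Set CUDA debugging variables; call this before importing torch or arcgis.learn."""
    # Make kernel launches synchronous so errors surface at the failing call.
    os.environ["CUDA_LAUNCH_BLOCKING"] = "1"
    if visible_devices is not None:
        # Restrict CUDA to the listed device indices, e.g. "0" or "0,1".
        os.environ["CUDA_VISIBLE_DEVICES"] = str(visible_devices)
    names = ("CUDA_LAUNCH_BLOCKING", "CUDA_VISIBLE_DEVICES")
    return {name: os.environ[name] for name in names if name in os.environ}


if __name__ == "__main__":
    print(enable_cuda_debug_env(visible_devices="0"))
```

Synchronous launches slow training down considerably, so this is only meant for reproducing the error, not for normal runs.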