Deep Learning Not Using GPU

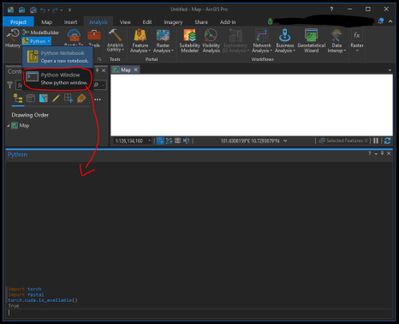

G'day, I am very new to the world of ArcGIS and would like to set up a deep learning model to automatically detect objects within an image. I have created the necessary image chips (using "Export Training Data For Deep Learning"), but when I run "Train Deep Learning Model" and select my GPU (RTX 2070), it just uses my CPU. I have followed the guide called "Deep Learning With ArcGIS Pro Tips and Tricks: Part 1". However, when I get to the final step of running the import torch and fastai commands in the Python window, no results are shown.

If anyone could help me out that would be fantastic!!

Guide for setting up deep learning


When I go to test that, I do not get any results; neither "True" nor "False" comes up. I thought I had installed CUDA correctly.


Hi Stevohu,

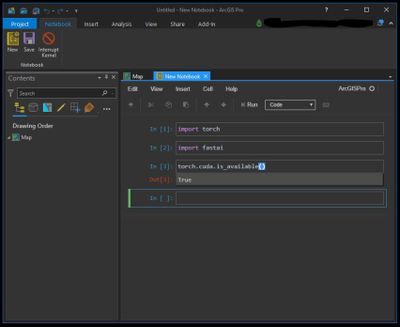

I followed the steps from the guide you linked above, and the result was 'True'.

Another way is to use a Python notebook; the result there was also 'True'.

Press 'Shift' + 'Enter' to run the code.
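For anyone repeating this check, here is a minimal sketch of a test that always prints something, so an empty output cannot be mistaken for a result. It uses only `torch.cuda.is_available()`, `torch.cuda.device_count()` and `torch.cuda.get_device_name()`; the helper name `gpu_report` is made up for illustration and is not part of the guide. It assumes it is run in the same Python environment that ArcGIS Pro uses for deep learning.

```python
import importlib


def gpu_report():
    """Collect basic facts about how PyTorch sees the GPU (hypothetical helper)."""
    report = {"torch_installed": False, "cuda_available": False, "devices": []}
    try:
        # Import lazily so a missing or broken install is reported, not raised.
        torch = importlib.import_module("torch")
    except (ImportError, OSError):
        return report

    report["torch_installed"] = True
    report["torch_version"] = torch.__version__
    report["cuda_available"] = bool(torch.cuda.is_available())
    if report["cuda_available"]:
        # List every CUDA device PyTorch can see, in GPU ID order.
        report["devices"] = [
            torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())
        ]
    return report


# print() forces output even where a bare expression would not be echoed.
print(gpu_report())
```

If `torch_installed` comes back `False`, the deep learning libraries are probably not installed in the active environment; if `cuda_available` is `False`, PyTorch is present but cannot reach the GPU through CUDA.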


I used Python Notebook and got this result.

So I ran the "Train Deep Learning Model" tool with the following settings:

Model Type: Single Shot Detector

Zooms: 1.0

Ratios: [1.0, 1.0]

Chip Size: 256

Backbone Model: ResNet-101

Processor Type: GPU

GPU ID: 0

When it started running, it maxed out my CPU (i5 9600K @ 5.1 GHz), but it was not using my GPU at all.
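One thing that may be worth ruling out: the default GPU graphs in Windows Task Manager do not always show CUDA compute load, so a busy GPU can look idle there. A rough sketch for checking utilization directly through `nvidia-smi` (which ships with the NVIDIA driver) while training runs is below. The function names are hypothetical, and the sketch assumes `nvidia-smi` is on the PATH.

```python
import shutil
import subprocess


def parse_gpu_line(line):
    """Split one 'name, utilization, memory' CSV row into a dict of strings."""
    # rsplit keeps any comma inside the GPU name intact.
    name, util, mem = [part.strip() for part in line.rsplit(",", 2)]
    return {"name": name, "util_percent": util, "memory_used_mib": mem}


def query_gpus():
    """Return one dict per GPU, or None if nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=name,utilization.gpu,memory.used",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return None
    return [parse_gpu_line(row) for row in result.stdout.splitlines() if row.strip()]


print(query_gpus())
```

Running this a few times during training should show whether utilization and memory use on GPU 0 rise above idle. If both stay near zero while the CPU is maxed out, the training job really is running on the CPU.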


I'm having the exact same problems. Did you ever get it figured out?


Hi @BrennaOlson ,

Have you tried updating your ArcGIS Pro version?