- Batch/Power Set Data Source

Data sources often update on an annual or semi-regular basis, and we start using the new datasets in that year's projects. In regional planning, much of our work is cyclical or annual, so many of our workflows involve copying last year's project and updating it with new data. We also sometimes get the design of some layers looking nice and need to make a new map with the same symbology and labeling, so we'll copy the map, but we still have to either add the new data and import the symbology for each layer manually, or manually set the data source for each individual layer. If there were a way, maybe from the data source tab of the TOC, to change the source en masse for all layers living in that location, it would be a huge timesaver.

The 'power' part of this comes from the fact that sometimes it's not just the .gdb or folder that's named with a new year, but the feature class or .shp itself. In this case, it would be nice to be able to select the portion of the name containing the date/year with a wildcard (similar to the options available in ModelBuilder's batching tools or PowerToys PowerRename).
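Until something like this exists in the interface, the year swap described above can be approximated in a script. The following is only a rough sketch, assuming the layers are file geodatabase or shapefile layers whose connection properties are a dictionary of strings; `bump_year` and `update_project_sources` are hypothetical helper names, and the example paths are made up. The `arcpy.mp` calls used (`ArcGISProject`, `listMaps`, `listLayers`, `supports`, `connectionProperties`, `updateConnectionProperties`) only run inside an ArcGIS Pro Python environment.

```python
import re


def bump_year(conn_props, old_year, new_year):
    """Return a copy of conn_props with old_year swapped for new_year in every string."""
    # Match the year only when it is not part of a longer run of digits.
    pattern = re.compile(r"(?<!\d)" + re.escape(old_year) + r"(?!\d)")

    def walk(value):
        if isinstance(value, str):
            return pattern.sub(lambda match: new_year, value)
        if isinstance(value, dict):
            return {key: walk(item) for key, item in value.items()}
        return value  # leave non-string values untouched

    return walk(conn_props)


def update_project_sources(aprx_path, old_year, new_year):
    """Hypothetical wrapper: apply bump_year to every layer in a project."""
    import arcpy  # imported lazily; requires an ArcGIS Pro Python environment

    aprx = arcpy.mp.ArcGISProject(aprx_path)
    for a_map in aprx.listMaps():
        for layer in a_map.listLayers():
            if not layer.supports("DATASOURCE"):
                continue  # e.g. group layers
            current = layer.connectionProperties
            if not current:
                continue
            updated = bump_year(current, old_year, new_year)
            if updated != current:
                layer.updateConnectionProperties(current, updated)
    aprx.save()


# Made-up connection properties, shaped like those of a file geodatabase layer.
example = {
    "dataset": "Parcels_2022",
    "workspace_factory": "File Geodatabase",
    "connection_info": {"database": r"C:\data\Region_2022.gdb"},
}
print(bump_year(example, "2022", "2023"))
```

Because the replacement is applied to the dataset name as well as the workspace path, it covers the case where the feature class itself carries the year. Symbology and labeling stay with the layer, since only its source is repointed.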

Is this something that you can't do with https://pro.arcgis.com/en/pro-app/latest/help/projects/update-data-sources.htm ?

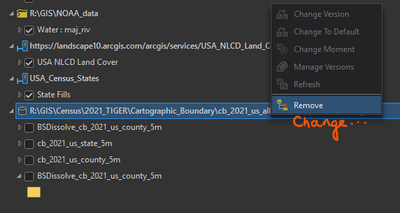

The screenshot seems to indicate that what you're asking for is https://community.esri.com/t5/arcgis-pro-ideas/replace-a-data-source-in-list-by-source-tab/idi-p/937...

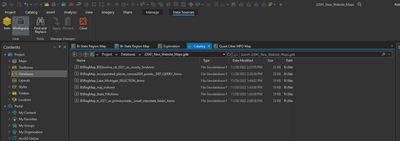

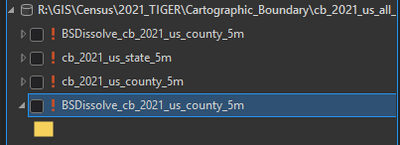

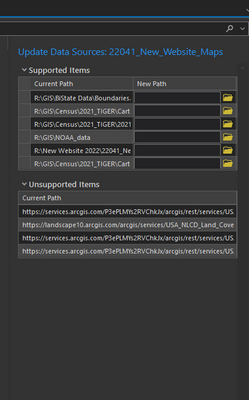

@KoryKramer I didn't know about that, thanks! I'm stuck on step 2/3 though...the buttons/rows it's describing are not there. The Item/Workspace toggles don't do anything, it just shows the catalog.

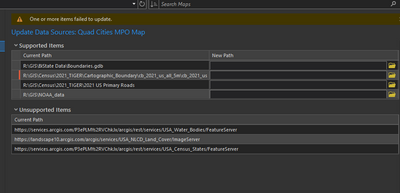

Ok, I got it to open by going the 2nd route (right-click map > Update data sources), but it failed. It doesn't say why, but I suspect it's because there's no support for wildcards and the feature classes have different names. It doesn't know what to look for, so the wildcard functionality would be key.

I can see the utility of that interface for updating multiple data sources in one place, but the option to update them in the area described would take fewer clicks and be more intuitive. Even once you get to this catalog view, you're still clicking that folder icon and navigating to individual directories, so it would make more sense if you could right-click the source locations straight away from the map and go right to the Change Data Source box. That's what you're looking to update; it doesn't make a whole lot of sense for it to be a right-click on Map. The list by source view is just fine, and I'm not sure why we need a separate, more cumbersome one hidden in the catalog just for changing them.

Plus, it comes up very narrow, which makes the paths really hard to distinguish. You can manually resize at the expense of viewing your content, but that's not so great when you go back to other views.

@wayfaringrob As a suggestion, which is tangential to the topic here, I would recommend looking into moving to a more traditional, transactional database model. At my agency, we also used to do the annual copy and rebuild routine. Then a few years ago I discovered the transactional database model of retire and replace, where old or updated features are retired (usually by a Boolean or similar field), features being carried forward into the new year are copied and pasted into the same feature class, and then the new features are updated. Typically, you also record which feature retired a feature by recording a unique ID or GlobalID in a "RetiredBy" column (see Esri's Parcel Fabric model). The feature class just keeps growing every year, with all the historical years residing in the same feature class as the current features.

Many of the changes can be scripted in Python or ModelBuilder, thus automating a lot of labor and reducing your staff hours. What this gets you is a database where historical and current features reside in the same table. Predefined definition queries filter the views accordingly in your maps, so you only have to create a single map that always points to the same feature class and always displays only the features you want/need. Layouts can be easily updated to reflect changes in years, stats, etc. This eliminated countless hours of data and cartography wrangling. It also allowed us to report on past activity more easily, as we were now able to query just one database for past stats instead of having to query multiple databases, one at a time.
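To make the retire-and-replace idea concrete, here is a minimal in-memory sketch in plain Python. It does not touch a geodatabase, and the field names (`uid`, `year`, `zoning`, `retired`, `retired_by`) and the helper functions are assumptions chosen for illustration, not a schema from this thread or from the Parcel Fabric.

```python
def retire_and_replace(table, old_uid, new_feature):
    """Retire the active feature with old_uid and add new_feature to the same table."""
    for feature in table:
        if feature["uid"] == old_uid and not feature["retired"]:
            feature["retired"] = True
            feature["retired_by"] = new_feature["uid"]  # link old to new
            break
    else:
        raise KeyError(f"no active feature with uid {old_uid!r}")
    table.append({**new_feature, "retired": False, "retired_by": None})


def current_view(table):
    """Rough stand-in for a definition query such as: retired = 0."""
    return [feature for feature in table if not feature["retired"]]


def year_view(table, year):
    """Rough stand-in for a definition query that filters on a year field."""
    return [feature for feature in table if feature["year"] == year]


# One table holds both historical and current features.
parcels = [
    {"uid": 1001, "year": 2022, "zoning": "R1", "retired": False, "retired_by": None},
    {"uid": 1002, "year": 2022, "zoning": "C2", "retired": False, "retired_by": None},
]
retire_and_replace(parcels, 1001, {"uid": 2001, "year": 2023, "zoning": "R2"})

print([feature["uid"] for feature in current_view(parcels)])
print([feature["uid"] for feature in year_view(parcels, 2022)])
```

The map only ever points at one table; the two view functions play the role that definition queries would play on the layers.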

When we first started this, we used an FGDB, but after some time we upgraded to an EGDB on SQL Server, which improved database performance a bit and made reporting easier, as we were able to connect Excel-based reports directly to the SQL table. That said, the FGDB worked well in its own right.

This solution may or may not work for you, but it may be worth looking into.

@RandyCasey I'll have to look into that. Thanks for sharing! Typically these are standalone datasets published by external parties, so we would have to figure out a way to compare them to identify which features have not changed, but we could definitely work that into a model I suppose.

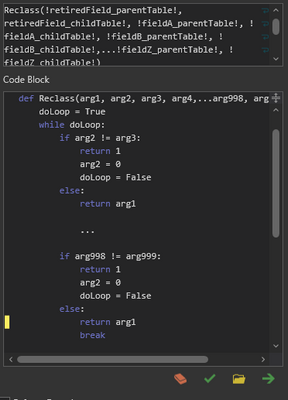

Well, the best method of doing that would be in Python, but if that is not an option for you, then a model could be constructed to perform similar actions: not as efficient, but it would get the job done. The key would be to make sure all of your existing features have a unique ID (UID) field. This field should not be the OBJECTID field or the GlobalID field. We have adopted a simple Unix timestamp for our UID fields, which can be generated using Field Calculator and Sequential Calculations (How To: Sort and create a sequentially ordered ID field in an attribute table in ArcGIS Pro (esri.co...). This value should remain consistent and should be maintained by all parties who maintain/update/edit the data.

Once this has been established, it is a fairly simple task to create a model that would reconcile the features with matching UIDs. This concept assumes that the parent feature class (the one maintained by you) and the child feature class (the one brought in from the 3rd party) are in the same map and have been related by the UID, and that <retiredField> has been set to "None" using Field Calculator (which sets the field to Null) in all features in the child feature class (this is important!). The model would need to join the two feature classes by UID and then, with the Field Calculator, compare the matching fields in the join with a code block to find out whether any have been updated, i.e., calculating from the <retiredField> column on the parent side of the join:

Using Code Block:

...repeating for each field to be compared until the while loop returns false or all fields have been compared and the "else:" after the final if statement has been activated (this must be put in after the final if statement, or your while loop will loop infinitely).
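As a rough illustration of the kind of comparison a Field Calculator code block could perform, here is a minimal sketch. It is not the code block from this post: it uses a `for` loop over paired values instead of a `while` loop with repeated `if` statements, and the function name and the field names in the comment are hypothetical.

```python
def fields_differ(parent_values, child_values):
    """Return True as soon as any pair of compared values differs."""
    for parent_value, child_value in zip(parent_values, child_values):
        if parent_value != child_value:
            return True  # one difference is enough to treat the feature as updated
    return False  # every compared field matched


# In Field Calculator, the expression would pass the joined field values, e.g.
# (hypothetical table and field names):
#   fields_differ([!Parent.ZONING!, !Parent.OWNER!], [!Child.ZONING!, !Child.OWNER!])
print(fields_differ(["R1", "Smith"], ["R1", "Smith"]))
print(fields_differ(["R1", "Smith"], ["R2", "Smith"]))
```

Because the loop always ends when the value pairs run out, there is no risk of looping forever; how the True/False result is written into the retired field is left to the model.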

Once the update has finished, the model can then remove the join, find any features activated in the child feature class (select by attribute using the retired field, where the field = 0), and append them into the parent feature class.

If you are maintaining a true transactional database model (where you have a Retired By field), you could perform one more join, this time only in your parent feature class, where you join the newly appended features to their recently retired counterpart and update the Retired By Field with the GlobalID of the newly appended feature, thus maintaining a historical chain of events in your parent feature class.
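The whole reconcile pass described above (join on UID, flag changes, append, record Retired By) can be sketched in plain Python on lists of dictionaries. This is only an outline under stated assumptions: the field names are hypothetical, `uuid.uuid4()` stands in for the GlobalID a geodatabase would assign on append, and child features with no matching UID are treated as new and appended, which the description above does not spell out.

```python
import uuid


def reconcile(parent, child, compare_fields):
    """Retire changed parent features, append replacements, and link them."""
    # The "join": look up active parent features by their UID.
    active = {f["uid"]: f for f in parent if not f["retired"]}
    appended = []
    for incoming in child:
        existing = active.get(incoming["uid"])
        if existing is not None and all(
            existing[name] == incoming[name] for name in compare_fields
        ):
            continue  # unchanged: keep the existing parent feature as-is
        new_feature = {
            **incoming,
            "global_id": str(uuid.uuid4()),  # stand-in for a real GlobalID
            "retired": False,
            "retired_by": None,
        }
        if existing is not None:
            existing["retired"] = True
            existing["retired_by"] = new_feature["global_id"]
        appended.append(new_feature)  # assumption: unmatched UIDs are new features
    parent.extend(appended)
    return appended


parent_fc = [
    {"uid": 1, "global_id": "a", "zoning": "R1", "retired": False, "retired_by": None},
    {"uid": 2, "global_id": "b", "zoning": "C2", "retired": False, "retired_by": None},
]
child_fc = [{"uid": 1, "zoning": "R2"}, {"uid": 2, "zoning": "C2"}]

added = reconcile(parent_fc, child_fc, ["zoning"])
print(len(added), parent_fc[0]["retired"], parent_fc[1]["retired"])
```

A real script would do the same steps with geoprocessing tools or cursors; the point here is only the order of operations and the Retired By link.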

A model to do all of the above would take some time to create and fine tune, but it could be done. Again, a Python script would be way more effective and less fiddly, but you have to use the tools you know.

I have probably oversimplified a few steps here, so a lot of experimentation and research into the appropriate GP tools would be needed before you had a final working model, but the overall process is logical and could be completed.
