How to bulk insert multiple features from JSON array with Add Feature output connector in GeoEvent Server?

I have begun working with GeoEvent Server and for an early project I am using the Add Feature output connector to insert features from a standard JSON array. The JSON is being piped in from the Receive JSON input connector. I have noticed that GeoEvent Server will create an event for each element of the JSON array. The JSON is structured so that each element represents a new feature. I would like to know if there is a way to treat all of the array elements as a single event which in turn would lead to a single transaction. GeoEvent Server seems to create the events very quickly but I can count the seconds that pass in between each new insert. I can always call the Add Feature REST endpoint directly or run a stored procedure, but I would prefer to use GeoEvent Server if it will support the volume coming in. Again, I have just begun working with the software and I imagine there might be a way to run a bulk insert. The add feature output connector does have an option for setting a feature count transaction threshold, so there must be a way. I may look at developing a custom transformation connector (this would be incredibly helpful wink wink) but I would prefer sticking with the out of the box tools for the time being.

I am not really sure how to resolve this issue. There might be a solution that relates to the structure of the GeoEvent Definition or the structure of the JSON array. Again, I would like to insert multiple rows in a single transaction using the add feature output connector.

Thanks in advance to any helpful responses.

Hi James,

To help clarify, are you looking to treat all objects in your JSON array as a single record (analogous to a single row in a database table), or are you looking to simply 'queue' individual records (objects in the array) in such a way that any adds/edits to your feature service are done in 'bulk'? You had asked:

> I would like to know if there is a way to treat all of the array elements as a single event which in turn would lead to a single transaction.

This would imply that you want to merge all array elements (or objects) so that they are a single event record (i.e. row in a database table).

Likewise you had also asked:

> Again, I would like to insert multiple rows in a single transaction using the add feature output connector.

This would imply that you want to maintain each array element as its own respective event record. Rather than adding these one-by-one in real-time, you would like to add several in bulk. No?

I assume you are asking about how to achieve the latter scenario, but I would like to be completely sure since both questions entail entirely different responses. If I am to assume the latter, you might want to look into the Add a Feature output connector properties, "Update Interval (seconds)" and, as you've pointed out already, "Maximum Features Per Transaction".

The "Update Interval (seconds)" parameter is important here since it can be used to specify how frequently the output connector will essentially queue processed event records, make a request to the feature service of interest to add those processed event records as new feature records, and then finally flush its cache to make room for additional processed event records. By default, this property is set to 1 second but if you wanted to, you could adjust this to be something of your choosing like 2 minutes (120 seconds). Using 120 seconds as an example, GeoEvent Server will queue all processed event records (from your input connector) for 2 minutes. Once the 2 minute window has lapsed, GeoEvent Server will make a request to the feature service to add its queued event records as new feature records. GeoEvent Server will then flush its cache to make room for the next set of processed event records only to repeat the same process 120 seconds later. The important thing to keep in mind with this property is that it works using an iterating time interval, not record count (!= 'add every time 200 event records have been queued'). If you know that your data is coming into GeoEvent Server at a consistent rate (e.g. 20 event records per second), you could hypothetically adjust this property to match what you're looking for in respect to a certain 'transaction size'.
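As a rough illustration of the arithmetic behind the update interval (plain Python, not GeoEvent Server code; the function names are hypothetical and a perfectly steady inbound rate is assumed), the relationship between data rate, interval, and records queued per flush could be sketched like this:

```python
def records_per_flush(events_per_second: float, update_interval_s: float) -> float:
    """Approximate number of event records queued during one update interval."""
    return events_per_second * update_interval_s


def interval_for_target(target_records: int, events_per_second: float) -> float:
    """Update interval (seconds) that would queue roughly `target_records`
    records per flush, assuming a steady inbound rate."""
    if events_per_second <= 0:
        raise ValueError("events_per_second must be positive")
    return target_records / events_per_second


# 20 event records per second with a 120 second update interval
queued = records_per_flush(20, 120)      # 2400 records per flush

# To queue roughly 200 records per flush at 20 records per second
interval = interval_for_target(200, 20)  # 10 seconds
```

If the inbound rate fluctuates, the number of records per flush will fluctuate with it, since the interval is purely time based.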

Of near equal importance here is the "Maximum Features Per Transaction" property. This property controls how many records to include in any single feature service request to add new feature records. By default this is set to 500 which means that each add request will contain a maximum of 500 records. If we go back to the above example involving a 120 second update interval, let's say you've queued 1800 records over that period of time. This property will ensure that 4 transactions are made to the feature service (500 + 500 + 500 + 300 = 1800) to add new feature records. It's important to note that this property doesn't limit the number of requests made (!= 'only make 2 requests at the maximum transaction size regardless of how much data there is still'). You might be thinking that this property isn't very useful for your scenario, and that might be true, but I thought it was important to share in case you need the requests being made to your feature service to generally contain a certain record count (and nothing more). Realistically, this property was intended to help reduce the burden on an external server/service by providing transaction management.
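The 1800-record example above can be sketched as simple chunking (plain Python for illustration only; `split_into_transactions` is a hypothetical helper, not how the output connector is actually implemented):

```python
from typing import List, Sequence


def split_into_transactions(queued: Sequence, max_per_transaction: int = 500) -> List[Sequence]:
    """Split the queued records into consecutive chunks, each holding at most
    `max_per_transaction` records. Every queued record ends up in some chunk."""
    if max_per_transaction <= 0:
        raise ValueError("max_per_transaction must be positive")
    return [
        queued[i:i + max_per_transaction]
        for i in range(0, len(queued), max_per_transaction)
    ]


# 1800 records queued during one update interval, default limit of 500
queued_records = list(range(1800))
transactions = split_into_transactions(queued_records)
sizes = [len(t) for t in transactions]  # [500, 500, 500, 300]
```

The property caps the size of each request, not the number of requests: the more records are queued, the more transactions are sent.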

If you're looking to find out more information regarding the former scenario, please feel free to let me know.

Gregory,

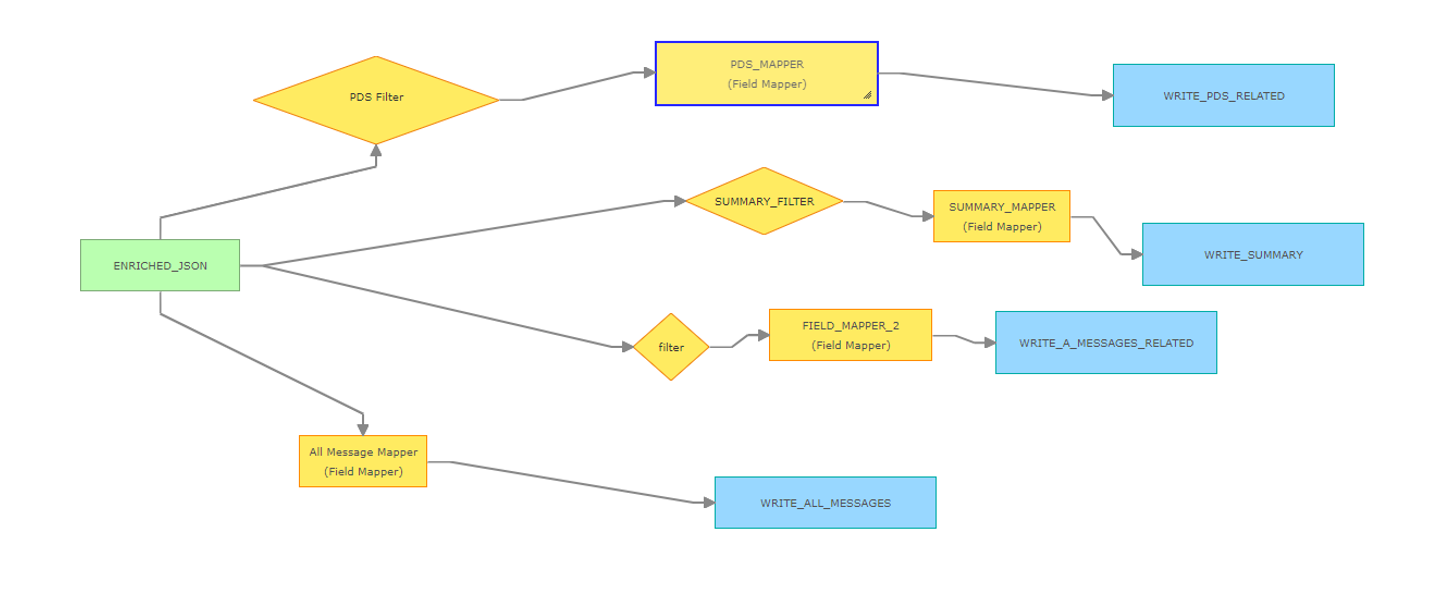

Thank you for your response. I am trying to insert multiple records in a single transaction. I have tried altering the update interval and max transactions but I think the lag might be due to a number of filters that each event has to be checked against. The service we are working with can deliver data to four separate tables based on the characteristic of each event. These would be related tables that only receive data if a certain object is present in each JSON element. I turned off the related table processors and the events piped in at a pretty good rate. I'm attaching an image that shows what our service looks like. The processor at the bottom is receiving the largest volume of data. Again, the output is handled at a solid rate when I turn off the three processors at the top. I could always place the input in a separate service that handles the related tables.

Do you know if filters can cause a service to slow down?

Hi James,

You're welcome. To answer your question about whether filters can cause a service to slow down, the short answer is yes* (* with some nuances to consider). Any time that you add filter or processor elements to a GeoEvent Service, you are effectively introducing additional processing overhead cost. Each element requires a varying amount of system resources in order to perform its designated work on the inbound real-time data. When we start to take into account the rate, schema, and size of the data (as well as other external factors such as network latency and system resources), this type of discussion can very quickly become more nuanced. I don't bring this information up to give the false impression that a single filter tanks the performance of a GeoEvent Service. That's not true. Rather, the takeaway here should be that all of these variables can have varying effects on the total throughput of a GeoEvent Service (and by extension, a GeoEvent Server site overall). While it's certainly best to consider the above points when building out a GeoEvent Service, I don't think it's directly related to the problem you described.

What strikes me as interesting is what you said about how the behavior improves when three of your four processors (I read this as routes) are disabled. This tells me that there might be an issue with the design of your GeoEvent Service as it relates to event record throughput. It's important to consider how GeoEvent Server handles event records when there's more than one element in the service to send data to. Take your GeoEvent Service for example. You have one input connector (named "ENRICHED_JSON") that is configured to send data down four separate paths to four different elements (PDS Filter, SUMMARY_FILTER, filter, and the All Message Mapper). If your data is coming in at a rate of 1000 events per second for example, GeoEvent Server is actually taking a copy of those 1000 records and is sending them to each of the 4 elements. At any given point in time, this actually works out to 4000 events being processed per second since the data that is coming in is replicated for each route. The filters might be discarding a lot of the data downstream, but that doesn't change the initial pressure at the start of the service. It makes sense to think that the bottom route is handling 'the most' data, but the truth of the matter is that each of the first four elements is handling the same amount of data (they're just doing different things with it). It's the 4th element that is passing the most data through.
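To make the fan-out concrete, here is a toy model in plain Python (not GeoEvent Server code). The route names come from the service described above, but the event fields (`pds`, `summary`, `id`) and the filter conditions are invented purely for illustration:

```python
from typing import Callable, Dict, List


def fan_out(events: List[dict], routes: Dict[str, Callable[[dict], bool]]) -> Dict[str, Dict[str, int]]:
    """Send a copy of every inbound event to every route and count how many
    events each route had to evaluate versus how many it let through."""
    stats = {}
    for name, predicate in routes.items():
        passed = sum(1 for event in events if predicate(event))
        stats[name] = {"evaluated": len(events), "passed": passed}
    return stats


# One second of inbound data: 1000 events with made-up attributes
events = [{"id": i, "pds": i % 10 == 0, "summary": i % 4 == 0} for i in range(1000)]

# Hypothetical filter conditions; the real ones are not known
routes = {
    "PDS Filter": lambda e: e["pds"],
    "SUMMARY_FILTER": lambda e: e["summary"],
    "filter": lambda e: e["id"] % 50 == 0,
    "All Message Mapper": lambda e: True,  # passes everything through
}

stats = fan_out(events, routes)
total_evaluated = sum(s["evaluated"] for s in stats.values())  # 4000
```

Every route evaluates all 1000 events even if it passes only a handful of them, which is why the load at the start of the service scales with the number of first-hop elements rather than with how much data survives the filters.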

If you're interested in learning more about GeoEvent Server best practices, ideal service design, performance, etc, I would recommend that you check out some of our best practice conference session recordings (example).

If you'd like to send me a direct message, perhaps we can set up some time to discuss this in more detail. I still have some questions about the behavior you're seeing happen, the rate of your data, what the end-goal is as far as writes to the feature service are concerned, etc. I don't want to mislead you with any particular advice if the issue is in fact related to something else entirely that wasn't covered here. Let me know.

Gregory,

Apologies for my delayed response. I did some additional testing and I believe the filters are causing the lag I have been seeing. I load tested over 500,000 features into the primary table and GeoEvent was able to handle the data efficiently. I turned the other connectors on and was able to count the inserts as they processed. I am following your guidance and designing the JSON schema so that we receive the data on separate input services. Thank you very much.