- Home

- :

- All Communities

- :

- Products

- :

- ArcGIS Data Reviewer

- :

- ArcGIS Data Reviewer Blog

- :

- Reporting data quality using Attribute Rules and P...

Reporting data quality using Attribute Rules and Python

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

This post comes courtesy of Bailey Ohlson, from Austin, Texas. Bailey is a student at the University of Texas at Austin where she is finishing her Master of Arts (M.A.) in Geography.

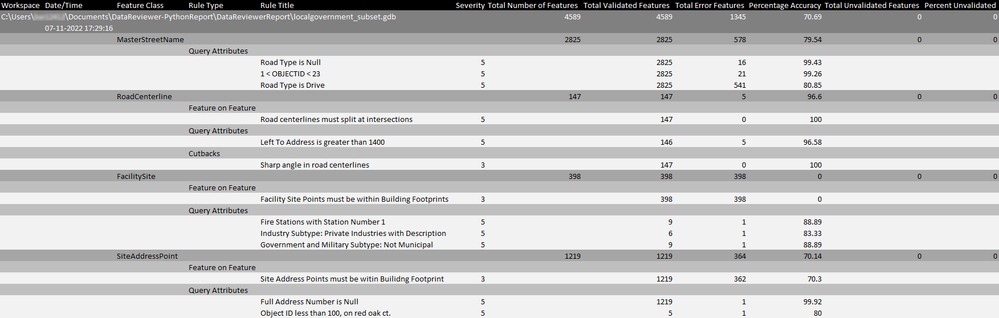

Data Reviewer for ArcMap provides the functionality to create different reports based on the records generated by running reviewer checks on a dataset. These reports provide a quick way to assess data quality in an easy-to-read table report format in Excel to inform stakeholders and other non-GIS professionals. The Automated Check Report By Origin Table report included information such as:

- Date and time the tool was run

- Feature Class name

- Check Type

- Check Title

- Total Records

- Total Results

- Percent Accuracy

This blog explains how a similar Excel report is generated in the Q2 2022 release of ArcGIS Pro by extracting data from Attribute rule error layers and feature classes that contain validation attribute rules using ArcPy and OpenPyXL.

The Evaluate Rules geoprocessing tool (or the Evaluate command in the Error Inspector) in ArcGIS Pro evaluates features based on conditions identified in validation attribute rules and stores the results of that evaluation in a series error tables in your database. However, more information is required to create a Data Quality Report than can be found only in the error tables. Data must also be extracted from the features themselves and linked to the data from the error tables, ArcPy can be leveraged to achieve this task.

First, all the feature classes in a dataset and their corresponding attribute rules must be identified. For each attribute rule, their parent feature class can be used to extract information for each attribute rule like the rule ID, rule name, rule type, rule severity, the subtype filter, and the input filters, using ‘arcpy.da.Describe’.

def attributeRule_info(feature_class):

rule_info = []

attrule = arcpy.da.Describe(feature_class)['attributeRules']

for rule in range(len(attrule)):

error_number = attrule[rule]['errorNumber']

rule_type = attrule[rule]['type']

if(error_number == 998 or rule_type != 'esriARTValidation'):

pass

else:

rulelist = []

rlid = attrule[rule]['id']

error_number = attrule[rule]['errorNumber']

type = attrule[rule]['checkParameters'].get('reviewerRuleType')

name = attrule[rule].get('name')

severity = attrule[rule].get('severity')

subtype_code = attrule[rule]['subtypeCode']

input_filter = attrule[rule]['checkParameters'].get('inputFilters')

# Add to list

rulelist.append(rlid)

rulelist.append(error_number)

rulelist.append(type)

rulelist.append(name)

rulelist.append(severity)

However, the feature class itself does not hold information about the number of features that are in error for a given feature class. This information is stored in the system-generated error layers (GDB_ValidationLineErrors, GDB_ValidationPointErrors, GDB_ValidationPolygonErrors, and GDB_ValidationObjectErrors) that are created after authoring attribute validation rules on your data . A search cursor, ‘arcpy.da.SearchCursor’, is used to iterate through each error layer and count the number of features that are in error for a given attribute rule.

def attributeRule_errorCount(ruledata):

# Generate set of Rule IDs

id_list = {rd[0] for rd in ruledata}

for id in id_list:

a = 0

for el in errorLayers:

# Build search cursor for all row RuleIDs and ErrorStatuses

with arcpy.da.SearchCursor(el,['RuleID','ErrorStatus']) as cursor:

for row in cursor:

if(row[0] == id and row[1] == 1):

a = a + 1

else:

pass

for rd in ruledata:

if rd[0] == id:

rd.append(a)

Note: Validation attribute rules based on the Composite check are not currently supported by the logic in this script and are excluded from the report.

Inserting and formatting data in Excel

Once the data for each feature class is associated with its corresponding error layer information, then the OpenPyXl python library, available with ArcGIS Pro, is utilized to insert and format the data in Excel. First, a new Excel Workbook is created, and the first worksheet is activated before adding data.

wb = Workbook()

wksht = wb.active

wksht.title = "Reviewer Attribute Rules Report"

# Create column headers

labels = ["Workspace","Date/Time","Feature Class", "Rule Type", "Rule Title", "Severity",

"Total Number of Features","Total Validated Features", "Total Error Features",

"Percentage Accuracy", "Total Unvalidated Features", "Percent Unvalidated"]

wksht.append(labels)

print("Finished opening Workbook")

Then, the data extracted from the feature classes and the error layers is inserted into the workbook and the cell color, height, width, and more are adjusted.

def workbookFormat_header():

'''Formats the cells in the Excel Workbook for user readability.'''

black_fill = PatternFill(patternType='solid', fgColor = '000000')

darkGrey_fill = PatternFill(patternType='solid', fgColor = '808080')

white_text = Font(name='Calibri', size=11 , color='FFFFFF')

for i in range(1,13):

# Row 1 - Column Headders

wksht.cell(row = 1, column = i).fill = black_fill

wksht.cell(row = 1, column = i).font = white_text

# Row 2 & 3 - GDB Averages and date-time

wksht.cell(row = 2, column = i).fill = darkGrey_fill

wksht.cell(row = 2, column = i).font = white_text

wksht.cell(row = 3, column = i).fill = darkGrey_fill

wksht.cell(row = 3, column = i).font = white_text

#Adjust Column Width

wksht.column_dimensions['A'].width = 11

wksht.column_dimensions['B'].width = 18

wksht.column_dimensions['C'].width = 18

wksht.column_dimensions['D'].width = 18

wksht.column_dimensions['E'].width = 49

wksht.column_dimensions['F'].width = 8

wksht.column_dimensions['G'].width = 24

wksht.column_dimensions['H'].width = 23

wksht.column_dimensions['I'].width = 18.5

wksht.column_dimensions['J'].width = 19

wksht.column_dimensions['K'].width = 25

wksht.column_dimensions['L'].width = 19def attributeRule_organize(ruledata, n, type_color, data_color):

type_list = {rd[2] for rd in ruledata}

for tl in type_list:

typename = RevRules.get(tl)

wksht.append(['','','',typename,'','','','','','','',''])

#Format color of row

for i in range(1,13):

wksht.cell(row = n, column = i).fill = type_color

n += 1

for r in ruledata:

if r[2] == tl:

wksht.append(['','','','',r[3],r[4],'',r[5],r[6],r[7],'',''])

for i in range(1,13):

wksht.cell(row = n, column = i).fill = data_color

n += 1

else:

pass

return n

Once all the relevant data is found and inserted into the Excel Workbook, a table is created with the data and the formatting specified in your script.

To learn more about the python library OpenPyXL please visit the following link: openpyxl - A Python library to read/write Excel 2010 xlsx/xlsm files — openpyxl 3.0.10 documentat...

About the author

Bailey Ohlson

I am GIS professional from Austin, Texas with a B.S. in Geology from Texas A&M University (Gig ‘em Aggies!) and an M.A. in Geography from the University of Texas at Austin. I love finding ways to use GIS at the intersection of geology, hydrology, and geography, and have enjoyed improving my skills in python over the last few years. I am also an avid outdoorsman and can often be found taking a walk outside or planning my next backpacking trip.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.