Continuous Integration / Continuous Delivery with ArcGIS Data Interoperability

Continuous Integration / Continuous Delivery (CI/CD) is a software development methodology. I'm borrowing the term and applying it to data rather than software, specifically data that changes endlessly over time and that you need to integrate into your systems of record... well, continuously!

Always one to jump in at the deep end, I chose an example that takes a feed of public transport vehicle positions (accessed via a REST API) that refreshes every 30 seconds and pushes the data through to a hosted feature service in an ArcGIS Enterprise portal and also into a spatially enabled table in Snowflake. I'll use a web tool for the processing, as high availability is obviously advisable.

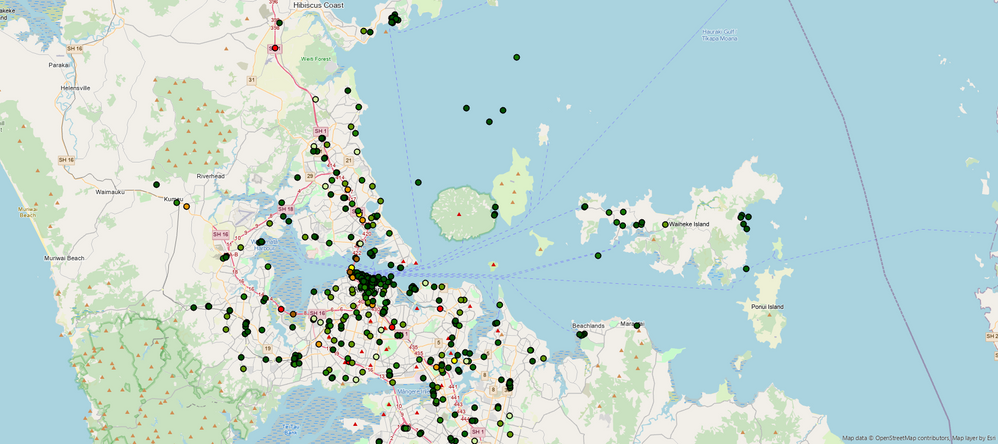

Here are bus, train and ferry positions a few moments ago (early Saturday local time) in Auckland, NZ.

I'm probably working at the extreme end of continuous bulk data integration frequency. I expect the vast majority of integrations run at intervals of hours or days, but at least you'll know what can be achieved.

Bear with me while I have some fun with my integration scenario 😉.

Let's say I work at Fako (a fictional name), which has solved the first-mile/last-mile problem of e-commerce. Fako has identified that commuter networks are very efficient at bringing people and retail goods together, for buying or selling, when the riders are the buyers and sellers. In partnership with transport operators, we remove a few rows of seats in each vehicle, on both sides of the aisle, and replace them with grids of smart storage lockers. Internet shopping can be 'delivered' to these lockers by our stevedores at our warehouses, which are co-located with transport terminals. Buyers order from any website for delivery on a chosen day and route, sellers sell on our website, and we transfer items to routes anywhere on the network. We're freight forwarders. Fako's mobile app lets customers use their phone to unlock the locker their item is in at any time during their trip. Some lockers are refrigerated; we have our own meal kit line. A very popular feature of our mobile app lets riders bid in auctions for abandoned items. Fako pays transport operators the equivalent of a rider fare per item, greatly boosting their effective ridership. Fako doesn't have to buy a fleet of delivery vehicles, and the transport operators get increased revenue. Business is booming!

Fako's back end systems run on Snowflake. To make everything work, Fako needs to maintain the network status continuously as spatially enabled Snowflake objects. Let's see how!

First, the boring way. I happen to have disqualified myself from it by choosing a frequency that Windows scheduled tasks don't support, but it would be to copy a Spatial ETL workspace source .fmw file onto my Data Interoperability server and configure a scheduled task based on the command line documented in the log file of a manual run as the arcgis user:

```
Command-line to run this workspace:

"C:\Program Files\ESRI\Data Interoperability\Data Interoperability AO11\fme.exe" C:\Users\arcgis\Desktop\ContinuousIntegration\VehiclePositions2Snowflake.fmw
```

You should carefully consider this option for your situation; it is robust and simple.
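If you'd rather have the scheduled task call a small script (for example, to capture the exit code or add your own logging), here is a minimal Python sketch that runs the same logged command line with `subprocess`. The two paths are the ones from my log; adjust them for your server. The function names `build_command` and `run_workspace` are hypothetical, and treating exit code 0 as success is an assumption.

```python
import subprocess

# Paths copied from the log of a manual run; adjust them for your own server.
FME_EXE = r"C:\Program Files\ESRI\Data Interoperability\Data Interoperability AO11\fme.exe"
WORKSPACE = r"C:\Users\arcgis\Desktop\ContinuousIntegration\VehiclePositions2Snowflake.fmw"


def build_command(fme_exe: str = FME_EXE, workspace: str = WORKSPACE) -> list[str]:
    """Return the argument list equivalent to the logged command line."""
    # Passing a list rather than one string means subprocess does the quoting,
    # so the spaces in "Program Files" are handled for you.
    return [fme_exe, workspace]


def run_workspace(fme_exe: str = FME_EXE, workspace: str = WORKSPACE) -> int:
    """Run the workspace once and return fme.exe's exit code (assumed 0 on success)."""
    completed = subprocess.run(build_command(fme_exe, workspace), check=False)
    return completed.returncode


if __name__ == "__main__":
    # A scheduled task can run this script and inspect its exit code.
    raise SystemExit(run_workspace())
```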

Now for the non-boring way. Like I said, I pushed myself in this direction by working with data that updates in bulk at high frequency. I made a web tool that performs the integration and then calls itself after waiting for the source data to update.

Whoa, a web tool that calls itself, with no webhook and no scheduling? It's crazy simple (possibly also just crazy).

I made two Spatial ETL tools: a real one, and a dummy one that does nothing but has the same name and parameters (none in this case).

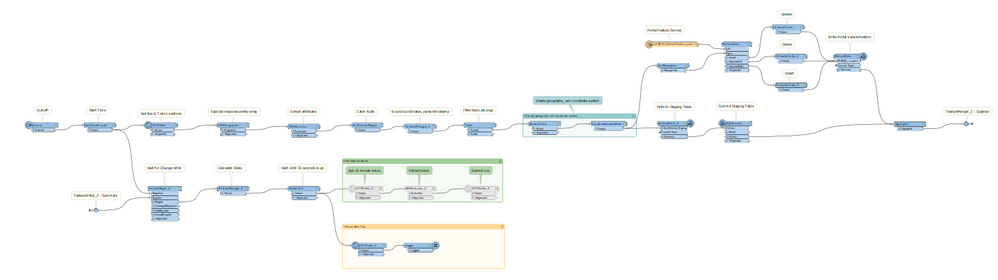

I shared a history item of the dummy version of VehiclePositions2Snowflake as a web tool and recorded the submitJob URL. It is important that the web tool be asynchronous, so that when it is called it doesn't block the calling workspace while it waits for a response.

Then edit the final HTTP step in the real ETL tool so that it calls the submitJob URL. Run the tool, and from its history item overwrite the dummy web tool, so the published web tool now does the real work and ends by calling itself.
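To make the self-call concrete, here is a minimal Python sketch of the kind of request that final HTTP step sends: a POST to the web tool's submitJob endpoint with `f=json`, which returns a job ID immediately rather than waiting for the job to finish. The URL below is a hypothetical placeholder for the submitJob URL you recorded, the `token` parameter is only needed if your web tool is secured, and the function names are hypothetical. The tool takes no parameters, so only `f` (and optionally `token`) is sent.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

# Hypothetical placeholder; use the submitJob URL recorded when sharing the web tool.
SUBMIT_JOB_URL = (
    "https://example.com/server/rest/services/"
    "VehiclePositions2Snowflake/GPServer/VehiclePositions2Snowflake/submitJob"
)


def build_submit_job_request(url: str, token: Optional[str] = None) -> urllib.request.Request:
    """Build a form-encoded POST that submits an asynchronous job with no tool parameters."""
    params = {"f": "json"}  # ask the REST endpoint for a JSON response
    if token:
        params["token"] = token  # assumption: only needed when the web tool is secured
    body = urllib.parse.urlencode(params).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def submit_job(url: str = SUBMIT_JOB_URL, token: Optional[str] = None, timeout: float = 30.0) -> str:
    """Submit the job and return its jobId without waiting for the job to complete."""
    request = build_submit_job_request(url, token)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    if "jobId" not in payload:
        raise RuntimeError(f"submitJob did not return a jobId: {payload}")
    return payload["jobId"]
```

Because the endpoint is asynchronous, the caller gets its response as soon as the job is queued, which is what lets each run end promptly after submitting the next one.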

I'll let you explore the tool yourself, but basically the upper stream fetches the vehicle data and synchronizes it to the portal and Snowflake, while the lower stream waits both for this to finish and for 30 seconds to elapse, then makes the HTTP call.
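The timing in the lower stream can be sketched in Python as follows, assuming the 30 seconds are counted from the start of the run (my reading of "waits for this and for 30 seconds to elapse"). If the sync takes longer than 30 seconds, the next call goes out immediately; otherwise the remaining time is slept off. The names `remaining_wait`, `wait_then_call` and `call_next` are hypothetical, and `call_next` stands in for whatever makes the HTTP call to the submitJob URL.

```python
import time
from typing import Callable

REFRESH_SECONDS = 30.0  # the vehicle position feed refreshes every 30 seconds


def remaining_wait(started_at: float, now: float, interval: float = REFRESH_SECONDS) -> float:
    """Seconds still to wait so that at least `interval` seconds pass after the run started."""
    return max(0.0, interval - (now - started_at))


def wait_then_call(
    started_at: float,
    call_next: Callable[[], object],
    interval: float = REFRESH_SECONDS,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> object:
    """Call this once the sync has finished: sleep off any remaining time, then call_next()."""
    sleep(remaining_wait(started_at, clock(), interval))
    return call_next()


# Usage (hypothetical): started = time.monotonic(); run the sync; then
# wait_then_call(started, call_next) to trigger the next run.
```

Injecting `clock` and `sleep` keeps the timing logic testable without actually waiting.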

Then just run the web tool once manually and you're off to the races; it will repeat endlessly. I'm sitting here refreshing my Pro map (no cache on the feature service layer) and seeing the transport fleet move around.

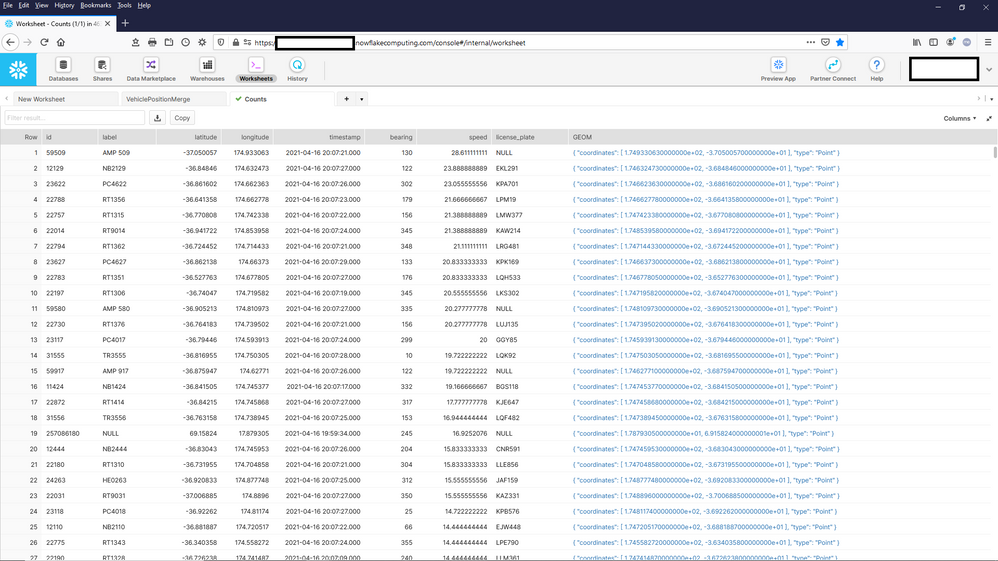

In Snowflake, my data is also refreshing.

Back to some boring details. When publishing Spatial ETL tools as web tools, don't forget that Data Interoperability must be installed and licensed on each server hosting the tool. When using web connection or database credentials, as I am here, go to the Tools > FME Options dialog, export the required credentials (right-click for the menu) to XML files, put these on your server, and import them into the Workbench environment as the arcgis service owner. If you run a workspace manually on the server, you might also have to change the Python environment. Lastly, while the blog download has an FMW file, the tool you publish to your server should have an embedded source.

Now that was fun!
