
How FME Flow (or Form) can write to ArcGIS Enterprise with no local ArcGIS install


A recent blog of mine discussed how ArcGIS Data Interoperability relates to FME, and also clarified the licensing situation when FME Flow is used to access ArcGIS executables, particularly for writing to an Enterprise geodatabase. In that situation, apart from the file geodatabase exception noted in that blog, the ArcGIS software used must be ArcGIS Server or ArcGIS Enterprise. This isn't always convenient for an FME Flow site, so in this blog I'll give you an alternative: writing through a web tool, with no local ArcGIS install.

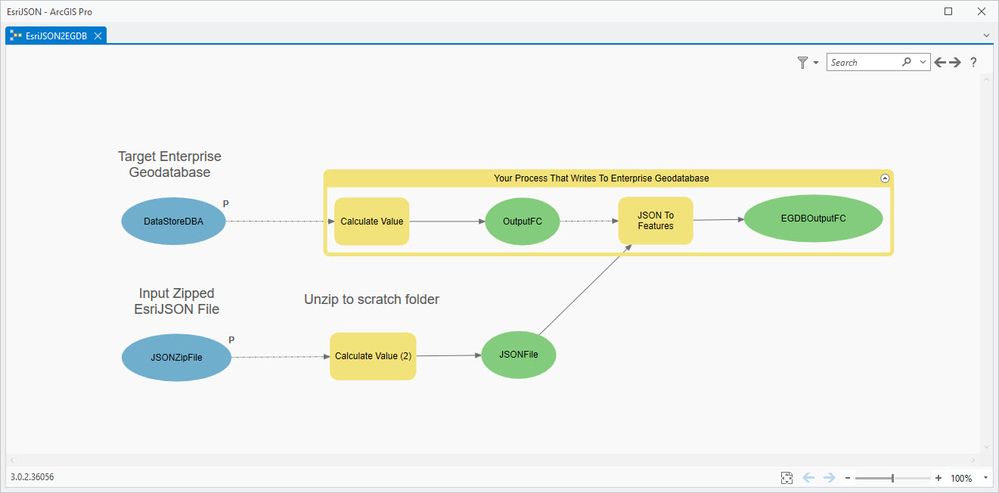

Here is my example, a model tool:

The whole point here is to give FME Flow (formerly FME Server) users a way to write Enterprise geodatabase objects using a web service rather than an executable. If your interest is in reading Enterprise geodatabases with FME Flow, you can already do this with the ArcGIS Server Feature Service reader, provided you publish a feature service from an Enterprise geodatabase object. Let's get started.

The important bits in the above model are:

- The input workspace parameter is the target Enterprise geodatabase

- The input data parameter is a file in a format that FME can write and ArcGIS can read

At the time of writing, the teams at Safe Software and Esri are working to enhance the FME EsriJSON reader/writer so that it fully supports a schema object, but that work isn't finished yet. Regardless, I'm going to use EsriJSON as my data protocol. For now, I made my test data with the Features to JSON geoprocessing tool rather than with FME.

There are other options you might choose, like GeoPackage, and more are coming, but I want to show you how to use EsriJSON for now, not least because you can trust the geometry and schema to arrive intact in the destination geodatabase.
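To make "schema" concrete, here is a minimal sketch of an EsriJSON feature set of the kind you would send. It is illustrative only: the helper `make_esrijson_points`, its field names and the sample row are hypothetical, and the Python stands in for whatever FME writer you end up using. The `fields` array is what carries the schema (names, types, lengths), which plain GeoJSON has no place for.

```python
import json


def make_esrijson_points(rows, wkid=4326):
    """Build a minimal EsriJSON feature set from (name, x, y) rows.

    Hypothetical helper: field names and types are illustrative only.
    """
    return {
        "geometryType": "esriGeometryPoint",
        "spatialReference": {"wkid": wkid},
        # The schema travels with the data in the "fields" array.
        "fields": [
            {"name": "OBJECTID", "type": "esriFieldTypeOID", "alias": "OBJECTID"},
            {"name": "NAME", "type": "esriFieldTypeString", "alias": "Name", "length": 50},
        ],
        "features": [
            {"attributes": {"OBJECTID": i, "NAME": name}, "geometry": {"x": x, "y": y}}
            for i, (name, x, y) in enumerate(rows, start=1)
        ],
    }


if __name__ == "__main__":
    # A single hypothetical sample point.
    print(json.dumps(make_esrijson_points([("Nelson", 173.28, -41.27)]), indent=2))
```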

Is there anything special about the Enterprise geodatabase workspace parameter? When you add it to your model it is just a database connection file with an .sde extension, but put a little thought into it. I made a new connection file with the Manage Registered Data Stores dialog and registered my Enterprise geodatabase as a data store. When a web tool is published, its datasets are either copied to the server or referenced from registered data stores. If your web tool needs to use any data that isn't static, do what I did. Making the Enterprise workspace a parameter protects it from being copied, but any other datasets need this behavior managed.

Why is the EsriJSON file zipped? It helps upload speed, but it is also necessary because of how we use the web tool, i.e. through its REST endpoint, called from FME Flow. When a web tool has a file input parameter, the file must be supplied as a URL or as a server or portal item, not as a POST upload. A JSON item uploaded unzipped is recognized (incorrectly, in our case) as GeoJSON, found to be invalid, and rejected. Zipping the file avoids this. Even then, EsriJSON isn't a supported portal item type, so we have to tell the system it's a Code Sample item. This is a bit ironic, given I'm the no-code guy in the office, but I'll take the leave pass.
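The packaging step itself is small. Here is a minimal Python sketch, assuming the archive holds a single EsriJSON file; `zip_esrijson` and the default member name are hypothetical, and in practice FME would produce this file for you.

```python
import io
import json
import zipfile


def zip_esrijson(feature_set, member_name="data.json"):
    """Return the bytes of a .zip archive containing one EsriJSON file.

    Uploading the zip rather than bare JSON keeps the portal from
    treating the file as (invalid) GeoJSON.
    """
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.writestr(member_name, json.dumps(feature_set))
    return buffer.getvalue()
```

The returned bytes are what you would upload as the Code Sample item's file.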

My web tool doesn't do any fancy geoprocessing; it only serves to demonstrate the EGDB writing approach, but we'll step through it for completeness.

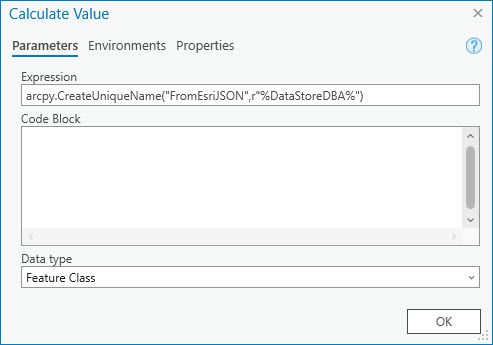

The first Calculate Value model tool, downstream of the workspace variable, generates a unique output feature class name in the target geodatabase. The only notable thing is that I use a raw string for the workspace variable (r"%DataStoreDBA%"), because on the server it is a complex path containing sequences like "\a" that Python would otherwise interpret as escape characters. For example:

C:\arcgisserver\directories\arcgissystem\arcgisinput\EsriJSON2EGDB.GPServer\extracted\p30\DataStoreDBA1.sde\FromEsriJSON0
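Inline variable substitution puts the workspace path into the Calculate Value expression as text, so Python sees it as a string literal. The sketch below shows the difference the raw prefix makes, plus one hypothetical way to pick the next free name in the FromEsriJSON0, FromEsriJSON1, ... pattern. `unique_fc_name` and its `existing` set are assumptions so the example runs without arcpy; inside a real model, `arcpy.CreateUniqueName` is the usual way to get a unique name.

```python
import ntpath

# What the expression looks like after the path is pasted in as text.
cooked = "C:\arcgisinput"  # "\a" is silently turned into the BEL character, chr(7)
raw = r"C:\arcgisinput"    # the r prefix keeps the backslash as written


def unique_fc_name(workspace, existing, base="FromEsriJSON"):
    """Join the lowest unused baseN name onto a Windows workspace path.

    `existing` is a hypothetical stand-in for listing the workspace contents.
    """
    n = 0
    while f"{base}{n}" in existing:
        n += 1
    # ntpath joins with backslashes regardless of the OS running this sketch.
    return ntpath.join(workspace, f"{base}{n}")


if __name__ == "__main__":
    print(repr(cooked))  # 'C:\x07rcgisinput'
    print(unique_fc_name(raw, {"FromEsriJSON0"}))  # C:\arcgisinput\FromEsriJSON1
```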

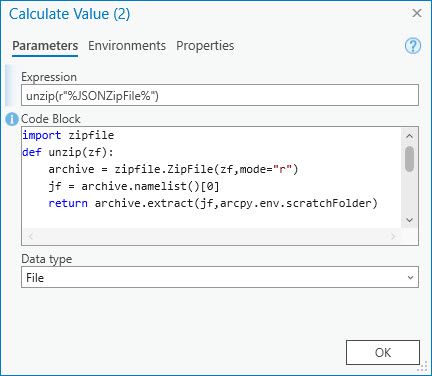

The second Calculate Value model tool downstream of the data file input unzips the data to the scratch folder.

On the server, an example path in the job folder looks like:

C:\arcgisserver\directories\arcgisjobs\esrijson2egdb_gpserver\jf37903613c4f4e5685efc5e0861fdbbd\scratch\FeaturesToJSON_OutJsonFile_Nelson.json
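Here is a minimal sketch of what that unzip step could look like in Python. It assumes the archive holds exactly one .json file; `unzip_to_scratch` is a hypothetical name, and in a model the scratch folder would typically come from the %scratchFolder% inline variable (or `arcpy.env.scratchFolder`) rather than being passed in by hand.

```python
import os
import zipfile


def unzip_to_scratch(zip_path, scratch_folder):
    """Extract the single .json member of zip_path and return its full path."""
    with zipfile.ZipFile(zip_path) as zf:
        members = [m for m in zf.namelist() if m.lower().endswith(".json")]
        if len(members) != 1:
            raise ValueError(f"expected one .json file in {zip_path}, found {len(members)}")
        # extract() creates missing folders and returns the path it wrote.
        return zf.extract(members[0], path=scratch_folder)
```

The returned path is what gets handed on to JSON to Features.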

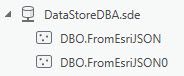

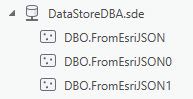

Otherwise the only processing is the write we want: the JSON to Features geoprocessing tool sends data where we want it (your processing may be much more complex). After a couple of executions I have data in my EGDB:

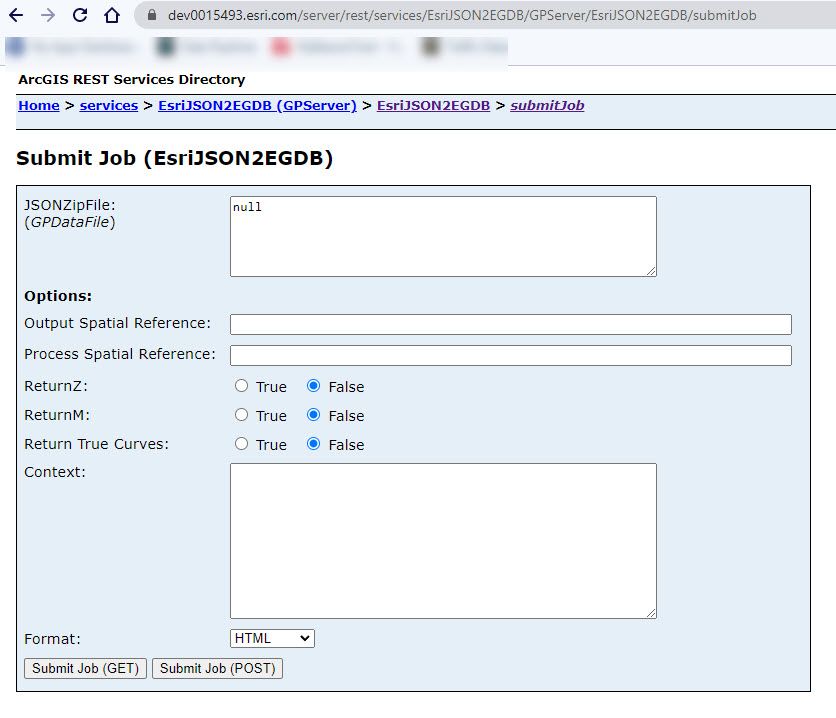

After publishing a tool result as a web tool I have a portal tool item with a REST submitJob endpoint:

Now we have something FME can use via HTTP to write data to the Enterprise geodatabase. Before we dig into that, there are a couple of things to consider. In this workflow you are responsible for managing timeouts and contention at three points: uploading data to the portal (where the REST endpoint gets its input data), processing, and writing to the target database. I went into my Server Manager console and limited my web tool to a single instance with a 15 minute timeout. That way I can handle large files, always one at a time.

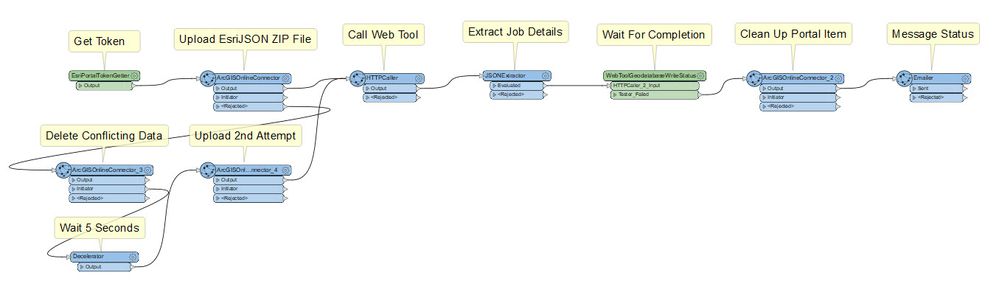

Drum roll please - here is the FME workspace (Main canvas) that calls the web tool:

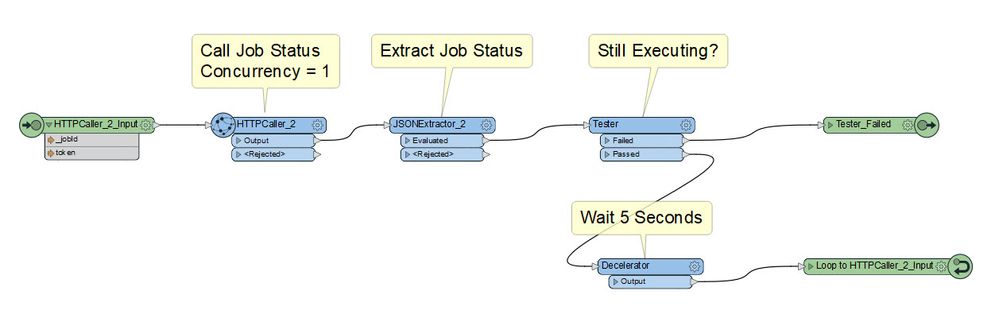

...and the looping custom transformer in the middle looks like this:

Everything is in the blog download (authored in Pro 3.0), but let's step through the FME workspace. In the real world, FME Flow will be cooking up data to be written to the EGDB. I don't do that in my sample, but you will have to make file-based data to send. My input parameters are a user-defined zipped JSON file and the web tool home URL (not published, as it is static):

https://dev0015493.esri.com/server/rest/services/EsriJSON2EGDB/GPServer/EsriJSON2EGDB
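The other endpoints hang off that home URL. The following sketch builds the submitJob request and the job status URL, hedged in two ways: the input parameter name `Input_Data` is hypothetical (use the name shown on your tool's REST page), and the file is referenced with the `{"url": ...}` form of a file parameter; whether your server can fetch that URL as-is, or needs it tokenized, depends on your setup.

```python
import json

# The web tool home URL from this post.
TOOL_URL = "https://dev0015493.esri.com/server/rest/services/EsriJSON2EGDB/GPServer/EsriJSON2EGDB"


def submit_job_request(tool_url, data_url, token, data_param="Input_Data"):
    """Return (url, form_fields) for a submitJob POST.

    data_param is a hypothetical parameter name; check your tool's REST page.
    """
    form = {
        # File parameters take a JSON object; this uses the {"url": ...} form.
        data_param: json.dumps({"url": data_url}),
        "f": "json",
        "token": token,
    }
    return f"{tool_url}/submitJob", form


def job_status_url(tool_url, job_id):
    """Status endpoint for one job; request it with f=json."""
    return f"{tool_url}/jobs/{job_id}"
```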

The first step is to get a portal token; that's the first (green) transformer. Then the input zip file is uploaded as a portal Code Sample item. A Code Sample item contains a file whose name must be unique within its folder, regardless of whether the item title itself is unique. In case of a name collision, I first delete any existing items with the same file name (found by rejection), then upload the input data.
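The portal calls behind those transformers correspond to standard ArcGIS REST sharing operations (generateToken, addItem and item delete). The sketch below only builds the URLs and form fields, so it runs offline; the portal URL, user name and helper names are hypothetical, and the zip itself would travel as a multipart `file` part alongside the `addItem` fields.

```python
# Hypothetical portal URL and account; substitute your own.
PORTAL = "https://portal.example.com/portal"
USER = "fme_writer"


def token_request(portal, username, password, referer, minutes=60):
    """generateToken request as (url, form_fields)."""
    return f"{portal}/sharing/rest/generateToken", {
        "username": username,
        "password": password,
        "client": "referer",
        "referer": referer,
        "expiration": str(minutes),
        "f": "json",
    }


def add_item_request(portal, username, token, title):
    """addItem request for the zip; send the file as a multipart 'file' part."""
    return f"{portal}/sharing/rest/content/users/{username}/addItem", {
        "type": "Code Sample",
        "title": title,
        "f": "json",
        "token": token,
    }


def delete_item_request(portal, username, item_id, token):
    """Delete one item owned by username."""
    return f"{portal}/sharing/rest/content/users/{username}/items/{item_id}/delete", {
        "f": "json",
        "token": token,
    }


def ids_with_file_name(items, file_name):
    """IDs of items whose stored file name ("name", not "title") collides with ours."""
    return [item["id"] for item in items if item.get("name") == file_name]
```

`ids_with_file_name` mirrors the collision check: any item whose file name matches is deleted before the upload.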

After that it's just HTTP: send each job, loop in a custom transformer until the job completes, delete the portal item we are finished with, and finally email a result message to any interested parties.
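The waiting loop is the part that most benefits from an explicit timeout. Here is a minimal sketch, assuming a caller-supplied `fetch_status` that requests `<tool URL>/jobs/<jobId>?f=json` and returns its `jobStatus` value. The 900 second default simply matches the 15 minute service timeout I set, and `wait_for_job` is a hypothetical name, not an FME transformer.

```python
import time

# Job states after which polling can stop.
TERMINAL_STATES = {"esriJobSucceeded", "esriJobFailed", "esriJobTimedOut", "esriJobCancelled"}


def wait_for_job(fetch_status, job_id, poll_seconds=5.0, timeout_seconds=900.0,
                 sleep=time.sleep, clock=time.monotonic):
    """Poll fetch_status(job_id) until a terminal state, or raise TimeoutError.

    fetch_status is hypothetical: it should request <tool URL>/jobs/<job_id>?f=json
    and return the "jobStatus" value from the response.
    """
    deadline = clock() + timeout_seconds
    while True:
        status = fetch_status(job_id)
        if status in TERMINAL_STATES:
            return status
        if clock() >= deadline:
            raise TimeoutError(f"job {job_id} still {status} after {timeout_seconds} s")
        sleep(poll_seconds)
```

Injecting `sleep` and `clock` keeps the loop testable; in FME the same logic lives in the looping custom transformer.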

Now I'm off to the races: any time my FME job runs, data is written to my EGDB, without installing any ArcGIS software on my FME machine!

Don't forget that EsriJSON support is a work in progress at Esri and Safe Software, but you can get going now with other file-based formats.
