
Building a Data Driven Organization, Part #4: Automate Refreshing Large Shared Services


If you share hosted feature services, optionally with child services such as Map Tile, WFS and OGC services, and your source data changes regularly, you will want to automate refreshing the service data without changing item identifiers or metadata, so that your customers' maps and apps keep working. This blog shows how to do that using ArcGIS Data Interoperability.

First, the obligatory map of our study area and data: street addresses in Norway.

Just to be clear, I'll restate the scenario:

- You are sharing a hosted feature service which may be large

- You may be sharing services published from the hosted feature service

- The source data might not be managed in ArcGIS

- The source data changes regularly and you want to apply the changes to the service(s)

- You don't want Portal or Online item identifiers to change when you refresh the data

- You want to automate this maintenance with minimal downtime

- You don't want to write any code

It is well known that Data Interoperability can detect changes between datasets and apply them to a publication copy of the data. This works well and allows zero downtime if you write changes incrementally to a feature service. However, with millions of features this can be time-consuming, both to read the original and revised datasets and to write the change transactions. Very long transactions also risk failing because of network issues.
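To show why incremental change detection is expensive at this scale, here is a minimal sketch of the general technique. It assumes every feature has a stable key and that both snapshots fit in memory as dictionaries. It is not how Data Interoperability's change detection is implemented. Both the original and the revised dataset must be read in full before a single change can be written.

```python
from typing import Dict, Hashable, List, Mapping, Tuple

Record = Mapping[str, object]


def detect_changes(
    original: Dict[Hashable, Record],
    revised: Dict[Hashable, Record],
) -> Tuple[List[Hashable], List[Hashable], List[Hashable]]:
    """Compare two keyed snapshots and return the keys to insert, update and delete."""
    # Keys only in the revised snapshot are new features.
    inserts = [k for k in revised if k not in original]
    # Keys only in the original snapshot have disappeared from the source.
    deletes = [k for k in original if k not in revised]
    # Keys in both snapshots whose attributes differ are updates.
    updates = [k for k in revised if k in original and revised[k] != original[k]]
    return inserts, updates, deletes
```

For example, with `old = {1: {"addr": "Storgata 1"}, 2: {"addr": "Storgata 2"}}` and `new = {2: {"addr": "Storgata 2B"}, 3: {"addr": "Storgata 3"}}`, the result is `([3], [2], [1])`. With millions of features, each of those keys then becomes an individual edit transaction against the service.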

We need an option that simply replaces the service data quickly and efficiently. You can do this by maintaining a file geodatabase copy of the source data in your Portal or ArcGIS Online and replacing the service data with a truncate and append workflow.

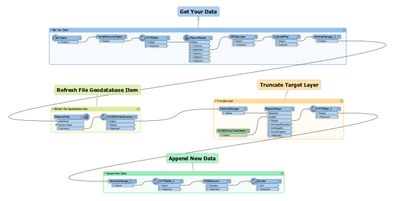

Here is the Data Interoperability tool that shows the pattern.

The blue bookmark is whatever ETL you need to get your data into its final shape. In my case I'm downloading some data, doing some de-duplication and making a few tweaks to fields.
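The post does not say how its de-duplication works. As one possible illustration, here is a minimal sketch that keeps the first address per normalized key. The key fields `street`, `number` and `postcode` are hypothetical and stand in for whatever uniquely identifies an address in your data.

```python
from typing import Dict, Iterable, List, Tuple


def dedupe_addresses(
    rows: Iterable[Dict[str, str]],
    key_fields: Tuple[str, ...] = ("street", "number", "postcode"),  # hypothetical field names
) -> List[Dict[str, str]]:
    """Keep the first row for each normalized key, preserving input order."""
    seen = set()
    kept = []
    for row in rows:
        # Trim whitespace and casefold so "Storgata " and "storgata" count as the same value.
        key = tuple(str(row.get(f, "")).strip().casefold() for f in key_fields)
        if key in seen:
            continue
        seen.add(key)
        kept.append(row)
    return kept
```

Keeping the first occurrence is an arbitrary choice. If your source has a last-modified field, you may prefer to keep the newest row instead.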

The pale green bookmark is where I write the data to a zipped file geodatabase and overwrite a file geodatabase item in Online. The tan bookmark is where I truncate my target feature layer.
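A file geodatabase is a folder ending in `.gdb`, so it has to be zipped before it can be uploaded as an item. The tool in the post does this with a Data Interoperability writer. For readers scripting the step outside the tool, here is a minimal standard-library sketch. It assumes the upload expects the `.gdb` folder at the root of the archive, and skipping `.lock` files left by open sessions is our own choice.

```python
import os
import zipfile


def zip_file_gdb(gdb_path: str, zip_path: str) -> str:
    """Zip a .gdb folder so the archive contains the folder itself at its root."""
    gdb_path = os.path.abspath(gdb_path)  # also normalizes away a trailing separator
    if not gdb_path.lower().endswith(".gdb") or not os.path.isdir(gdb_path):
        raise ValueError(f"not a file geodatabase folder: {gdb_path}")
    parent = os.path.dirname(gdb_path)
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(gdb_path):
            for name in files:
                # Lock files belong to whichever process had the geodatabase open.
                if name.endswith(".lock"):
                    continue
                full = os.path.join(root, name)
                # Paths relative to the parent keep "Name.gdb/" as the top-level folder.
                zf.write(full, arcname=os.path.relpath(full, parent))
    return zip_path
```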

The brighter green bookmark is the final step where I call the append function that reads from the file geodatabase item and writes into the target feature layer.
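The truncate and append steps correspond to REST operations on the feature service. Here is a minimal sketch that only builds the request URLs and form parameters, without sending them. These are the parameter names as we understand the REST Truncate and Append operations: `appendItemId`, `appendUploadFormat`, `layerMappings` with `sourceTableName`, and `async`. The admin URL rewrite assumes the ArcGIS Online hosted-service pattern. Treat all of these as assumptions to check against the REST API reference for your version.

```python
import json
from typing import Dict, List, Optional, Tuple


def truncate_request(layer_url: str) -> Tuple[str, Dict[str, str]]:
    """Build the truncate call for a hosted layer (ArcGIS Online admin URL pattern assumed)."""
    admin_url = layer_url.rstrip("/").replace("/rest/services/", "/rest/admin/services/", 1)
    return f"{admin_url}/truncate", {"f": "json"}


def append_request(
    service_url: str,
    item_id: str,
    layer_id: int,
    source_table: str,
    field_mappings: Optional[List[Dict[str, str]]] = None,
    upload_format: str = "filegdb",
) -> Tuple[str, Dict[str, str]]:
    """Build a service-level append that reads from an uploaded item (assumed parameter names)."""
    mapping: Dict[str, object] = {"id": layer_id, "sourceTableName": source_table}
    if field_mappings:
        mapping["fieldMappings"] = field_mappings
    params = {
        "appendItemId": item_id,           # the file geodatabase item overwritten earlier
        "appendUploadFormat": upload_format,
        "layerMappings": json.dumps([mapping]),
        "upsert": "false",                 # plain inserts: the layer was just truncated
        "async": "true",                   # returns a status URL to poll
        "f": "json",
    }
    return f"{service_url.rstrip('/')}/append", params
```

Each tuple would then be POSTed with a valid token. Truncating immediately before appending is what makes plain inserts safe, because no existing rows remain to collide with.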

All very simple, isn't it!

For roughly 2.7M street address features, running from my home network, the whole job takes about an hour, including waiting for the asynchronous append operation to complete.
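About 2,700,000 features in about an hour averages to roughly 2,700,000 / 3,600 ≈ 750 features per second across the whole job, so waiting for the append can take a while. Here is a minimal sketch of the wait loop. It assumes an injected `fetch_status` callable that returns the JSON from the job's status URL, and it assumes the terminal status values `Completed`, `CompletedWithErrors` and `Failed`. Check these against the responses your service actually returns.

```python
import time
from typing import Callable, Mapping

# Assumed terminal job states; anything else is treated as still running.
TERMINAL = {"Completed", "CompletedWithErrors", "Failed"}


def wait_for_job(
    fetch_status: Callable[[], Mapping[str, object]],
    interval_s: float = 30.0,
    timeout_s: float = 3 * 3600,
    sleep: Callable[[float], None] = time.sleep,
) -> Mapping[str, object]:
    """Poll until the job reaches a terminal state; raise unless it completed cleanly."""
    waited = 0.0
    while True:
        status = fetch_status()
        state = str(status.get("status", ""))
        if state in TERMINAL:
            if state != "Completed":
                raise RuntimeError(f"append job ended with status {state}: {status}")
            return status
        if waited >= timeout_s:
            raise TimeoutError(f"append job still {state or 'unknown'} after {waited:.0f}s")
        sleep(interval_s)
        waited += interval_s
```

Injecting `fetch_status` and `sleep` keeps the loop independent of any HTTP library and makes it testable without a network.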

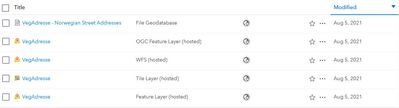

But wait, there's more! I published Map Tile, WFS and OGC services from my target feature service. Here is everything in my Online project folder:

The caches for each child service take a few extra minutes to refresh, but the process is automatic (Vector Tile services need a manual cache rebuild from the item settings page).

If I were doing this for a production environment, I would move processing to an Enterprise server, as an earlier blog describes, and schedule the task to run overnight at an appropriate interval.

There you have it, automated, efficient bulk refresh of hosted feature services and their derived products. The tool I'm describing is in the blog download, have fun!

Note for Enterprise users: ArcGIS Enterprise currently does not support file geodatabase (filegdb) as an append format, so you must use shapefile (or Excel or CSV if you are working with tables). To append shapefiles you will likely need to specify a fieldMappings dictionary in the layerMappings parameter to map the field names in your shapefile to those in the target feature service.
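Field mappings are usually needed because shapefile (dBASE) field names are limited to 10 characters, so longer target names get cut short on export. Here is a minimal sketch that builds a fieldMappings list by matching each target field either exactly or by its first 10 characters. It assumes `{"name": target, "source": shapefile_field}` entries and simple truncation. Exporters that resolve name collisions with suffixes would need extra handling. The field names in the usage note are hypothetical.

```python
from typing import Dict, Iterable, List


def shapefile_field_mappings(
    target_fields: Iterable[str],
    shapefile_fields: Iterable[str],
    max_len: int = 10,  # dBASE field-name limit
) -> List[Dict[str, str]]:
    """Map target feature service fields to their (possibly truncated) shapefile names."""
    available = {f.lower(): f for f in shapefile_fields}
    mappings = []
    for target in target_fields:
        # Prefer an exact case-insensitive match, else the name cut to the dBASE limit.
        for candidate in (target, target[:max_len]):
            source = available.get(candidate.lower())
            if source is not None:
                mappings.append({"name": target, "source": source})
                break
    return mappings
```

For example, the hypothetical target fields `adressenavn` and `kommunenummer` map to the shapefile fields `ADRESSENAV` and `kommunenum`. The resulting list would then go into the `fieldMappings` entry of `layerMappings`.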
