- Home

- :

- All Communities

- :

- Developers

- :

- ArcGIS API for Python

- :

- ArcGIS API for Python Questions

- :

- Error at Multiprocessing - “Manage Tile Cache” too...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Error at Multiprocessing - “Manage Tile Cache” tool in parallel processing

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

We use the tool "Manage Tile Cache" on the local machine Desktop in ArcMap 10.5, for ArcGIS Server 10.3.

Parameter "area_of_interest" is the feature class created by CacheWorx "Coverage To Feature" Level 19

The help says: "For the fastest tile creation, your CPU should be working near 100% during the tile creation"

Having 16 cores CPU, and using ParallelProcessingFactor 100% or 16

the CPU usage is very low - between 3 to 8 percents

In order to find a workaround I tried to use a script with multiprocessing.

Unfortunately, it has an error at the run time. Please help to make it working.

The script

#run “Manage Tile Cache” tool in parallel processing

import arcpy

import multiprocessing

import os

import glob

import sys

import traceback

from multiprocessing import Process, Queue, Pool, \

cpu_count, current_process, Manager

arcpy.env.overwriteOutput = True

arcpy.env.scratchWorkspace = "in_memory"

AAA_Imagery = "D:/gisdata/imagery/public_AAA_Imagery/AAA_Imagery"

Manage_Mode = "RECREATE_ALL_TILES"

Scales__Pixel_Size___Estimated_Disk_Space_ = "564.248588"

Best_WebM = "D:/gisdata/imagery/BestMosaic_2017test.gdb/Best_WebM"

Level_20_coverage = "D:/gisdata/imagery/Levels_coverage.gdb/Level_20_coverage"

def execute_task(bundleAOI):

try:

result = arcpy.ManageTileCache_management(AAA_Imagery, Manage_Mode, "", Best_WebM, "ARCGISONLINE_SCHEME", "", Scales__Pixel_Size___Estimated_Disk_Space_, bundleAOI, "", "591657527.591555", "282.124294")

print(arcpy.GetMessages())

print "result: " + result

except Exception, e:

e.traceback = traceback.format_exc()

raise

if __name__ == '__main__':

#get individual bundles, add it to a dictonary

bundles = {}

count = 1

for bundle in arcpy.da.SearchCursor(Level_20_coverage,["*"]):

bundles[count] = bundle

count += 1

# create a process pool and pass dictonary of extent to execute task

pool = Pool(processes=cpu_count())

pool.map(execute_task, bundles.items())

pool.close()

pool.join()

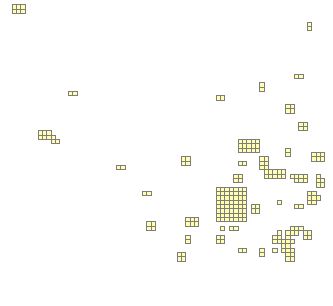

The error (see the image)