Make ETL Data Sources Dynamic With ModelBuilder

In an earlier post I introduced a technique for capturing map extents from user input and sending these as parameters to a Spatial ETL Tool. This made the spatial extent of the processing dynamic with user input. The key was wrapping the ETL tool with ModelBuilder to take advantage of its ability to interact with a map.

This post is along similar lines except showing how to capture a user's selection of feature classes to process at run time. This makes the feature types being processed dynamic with user input.

First some background. The FME Workbench application used for authoring Spatial ETL tools is designed for repeatable workflows with known input feature types, and the work centers around managing output feature characteristics. In ArcGIS we are used to geoprocessing tools being at the center of data management and needing to handle whatever inputs come along. We're going to make Spatial ETL a little more flexible like ArcGIS with some modest ModelBuilder effort.

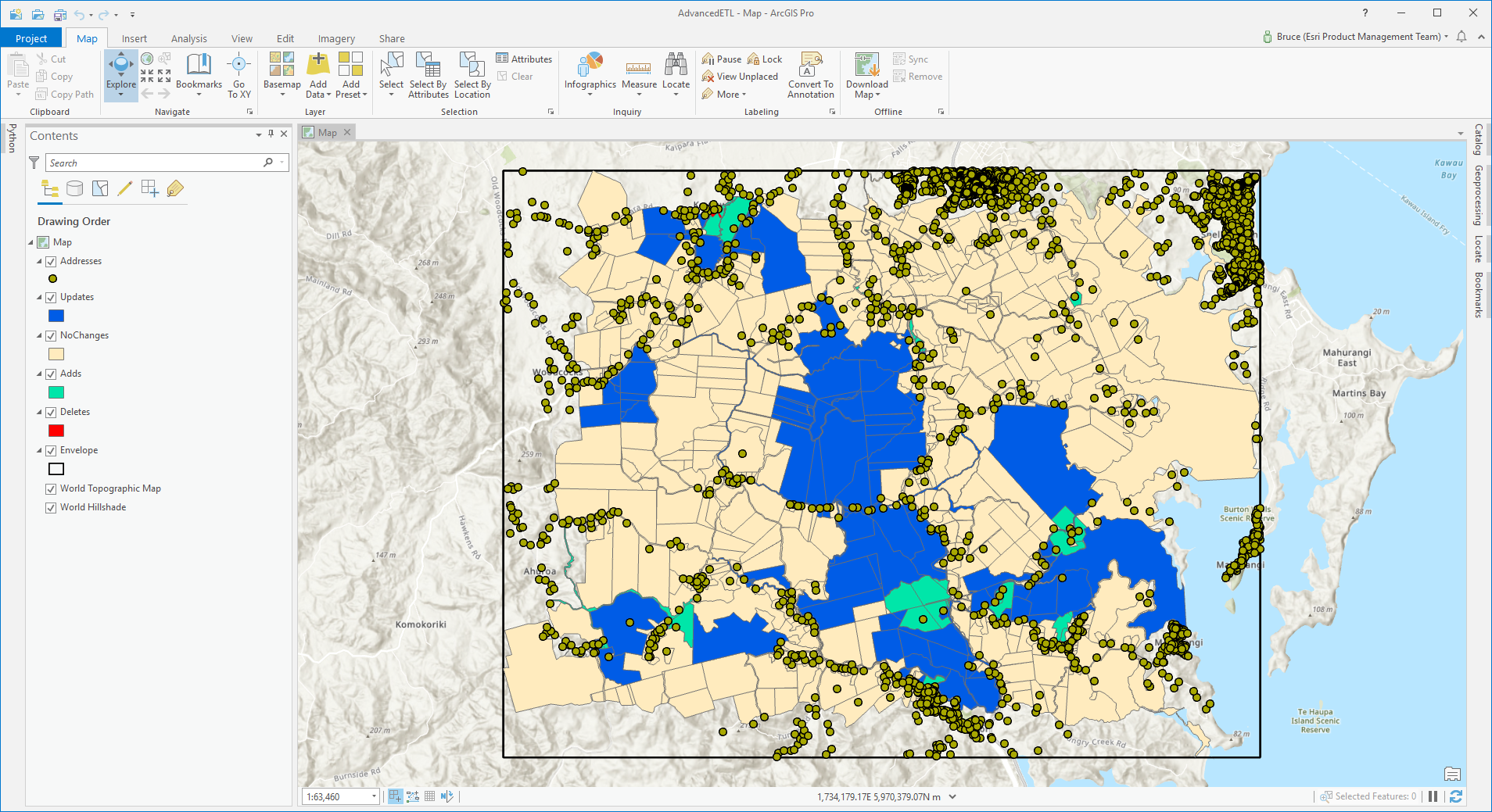

Here is some data:

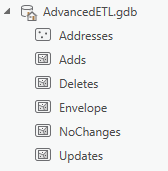

In my project database it looks like this (the main point is it is all in one geodatabase):

...and my Project Toolbox has a Spatial ETL Tool and a Model:

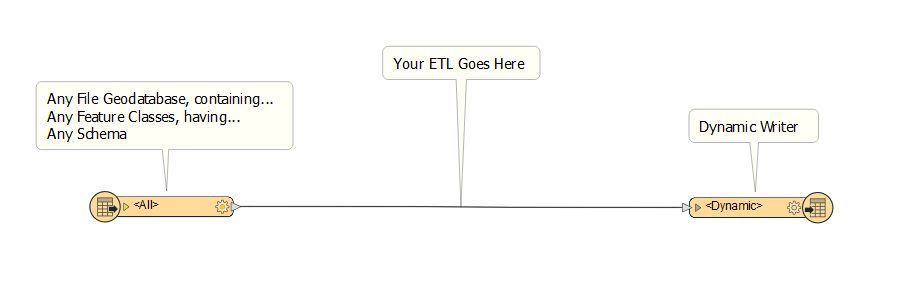

The Spatial ETL Tool...

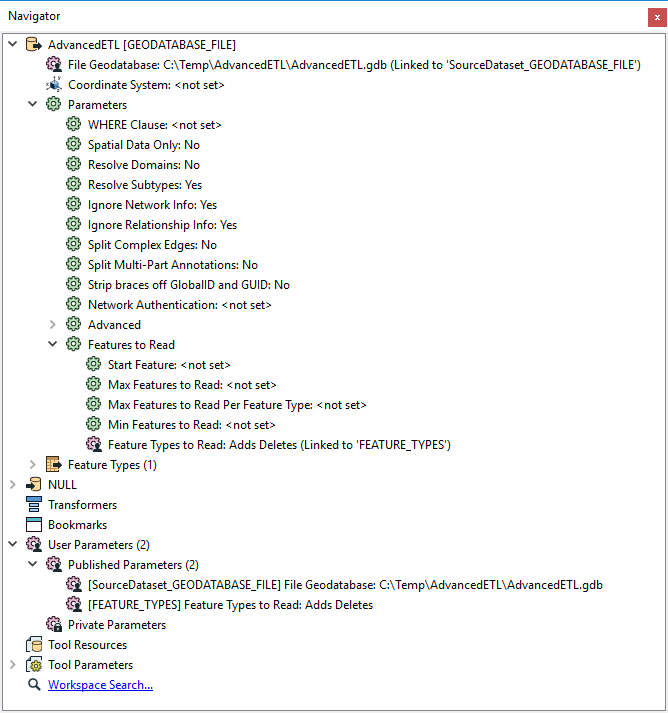

...does absolutely nothing! Well, it reads some default feature types from a default File Geodatabase, then writes them all out to the NULL format (great for demos, it never fails). The trick here is I made the 'FeatureTypes to Read' input parameter of the File Geodatabase reader a User Parameter (you right click on any parameter to publish it this way).

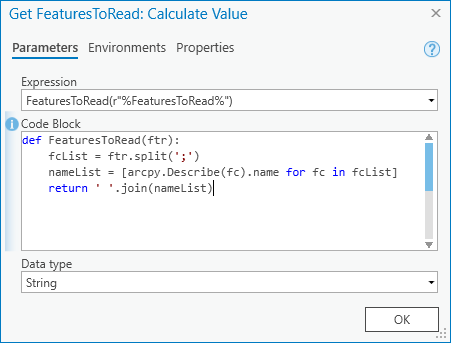

The only other thing to know ahead of the ModelBuilder work is how the ArcGIS Pro geoprocessing environment types Spatial ETL tool parameters. Inputs and outputs that are Workspaces in geoprocessing terms (Geodatabases, Databases, Folders) are seen as the correct variable type in ModelBuilder, but other FME Workbench workspace parameters you might expose are usually seen as the String geoprocessing parameter type. In our case this means that if we choose multiple feature classes from my project home Geodatabase, say 'Adds' and 'Deletes', the ETL tool wants the value supplied as a space-separated string like 'Adds Deletes'.

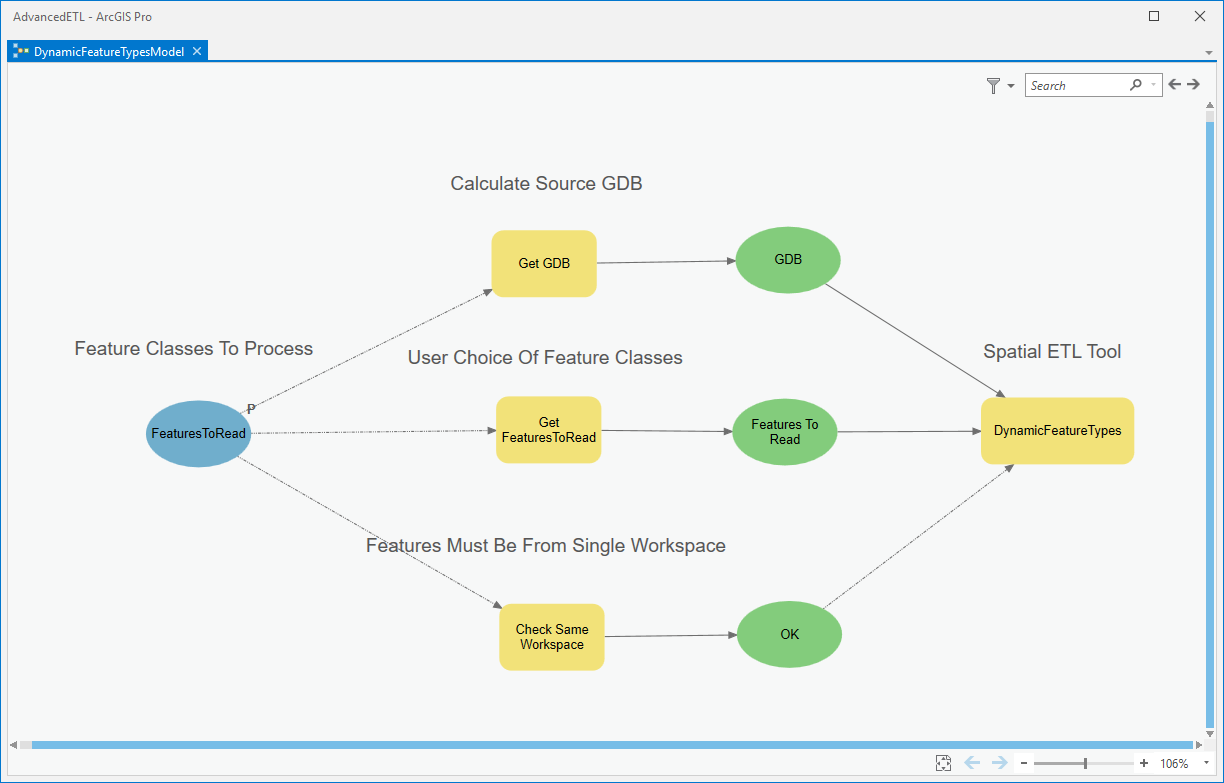

Here is the model, DynamicFeatureTypesModel. Its last process is the Spatial ETL tool DynamicFeatureTypes. There are three processes ahead of it.

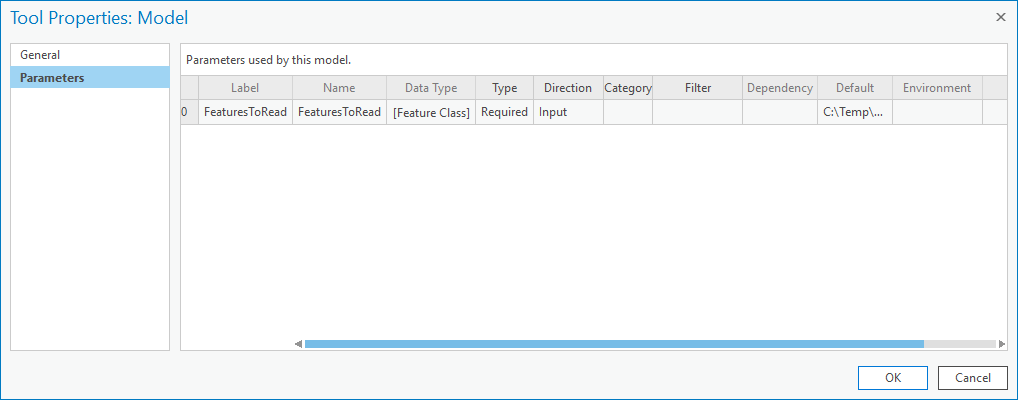

On the left is the sole input parameter 'FeaturesToRead', of type Feature Class (Multi Value) (you could use Feature Layer too with a little more work in the model to retrieve source dataset paths):

There are three Calculate Value model tools. Their properties are:

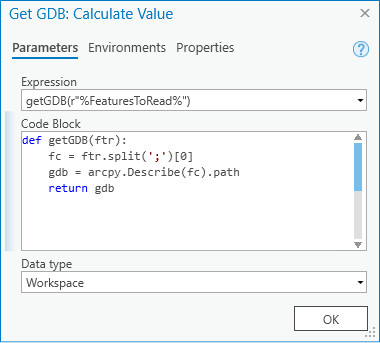

GetGDB:

This returns the Geodatabase of the first feature class in the input set.
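The original expression is not reproduced in the text, so here is a minimal sketch of what a Calculate Value code block for this step could look like. It assumes the multi-value input reaches the expression as a semicolon-delimited string of feature class paths and that the workspace is a File Geodatabase (a folder ending in `.gdb`). The function name `get_gdb` is hypothetical.

```python
import os


def get_gdb(features_to_read):
    """Return the File Geodatabase path of the first feature class.

    Assumption: features_to_read is a semicolon-delimited string of
    feature class paths, possibly wrapped in single quotes.
    """
    first = features_to_read.split(";")[0].strip().strip("'")
    path = first
    # Walk up the path so feature classes inside feature datasets
    # still resolve to the geodatabase, not the feature dataset.
    while path and not path.lower().endswith(".gdb"):
        parent = os.path.dirname(path)
        if parent == path:  # reached the root without finding a .gdb
            return ""
        path = parent
    return path
```

In a Calculate Value tool the expression might then be something like `get_gdb(r"%FeaturesToRead%")`, using inline variable substitution for the model parameter.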

GetFeaturesToRead:

This returns the names of the feature classes as a space-separated string.
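As an illustration only (the original expression is not shown in the text), the name extraction could be sketched as below. It assumes the multi-value input arrives as a semicolon-delimited string of paths. The function name `get_features_to_read` is hypothetical.

```python
import os


def get_features_to_read(features_to_read):
    """Return feature class names as one space-separated string.

    Assumption: features_to_read is a semicolon-delimited string of
    feature class paths, possibly wrapped in single quotes.
    """
    paths = [p.strip().strip("'") for p in features_to_read.split(";") if p.strip()]
    # Keep only the last path component, which is the feature class name.
    return " ".join(os.path.basename(p) for p in paths)
```

For inputs 'Adds' and 'Deletes' from one Geodatabase this yields the string 'Adds Deletes', which is the form the ETL tool's String parameter expects.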

GetGDB and GetFeaturesToRead supply the ETL tool input parameter values.

CheckSameWorkspace:

This returns a Boolean indicating whether all input feature classes are from the same Geodatabase. It is used as a precondition on the Spatial ETL Tool, which is designed with a File Geodatabase reader and so must receive data in that format from a single Geodatabase.
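A hedged sketch of such a check follows; it is not the expression from the attached toolbox. It assumes a semicolon-delimited string of feature class paths and File Geodatabase workspaces. The names `check_same_workspace` and `_gdb_of` are hypothetical.

```python
import os


def _gdb_of(path):
    """Walk up a feature class path to the enclosing .gdb folder ('' if none)."""
    while path and not path.lower().endswith(".gdb"):
        parent = os.path.dirname(path)
        if parent == path:  # reached the root without finding a .gdb
            return ""
        path = parent
    return path


def check_same_workspace(features_to_read):
    """Return True only if every input lives in the same File Geodatabase.

    Assumption: features_to_read is a semicolon-delimited string of
    feature class paths, possibly wrapped in single quotes.
    """
    paths = [p.strip().strip("'") for p in features_to_read.split(";") if p.strip()]
    # normcase makes the comparison case-insensitive on Windows.
    gdbs = {os.path.normcase(_gdb_of(p)) for p in paths}
    return len(gdbs) == 1 and "" not in gdbs
```

Returning False for an empty input or for a path with no `.gdb` component is a design choice in this sketch, so the precondition fails closed rather than letting the ETL tool run with an unknown workspace.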

That's it! The DynamicFeatureTypesModel model can be run like a normal project geoprocessing tool with the ability to select any desired inputs, and the Spatial ETL tool behind the scenes takes what it gets. If you select inputs from different File Geodatabases, the precondition check will prevent the Spatial ETL tool from executing.

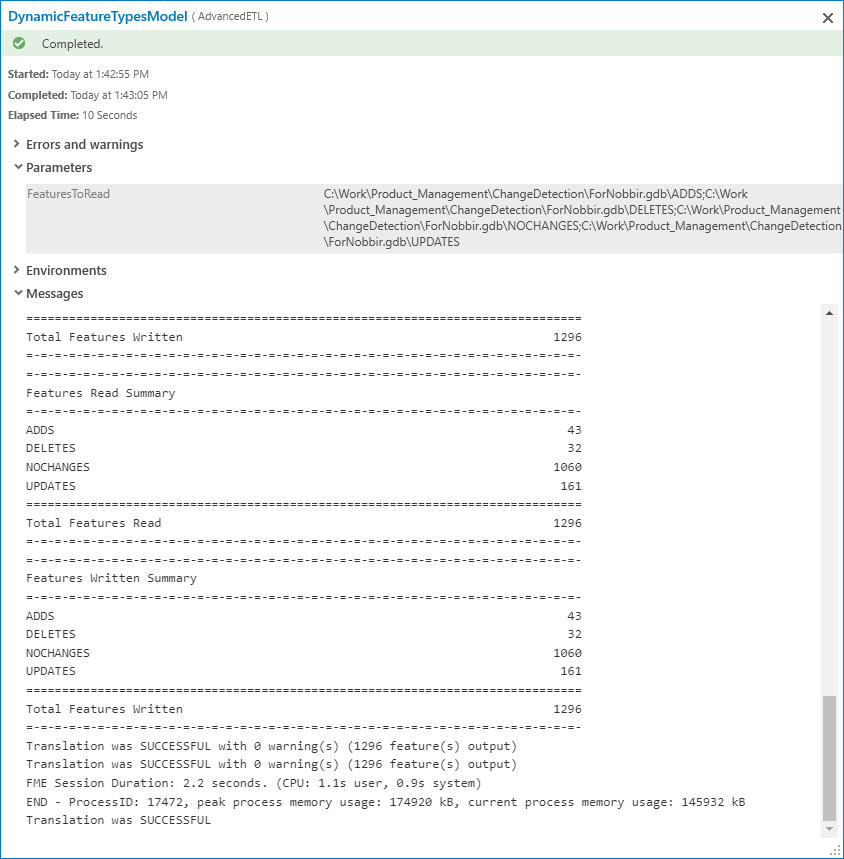

Here is the details view from a run with data from a different Geodatabase.

Please do share your comments and experiences on this blog. The project toolbox and ETL source are in the post attachment.
